In this issue’s “Notes from the Editors,” we discuss various methods that help assess the quality and impact of a journal. Although academic impact and public outreach are, for many reasons, difficult to measure and compare objectively, such measures remain relevant tools for researchers (and, often, hiring committees) to evaluate relative publication success. However, we believe that the Declaration on Research Assessment (DORA) is absolutely correct in stating: “Do not use journal-based metrics, such as Journal Impact Factors, as a surrogate measure of the quality of individual research articles, to assess an individual scientist’s contributions, or in hiring, promotion, or funding decisions.” The simple reason is that impact factors are not a reliable metric of the quality of an author or an article. Rather, they are a useful journal-level metric that measures the average number of citations per scholarly publication in a journal over a given period, usually two or five years. If the APSR has an impact factor of five, it means that, on average, APSR articles received five citations in a given period.

Although some journals no longer promote their impact factors, a journal’s impact factor, and especially its change over time, usually results from the dedicated work and strategic choices of editors, including brand management, product development, and efforts to raise awareness of the journal’s content. In our case, we introduced FirstView and the letter as a second publication format. We also updated the APSR cover to a contemporary academic style in blue, underlining our affiliation with APSA and its commitment to excellent research in all political science subfields. We also committed to rigorous review, careful selection, and consistently high standards for publication to signal the highest scholarly excellence to researchers. These are legitimate strategic choices to increase a journal’s impact factor. By contrast, self-citations, citation rings in special issues and symposia, and denominator manipulations are not only illegitimate ways to achieve a higher impact factor but also detrimental to a journal, its brand, and its publications; indeed, Clarivate Analytics suspends journals that actively push self-citation and citation stacking by withholding their impact factors (Davis 2018). Furthermore, although authors, journals, and publishers alike can use Twitter, blogs, and other social media harmlessly to promote publications, such activities can quickly move into the same dangerous territory if editors require authors to write additional abstracts, blog posts, tweets, etc., for their publications independently of the review process, rather than allowing authors to opt in.

As Kent Anderson (2014) correctly summarized, “[j]ournal impact factors are useful, but like any tool, they should be used correctly. They represent an average for a journal. They change. They can be trended. There are other measures that can be used to complement or contextualize them. Impact factors are journal-level metrics, not article-level or researcher-level metrics.” Although the scope and scale of a journal’s publications influence the likelihood of citations, journals with high impact factors are also the most desirable places to publish. In the past, scholars have mostly relied on Clarivate Analytics’ impact factor, but in the last decade or so, other tools, such as the Altmetric Attention Score and Google’s h5-index, have emerged that offer a different picture of how journals and published pieces are received by the scholarly community and the general public. In the following, we review the development of the APSR’s annually published impact factor, its Altmetric Attention Score, and its Google h5-index.

We start with the most conventional measure, Clarivate Analytics’ two-year impact factor. For a given year $y$, it divides the number of citations received in that year by articles published in the previous two years by the number of articles published in those two years (the five-year impact factor uses the previous five years instead):

$${\rm{IF}}_y = \frac{{\rm{Citations}}_{y-1} + {\rm{Citations}}_{y-2}}{{\rm{Publications}}_{y-1} + {\rm{Publications}}_{y-2}},$$

where ${\rm{Citations}}_{y-k}$ denotes the citations received in year $y$ by articles published in year $y-k$, and ${\rm{Publications}}_{y-k}$ denotes the number of articles published in year $y-k$. So, for instance, the 2018 two-year impact factor divides the number of citations received in 2018 by articles published in 2016 and 2017 by the number of articles published in those two years. However, in small disciplines like political science, this number is often influenced by one or two highly cited articles. Another caveat is the time lag of the measure, which reveals the results of editors’ dedicated work and strategic choices only over time. In our case, for example, the 2018 impact factor is the first to partially reflect our editorial decisions, which began in mid-2016.
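To make the arithmetic concrete, the following Python sketch computes a two-year impact factor from hypothetical per-article citation counts. All numbers are invented for illustration, and every article is treated as a citable item, which simplifies Clarivate’s actual counting rules. The sketch also shows how a single highly cited article can move the average for a small journal.

```python
# Hypothetical data: citations received in census year y by each article
# published in years y-1 and y-2 (one list entry per article).
citations_to_y1 = [3, 0, 5, 2, 1, 4]     # articles published in y-1
citations_to_y2 = [6, 2, 1, 3, 0, 7, 4]  # articles published in y-2


def two_year_impact_factor(cites_y1, cites_y2):
    """Citations in year y to articles from y-1 and y-2, divided by the
    number of articles published in y-1 and y-2."""
    total_citations = sum(cites_y1) + sum(cites_y2)
    total_articles = len(cites_y1) + len(cites_y2)
    return total_citations / total_articles


baseline = two_year_impact_factor(citations_to_y1, citations_to_y2)

# Add one highly cited (hypothetical) outlier to the y-2 cohort.
with_outlier = two_year_impact_factor(citations_to_y1, citations_to_y2 + [60])

print(f"IF without outlier: {baseline:.2f}")      # prints 2.92
print(f"IF with one outlier: {with_outlier:.2f}")  # prints 7.00
```

With these invented numbers, a single article with 60 citations raises the impact factor from about 2.9 to 7.0.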

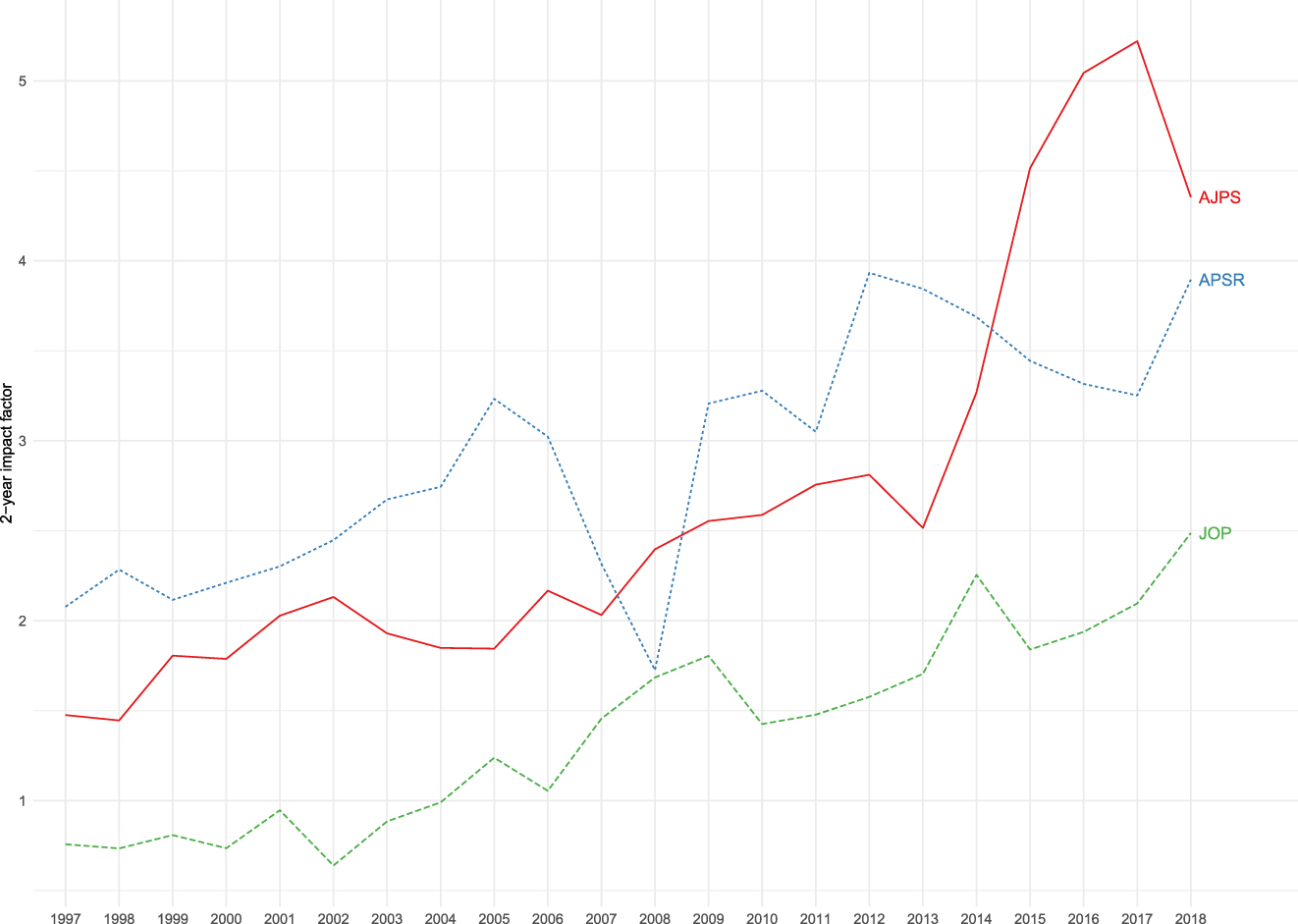

Nevertheless, the measure has been around for a long time, which allows us to evaluate how a journal has developed, both in general and relative to other journals. Figure 1 shows the two-year impact factor over time of the three main generalist journals: the APSR, the American Journal of Political Science (AJPS), and the Journal of Politics (JoP). Although the APSR usually had the highest impact factor of the three in the past, the AJPS overtook it in 2015. Interestingly, the APSR’s impact factor has had the lowest volatility during this period, with a coefficient of variation (the standard deviation divided by the mean) of 0.187, compared with 0.228 for the JoP and 0.364 for the AJPS. The high volatility of the AJPS may reflect both its comparatively strong increase in impact factor over time and its dependence on highly cited outliers. By contrast, the APSR pursues a pluralist publication strategy covering all subfields and approaches in political science, which “stabilizes” citations across subfields. Most recently, however, the AJPS and the APSR appear to be converging again. Despite the recent uptick in its impact factor, the APSR ranks seventh among all political science journals according to the 2018 impact factor. In particular, more specialized journals, which also publish special issues and symposia, have substantially increased their impact factors in recent years.
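The coefficient of variation reported above is the standard deviation of a series divided by its mean. The minimal Python sketch below assumes the sample standard deviation (we do not state which variant underlies the reported figures, so the `sample` switch is a hypothetical convenience) and uses invented impact-factor series rather than the journals’ actual values.

```python
import statistics


def coefficient_of_variation(values, sample=True):
    """Standard deviation divided by the mean of a series.

    sample=True uses the sample standard deviation (n - 1 denominator);
    sample=False uses the population standard deviation (n denominator).
    """
    mean = statistics.mean(values)
    sd = statistics.stdev(values) if sample else statistics.pstdev(values)
    return sd / mean


# Invented impact-factor series, not the actual values of any journal.
stable_journal = [2.0, 2.2, 2.1, 2.3, 2.4]
volatile_journal = [1.5, 2.0, 3.1, 4.5, 3.9]

print(round(coefficient_of_variation(stable_journal), 3))
print(round(coefficient_of_variation(volatile_journal), 3))
```

Because the measure is scaled by the mean, it allows journals with different average impact factors to be compared in terms of relative volatility.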

FIGURE 1. Two-Year Impact Factor of Generalist Journals in Political Science (1997–2018)

An important factor that may influence the impact factor is the subfield composition of published articles, because citations are more likely within than across subfields. This composition varies with the type of submissions received and the general review process, but authors’ perceptions of editorial preferences for certain research may also play a role. As shown in Figure 2, citation patterns vary across subfields. For every manuscript published between 2009 and 2016, we calculated the sum of citations in the first and second year after its publication, as documented by Clarivate Analytics’ Web of Science. Note that the subfield information is based on the authors’ classification in our editorial system. Interestingly, citations vary not only across but also within subfields, with several outliers in five of the eight subfields.
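The per-article measure behind Figure 2 can be sketched as follows. This is an illustration only: the records, subfield labels, and the helper `two_year_citations` are hypothetical, and we assume that “the first and second year after publication” means the two calendar years following the publication year.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (subfield, publication year, {citing year: citations}).
articles = [
    ("Comparative Politics", 2012, {2013: 4, 2014: 9, 2015: 7}),
    ("Comparative Politics", 2014, {2015: 2, 2016: 5}),
    ("Political Theory", 2013, {2014: 1, 2015: 2, 2016: 3}),
    ("Political Theory", 2015, {2016: 0, 2017: 4}),
    ("International Relations", 2016, {2017: 6, 2018: 12}),
]


def two_year_citations(pub_year, cites_by_year):
    """Sum of citations in the first and second calendar year after publication."""
    return sum(cites_by_year.get(pub_year + k, 0) for k in (1, 2))


# Group the per-article sums by subfield.
by_subfield = defaultdict(list)
for subfield, pub_year, cites in articles:
    by_subfield[subfield].append(two_year_citations(pub_year, cites))

# Report the average and the number of publications (N) per subfield.
for subfield, counts in sorted(by_subfield.items()):
    print(f"{subfield}: mean = {mean(counts):.1f} (N = {len(counts)})")
```

Keeping the per-article sums, rather than only the averages, is what makes within-subfield variation and outliers visible.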

FIGURE 2. Average Citations Two Years after Publication per Subfield for Articles Published 2009–16 (N = Number of Publications)

However, Clarivate’s impact factor is not the only measure available. In the following, we briefly discuss two additional measures that scholars and editors alike can refer to when assessing the impact and outreach of their published research. We first present the Altmetric Attention Score, which shows how much attention, namely (social) media attention, a publication receives. Then, we discuss Google’s h5-index, which tries to capture both the productivity and the citation impact of publications, whether those of a journal or of a scholar. Although the h-index was originally designed to measure an individual scientist’s impact, its logic of weighing the number of publications against the citations they receive can be applied to any set of articles. Because Google Scholar also counts citations from “gray literature,” some argue that this broadens the index in a useful way, whereas others respond that it draws on a highly selective set of gray literature (Anderson 2012). What makes the Altmetric Attention Score and the h-index interesting is that they offer different ways to view not only a journal’s average impact but also scholars’ impact on their fields. It is worth noting that, like the impact factor, these measures are imperfect and come with their own criticisms and modifications.

The Altmetric Attention Score is essentially a measure of the public attention a publication receives. It is a weighted count of the attention a publication receives in news outlets, on research blogs, in policy documents, and on Twitter, among others (see Footnote 1). However, it says nothing about the quality of the publication, because a publication can obtain a high score by attracting a great deal of negative attention, for instance, in cases of fabricated data or debunked research. Furthermore, it is sensitive to editorial activities that push authors to write additional abstracts and short summaries for promotion on social media. To reduce the influence of outliers in the data, Figure 3 shows the median Altmetric Attention Score of all publications in a given year for the period 2003–18.
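Both steps, the weighted count and the yearly median, can be illustrated with a short Python sketch. The source weights below are hypothetical placeholders, not Altmetric’s actual weighting scheme, and the publications are invented. The point is only that a single viral outlier pulls the mean far more than the median.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical weights per attention source (NOT Altmetric's real scheme).
WEIGHTS = {"news": 10, "blog": 5, "policy": 3, "twitter": 1}


def attention_score(mentions):
    """Weighted count of mentions; unknown sources get weight 0."""
    return sum(WEIGHTS.get(source, 0) * count for source, count in mentions.items())


# Invented publications: (publication year, mentions per source).
publications = [
    (2017, {"twitter": 12, "blog": 1}),
    (2017, {"twitter": 3}),
    (2017, {"news": 40, "twitter": 900}),  # one viral outlier
    (2018, {"twitter": 20, "news": 1}),
    (2018, {"policy": 2, "twitter": 5}),
    (2018, {"twitter": 8}),
]

scores_by_year = defaultdict(list)
for year, mentions in publications:
    scores_by_year[year].append(attention_score(mentions))

for year in sorted(scores_by_year):
    scores = scores_by_year[year]
    print(year, "median:", median(scores), "mean:", round(mean(scores), 1))
```

In this toy data, the 2017 mean is driven almost entirely by the one viral article, while the median stays close to the typical publication.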

FIGURE 3. Median Altmetric Attention Score by Year of Publication

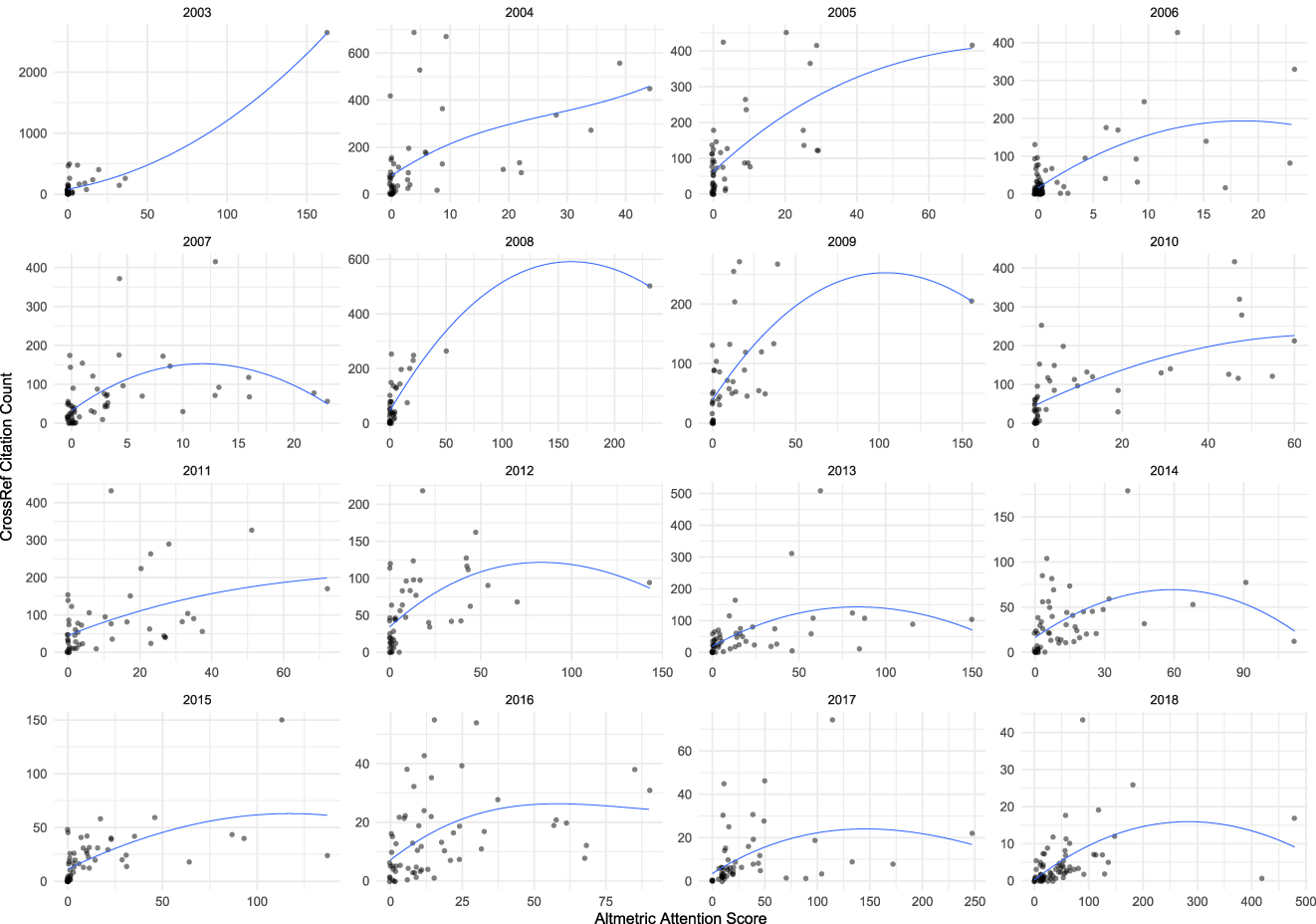

One problem with the impact factor is its delayed feedback, which makes it difficult for editors to evaluate how well their dedicated work and careful strategic choices are being received. How strongly, then, does the Altmetric Attention Score correlate with the citation counts on which the impact factor is based? For publications from 2003 to 2018, the overall correlation between Altmetric scores and citation counts (according to CrossRef) is relatively weak, with a Pearson’s r of 0.16 (p < 0.01). Visual inspection of the scatterplot in Figure 4, however, suggests that the weak correlation may partly result from a nonlinear relationship between the two measures. Although higher Altmetric scores appear to be associated with more citations, the relationship is far from deterministic. Instead, the nonlinear trajectory of the blue smoothing curves indicates that publications that receive a lot of attention in news and social media outlets do not necessarily have a similar impact on subsequent publications in the discipline.
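Pearson’s r captures only linear association, so a monotone but curved relationship can yield a modest r. The sketch below uses invented data in which citations grow with the logarithm of attention and contrasts Pearson’s r with Spearman’s rank correlation. Spearman’s measure is our illustrative substitute and is not the statistic reported above.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Invented data: citations rise with attention but flatten out quickly.
altmetric = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]
citations = [round(5 * math.log2(a + 1)) for a in altmetric]

print(f"Pearson r:    {pearson(altmetric, citations):.2f}")
print(f"Spearman rho: {spearman(altmetric, citations):.2f}")
```

Because the invented relationship is perfectly monotone, Spearman’s rho equals 1 while Pearson’s r is noticeably lower, which is the pattern a curved smoothing line hints at.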

FIGURE 4. Correlation between Altmetric Attention Score and Citations

Last but not least, we also want to draw attention to yet another measure of a journal’s impact: the Google Scholar h5-index. The APSR’s current h5-index is 58, meaning that, of the articles it published in the last five complete years (2014–18), 58 were each cited at least 58 times. According to the h5-index, the APSR ranks second among all political science journals, just behind the AJPS. One caveat is that h5-indices are hard to compare across fields, which suggests that we should also be careful when comparing across subfields, because citation rates and styles vary greatly across the subfields of political science.
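The definition of the h-index (and hence of the h5-index, restricted to a five-year publication window) fits in a few lines of Python. This sketch applies it to an invented list of citation counts and does not reproduce Google Scholar’s data collection. Replacing the most cited article with an extreme outlier leaves the index unchanged.

```python
def h_index(citations):
    """Largest h such that h articles have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for position, cites in enumerate(ranked, start=1):
        if cites >= position:
            h = position
        else:
            break
    return h


# Invented citation counts for articles published in a five-year window.
window_citations = [120, 85, 60, 12, 9, 7, 3, 1, 0]
print(h_index(window_citations))  # prints 6

# Same window, but the top article becomes an extreme outlier.
with_outlier = [5000] + window_citations[1:]
print(h_index(with_outlier))  # prints 6
```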

Nevertheless, an advantage of the h5-index over the impact factor is that it also includes articles published more recently than those covered by the impact factor and that it is less sensitive to outliers. That being said, for more recently published articles to influence a journal’s h5-index, they need to gain a high number of citations soon after publication.

Is there a perfect way to measure a journal’s impact in its scientific community? The short answer is no, because a single measure may not be enough to make inferences about how the quality of a journal is developing. This is why so many methods have been developed over the years to provide the community with various approaches to understanding the performance of a scholarly outlet. Should publishers cease promoting their impact factors, as suggested by DORA? Again, the short answer is no, because journal impact factors provide useful information, but like any tool, they should be used correctly. In fact, because the main disadvantage of Clarivate’s impact factor is its time lag, journals themselves increasingly use other measures, such as attention on social media and blogs, that respond more quickly to the selection of new literature and to editorial activities. Although the h-index was originally designed to capture an individual scholar’s impact and the Altmetric Attention Score measures attention to individual publications, both can also be aggregated into journal-level metrics. Taken together, these measures characterize a journal like the APSR as a whole and can inform editors about how their dedicated work and careful strategic choices are received.