1 Introduction

In today’s programming languages, we find a wealth of powerful constructs and features – exceptions, higher-order store, dynamic method dispatch, coroutines, explicit continuations, concurrency features, Lisp-style ‘quote’ and so on – which may be present or absent in various combinations in any given language. There are, of course, many important pragmatic and stylistic differences between languages, but here we are concerned with whether languages may differ more essentially in their expressive power, according to the selection of features they contain.

One can interpret this question in various ways. For instance, Felleisen (1991) considers the question of whether a language $\mathcal{L}$ admits a translation into a sublanguage $\mathcal{L}'$ in a way which respects not only the behaviour of programs but also aspects of their (global or local) syntactic structure. If the translation of some $\mathcal{L}$-program into $\mathcal{L}'$ requires a complete global restructuring, we may say that $\mathcal{L}'$ is in some way less expressive than $\mathcal{L}$. In the present paper, however, we have in mind even more fundamental expressivity differences that would not be bridged even if whole-program translations were admitted. These fall under two headings.

1. Computability: Are there operations of a given type that are programmable in $\mathcal{L}$ but not expressible at all in $\mathcal{L}'$?

2. Complexity: Are there operations programmable in $\mathcal{L}$ with some asymptotic runtime bound (e.g. $\mathcal{O}(n^2)$) that cannot be achieved in $\mathcal{L}'$?

We may also ask: are there examples of natural, practically useful operations that manifest such differences? If so, this might be considered as a significant advantage of $\mathcal{L}$ over $\mathcal{L}'$.

If the ‘operations’ we are asking about are ordinary first-order functions – that is, both their inputs and outputs are of ground type (strings, arbitrary-size integers, etc.) – then the situation is easily summarised. At such types, all reasonable languages give rise to the same class of programmable functions, namely, the Church-Turing computable ones. As for complexity, the runtime of a program is typically analysed with respect to some cost model for basic instructions (e.g. one unit of time per array access). Although the realism of such cost models in the asymptotic limit can be questioned (see, e.g., Knuth, 1997, Section 2.6), it is broadly taken as read that such models are equally applicable whatever programming language we are working with, and moreover that all respectable languages can represent all algorithms of interest; thus, one does not expect the best achievable asymptotic runtime for a typical algorithm to be sensitive to the choice of programming language, except perhaps in marginal cases.

The situation changes radically, however, if we consider higher-order operations: that is, programmable operations whose inputs may themselves be programmable operations. Here it turns out that both what is computable and the efficiency with which it can be computed can be highly sensitive to the selection of language features present. This is essentially because a program may interact with a given function only in ways prescribed by the language (for instance, by applying it to an argument), and typically has no access to the concrete representation of the function at the machine level.

Most work in this area to date has focused on computability differences. One of the best known examples is the ‘parallel if’ operation, which is computable in a language with parallel evaluation but not in a typical ‘sequential’ programming language (Plotkin, 1977). It is also well known that the presence of control features or local state enables observational distinctions that cannot be made in a purely functional setting: for instance, there are programs involving ‘call/cc’ that detect the order in which a (call-by-name) ‘+’ operation evaluates its arguments (Cartwright & Felleisen, 1992). Such operations are ‘non-functional’ in the sense that their output is not determined solely by the extension of their input (seen as a mathematical function $\mathbb{N}_\bot \times \mathbb{N}_\bot \rightarrow \mathbb{N}_\bot$); however, there are also programs with ‘functional’ behaviour that can be implemented with control or local state but not without them (Longley, 1999). More recent results have exhibited differences lower down in the language expressivity spectrum: for instance, in a purely functional setting à la Haskell, the expressive power of recursion increases strictly with its type level (Longley, 2018), and there are natural operations computable by recursion but not by iteration (Longley, 2019). Much of this territory, including the mathematical theory of some of the natural definitions of computability in a higher-order setting, is mapped out by Longley & Normann (2015).

Relatively few results of this character have so far been established on the complexity side. Pippenger (1996) gives an example of an ‘online’ operation on infinite sequences of atomic symbols (essentially a function from streams to streams) such that the first n output symbols can be produced within time $\mathcal{O}(n)$ if one is working in an ‘impure’ version of Lisp (in which mutation of ‘cons’ pairs is admitted), but with a worst-case runtime no better than $\Omega(n \log n)$ for any implementation in pure Lisp (without such mutation). This example was reconsidered by Bird et al. (1997), who showed that the same speedup can be achieved in a pure language by using lazy evaluation. Another candidate is the familiar $\log n$ overhead involved in implementing maps (supporting lookup and extension) in a pure functional language (Okasaki, 1999), although to our knowledge this situation has not yet been subjected to theoretical scrutiny. Jones (2001) explores the approach of manifesting expressivity and efficiency differences between certain languages by restricting attention to ‘cons-free’ programs; in this setting, the classes of representable first-order functions for the various languages are found to coincide with some well-known complexity classes.

Our purpose in this paper is to give a clear example of such an inherent complexity difference higher up in the expressivity spectrum. Specifically, we consider the following generic count problem, parametric in n: given a boolean-valued predicate P on the space $\mathbb{B}^n$ of boolean vectors of length n, return the number of such vectors q for which $P\,q = \mathrm{true}$. We shall consider boolean vectors of any length to be represented by the type $\mathrm{Nat} \to \mathrm{Bool}$; thus for each n, we are asking for an implementation of a certain third-order operation

$$\mathrm{count}_n : ((\mathrm{Nat} \to \mathrm{Bool}) \to \mathrm{Bool}) \to \mathrm{Nat}.$$

Naturally, we do not expect such a generic operation to compete in efficiency with a bespoke counting operation for some specific predicate, but it is nonetheless interesting to ask how efficient it is possible to be with this more modular approach.

A naïve implementation strategy, supported by any reasonable language, is simply to apply P to each of the $2^n$ vectors in turn. A much less obvious, but still purely ‘functional’, approach inspired by Berger (1990) achieves the effect of ‘pruned search’ where the predicate allows it (serving as a warning that counterintuitive phenomena can arise in this territory). This implementation is of interest in its own right and will be discussed in Section 7. Nonetheless, under a certain natural condition on P (namely that it must inspect all n components of the given vector before returning), both the above approaches will have $\Omega(n 2^n)$ runtime.
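As an informal illustration of the naïve strategy, the following Python sketch enumerates all $2^n$ vectors and applies the predicate to each. Python is our choice of illustration language, not the paper's; the names `naive_count`, `Point`, `Predicate`, and the example predicate `xor_all` are hypothetical and introduced only for this sketch.

```python
from itertools import product
from typing import Callable

Point = Callable[[int], bool]        # a boolean vector, queried by index (Nat -> Bool)
Predicate = Callable[[Point], bool]  # models the type (Nat -> Bool) -> Bool


def naive_count(n: int, pred: Predicate) -> int:
    """Count the vectors q in B^n with pred(q) true by trying all 2^n of them."""
    total = 0
    for bits in product((True, False), repeat=n):
        # Present the tuple to the predicate as a function from indices to booleans.
        if pred(lambda i, bits=bits: bits[i]):
            total += 1
    return total


def xor_all(n: int) -> Predicate:
    """Hypothetical example predicate: parity of the first n components."""
    def pred(q: Point) -> bool:
        result = False
        for i in range(n):
            result ^= q(i)  # inspects every component exactly once
        return result
    return pred
```

For a predicate such as `xor_all(n)`, which inspects all n components on every call, this sketch performs n queries for each of the $2^n$ vectors, in line with the $\Omega(n 2^n)$ behaviour described above.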

What we will show is that in a typical call-by-value functional language without advanced control features, one cannot improve on this: any implementation of $\mathrm{count}_n$ must necessarily take time $\Omega(n2^n)$ on predicates P of a certain kind. Furthermore, we will show that the same lower bound also applies to a richer language supporting affine effect handlers, which suffices for the encoding of exceptions, local state, coroutines, and single-shot continuations. On the other hand, if we move to a language with general effect handlers, it becomes possible to bring the runtime down to $\mathcal{O}(2^n)$: an asymptotic gain of a factor of n. We also show that our implementation method transfers to the more familiar generic search problem: that of returning the list of all vectors q such that $P\,q = \mathrm{true}$.

The idea behind the speedup is easily explained and will already be familiar, at least informally, to programmers who have worked with multi-shot continuations. Suppose for example $n=3$, and suppose that the predicate P always inspects the components of its argument in the order 0,1,2. A naïve implementation of $\mathrm{count}_3$ might start by applying the given P to $q_0 = (\mathrm{true},\mathrm{true},\mathrm{true})$, and then to $q_1 = (\mathrm{true},\mathrm{true},\mathrm{false})$. Clearly, there is some duplication here: the computations of $P\,q_0$ and $P\,q_1$ will proceed identically up to the point where the value of the final component is requested. What we would like to do, then, is to record the state of the computation of $P\,q_0$ at just this point, so that we can later resume this computation with false supplied as the final component value in order to obtain the value of $P\,q_1$. (Similarly for all other internal nodes in the evident binary tree of boolean vectors.) Of course, such a ‘backup’ approach is easy to realise if one is implementing a bespoke search operation for some particular choice of P; but to apply this idea of resuming previous subcomputations in the generic setting (that is, uniformly in P) requires some feature such as general effect handlers or multi-shot continuations.
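To make the potential saving concrete, one can count component queries under a simplifying assumption of ours, made purely for illustration: P queries each of the n components exactly once, in the fixed order $0, \ldots, n-1$. The naïve strategy answers n queries for each vector, whereas resuming at shared nodes answers one query per internal node of the binary tree of boolean vectors:

$$\begin{aligned}
\text{naïve enumeration:} \quad & 2^n \cdot n = n\,2^n \text{ queries}, \\
\text{resuming at shared nodes:} \quad & \sum_{k=0}^{n-1} 2^k = 2^n - 1 \text{ queries}.
\end{aligned}$$

For $n = 3$ this gives 24 queries against 7. This is only a count of queries, not a runtime analysis, but it indicates where the factor of n comes from.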

One could also obviate the need for such a feature by choosing to present the predicate P in some other way, but from our present perspective this would be to move the goalposts: our intention is precisely to show that our languages differ in an essential way as regards their power to manipulate data of type $(\mathrm{Nat} \to \mathrm{Bool}) \to \mathrm{Bool}$. Indeed, a key aspect of our approach, inherited from Longley & Normann (2015), is that by allowing ourselves to fix the way in which data is given to us, we are able to uncover a wealth of interesting expressivity differences between languages, despite the fact that they are in some sense inter-encodable. Such an approach also seems reasonable from the perspective of programming in the large: when implementing some program module one does not always have the freedom to choose the form or type of one’s inputs, and in such cases, the kinds of expressivity distinctions we are considering may potentially make a real practical difference.

This idea of using first-class control to achieve ‘backtracking’ has been exploited before and is fairly widely known (see e.g. Kiselyov et al., 2005), and there is a clear programming intuition that this yields a speedup unattainable in languages without such control features. Our main contribution in this paper is to provide, for the first time, a precise mathematical theorem that pins down this fundamental efficiency difference, thus giving formal substance to this intuition. Since our goal is to give a realistic analysis of the asymptotic runtimes achievable in various settings, but without getting bogged down in inessential implementation details, we shall work concretely and operationally with a CEK-style abstract machine semantics as our basic model of execution time. The details of this model are only explicitly used for showing that our efficient implementation of generic count with effect handlers has the claimed $\mathcal{O}(2^n)$ runtime; but it also plays a background role as our reference model of runtime for the $\Omega(n2^n)$ lower bound results, even though we here work mostly with a simpler kind of operational semantics.

In the first instance, we formulate our results as a comparison between a purely functional base language $\lambda_{\textrm{b}}$ (a version of call-by-value PCF) and an extension $\lambda_{\textrm{h}}$ with general effect handlers. This allows us to present the key idea in a simple setting, but we then show how our runtime lower bound is also applicable to a more sophisticated language $\lambda_{\textrm{a}}$ with affine effect handlers, intermediate in power between $\lambda_{\textrm{b}}$ and $\lambda_{\textrm{h}}$ and corresponding broadly to ‘single-shot’ uses of delimited control. Our proof involves some general machinery for reasoning about program evaluation in $\lambda_{\textrm{a}}$ which may be of independent interest.

In summary, our purpose is to exhibit an efficiency difference between single-shot and multi-shot versions of delimited control which, in our view, manifests a fundamental feature of the programming language landscape. Since many widely used languages do not support multi-shot control features, this challenges a common assumption that all real-world programming languages are essentially ‘equivalent’ from an asymptotic point of view. We also situate our results within a broader context by informally discussing the attainable efficiency for generic count within a spectrum of weaker languages. We believe that such results are important not only for a rounded understanding of the relative merits of existing languages but also for informing future language design.

For their convenience as structured delimited control operators, we adopt effect handlers (Plotkin & Pretnar, 2013) as our universal control abstraction of choice, but our results adapt mutatis mutandis to other first-class control abstractions such as ‘call/cc’ (Sperber et al., 2009), ‘control’ ($\mathcal{F}$) and ‘prompt’ ($\textbf{\#}$) (Felleisen, 1988), or ‘shift’ and ‘reset’ (Danvy & Filinski, 1990).

The rest of the paper is structured as follows.

- Section 2 provides an introduction to effect handlers as a programming abstraction.

- Section 3 presents a pure PCF-like language $\lambda_{\textrm{b}}$ and an extension $\lambda_{\textrm{h}}$ with general effect handlers.

- Section 4 defines abstract machines for $\lambda_{\textrm{b}}$ and $\lambda_{\textrm{h}}$, yielding a runtime cost model.

- Section 5 introduces the generic count problem and some associated machinery and presents an implementation in $\lambda_{\textrm{h}}$ with runtime $\mathcal{O}(2^n)$ (perhaps with small additional logarithmic factors according to the precise details of the cost model).

- Section 6 discusses some extensions and variations of the foregoing result, adapting it to deal with a wider class of predicates and bridging the gap between generic count and generic search. We also briefly outline how one can use sufficient effect polymorphism to adapt the result to a setting with a type-and-effect system.

- Section 7 surveys a range of approaches to generic counting in languages weaker than $\lambda_{\textrm{h}}$, including the one suggested by Berger (1990), emphasising how the attainable efficiency varies according to the language, but observing that none of these approaches match the $\mathcal{O}(2^n)$ runtime bound of our effectful implementation.

- Section 8 establishes that any generic count implementation within $\lambda_{\textrm{b}}$ must have runtime $\Omega(n2^n)$ on predicates of a certain kind.

- Section 9 refines our definition of $\lambda_{\textrm{h}}$ to yield a language $\lambda_{\textrm{a}}$ for affine effect handlers, clarifying its relationship to $\lambda_{\textrm{b}}$ and $\lambda_{\textrm{h}}$.

- Section 10 develops some machinery for reasoning about program evaluation in $\lambda_{\textrm{a}}$, and applies this to establish the $\Omega(n2^n)$ bound for generic count programs in this language.

- Section 11 reports on experiments showing that the theoretical efficiency difference we describe is manifested in practice, using implementations in OCaml of various search procedures.

- Section 12 concludes.

The languages $\lambda_{\textrm{b}}$ and $\lambda_{\textrm{h}}$ are rather minimal versions of previously studied systems – we only include the machinery needed for illustrating the generic search efficiency phenomenon. Some of the less interesting proof details are relegated to the appendices.

Relation to prior work. This article is an extended version of the following previously published paper and Chapter 7 of the first author’s PhD dissertation:

- Hillerström, D., Lindley, S. & Longley, J. (2020) Effects for efficiency: Asymptotic speedup with first-class control. Proc. ACM Program. Lang. 4(ICFP), 100:1–100:29

- Hillerström, D. (2021) Foundations for Programming and Implementing Effect Handlers. Ph.D. thesis. The University of Edinburgh, Scotland, UK

The main new contribution in the present version is that we introduce a language $\lambda_{\textrm{a}}$ for arbitrary affine effect handlers and develop the theory needed to extend our lower bound result to this language (Section 9), whereas in the previous version, only an extension with local state was considered. We have also included an account of the Berger search procedure (Section 7.3) and have simplified our original proof of the $\Omega(n2^n)$ bound for $\lambda_{\textrm{b}}$ (Section 8). The benchmarks have been ported to OCaml 5.1.1 in such a way that the effectful procedures make use of effect handlers internally (Section 11).

2 Effect handlers primer

Effect handlers were originally studied as a theoretical means to provide a semantics for exception handling in the setting of algebraic effects (Plotkin & Power, 2001; Plotkin & Pretnar, 2013). Subsequently, they have emerged as a practical programming abstraction for modular effectful programming (Convent et al., 2020; Kammar et al., 2013; Kiselyov et al., 2013; Bauer & Pretnar, 2015; Leijen, 2017; Hillerström et al., 2020; Sivaramakrishnan et al., 2021). In this section, we give a short introduction to effect handlers. For a thorough introduction to programming with effect handlers, we recommend the tutorial by Pretnar (2015), and as an introduction to the mathematical foundations of handlers, we refer the reader to the founding paper by Plotkin & Pretnar (2013) and the excellent tutorial paper by Bauer (2018).

Viewed through the lens of universal algebra, an algebraic effect is given by a signature $\Sigma$ of typed operation symbols along with an equational theory that describes the properties of the operations (Plotkin & Power, 2001). An example of an algebraic effect is nondeterminism, whose signature consists of a single nondeterministic choice operation: $\Sigma \mathrel{\overset{{\mbox{def}}}{=}} \{ \mathrm{Branch} : \mathrm{Unit} \to \mathrm{Bool} \}$. The operation takes a single parameter of type unit and ultimately produces a boolean value. The pragmatic programmatic view of algebraic effects differs from the original development as no implementation accounts for equations over operations yet.

As a simple example, let us use the operation Branch to model a coin toss. Suppose we have a data type $\mathrm{Toss} \mathrel{\overset{{\mbox{def}}}{=}} \mathrm{Heads} \mid \mathrm{Tails}$; then we may implement a coin toss as follows.

From the type signature, it is clear that the computation returns a value of type Toss. It is not clear from the signature of toss whether it performs an effect. However, from the definition, it evidently performs the operation Branch with argument $\langle \rangle$ using the $\mathbf{do}$-invocation form. The result of the operation determines whether the computation returns either Heads or Tails. Systems such as Effekt (Brachthäuser et al., 2020), Frank (Lindley et al., 2017; Convent et al., 2020), Helium (Biernacki et al., 2019, 2020), Koka (Leijen, 2017), and Links (Hillerström & Lindley, 2016; Hillerström et al., 2020) include type-and-effect systems (or in the case of Effekt a capability type system) which track the use of effectful operations, whilst systems such as Eff (Bauer & Pretnar, 2015) and Multicore OCaml (Dolan et al., 2015)/OCaml 5 (Sivaramakrishnan et al., 2021) choose not to track effects in the type system. Our language is closer to the latter two.

An effectful computation may be used as a subcomputation of another computation, e.g. we can use toss to implement a computation that performs two coin tosses.
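One way to make toss and the two-toss computation concrete is the following minimal Python sketch. It is not the paper's calculus: it assumes a representation of computations as trees built from two hypothetical classes, `Return` (a leaf) and `Op` (an operation node whose `cont` field maps the response to the rest of the tree), and the helper names `bind`, `do`, `toss` and `toss_twice` are illustrative only.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Return:
    """Leaf of a computation tree: a final value."""
    value: Any


@dataclass
class Op:
    """Interior node: an operation invocation awaiting a response."""
    label: str
    arg: Any
    cont: Callable[[Any], Any]  # maps the response to the remaining tree


def bind(m, f):
    """Sequencing, in the spirit of `let x <- m in f(x)`."""
    if isinstance(m, Return):
        return f(m.value)
    return Op(m.label, m.arg, lambda x: bind(m.cont(x), f))


def do(label, arg=()):
    """Invoke an operation; the response becomes the returned value."""
    return Op(label, arg, Return)


def toss():
    # Perform Branch with the unit argument and map the boolean to a Toss.
    return bind(do("Branch"), lambda b: Return("Heads" if b else "Tails"))


def toss_twice():
    # Use toss as a subcomputation twice and collect both results.
    return bind(toss(), lambda x: bind(toss(), lambda y: Return([x, y])))


tree = toss_twice()
# Answering the first Branch with True and the second with False:
print(tree.cont(True).cont(False).value)  # prints ['Heads', 'Tails']
```

Nothing in this sketch decides how Branch is answered; the tree merely records that a response is required at each interior node.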

We may view an effectful computation as a tree, where the interior nodes correspond to operation invocations and the leaves correspond to return values. The computation tree for tossTwice is as follows.

It models the interaction with the environment. The operation Branch can be viewed as a query for which the response is either true or false. The response is provided by an effect handler. As an example, consider the following handler which enumerates the possible outcomes of two coin tosses.

The $\mathbf{handle}$-construct generalises the exceptional syntax of Benton & Kennedy (2001). This handler has a success clause and an operation clause. The success clause determines how to interpret the return value of tossTwice, or equivalently how to interpret the leaves of its computation tree. It lifts the return value into a singleton list. The operation clause determines how to interpret occurrences of Branch in toss. It provides access to the argument of Branch (which is unit) and its resumption, r. The resumption is a first-class delimited continuation which captures the remainder of the tossTwice computation from the invocation of Branch inside the first instance of toss up to its nearest enclosing handler.

Applying r to true resumes evaluation of tossTwice via the true branch, which causes another invocation of Branch to occur, resulting in yet another resumption. Applying this resumption to true yields a possible return value of $[\mathrm{Heads},\mathrm{Heads}]$, which gets lifted into the singleton list $[[\mathrm{Heads},\mathrm{Heads}]]$. Afterwards, the latter resumption is applied to false, thus producing the value $[[\mathrm{Heads},\mathrm{Tails}]]$. Before returning to the first invocation of the initial resumption, the two lists get concatenated to obtain the intermediary result $[[\mathrm{Heads},\mathrm{Heads}],[\mathrm{Heads},\mathrm{Tails}]]$. Thereafter, the initial resumption is applied to false, which symmetrically returns the list $[[\mathrm{Tails},\mathrm{Heads}],[\mathrm{Tails},\mathrm{Tails}]]$. Finally, the two intermediary lists get concatenated to produce the final result $[[\mathrm{Heads},\mathrm{Heads}],[\mathrm{Heads},\mathrm{Tails}],[\mathrm{Tails},\mathrm{Heads}],[\mathrm{Tails},\mathrm{Tails}]]$.
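The evaluation order described above can be replayed with a small Python sketch. It assumes a tree encoding of computations (`Return` leaves and `Op` nodes) and a hypothetical `handle` function standing in for the handle-construct; none of these names come from the paper. The key point it illustrates is that the resumption `r` may be invoked more than once, and that each invocation re-installs the handler around the rest of the computation.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Return:
    value: Any


@dataclass
class Op:
    label: str
    arg: Any
    cont: Callable[[Any], Any]


def bind(m, f):
    """Sequencing of computation trees."""
    if isinstance(m, Return):
        return f(m.value)
    return Op(m.label, m.arg, lambda x: bind(m.cont(x), f))


def toss():
    return Op("Branch", (), lambda b: Return("Heads" if b else "Tails"))


def toss_twice():
    return bind(toss(), lambda x: bind(toss(), lambda y: Return([x, y])))


def handle(m, on_return, on_branch):
    """Interpret leaves with on_return and Branch nodes with on_branch."""
    if isinstance(m, Return):
        return on_return(m.value)
    # The resumption handles the remainder of the computation with the
    # same handler, so nested Branch invocations are interpreted too.
    resume = lambda x: handle(m.cont(x), on_return, on_branch)
    return on_branch(m.arg, resume)


# Success clause: lift into a singleton list.
# Operation clause: resume with true, then with false, and concatenate.
outcomes = handle(toss_twice(),
                  lambda x: [x],
                  lambda _unit, r: r(True) + r(False))
print(outcomes)
# prints [['Heads', 'Heads'], ['Heads', 'Tails'], ['Tails', 'Heads'], ['Tails', 'Tails']]
```

Invoking `r` twice is exactly what a one-shot continuation mechanism would forbid, which is why the sketch uses explicit trees rather than a native effects facility.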

3 Calculi

In this section, we present our base language ${\lambda_{\textrm{b}}}$ and its extension with effect handlers ${\lambda_{\textrm{h}}}$.

3.1 Base calculus

The base calculus ${\lambda_{\textrm{b}}}$ is a fine-grain call-by-value (Levy et al., 2003) variation of PCF (Plotkin, 1977). Fine-grain call-by-value is similar to A-normal form (Flanagan et al., 1993) in that every intermediate computation is named, but unlike A-normal form it is closed under reduction.

The syntax of ${\lambda_{\textrm{b}}}$ is as follows.

The ground types are Nat and Unit, which classify natural number values and the unit value, respectively. The function type $A \to B$ classifies functions that map values of type A to values of type B. The binary product type $A \times B$ classifies pairs of values whose first and second components have types A and B, respectively. The sum type $A + B$ classifies tagged values of either type A or B. Type environments $\Gamma$ map term variables to their types. For hygiene, we require that the variables appearing in a type environment are distinct.

We let k range over natural numbers and c range over primitive operations on natural numbers ($+, -, =$). We let x, y, z range over term variables. We also use f, g, h, q for variables of function type and i, j for variables of type Nat. The value terms are standard.

All elimination forms are computation terms. Abstraction is eliminated using application ($V\,W$). The product eliminator $(\mathbf{let} \; \langle x,y \rangle = V \; \mathbf{in} \; N)$ splits a pair V into its constituents and binds them to x and y, respectively. Sums are eliminated by a case split ($\mathbf{case}\; V\;\{\mathbf{inl}\; x \mapsto M; \mathbf{inr}\; y \mapsto N\}$). A trivial computation $(\mathbf{return}\;V)$ returns value V. The sequencing expression $(\mathbf{let} \; x \leftarrow M \; \mathbf{in} \; N)$ evaluates M and binds the result value to x in N.

The typing rules are those given in Figure 1, along with the familiar Exchange, Weakening and Contraction rules for environments. (Note that thanks to Weakening we are able to type terms such as $(\lambda x^A. (\lambda x^B.x))$, even though environments are not permitted to contain duplicate variables.) We require two typing judgements: one for values and the other for computations. The judgement $\Gamma \vdash \square : A$ states that a $\square$-term has type A under type environment $\Gamma$, where $\square$ is either a value term (V) or a computation term (M). The constants have the following types.

Fig. 1. Typing rules for ${\lambda_{\textrm{b}}}$.

We give a small-step operational semantics for ${\lambda_{\textrm{b}}}$ with evaluation contexts in the style of Felleisen (1987). The reduction relation $\leadsto$ is defined on computation terms via the rules given in Figure 2. The statement $M \leadsto N$ reads: term M reduces to term N in one step. We write $R^+$ for the transitive closure of relation R and $R^*$ for the reflexive, transitive closure of relation R.

Fig. 2. Contextual small-step operational semantics.

Most often, we are interested in $\leadsto$ as a relation on closed terms. However, we will sometimes consider it as a relation on terms involving free variables, with the stipulation that none of these free variables also occur as bound variables within the terms. Since we never perform reductions under a binder, this means that the notation $M[V/x]$ in our rules may be taken simply to mean M with V textually substituted for free occurrences of x (no variable capture is possible). We also take $\ulcorner c \urcorner$ to mean the usual interpretation of constant c as a meta-level function on closed values.

The type soundness of our system is easily verified. This is subsumed by the property we shall formally state for the richer language ${\lambda_{\textrm{h}}}$ in Theorem 1 below.

When dealing with reductions $N \leadsto N'$, we shall often make use of the idea that certain subterm occurrences within N' arise from corresponding identical subterms of N. For instance, in the case of a reduction $(\lambda x^A .M) V \leadsto M[V/x]$, we shall say that any subterm occurrence P within any of the substituted copies of V on the right-hand side is a descendant of the corresponding subterm occurrence within the V on the left-hand side. (Descendants are called residuals e.g. in Barendregt, 1984.) Similarly, any subterm occurrence Q of $M[V/x]$ not overlapping with any of these substituted copies of V is a descendant of the corresponding occurrence of an identical subterm within the M on the left. This notion extends to the other reduction rules in the evident way; we suppress the formal details. If P' is a descendant of P, we also say that P is an ancestor of P'. By transitivity we extend these notions to the relations $\leadsto^+$ and $\leadsto^*$. Note that if $N \leadsto^* N'$ then a subterm occurrence in N' may have at most one ancestor in N but a subterm occurrence in N may have many descendants in N'.

Notation. We elide type annotations when clear from context. For convenience we often write code in direct-style assuming the standard left-to-right call-by-value elaboration into fine-grain call-by-value (Moggi, 1991; Flanagan et al., 1993). For example, the expression $f\,(h\,w) + g\,{\langle \rangle}$ is syntactic sugar for:
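As a hedged reconstruction of this elaboration, assuming left-to-right evaluation and with the intermediate names x, y, z chosen here purely for illustration, the direct-style expression would unfold by naming each intermediate computation in turn:

```latex
\mathbf{let}\; x \leftarrow h\,w \;\mathbf{in}\;
\mathbf{let}\; y \leftarrow f\,x \;\mathbf{in}\;
\mathbf{let}\; z \leftarrow g\,\langle\rangle \;\mathbf{in}\;
y + z
```

The argument $h\,w$ is evaluated first, then the application of f, then $g\,\langle\rangle$, and only then is the primitive addition applied to the two named results.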

We define sequencing of computations in the standard way.

We make use of standard syntactic sugar for pattern matching. For instance, we write

for suspended computations, and if the binder has a type other than Unit, we write:

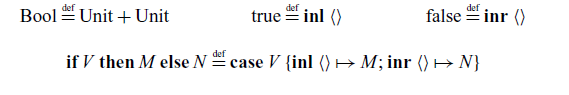

We use the standard encoding of booleans as a sum:
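One common form of this encoding, given here as an assumption rather than as the paper's exact definition, takes Bool to be a sum of two unit types and derives the conditional from the case split:

```latex
\mathrm{Bool} \;\overset{\mathrm{def}}{=}\; \mathrm{Unit} + \mathrm{Unit}
\qquad
\mathrm{true} \;\overset{\mathrm{def}}{=}\; \mathbf{inl}\;\langle\rangle
\qquad
\mathrm{false} \;\overset{\mathrm{def}}{=}\; \mathbf{inr}\;\langle\rangle

\mathbf{if}\; V \;\mathbf{then}\; M \;\mathbf{else}\; N
\;\overset{\mathrm{def}}{=}\;
\mathbf{case}\; V\;\{\mathbf{inl}\;\langle\rangle \mapsto M;\; \mathbf{inr}\;\langle\rangle \mapsto N\}
```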

3.2 Handler calculus

We now define ${\lambda_{\textrm{h}}}$ as an extension of ${\lambda_{\textrm{b}}}$.

We assume a fixed effect signature $\Sigma$ that associates types $\Sigma(\ell)$ to finitely many operation symbols $\ell$. An operation type $A \to B$ classifies operations that take an argument of type A and return a result of type B. A handler type $C \Rightarrow D$ classifies effect handlers that transform computations of type C into computations of type D. Following Pretnar (2015), we assume a global signature for every program. Computations are extended with operation invocation ($\mathbf{do}\;\ell\;V$) and effect handling ($\mathbf{handle}\; M \;\mathbf{with}\; H$). Handlers are constructed from a single success clause $(\{\mathbf{val}\; x \mapsto M\})$ and an operation clause $(\{ \ell \; p \; r \mapsto N \})$ for each operation $\ell$ in $\Sigma$; here x, p, r are considered as bound variables. Following Plotkin & Pretnar (2013), we adopt the convention that a handler with missing operation clauses (with respect to $\Sigma$) is syntactic sugar for one in which all missing clauses perform explicit forwarding:
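The forwarding convention can be sketched in Python under a tree encoding of computations. The classes `Return` and `Op`, the function `handle`, and the operation name `Ask` are all hypothetical. The sketch assumes that a forwarding clause has the usual shape of re-invoking the operation and passing its result to the resumption, which in the tree encoding amounts to rebuilding the operation node with the resumption as its continuation.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Return:
    value: Any


@dataclass
class Op:
    label: str
    arg: Any
    cont: Callable[[Any], Any]


def bind(m, f):
    if isinstance(m, Return):
        return f(m.value)
    return Op(m.label, m.arg, lambda x: bind(m.cont(x), f))


def do(label, arg=()):
    return Op(label, arg, Return)


def handle(m, on_return, clauses):
    """Handler returning a computation; `clauses` maps labels to clauses."""
    if isinstance(m, Return):
        return on_return(m.value)
    resume = lambda x: handle(m.cont(x), on_return, clauses)
    if m.label in clauses:
        return clauses[m.label](m.arg, resume)
    # Missing clause: forward the operation outwards, then resume with
    # whatever response an enclosing handler supplies.
    return Op(m.label, m.arg, resume)


def prog():
    # Ask for a number, then Branch on whether to negate it.
    return bind(do("Ask"),
                lambda n: bind(do("Branch"),
                               lambda b: Return(n if b else -n)))


# The inner handler only knows Branch, so Ask is forwarded past it.
inner = handle(prog(), Return, {"Branch": lambda _, r: r(True)})
outer = handle(inner, Return, {"Ask": lambda _, r: r(42)})
print(inner.label, outer.value)  # prints Ask 42
```

After the outer handler answers Ask, the resumed computation is still wrapped by the inner handler, so the subsequent Branch is handled there.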

The typing rules for ${\lambda_{\textrm{h}}}$ are those of ${\lambda_{\textrm{b}}}$ (Figure 1) plus three additional rules for operations, handling, and handlers given in Figure 3. The T-Do rule ensures that an operation invocation is only well-typed if the operation $\ell$ appears in the effect signature $\Sigma$ and the argument type A matches the type of the provided argument V. The result type B determines the type of the invocation. The T-Handle rule types handler application. The T-Handler rule ensures that the bodies of the success clause and the operation clauses all have the output type D. The type of x in the success clause must match the input type C. The types of the parameter p ($A_\ell$) and resumption r ($B_\ell \to D$) in operation clause $H^{\ell}$ are determined by the type of $\ell$; the return type of r is D, as the body of the resumption will itself be handled by H. We write $H^{\ell}$ and $H^{\mathrm{val}}$ for projecting operation and success clauses, respectively.

We extend the operational semantics to ${\lambda_{\textrm{h}}}$. Specifically, we add two new reduction rules: one for handling return values and another for handling operation invocations.

The first rule invokes the success clause. The second rule handles an operation via the corresponding operation clause.
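For orientation, the customary formulation of these two rules for deep handlers is sketched below. It is a reconstruction under that assumption, written in the notation of this section, and is not copied from the paper's figure; the bound variable y is chosen here for illustration.

```latex
\begin{array}{lcl}
\mathbf{handle}\;(\mathbf{return}\;V)\;\mathbf{with}\;H
  &\leadsto& N[V/x]
  \quad \text{where } H^{\mathrm{val}} = \{\mathbf{val}\;x \mapsto N\} \\[4pt]
\mathbf{handle}\;\mathcal{E}[\mathbf{do}\;\ell\;V]\;\mathbf{with}\;H
  &\leadsto& N[V/p,\ (\lambda y.\,\mathbf{handle}\;\mathcal{E}[\mathbf{return}\;y]\;\mathbf{with}\;H)/r]
  \quad \text{where } H^{\ell} = \{\ell\;p\;r \mapsto N\}
\end{array}
```

In the second rule the resumption substituted for r wraps the captured context $\mathcal{E}$ in the same handler H, which is why the resumption has result type D.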

Fig. 3. Additional typing rules for ${\lambda_{\textrm{h}}}$.

To allow for the evaluation of subterms within $\mathbf{handle}$ expressions, we extend our earlier grammar for evaluation contexts to one for handler contexts:

We then replace the S-Lift rule with a corresponding rule for handler contexts.

However, it is critical that the rule S-Op is restricted to pure evaluation contexts $\mathcal{E}$ rather than handler contexts. This ensures that the $\mathbf{do}$ invocation is handled by the innermost handler (recalling our convention that all handlers handle all operations). If arbitrary handler contexts $\mathcal{H}$ were permitted in this rule, the semantics would become non-deterministic, as any handler in scope could be selected.
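The innermost-handler discipline can be observed in a short Python sketch. It assumes a tree encoding of computations with hypothetical `Return` and `Op` classes and a hypothetical `handle` function; two nested handlers both provide a Branch clause but answer differently, so the result reveals which one was selected.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Return:
    value: Any


@dataclass
class Op:
    label: str
    arg: Any
    cont: Callable[[Any], Any]


def handle(m, clauses):
    """Handler with an identity success clause; unknown operations are forwarded."""
    if isinstance(m, Return):
        return m
    resume = lambda x: handle(m.cont(x), clauses)
    if m.label in clauses:
        return clauses[m.label](m.arg, resume)
    return Op(m.label, m.arg, resume)


answer_true = {"Branch": lambda _, r: r(True)}
answer_false = {"Branch": lambda _, r: r(False)}

branch = Op("Branch", (), Return)  # a lone Branch invocation

# The inner handler is applied first, so it alone sees the invocation.
inner_true = handle(handle(branch, answer_true), answer_false)
inner_false = handle(handle(branch, answer_false), answer_true)
print(inner_true.value, inner_false.value)  # prints True False
```

Because the inner handler consumes the operation node, the outer handler only ever sees a returned value, which mirrors the determinism that the restriction to pure evaluation contexts provides.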

The ancestor-descendant relation for subterm occurrences extends to ${\lambda_{\textrm{h}}}$ in the obvious way.

We now characterise normal forms and state the standard type soundness property of ${\lambda_{\textrm{h}}}$.

Definition 1 (Computation normal forms). A computation term N is normal with respect to $\Sigma$ if $N = \mathbf{return}\;V$ for some V or $N = \mathcal{E}[\mathbf{do}\;\ell\,W]$ for some $\ell \in \mathrm{dom}(\Sigma)$, $\mathcal{E}$, and W.

Theorem 1 (Type Soundness for ${\lambda_{\textrm{h}}}$). If $\vdash M : C$, then either there exists $\vdash N : C$ such that $M \leadsto^\ast N$ and N is normal with respect to $\Sigma$, or M diverges.

It is worth observing that our language does not prohibit ‘operation extrusion’: even if we begin with a term in which all $\mathbf{do}$ invocations fall within the scope of a handler, this property need not be preserved by reductions, since a $\mathbf{do}$ invocation may pass another $\mathbf{do}$ to the outermost handler. Such behaviour may be readily ruled out using a type-and-effect system, but this additional machinery is not necessary for our present purposes.

4 Abstract machine semantics

Thus far we have introduced the base calculus ${\lambda_{\textrm{b}}}$ and its extension with effect handlers ${\lambda_{\textrm{h}}}$. For each calculus, we have given a small-step operational semantics which uses a substitution model for evaluation. Whilst this model is semantically pleasing, it falls short of providing a realistic account of practical computation, as substitution is an expensive operation. We now develop a more practical model of computation based on an abstract machine semantics.

4.1 Base machine

We choose a CEK-style abstract machine semantics (Felleisen & Friedman, 1987) for ${\lambda_{\textrm{b}}}$ based on that of Hillerström et al. (2020). The CEK machine operates on configurations which are triples of the form $\langle M \mid \gamma \mid \sigma \rangle$. The first component contains the computation currently being evaluated. The second component contains the environment $\gamma$ which binds free variables. The third component contains the continuation which instructs the machine how to proceed once evaluation of the current computation is complete. The syntax of abstract machine states is as follows.

Values consist of function closures, constants, pairs, and left or right tagged values. We refer to continuations of the base machine as pure. A pure continuation is a stack of pure continuation frames. A pure continuation frame $(\gamma, x, N)$ closes a let-binding $\mathbf{let} \;x \leftarrow [~] \;\mathbf{in}\;N$ over environment $\gamma$. We write $[]$ for an empty pure continuation and $\phi :: \sigma$ for the result of pushing the frame $\phi$ onto $\sigma$. We use pattern matching to deconstruct pure continuations.

The abstract machine semantics is given in Figure 4. The transition relation ($\longrightarrow$) makes use of the value interpretation ($[\![ - ]\!]$) from value terms to machine values. The machine is initialised by placing a term in a configuration alongside the empty environment ($\emptyset$) and the identity pure continuation ($[]$). The rules (M-App), (M-Rec), (M-Const), (M-Split), (M-CaseL), and (M-CaseR) eliminate values. The (M-Let) rule extends the current pure continuation with let bindings. The (M-RetCont) rule extends the environment in the top frame of the pure continuation with a returned value. Given an input of a well-typed closed computation term $\vdash M : A$, the machine will either diverge or return a value of type A. A final state is given by a configuration of the form $\langle \mathbf{return}\;V \mid \gamma \mid [] \rangle$, in which case the final return value is given by the denotation $[\![ V ]\!] \gamma$ of V under environment $\gamma$.
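To illustrate how such a machine runs, here is a minimal Python sketch of a CEK-style loop for a small fragment of the base calculus (variables, naturals, pairs, abstraction, application, return, let, and the primitive `+`). The tuple-based term encoding, the function names `interp` and `run`, and the omission of recursion, product splitting and case analysis are all simplifications assumed here; the rule names in the comments only indicate which transition each branch is meant to resemble.

```python
def interp(v, env):
    """Value interpretation: map a value term to a machine value."""
    tag = v[0]
    if tag == "var":
        return env[v[1]]
    if tag == "nat":
        return v[1]
    if tag == "lam":                       # closure: environment, binder, body
        return ("clo", env, v[1], v[2])
    if tag == "pair":
        return (interp(v[1], env), interp(v[2], env))
    if tag == "prim":
        return ("prim", v[1])
    raise ValueError(f"not a value term: {v!r}")


PRIMS = {"+": lambda p: p[0] + p[1]}       # meta-level meaning of constants


def run(term):
    """Run a closed, well-typed computation term to a final machine value."""
    comp, env, stack = term, {}, []        # initial configuration
    while True:
        tag = comp[0]
        if tag == "let":                   # cf. M-Let: push frame (env, x, N)
            _, x, m, n = comp
            stack.append((env, x, n))
            comp = m
        elif tag == "app":
            f, arg = interp(comp[1], env), interp(comp[2], env)
            if f[0] == "clo":              # cf. M-App: enter the closure body
                _, cenv, x, body = f
                comp, env = body, {**cenv, x: arg}
            else:                          # cf. M-Const: apply a primitive
                comp, env = ("ret", ("nat", PRIMS[f[1]](arg))), {}
        elif tag == "ret":
            value = interp(comp[1], env)
            if not stack:                  # final state: empty continuation
                return value
            fenv, x, n = stack.pop()       # cf. M-RetCont: bind in top frame
            comp, env = n, {**fenv, x: value}
        else:
            raise ValueError(f"not a computation term: {comp!r}")


# double = \n. n + n, applied twice in sequence starting from 3.
double = ("lam", "n",
          ("app", ("prim", "+"), ("pair", ("var", "n"), ("var", "n"))))
prog = ("let", "x", ("app", double, ("nat", 3)),
        ("let", "y", ("app", double, ("var", "x")),
         ("ret", ("var", "y"))))
print(run(prog))  # prints 12
```

No substitution is ever performed: variables are looked up in the environment, and each let pushes a frame that is popped when the bound computation returns.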

Fig. 4. Abstract machine semantics for ${\lambda_{\textrm{b}}}$.

Correctness. The base machine faithfully simulates the operational semantics for ${\lambda_{\textrm{b}}}$; most transitions correspond directly to $\beta$-reductions, but M-Let performs an administrative step to bring the computation M into evaluation position. We formally state and prove the correspondence in Appendix A, relying on an inverse map $(|-|)$ from configurations to terms (Hillerström et al., 2020).

4.2 Handler machine

We now enrich the ${\lambda_{\textrm{b}}}$ machine to a ${\lambda_{\textrm{h}}}$ machine. We extend the syntax as follows.

The notion of configurations changes slightly in that the continuation component is replaced by a generalised continuation $\kappa \in \mathsf{Cont}$ (Hillerström et al., 2020); a continuation is now a list of resumptions. A resumption is a pair of a pure continuation (as in the base machine) and a handler closure ($\chi$). A handler closure consists of an environment and a handler definition, where the former binds the free variables that occur in the latter. The machine is initialised by placing a term in a configuration alongside the empty environment ($\emptyset$) and the identity continuation ($\kappa_0$). The latter is a singleton list containing the identity resumption, which consists of the identity pure continuation paired with the identity handler closure:

Machine values are augmented to include resumptions, since an operation invocation causes the topmost frame of the machine continuation to be reified (and bound to the resumption parameter in the operation clause).
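The shape of these structures can be sketched in Python as follows. This is only an illustration built from the prose description: the class names, the dictionary layout of a handler definition, and the choice of an empty tuple for the identity pure continuation and of a handler with a pass-through success clause and no operation clauses for the identity handler closure are all assumptions, not definitions taken from the paper.

```python
from dataclasses import dataclass
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class HandlerClosure:
    env: Dict[str, Any]      # binds the free variables of the handler definition
    handler: Dict[str, Any]  # assumed layout: {"val": success clause, "ops": {label: clause}}


@dataclass(frozen=True)
class Resumption:
    pure: Tuple[Any, ...]    # pure continuation: a stack of frames, as in the base machine
    closure: HandlerClosure


# A generalised continuation is a list of resumptions (modelled here as a tuple).
Cont = Tuple[Resumption, ...]

# Assumed identity handler closure: empty environment, success clause returns its argument.
IDENTITY_CLOSURE = HandlerClosure(env={}, handler={"val": lambda x: x, "ops": {}})

# Identity continuation: a singleton list containing the identity resumption.
KAPPA_0: Cont = (Resumption(pure=(), closure=IDENTITY_CLOSURE),)


def initial_configuration(term: Any) -> Tuple[Any, Dict[str, Any], Cont]:
    """Place a term alongside the empty environment and the identity continuation."""
    return (term, {}, KAPPA_0)
```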

The handler machine adds transition rules for handlers and modifies $(\text{M-Let})$ and $(\text{M-RetCont})$ from the base machine to account for the richer continuation structure. Figure 5 depicts the new and modified rules. The $(\text{M-Handle})$ rule pushes a handler closure along with an empty pure continuation onto the continuation stack. The $(\text{M-RetHandler})$ rule transfers control to the success clause of the current handler once the pure continuation is empty. The $(\text{M-Handle-Op})$ rule transfers control to the matching operation clause on the topmost handler, and during the process it reifies the handler closure. Finally, the $(\text{M-Resume})$ rule applies a reified handler closure, by pushing it onto the continuation stack. The handler machine has two possible final states: either it yields a value or it gets stuck on an unhandled operation.

Fig. 5. Abstract machine semantics for ${\lambda_{\textrm{h}}}$.
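To make the effect of these rules on the continuation stack concrete, the following Python sketch models only the continuation component of a configuration. It is reconstructed from the prose description rather than from Figure 5 itself: the function names, the representation of a handler closure as an `(env, handler)` pair, and the use of `None` to signal a stuck state are assumptions, and the environment updates that bind parameters are omitted.

```python
EMPTY = ()  # empty pure continuation


def m_handle(closure, kappa):
    """Push a handler closure together with an empty pure continuation."""
    return ((EMPTY, closure),) + kappa


def m_let(frame, kappa):
    """Push a frame onto the pure continuation of the topmost resumption."""
    (pure, closure), rest = kappa[0], kappa[1:]
    return (((frame,) + pure, closure),) + rest


def m_ret_handler(kappa):
    """With an empty pure continuation, hand control to the success clause."""
    (pure, closure), rest = kappa[0], kappa[1:]
    assert pure == EMPTY, "applies only once the pure continuation is empty"
    env, handler = closure
    return handler["val"], env, rest


def m_handle_op(label, kappa):
    """Select the matching clause of the topmost handler and reify the top frame."""
    (pure, closure), rest = kappa[0], kappa[1:]
    env, handler = closure
    if label not in handler["ops"]:
        return None  # stuck: unhandled operation
    resumption = (pure, closure)  # the reified topmost frame
    return handler["ops"][label], env, resumption, rest


def m_resume(resumption, kappa):
    """Reinstate a reified frame by pushing it back onto the continuation stack."""
    return (resumption,) + kappa
```

In this model, invoking an operation and immediately resuming restores the continuation that was in place before the invocation, and the same resumption value can be pushed more than once.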

Correctness. The handler machine faithfully simulates the operational semantics of ${\lambda_{\textrm{h}}}$. Extending the result for the base machine, we formally state and prove the correspondence in Appendix B.

4.3 Realisability and asymptotic complexity

As witnessed by the work of Hillerström & Lindley (2016), the machine structures are readily realisable using standard persistent functional data structures. Pure continuations on the base machine and generalised continuations on the handler machine can be implemented using linked lists with a time complexity of $\mathcal{O}(1)$ for the extension operation $(\_ :: \_)$. The topmost pure continuation on the handler machine may also be extended in time $\mathcal{O}(1)$, as extending it only requires reaching under the topmost handler closure. Environments, $\gamma$, can be realised using a map, with a time complexity of $\mathcal{O}(\log|\gamma|)$ for extension and lookup (Okasaki, 1999). We can use the same technique to realise label lookup, $H^{\ell}$, with time complexity $\mathcal{O}(\log|\Sigma|)$. However, in Section 5.4, we shall work only with a single effect operation, so $|\Sigma| = 1$, meaning that in our analysis we can practically treat label lookup as being a constant time operation.
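As an illustration of the constant-time extension, here is a minimal Python sketch that uses immutable cons cells. The representation (a cons cell as a pair `(head, tail)`, with `None` for the empty list) and the function names are assumptions made for this example only; environments and label lookup are not modelled.

```python
def cons(head, tail):
    """Extend a persistent linked list with one element: allocates a single cell."""
    return (head, tail)


def extend_topmost(frame, kappa):
    """Push a frame onto the pure continuation of the topmost resumption.

    kappa is a non-empty cons list of resumptions (pure, closure). Only the
    topmost resumption and the topmost list cell are rebuilt; the old pure
    continuation and the rest of kappa are shared, so the cost is constant.
    """
    (pure, closure), rest = kappa
    return cons((cons(frame, pure), closure), rest)
```

Because nothing is mutated, the continuation held before the extension stays valid afterwards, which is what allows a frame to be reused without copying.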

The worst-case time complexity of a single machine transition is exhibited by rules which involve the value translation function, $[\![ - ]\!] \gamma$, as it is defined structurally on values. Its worst-case time complexity is exhibited by a nesting of pairs of variables $[\![ \langle x_1, \langle x_2, \cdots, \langle x_{n-1}, x_n \rangle \cdots \rangle \rangle ]\!] \gamma$, which has complexity $\mathcal{O}(n\log|\gamma|)$.
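The bound can be seen by counting environment lookups in a structural interpretation of values. The sketch below is a hypothetical model: the tagged-tuple encoding of values and the names `interpret` and `nested_pairs` are assumptions, and a Python `dict` stands in for the map, so each lookup here has expected constant cost rather than the $\mathcal{O}(\log|\gamma|)$ of a balanced tree. What the sketch shows is that a nesting of $n$ variables triggers $n$ lookups.

```python
def interpret(value, env, counter):
    """Structurally translate a syntactic value, counting environment lookups."""
    kind = value[0]
    if kind == "var":
        counter[0] += 1  # one lookup per variable occurrence
        return env[value[1]]
    if kind == "pair":
        left = interpret(value[1], env, counter)
        right = interpret(value[2], env, counter)
        return (left, right)
    raise ValueError(f"unknown value form: {kind}")


def nested_pairs(n):
    """Build <x1, <x2, ..., <x_{n-1}, x_n> ... >> for n >= 1."""
    value = ("var", f"x{n}")
    for i in range(n - 1, 0, -1):
        value = ("pair", ("var", f"x{i}"), value)
    return value
```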

Continuation copying. On the handler machine the topmost continuation frame can be copied in constant time due to the persistent runtime and the layout of machine continuations. An alternative design would be to make the runtime non-persistent, in which case copying a continuation frame $((\sigma, \chi) :: \_)$ would be a $\mathcal{O}(|\sigma| + |\chi|)$ time operation, where $|\chi|$ is the size of the handler closure $\chi$.

Primitive operations on naturals. Our model assumes that arithmetic operations on arbitrary natural numbers take $\mathcal{O}(1)$ time. This is common practice in the study of algorithms when the main interest lies elsewhere (Cormen et al., 2009, Section 2.2). If desired, one could adopt a more refined cost model that accounted for the bit-level complexity of arithmetic operations; however, doing so would have the same impact on both of the situations we wish to compare, and thus would add nothing but noise to the overall analysis.

5 Predicates, decision trees and generic count

We now come to the crux of the paper. In this section and the next, we prove that ${\lambda_{\textrm{h}}}$ supports implementations of certain operations with an asymptotic runtime bound that cannot be achieved in ${\lambda_{\textrm{b}}}$ (Section 8). While the positive half of this claim essentially consolidates a known piece of folklore, the negative half appears to be new. To establish our result, it will suffice to exhibit a single ‘efficient’ program in ${\lambda_{\textrm{h}}}$, then show that no equivalent program in ${\lambda_{\textrm{b}}}$ can achieve the same asymptotic efficiency. We take generic search as our example.

Generic search is a modular search procedure that takes as input a predicate P on some multi-dimensional search space and finds all points of the space satisfying P. Generic search is agnostic to the specific instantiation of P, and as a result is applicable across a wide spectrum of domains. Classic examples such as Sudoku solving (Bird, 2006), the n-queens problem (Bell & Stevens, 2009) and graph colouring can be cast as instances of generic search, and similar ideas have been explored in connection with exact real integration (Simpson, 1998; Daniels, 2016).

For simplicity, we will restrict attention to search spaces of the form $\mathbb{B}^n$, the set of bit vectors of length n. To exhibit our phenomenon in the simplest possible setting, we shall actually focus on the generic count problem: given a predicate P on some $\mathbb{B}^n$, return the number of points of $\mathbb{B}^n$ satisfying P. However, we shall explain why our results are also applicable to generic search proper.
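To fix intuitions about the problem statement, the following Python sketch gives a naive generic count that enumerates every point of $\mathbb{B}^n$ and applies the predicate to each. It is a baseline for the specification only and is not meant to represent the efficient program discussed in this section; the names `generic_count`, `Point` and `Predicate`, and the choice to present a point as a function from indices to booleans, are assumptions of this example.

```python
from itertools import product
from typing import Callable

Point = Callable[[int], bool]        # a point of B^n, queried one index at a time
Predicate = Callable[[Point], bool]  # a predicate P on B^n


def generic_count(n: int, pred: Predicate) -> int:
    """Count the points of B^n satisfying pred by exhaustive enumeration.

    The predicate is applied once per point, i.e. 2**n times in total.
    """
    count = 0
    for bits in product((False, True), repeat=n):
        # Bind the current tuple as a default argument so each point is fixed.
        point = lambda i, bits=bits: bits[i]
        if pred(point):
            count += 1
    return count
```

For instance, `generic_count(3, lambda p: p(0) != p(1))` evaluates to 4, since the first two bits must differ while the third is unconstrained.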

We shall view $\mathbb{B}^n$ as the set of functions