1. Introduction

Gardner Murphy, an influential social and personality psychologist, defined learning as something that covers every modification in the behavior to meet the requirements of the environment (Proshansky & Murphy, Reference Proshansky and Murphy1942). Humans and animals rely heavily on the process of trial-and-error to acquire new skills. Experiences like learning how to walk, riding a bicycle, teaching a dog new tricks are all motivated by performing actions that garner positive rewards (e.g., moving forward, not falling, happiness, and getting treats). Such interactions become a significant source of knowledge about new things and uncertain environments. Reinforcement Learning (RL) is a paradigm of Machine Learning (ML) that teaches an agent, a learner, to make sequential decisions in a potentially complex, uncertain environment with the fundamental goal to maximize its overall reward.

Exponential growth in computational power, custom-developed Application Specific Integrated Circuit (ASIC) (Barr, Reference Barr2007), Tensor Processing Units (TPUs)Footnote 1 combined with the availability of enormous training datasets has accelerated training workloads and led to an unprecedented surge of interest in the topic of Deep Reinforcement Learning (DRL), especially in domains like telecommunications (Nikou et al., to appear; Luong et al., Reference Luong2019; Yajnanarayana et al., Reference Yajnanarayana, Ryden and Hevizi2020), networking (Kavalerov et al., Reference Kavalerov, Likhacheva and Shilova2017; Luong et al., Reference Luong2019), game-playing agents (Bellemare et al., Reference Bellemare, Naddaf, Veness and Bowling2013; Mnih et al., Reference Mnih and Kavukcuoglu2015; Silver et al., Reference Silver and Huang2016; Brockman et al., Reference Brockman, Cheung, Pettersson, Schneider, Schulman, Tang and Zaremba2016), robotic manipulations (Abbeel et al., Reference Abbeel, Coates, Quigley and Ng2006; Kalashnikov et al., Reference Kalashnikov2018), and recommender systems (Zheng et al., Reference Zheng, Zhang, Zheng, Xiang, Yuan, Xie and Li2018; Munemasa et al., Reference Munemasa2018).

1.1. Problem

Deep Q-Network (DQN) (Mnih et al., Reference Mnih and Kavukcuoglu2015) uses Neural Networks (NNs) as function approximators to estimate the Q-function, it does so by making enhancements to the original Q-learning algorithm by employing an experience replay buffer and a separate target network that is updated less frequently. Actor-critic algorithms like Asynchronous Advantage Actor Critic (A3C) utilize Monte Carlo samples to estimate the gradients and then learn the parameters of the policy using gradient descent. Revised variants of these algorithms have been published over the past few years, which have improved performance on several complex problems and industry standard benchmarks (Lillicrap et al., Reference Lillicrap2015; van Hasselt et al., Reference van Hasselt, Guez and Silver2016; Wang et al., Reference Wang, Schaul, Hessel, Hasselt, Lanctot and Freitas2016; Silver et al., Reference Silver and Hubert2017). However, all of these approaches entail expensive computations, such as calculating gradients and backpropagation, to train neural networks. Training such models take several hours, even multiple days (Mnih et al., Reference Mnih, Badia, Balcan and Weinberger2016; Mania et al., Reference Mania, Guy and Recht2018), to obtain satisfactory results, mainly when applied to problems that have a very large state space. Some other drawbacks of these algorithms include the sensitivity to the different hyperparameters, poor sample efficiency (Yu, Reference Yu2018), and limited parallelizability.

Recent publications, notably from OpenAI (Salimans et al., Reference Salimans2017) and UberAI (Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018), exhibited the use of Evolutionary Computation (EC) to train deep neural networks for RL problems. The combined use of EC and RL offers several advantages. These gradient-free methods are more robust to the issues of dense and sparse rewards and are also more tolerant to longer time horizons. The inherent design of such algorithms makes them well suited for distributed training which has shown significant speedup of overall training time (Salimans et al., Reference Salimans2017; Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018).

1.2. Research question

The research questions we are looking to address in the current work are:

-

1. How effective are gradient-free evolutionary-driven methods, such as variants of Genetic Algorithms (GAs), in training deep neural networks for RL problems with a large state space? How do they compare to gradient-based algorithms in terms of performance and training time?

-

2. To what extent can evolution-based RL algorithms be scaled? Are the gains worth the extra number of workers?

-

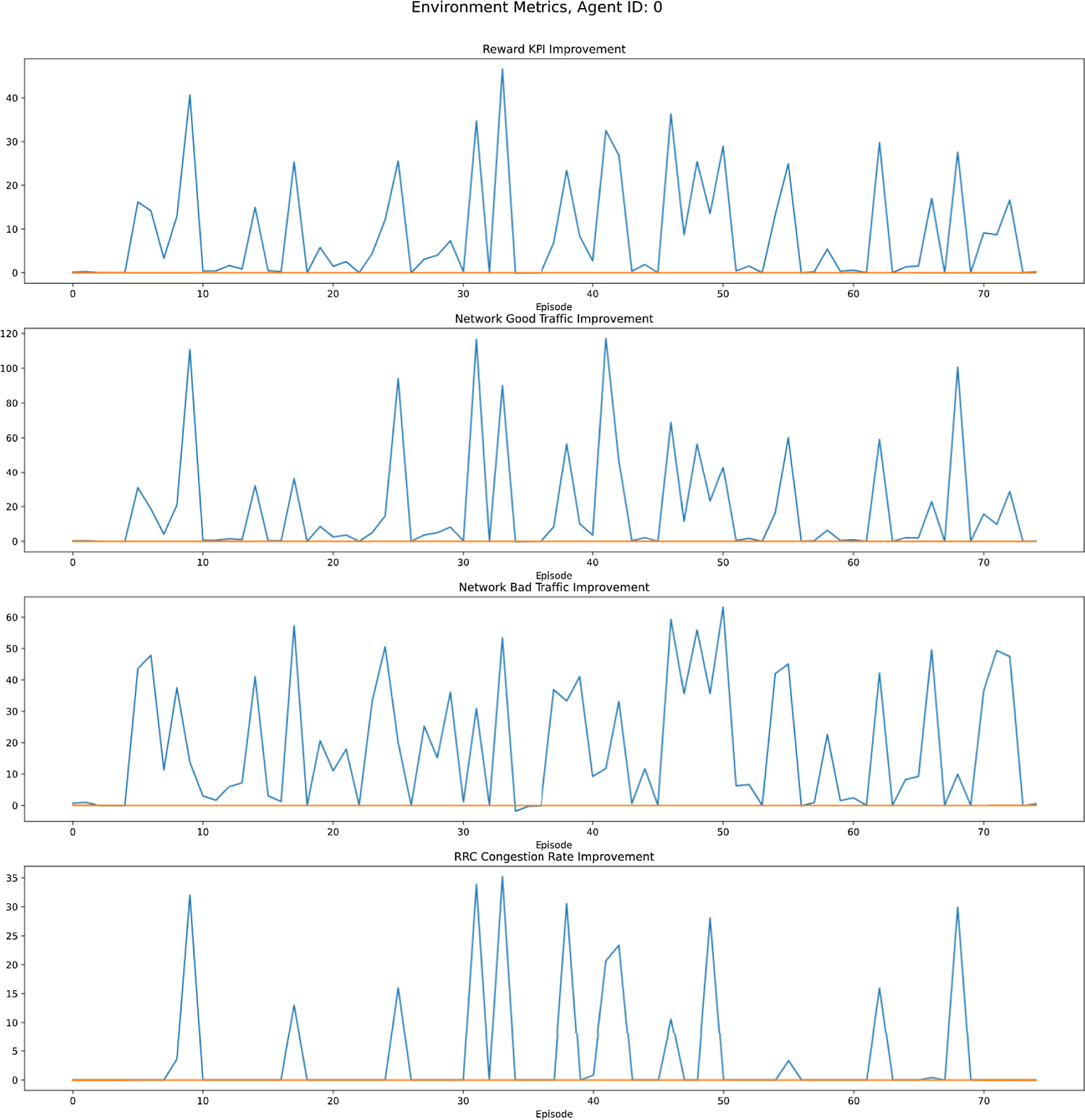

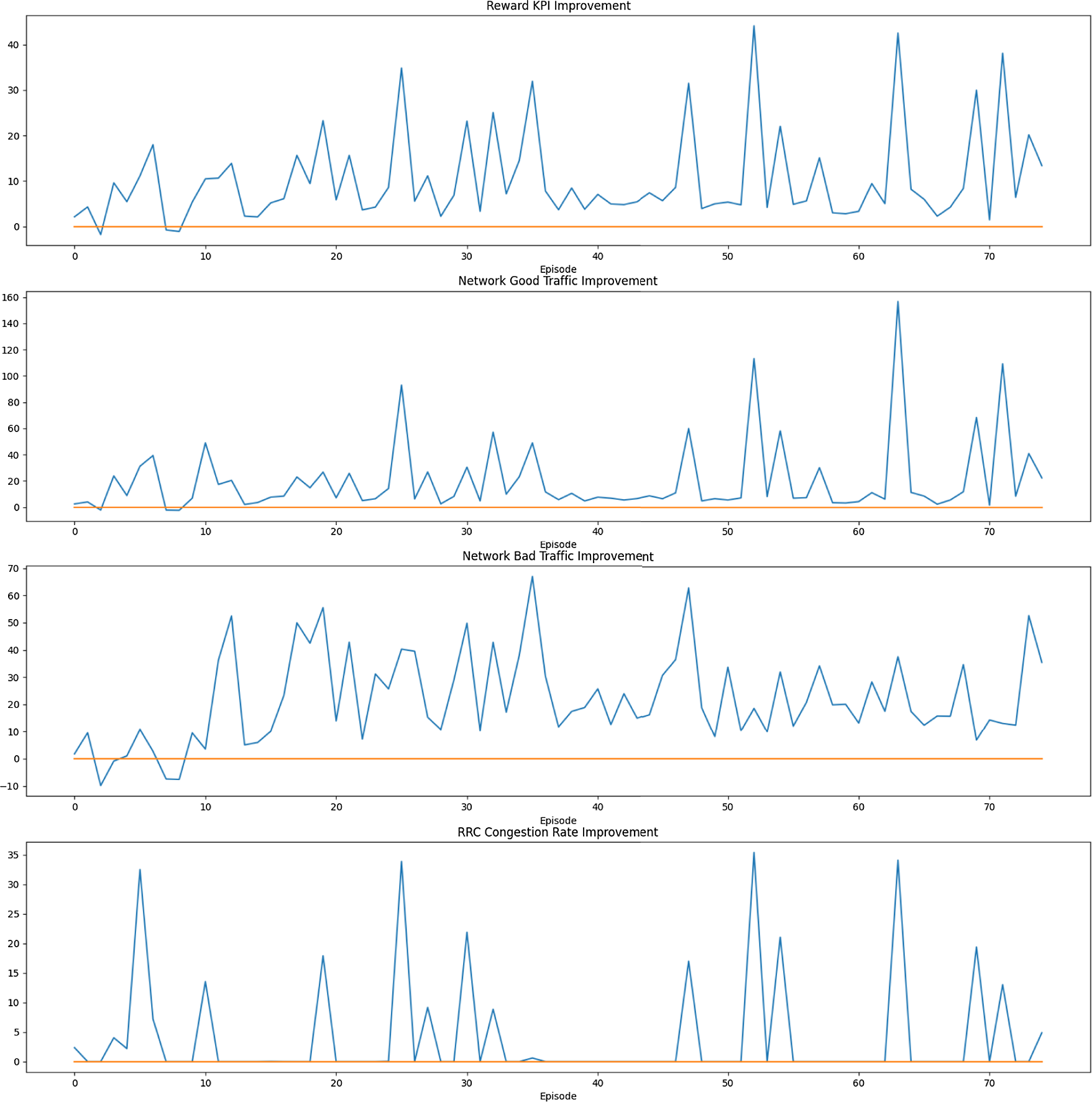

3. How effective are evolution-based methods when applied to RL problems in different domains like the optimization of Remote Electrical Tilt (RET) in telecommunications?

1.3. Contribution

In this work, a novel species-based GA that trains a deep neural network for RL specific problems is proposed. The algorithm which is model-free, gradient-free only utilizes simple genetic operators like mutation, selection, recombination, and crossover for training. A memory efficient encoding of the neural network is presented which simplifies the application of genetic operators and also reduces bandwidth requirement making this approach highly scalable.

1.4. Purpose

The key benefits of using EC-based techniques for training neural networks are the simplicity of implementation, a faster rate of convergence due to distributed training, and the reduced number of hyperparameters. Population-based methods, if implemented efficiently, are massively scalable due to their inherent design and can achieve a significant parallel speedup. The reduced training time makes them well suited for RL problems that require frequent retraining and for those that require multiple policies. Since the method is gradient-free, it does not suffer from known issues of exploding, vanishing gradients and is particularly well suited for non-differentiable domains (Cho et al., Reference Cho2014; Xu et al., Reference Xu2016; Liu et al., Reference Liu2018). Some additional advantages of these black-box optimization techniques include: the improved exploration (Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018) due to their stochastic nature and the invariance to the scale of rewards (sparse/dense) which is something that has to be addressed explicitly in current state-of-the-art methods.

Our work can also be combined with existing Markov Decision Process (MDP)-based methods to develop a hybrid approach. The use of EC-based training for neural networks can also be extended to supervised learning tasks. Lastly, neuroevolution and its application to RL is thought to be one step toward artificial general intelligence, which is a topic of significant interest to researchers all over the world.

1.5. Ethics and sustainability

A recent research study (Strubell et al., Reference Strubell, Ganesh and Mccallum2019) highlighted that training a deep learning model can generate over 600 000 pounds of

![]() $CO_2$

emissions. In comparison, an average Swedish person generates around 22 000 poundsFootnote 2 of

$CO_2$

emissions. In comparison, an average Swedish person generates around 22 000 poundsFootnote 2 of

![]() $CO_2$

emissions(as of 2020) per year. These numbers, when compared, are pretty concerning. Deep learning models for RL rely on specialized hardware like Graphics Processing Units (GPUs) and TPUs which are not only expensive but also consume significant amount of power (Jouppi et al., Reference Jouppi2017). The long training times (Mnih et al., Reference Mnih and Kavukcuoglu2015; Mnih et al., Reference Mnih, Badia, Balcan and Weinberger2016; van Hasselt et al., Reference van Hasselt, Guez and Silver2016) makes the matter much worse. GPUs require an efficient cooling system and maintenance, or else it can thermal throttle, which adversely effects its efficiency and life.

$CO_2$

emissions(as of 2020) per year. These numbers, when compared, are pretty concerning. Deep learning models for RL rely on specialized hardware like Graphics Processing Units (GPUs) and TPUs which are not only expensive but also consume significant amount of power (Jouppi et al., Reference Jouppi2017). The long training times (Mnih et al., Reference Mnih and Kavukcuoglu2015; Mnih et al., Reference Mnih, Badia, Balcan and Weinberger2016; van Hasselt et al., Reference van Hasselt, Guez and Silver2016) makes the matter much worse. GPUs require an efficient cooling system and maintenance, or else it can thermal throttle, which adversely effects its efficiency and life.

The algorithm proposed in this work does not compute gradients and does not perform back-propagation, both of which are computationally expensive tasks. Lack of gradients means that low precision older hardware and only Central Processing Units (CPUs)s can be used as workers during training. Although a parallel implementation utilizes multiple workers, this is well compensated by the significant reduction of training time as evidenced in the experiments. This approach can also benefit researchers and organizations that do not have access to specialized hardware and cloud-based instances.

1.6. Delimitations

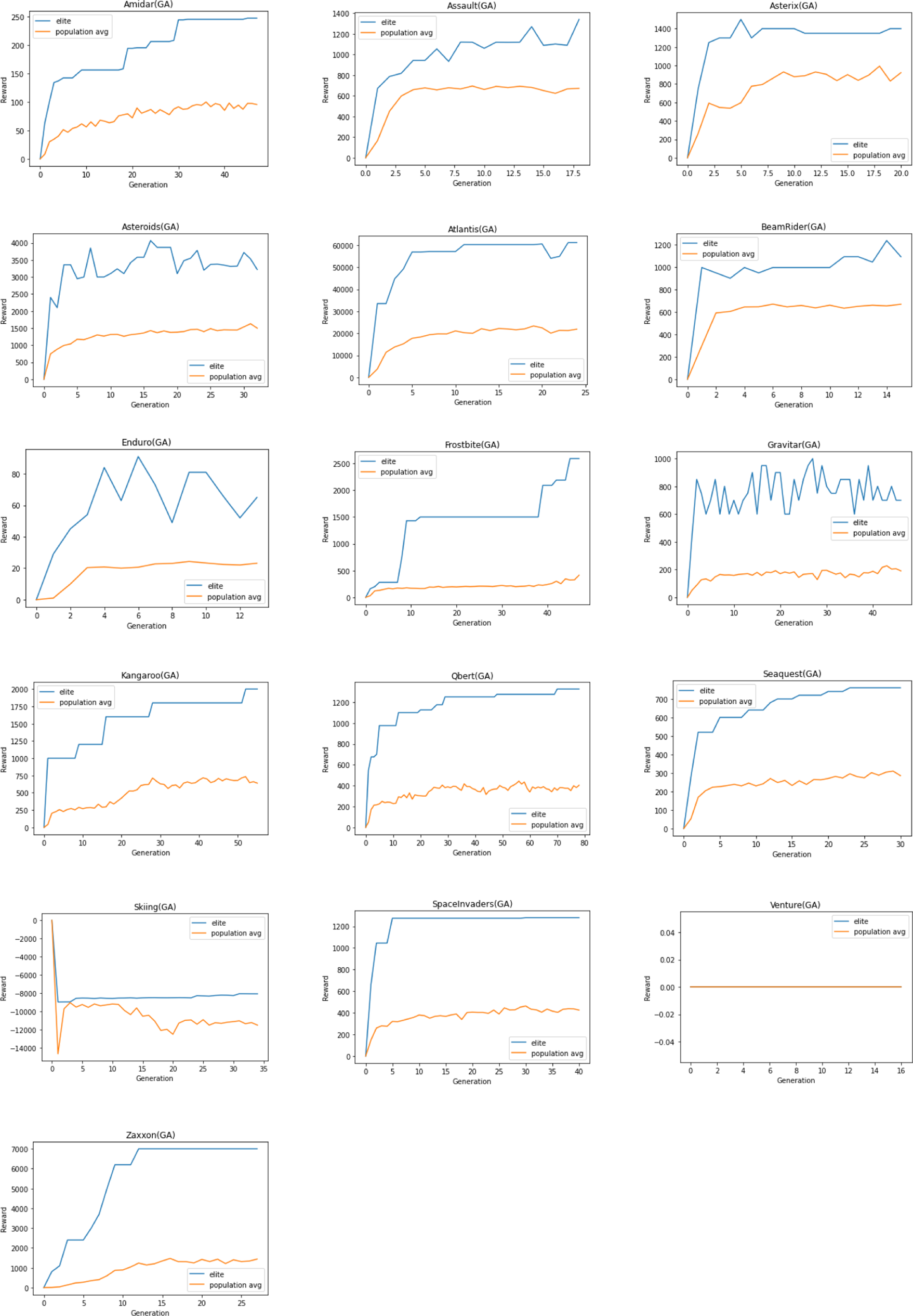

The work aims not to break the records on the Atari 2600 benchmarks but to serve as a proof of concept and explore the potential of a novel approach.

The experiments rely on two open-source libraries, gymFootnote 3 for the environments used for benchmarking and rllibFootnote 4 for the reference implementation of DQN, A3C, and ES. The exact replication of the results is dependant on the specific versions of these libraries.

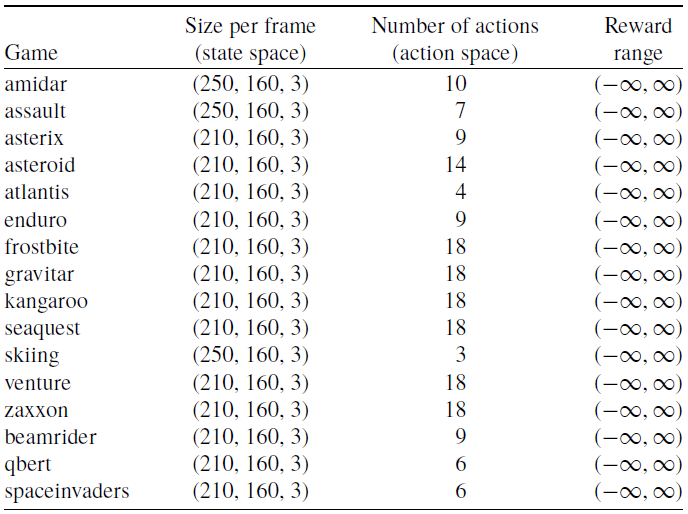

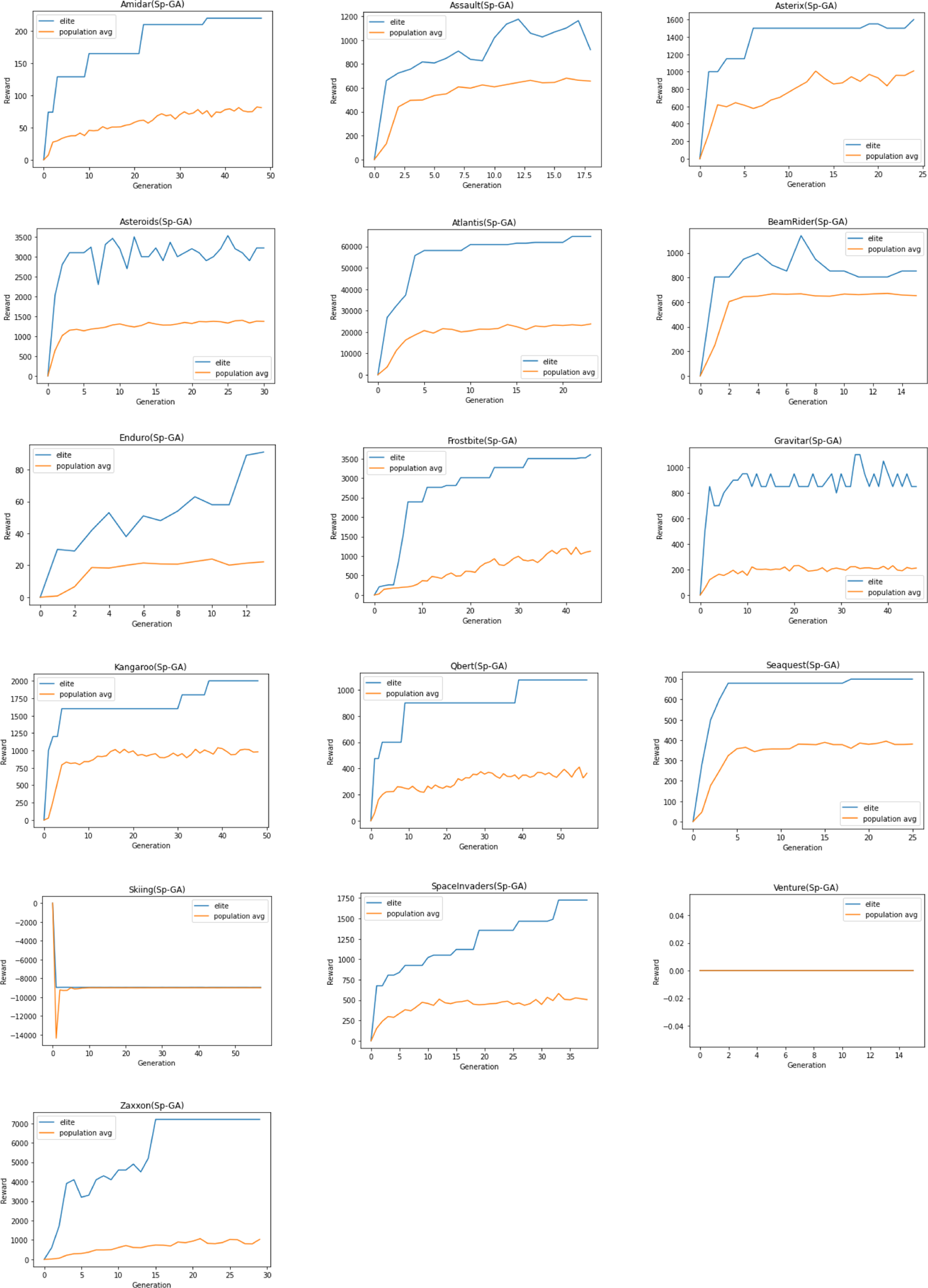

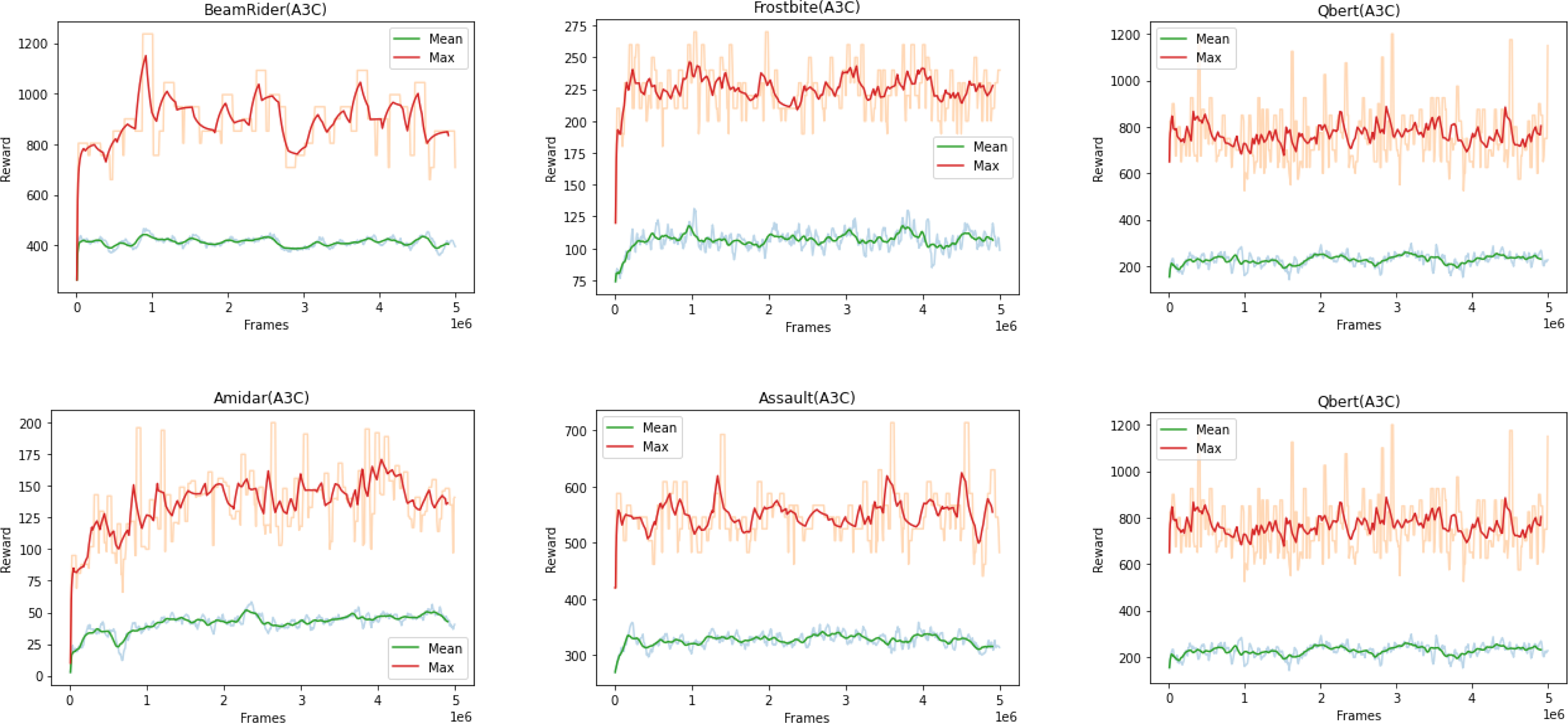

Due to limited resources and time constraints, the same hyperparameters are used for all Atari 2600 games and a single run is performed. Training is limited to a maximum of 25 million training frames on 16 games—some chosen at random while others were chosen from the publication Deep Neuroevolution (Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018).

2. Background

2.1. Reinforcement learning

RL is inherently different from supervised tasks where labeled samples containing both inputs and targets are available for training. For control problems that involve interaction with an entity or an environment, obtaining samples that are both representative and correct for all situations is often unrealistic. RL tackles this problem by allowing a goal-seeking agent to interact with an uncertain environment. This agent is responsible for maintaining a balance between exploration and exploitation to build a rich experience which it uses as a memory to make better decisions progressively.

A RL system can be characterized by the following elements:

-

• Policy: The agent interacts with an environment at discrete time steps

$t=0,1,2,3, \ldots$

. The policy is a rule that guides the agent on what actions (

$t=0,1,2,3, \ldots$

. The policy is a rule that guides the agent on what actions (

$a_{t}$

) to take. It does so by creating a mapping from states (

$a_{t}$

) to take. It does so by creating a mapping from states (

$s_{t}$

), a perceived representation of the environment, and possible actions. Policies can either be deterministic, usually denoted by

$s_{t}$

), a perceived representation of the environment, and possible actions. Policies can either be deterministic, usually denoted by

$\mu$

,

$\mu$

,

$a_{t}=\mu(s_{t})$

, or they can be stochastic, which are usually denoted by

$a_{t}=\mu(s_{t})$

, or they can be stochastic, which are usually denoted by

$\pi$

,

$\pi$

,

$a_{t} \sim \pi\!\left(\cdot \mid s_{t}\right)$

, representing the probability of taking an action at a particular state.

$a_{t} \sim \pi\!\left(\cdot \mid s_{t}\right)$

, representing the probability of taking an action at a particular state. -

• Rewards: are responses from the environment. They represent the immediate and intrinsic appeal of taking a particular action in a specific state.

-

• Value function: is the maximal expected reward that can be accumulated over the future, starting from a particular state. This takes into account the long-time desirability of the state, which makes it different from rewards that provide an immediate response.

-

• Model: It represents the system dynamics of the environment. The presence of a model can help the agent make better predictions and learn faster.

The agent interacts with the environment in a sequence of time steps. At each time step, it observes a representation of the environment, that is, its state

![]() $s_t \in \mathcal{S}$

, and then decides to take action

$s_t \in \mathcal{S}$

, and then decides to take action

![]() $a_t \in \mathcal{A}$

by following a policy. As a consequence of its action, the agent receives an immediate reward from the environment,

$a_t \in \mathcal{A}$

by following a policy. As a consequence of its action, the agent receives an immediate reward from the environment,

![]() $r_t$

, and transitions to the next state,

$r_t$

, and transitions to the next state,

![]() $s_{t+1}$

. The agent’s objective is to learn a policy to maximize its cumulative reward over a time horizon. This fixed horizon is also known as a trajectory (

$s_{t+1}$

. The agent’s objective is to learn a policy to maximize its cumulative reward over a time horizon. This fixed horizon is also known as a trajectory (

![]() $\tau$

) or an episode.

$\tau$

) or an episode.

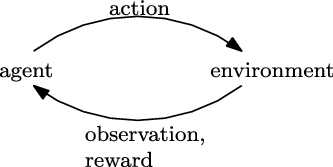

This interaction can be seen in Figure 1.

Figure 1. Interaction of an agent with the environment at a time step t. Image credits (Sutton & Barto, Reference Sutton and Barto2018)

2.1.1. Markov decision process

The elements of an Reinforcement Learning (RL) problem, as described in Section 2.1 can be formalized using the Markov Decision Process (MDP) framework (Puterman, Reference Puterman1994; Boutilier et al., Reference Boutilier, Dean and Hanks1999). Markov Decision Process (MDP) can efficiently model the interactions between the environment and the agent by defining a tuple

![]() $\langle \mathcal{S}, \mathcal{A}, \mathcal{P}, \mathcal{R}\rangle$

, where:

$\langle \mathcal{S}, \mathcal{A}, \mathcal{P}, \mathcal{R}\rangle$

, where:

-

•

$\mathcal{S}$

represents the finite set of states,

$\mathcal{S}$

represents the finite set of states,

$s_{t} \in \mathcal{S}$

with dimensionality N, that is,

$s_{t} \in \mathcal{S}$

with dimensionality N, that is,

$|\mathcal{S}|=N$

. Recall, a state is a distinctive characteristic of the environment or the problem being modeled.

$|\mathcal{S}|=N$

. Recall, a state is a distinctive characteristic of the environment or the problem being modeled. -

•

$\mathcal{A}$

represents the finite set of actions,

$\mathcal{A}$

represents the finite set of actions,

$a_{t} \in \mathcal{A}$

with dimensionality K, that is,

$a_{t} \in \mathcal{A}$

with dimensionality K, that is,

$|\mathcal{A}|=K$

. Each action

$|\mathcal{A}|=K$

. Each action

$a_t$

is used as a control to interact with the environment at time t. A set of available actions at a particular state s is denoted by A(s), where

$a_t$

is used as a control to interact with the environment at time t. A set of available actions at a particular state s is denoted by A(s), where

$A(s) \subseteq \mathcal{A}$

.

$A(s) \subseteq \mathcal{A}$

. -

•

$ \mathcal{P}\,{:}\, \mathcal{S} \times \mathcal{A} \times \mathcal{S} \rightarrow[0,1]$

is the stochastic transition function, where

$ \mathcal{P}\,{:}\, \mathcal{S} \times \mathcal{A} \times \mathcal{S} \rightarrow[0,1]$

is the stochastic transition function, where

$p\left(s^{\prime} \mid s, a\right)$

, also denoted as

$p\left(s^{\prime} \mid s, a\right)$

, also denoted as

$p_{s, s^{\prime}}^a$

is the probability of transitioning to state

$p_{s, s^{\prime}}^a$

is the probability of transitioning to state

$s^{\prime}$

by taking action a at state s. These transition probabilities can also be stationary, that is, they are independent of time t.

$s^{\prime}$

by taking action a at state s. These transition probabilities can also be stationary, that is, they are independent of time t.

For this transition function to represent a proper probability distribution the following properties must hold:

Since MDPs are controlled Markov chains (Markov, Reference Markov1906; Markov, Reference Markov2006), the distribution of state at time

![]() $t+1$

is independent of the past given the state at time t and the action performed by the agent.

$t+1$

is independent of the past given the state at time t and the action performed by the agent.

-

•

$\mathcal{R}\,{:}\, \mathcal{S} \times \mathcal{A} \times \mathcal{S} \rightarrow \mathbb{R}$

is the state reward function. It is a scalar value,

$\mathcal{R}\,{:}\, \mathcal{S} \times \mathcal{A} \times \mathcal{S} \rightarrow \mathbb{R}$

is the state reward function. It is a scalar value,

$r\!\left(s, a, s^{\prime}\right)$

, also denoted as

$r\!\left(s, a, s^{\prime}\right)$

, also denoted as

$r_{s, s^{\prime}}^a$

, which is awarded to the agent for transitioning from state s to

$r_{s, s^{\prime}}^a$

, which is awarded to the agent for transitioning from state s to

$s^\prime$

by performing an action a. (6)Alternatively, rewards can also be defined as

$s^\prime$

by performing an action a. (6)Alternatively, rewards can also be defined as \begin{equation} r\!\left(s, a, s^{\prime}\right)=\mathcal{R}_{s, s^{\prime}}^a=\mathbb{E}\!\left\{R_{t+1}\mid s_{t}=s, a_{t}=a,s_{t+1}=s^{\prime}\right\}. \end{equation}

\begin{equation} r\!\left(s, a, s^{\prime}\right)=\mathcal{R}_{s, s^{\prime}}^a=\mathbb{E}\!\left\{R_{t+1}\mid s_{t}=s, a_{t}=a,s_{t+1}=s^{\prime}\right\}. \end{equation}

$R\,{:}\, S \times A \rightarrow \mathbb{R}$

where a reward is awarded for taking an action in a state. (7)

$R\,{:}\, S \times A \rightarrow \mathbb{R}$

where a reward is awarded for taking an action in a state. (7) \begin{equation} r\!\left(s, a\right)=t_{s, a}=\mathbb{E}\!\left\{R_{t+1}\mid s_{t}=s, a_{t}=a\right\}. \end{equation}

\begin{equation} r\!\left(s, a\right)=t_{s, a}=\mathbb{E}\!\left\{R_{t+1}\mid s_{t}=s, a_{t}=a\right\}. \end{equation}

2.1.2. Policies and optimality criteria

In the previous section, we have defined a mathematical formalism for RL problems using an MDP. To formally determine the expected return,

![]() $G_t$

that is, objective that the policy seeks to optimize, two classes of MDPs are discussed:

$G_t$

that is, objective that the policy seeks to optimize, two classes of MDPs are discussed:

-

• Finite Horizon : Given a fixed time horizon of T, the objective is to find a sequential decision policy

$\pi$

, that maximizes the expected return until the end of the time horizon. If we represent the sequence of rewards received after time t, by

$\pi$

, that maximizes the expected return until the end of the time horizon. If we represent the sequence of rewards received after time t, by

$r_{t+1}, r_{t+2}, r_{t+3}, \ldots$

, then the cumulative reward is simply: (8)

$r_{t+1}, r_{t+2}, r_{t+3}, \ldots$

, then the cumulative reward is simply: (8) \begin{equation} G_{t} \doteq r_{t+1}+r_{t+2}+r_{t+3}+\cdots+r_{T} \end{equation}

\begin{equation} G_{t} \doteq r_{t+1}+r_{t+2}+r_{t+3}+\cdots+r_{T} \end{equation}

-

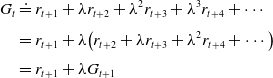

• Infinite Horizon—Discounted rewards : Given an endless time horizon

$T=\infty$

, the objective is to find a sequential decision policy,

$T=\infty$

, the objective is to find a sequential decision policy,

$\pi$

that maximizes the expected discounted return. (9)where

$\pi$

that maximizes the expected discounted return. (9)where \begin{equation} G_{t} \doteq r_{t+1}+\lambda r_{t+2}+\lambda^{2} r_{t+3}+\cdots=\sum_{l=0}^{\infty} \lambda^{l} r_{t+l+1} \end{equation}

\begin{equation} G_{t} \doteq r_{t+1}+\lambda r_{t+2}+\lambda^{2} r_{t+3}+\cdots=\sum_{l=0}^{\infty} \lambda^{l} r_{t+l+1} \end{equation}

$\lambda$

is a parameter,

$\lambda$

is a parameter,

$0 \leq \lambda<1$

, which discounts the future rewards, the rewards received after l time steps are worth

$0 \leq \lambda<1$

, which discounts the future rewards, the rewards received after l time steps are worth

$\lambda^{l-1}$

times their original value. The discounting of rewards also ensures that the expected return converges. The following recursive relation between the rewards of successive time steps is the key result used in several RL algorithms.(10)

$\lambda^{l-1}$

times their original value. The discounting of rewards also ensures that the expected return converges. The following recursive relation between the rewards of successive time steps is the key result used in several RL algorithms.(10) \begin{align} G_{t} & \doteq r_{t+1}+\lambda r_{t+2}+\lambda^{2} r_{t+3}+\lambda^{3} r_{t+4}+\cdots \nonumber \\[3pt] & =r_{t+1}+\lambda\!\left(r_{t+2}+\lambda r_{t+3}+\lambda^{2} r_{t+4}+\cdots\right) \nonumber\\[3pt] & =r_{t+1}+\lambda G_{t+1} \\[-30pt] \nonumber \end{align}

\begin{align} G_{t} & \doteq r_{t+1}+\lambda r_{t+2}+\lambda^{2} r_{t+3}+\lambda^{3} r_{t+4}+\cdots \nonumber \\[3pt] & =r_{t+1}+\lambda\!\left(r_{t+2}+\lambda r_{t+3}+\lambda^{2} r_{t+4}+\cdots\right) \nonumber\\[3pt] & =r_{t+1}+\lambda G_{t+1} \\[-30pt] \nonumber \end{align}

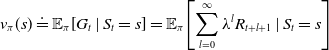

2.1.3. Value functions and Bellman equation

A stochastic policy

![]() $\pi$

, is a mapping from a state

$\pi$

, is a mapping from a state

![]() $s\in \mathcal{S}$

to action

$s\in \mathcal{S}$

to action

![]() $a\in\mathcal{A}(s)$

. It represents the probability of taking action a in state s,

$a\in\mathcal{A}(s)$

. It represents the probability of taking action a in state s,

![]() $\pi(a \mid s)$

. The state-value function, or simply value function, for a state s under a policy

$\pi(a \mid s)$

. The state-value function, or simply value function, for a state s under a policy

![]() $\pi$

is denoted as

$\pi$

is denoted as

![]() $v_{\pi}(s)$

. It is the expected reward that can be accumulated starting with state s and following the policy

$v_{\pi}(s)$

. It is the expected reward that can be accumulated starting with state s and following the policy

![]() $\pi$

thereafter. For an infinite horizon model as described in the previous section, the state-value function can be formalized as:

$\pi$

thereafter. For an infinite horizon model as described in the previous section, the state-value function can be formalized as:

\begin{equation} v_{\pi}(s) \doteq \mathbb{E}_{\pi}\!\left[G_{t} \mid S_{t}=s\right]=\mathbb{E}_{\pi}\!\left[\sum_{l=0}^{\infty} \lambda^{l} R_{t+l+1} \mid S_{t}=s\right]\end{equation}

\begin{equation} v_{\pi}(s) \doteq \mathbb{E}_{\pi}\!\left[G_{t} \mid S_{t}=s\right]=\mathbb{E}_{\pi}\!\left[\sum_{l=0}^{\infty} \lambda^{l} R_{t+l+1} \mid S_{t}=s\right]\end{equation}

Similarly, state-action-value function

![]() $Q\,{:}\, S \times A \rightarrow \mathbb{R}$

is defined as the expected reward that can be gathered starting with state s, taking an action a and following the policy

$Q\,{:}\, S \times A \rightarrow \mathbb{R}$

is defined as the expected reward that can be gathered starting with state s, taking an action a and following the policy

![]() $\pi$

thereafter.

$\pi$

thereafter.

\begin{equation} q_{\pi}(s, a) \doteq \mathbb{E}_{\pi}\!\left[G_{t} \mid S_{t}=s, A_{t}=a\right]=\mathbb{E}_{\pi}\!\left[\sum_{l=0}^{\infty} \lambda^{l} R_{t+l+1} \mid S_{t}=s, A_{t}=a\right]\end{equation}

\begin{equation} q_{\pi}(s, a) \doteq \mathbb{E}_{\pi}\!\left[G_{t} \mid S_{t}=s, A_{t}=a\right]=\mathbb{E}_{\pi}\!\left[\sum_{l=0}^{\infty} \lambda^{l} R_{t+l+1} \mid S_{t}=s, A_{t}=a\right]\end{equation}

A recursive formulation of Equation (11) which relates the value function of a state (

![]() $v_{\pi}(s)$

) to the value function of its successor states (

$v_{\pi}(s)$

) to the value function of its successor states (

![]() $v_{\pi}(s')$

) is a fundamental result utilized throughout RL. It is known as the Bellman equation (Bellman, Reference Bellman1958):

$v_{\pi}(s')$

) is a fundamental result utilized throughout RL. It is known as the Bellman equation (Bellman, Reference Bellman1958):

\begin{equation} \begin{aligned} v_{\pi}(s) & \doteq \mathbb{E}_{\pi}\!\left[G_{t} \mid S_{t}=s\right] \\[3pt] & =\mathbb{E}_{\pi}\!\left[r_{t+1}+\lambda G_{t+1} \mid S_{t}=s\right] \\[3pt] & =\sum_{a} \pi(a \mid s) \sum_{s^{\prime}} \sum_{r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda \mathbb{E}_{\pi}\!\left[G_{t+1} \mid S_{t+1}=s^{\prime}\right]\right] \\[3pt] & =\sum_{a} \pi(a \mid s) \sum_{s^{\prime}, r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda v_{\pi}\!\left(s^{\prime}\right)\right] \end{aligned}\end{equation}

\begin{equation} \begin{aligned} v_{\pi}(s) & \doteq \mathbb{E}_{\pi}\!\left[G_{t} \mid S_{t}=s\right] \\[3pt] & =\mathbb{E}_{\pi}\!\left[r_{t+1}+\lambda G_{t+1} \mid S_{t}=s\right] \\[3pt] & =\sum_{a} \pi(a \mid s) \sum_{s^{\prime}} \sum_{r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda \mathbb{E}_{\pi}\!\left[G_{t+1} \mid S_{t+1}=s^{\prime}\right]\right] \\[3pt] & =\sum_{a} \pi(a \mid s) \sum_{s^{\prime}, r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda v_{\pi}\!\left(s^{\prime}\right)\right] \end{aligned}\end{equation}

A policy

![]() $\pi$

is considered better than

$\pi$

is considered better than

![]() $\pi^{\prime}$

iff

$\pi^{\prime}$

iff

![]() $v_{\pi}(s) \geq v_{\pi^{\prime}}(s)$

for all states

$v_{\pi}(s) \geq v_{\pi^{\prime}}(s)$

for all states

![]() $s \in \mathcal{S}$

. The policy which is better than all is known as the optimal policy:

$s \in \mathcal{S}$

. The policy which is better than all is known as the optimal policy:

![]() $\pi_{*}$

, its value function, denoted by

$\pi_{*}$

, its value function, denoted by

![]() $v_{*}$

, can be formulated as:

$v_{*}$

, can be formulated as:

The optimal state-action-value function, denoted by

![]() $q_{*}$

, can be formulated as:

$q_{*}$

, can be formulated as:

The Bellman optimality equation declares that the value function of a state under the optimal policy is the expected return received from the ‘best (greedy)’ action in that state.

\begin{equation} \begin{aligned} v_{*}(s) & =\max _{a \in \mathcal{A}(s)} q_{\pi_{*}}(s, a) \\[3pt] & =\max _{a} \mathbb{E}_{\pi_{*}}\!\left[G_{t} \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\max _{a} \mathbb{E}_{\pi_{*}}\!\left[r_{t+1}+\lambda G_{t+1} \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\max _{a} \mathbb{E}\!\left[r_{t+1}+\lambda v_{*}\!\left(S_{t+1}\right) \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\max _{a} \sum_{s^{\prime}, r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda v_{*}\!\left(s^{\prime}\right)\right]. \end{aligned}\end{equation}

\begin{equation} \begin{aligned} v_{*}(s) & =\max _{a \in \mathcal{A}(s)} q_{\pi_{*}}(s, a) \\[3pt] & =\max _{a} \mathbb{E}_{\pi_{*}}\!\left[G_{t} \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\max _{a} \mathbb{E}_{\pi_{*}}\!\left[r_{t+1}+\lambda G_{t+1} \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\max _{a} \mathbb{E}\!\left[r_{t+1}+\lambda v_{*}\!\left(S_{t+1}\right) \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\max _{a} \sum_{s^{\prime}, r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda v_{*}\!\left(s^{\prime}\right)\right]. \end{aligned}\end{equation}

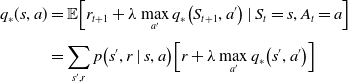

Similarly, the bellman optimality equation for

![]() $q_{*}$

, can be formulated as:

$q_{*}$

, can be formulated as:

\begin{equation} \begin{aligned} q_{*}(s, a) & =\mathbb{E}\!\left[r_{t+1}+\lambda \max _{a^{\prime}} q_{*}\!\left(S_{t+1}, a^{\prime}\right) \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\sum_{s^{\prime}, r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda \max _{a^{\prime}} q_{*}\!\left(s^{\prime}, a^{\prime}\right)\right] \end{aligned}\end{equation}

\begin{equation} \begin{aligned} q_{*}(s, a) & =\mathbb{E}\!\left[r_{t+1}+\lambda \max _{a^{\prime}} q_{*}\!\left(S_{t+1}, a^{\prime}\right) \mid S_{t}=s, A_{t}=a\right] \\[3pt] & =\sum_{s^{\prime}, r} p\!\left(s^{\prime}, r \mid s, a\right)\!\left[r+\lambda \max _{a^{\prime}} q_{*}\!\left(s^{\prime}, a^{\prime}\right)\right] \end{aligned}\end{equation}

2.2. RL algorithms

Model-based RL algorithms rely on the availability of the transition and reward function. The critical component of such techniques is ‘planning.’ The agent can plan and make predictions about its possible options and use it to improve the policy (Figure 2).

A major challenge of these techniques is the unavailability of the ground truth model, resulting in a bias where the agent performs poorly when tested in the real environment. In this work, we primarily focus on model-free RL.

Model-free methods don’t require a model and primarily rely on ‘learning’ the value functions directly.

Figure 2. Workflow for model-based learning techniques

2.2.1. Dynamic programming

When the model for the problem is known, dynamic programming (Bellman, Reference Bellman1954), a mathematical optimization technique, can be utilized to learn the optimal policy. Generalized Policy Iteration (GPI) is such an approach, and it uses an iterative two-step procedure to learn the optimal policy.

-

1. Policy evaluation: The first step evaluates the state-value function

$v_{\pi}$

using the recursive formulation in Equation (13).

$v_{\pi}$

using the recursive formulation in Equation (13). -

2. Policy improvement: The second step uses the value function to generate an improved policy

$\pi' \geq \pi$

using a greedy approach formulated in Equation (17).

$\pi' \geq \pi$

using a greedy approach formulated in Equation (17).

Since the improved policy

![]() $\pi'$

is greedy in actions to the current policy, that is,

$\pi'$

is greedy in actions to the current policy, that is,

![]() $\pi'(s) = \arg\max_{a \in \mathcal{A}} q_\pi(s, a)$

$\pi'(s) = \arg\max_{a \in \mathcal{A}} q_\pi(s, a)$

\begin{equation} \begin{aligned} q_\pi(s, \pi'(s)) & = q_\pi(s, \arg\max_{a \in \mathcal{A}} q_\pi(s, a)) \\[3pt] & = \max_{a \in \mathcal{A}} q_\pi(s, a) \geq q_\pi(s, \pi(s)) = v_\pi(s) \end{aligned}\end{equation}

\begin{equation} \begin{aligned} q_\pi(s, \pi'(s)) & = q_\pi(s, \arg\max_{a \in \mathcal{A}} q_\pi(s, a)) \\[3pt] & = \max_{a \in \mathcal{A}} q_\pi(s, a) \geq q_\pi(s, \pi(s)) = v_\pi(s) \end{aligned}\end{equation}

this iterative process is guaranteed to converge to the optimal value (Sutton & Barto, Reference Sutton and Barto2018).

2.2.2. Monte Carlo techniques

Monte Carlo techniques estimate the value functions without the need of system dynamics of the environment making it a model-free method. They rely on complete episodes to approximate the expected return,

![]() $G_{t}$

of a state. Recall, the value function,

$G_{t}$

of a state. Recall, the value function,

![]() $v_t(s) = \mathbb{E}[ G_t \vert S_t=s]$

. In an episode,

$v_t(s) = \mathbb{E}[ G_t \vert S_t=s]$

. In an episode,

![]() $s_{0}, a_{0}, r_{1}, s_{1}, a_{1}, r_{2}, s_{2}, a_{2}, r_{3}, \ldots$

, the occurrence of a state s is known as the visit to s. First-Visit Monte Carlo algorithm estimates the value function as the average of the returns (

$s_{0}, a_{0}, r_{1}, s_{1}, a_{1}, r_{2}, s_{2}, a_{2}, r_{3}, \ldots$

, the occurrence of a state s is known as the visit to s. First-Visit Monte Carlo algorithm estimates the value function as the average of the returns (

![]() $G_t$

) following the first visits to s whereas Every-Visit Monte Carlo algorithm estimates it as the average of the returns following every visit to s.

$G_t$

) following the first visits to s whereas Every-Visit Monte Carlo algorithm estimates it as the average of the returns following every visit to s.

The optimal policy is then evaluated using the same GPI explained previously. The schematic is shown in Figure 3.

Figure 3. GPI iterative process for optimal policy

2.2.3. Temporal-difference methods

Temporal Difference (TD) methods are model-free. Unlike MC methods, where the agents wait until the end of the episode for the final return to estimate the value functions, TD methods rely on an estimated final return,

![]() $r_{t+1} + \lambda v(s_{t+1})$

known as the TD target. The state-value function and state-action-value function are estimated at every step using the below formulation:

$r_{t+1} + \lambda v(s_{t+1})$

known as the TD target. The state-value function and state-action-value function are estimated at every step using the below formulation:

where

![]() $\alpha$

is a hyperparameter called the learning rate, which controls the rate at which the value gets updated at each step. This technique is called bootstrapping because the target value is updated using the current estimate of the return (

$\alpha$

is a hyperparameter called the learning rate, which controls the rate at which the value gets updated at each step. This technique is called bootstrapping because the target value is updated using the current estimate of the return (

![]() $r_{t+1} + \lambda v(s_{t+1})$

) and not the exact return,

$r_{t+1} + \lambda v(s_{t+1})$

) and not the exact return,

![]() $G_t$

.

$G_t$

.

2.2.4. Policy gradient

The methods discussed so far estimate the state-value, state-action-value functions respectively to learn what actions to take to maximize the overall return. Policy gradient consists of a family of methods that learn a parameterized policy without computing these value functions. A stochastic policy, parameterized with a set of parameters,

![]() $\theta \in \mathbb{R}^{d}$

, can be represented as,

$\theta \in \mathbb{R}^{d}$

, can be represented as,

![]() $\pi(a \mid s, \theta)=p\!\left(A_{t}=a \mid S_{t}=s, \boldsymbol{\theta}_{t}=\theta\right)$

, that is, the probability of taking an action at given state with a set of parameters

$\pi(a \mid s, \theta)=p\!\left(A_{t}=a \mid S_{t}=s, \boldsymbol{\theta}_{t}=\theta\right)$

, that is, the probability of taking an action at given state with a set of parameters

![]() $\theta$

at time t.

$\theta$

at time t.

The parameters are learned by computing the gradient of an objective function,

![]() $J(\theta)$

, a scalar quantity that measures the performance of the policy. The goal of these methods is to maximize the performance by updating the parameters of the policy using stochastic gradient ascent (Bottou, Reference Bottou1998) as

$J(\theta)$

, a scalar quantity that measures the performance of the policy. The goal of these methods is to maximize the performance by updating the parameters of the policy using stochastic gradient ascent (Bottou, Reference Bottou1998) as

where

![]() $\nabla_{\theta} J\!\left(\pi_{\theta}\right) \in \mathbb{R}^{d}$

is an estimate whose expectation is equivalent to the gradient of the policy’s performance, known as policy gradient.

$\nabla_{\theta} J\!\left(\pi_{\theta}\right) \in \mathbb{R}^{d}$

is an estimate whose expectation is equivalent to the gradient of the policy’s performance, known as policy gradient.

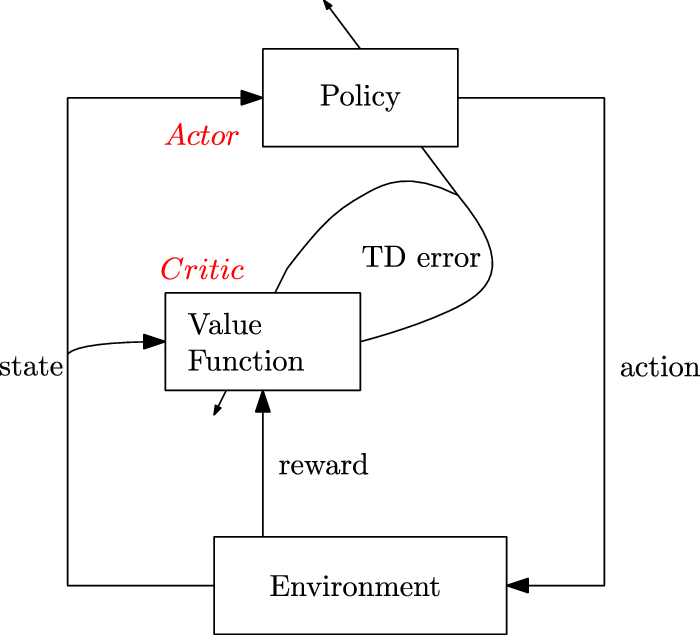

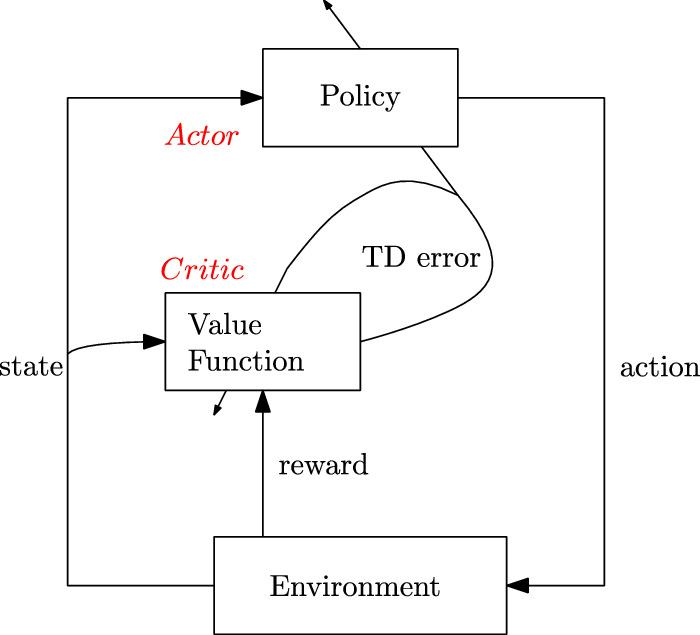

2.2.5. Actor-critic methods

They consist of algorithms that rely on estimating the parameters of the value functions (state-value or state-action-value function) concurrently with the policy. The two components include:

-

1. Actor: is responsible for the agent’s policy, that is, it is used to pick out which action to perform. The underlying objective is to update the parameters of the policy using Equation (21) guided by the estimates from the critic as the baseline.

-

2. Critic: is responsible for estimating the agent’s value functions. The objective is to update the parameters,

$\phi$

, of the state-value function,

$\phi$

, of the state-value function,

$v(s;\ \phi)$

, or the state-action-value function,

$v(s;\ \phi)$

, or the state-action-value function,

$q(s,a;\ \phi)$

by computing the TD error,

$q(s,a;\ \phi)$

by computing the TD error,

$\delta_t$

as (22)Figure 4 shows the working of an actor-critic method.

$\delta_t$

as (22)Figure 4 shows the working of an actor-critic method. \begin{equation} \delta_{t}=r_{t+1}+\lambda v\!\left(s_{t+1}\right)-v(s_{t}) \end{equation}

\begin{equation} \delta_{t}=r_{t+1}+\lambda v\!\left(s_{t+1}\right)-v(s_{t}) \end{equation}

Figure 4. Architecture for actor-critic methods (Sutton & Barto, Reference Sutton and Barto2018)

2.3. Artificial neural networks

Artificial Neural Networks (ANNs), often referred to as NNs, were first proposed several decades ago (Fitch, Reference Fitch1944). The formulation of these methods is heavily inspired by the human brain, a highly intricate organ that is made up of over 100 billion neurons (Herculano-Houzel, Reference Herculano-Houzel2009). Each neuron is a specialized nerve cell that transmits electrochemical signals to other neurons, gland cells, muscles, etc., which further perform specialized tasks. With such an interconnected network of neurons, our brain is able to carry out quite complex tasks. This mechanism is what ANNs are based on.

2.3.1. Mathematical model

A NN is a computational model that consists of an input layer, hidden layers (any number), and an output layer. Each layer consists of several artificial neurons, the fundamental units of the network, which define a function on the inputs. Any network that uses several hidden layers (usually more than 3) is referred to as a Deep Neural Network (DNN), and the paradigm of ML that deals with DNN is known as Deep Learning (DL) (Goodfellow et al., Reference Goodfellow, Bengio and Courville2016).

In a feed-forward network, the interconnections between the nodes, that is, the neurons, do not form a cycle. Network topologies where the connections between neurons include a directed graph over a temporal sequence are known as Recurrent Neural Networks (RNNs). In this work, we primarily focus on a feed-forward network which is trained by performing a forward pass, also referred as forward propagation and a subsequent backward pass, also known as backpropagation.

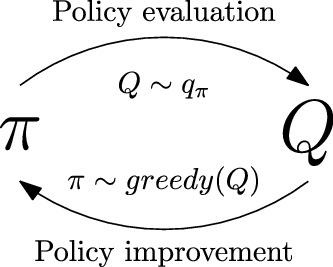

Forward Propagation: When training a NN, the forward pass is the calculation phase, sometimes referred to as the evaluation phase. The input signals are forward propagated through all the neurons in each network layer in a sequential manner. The activation of a single neuron is shown in Figure 5, where the input signals

![]() $x_1,x_2\ldots x_n$

are first multiplied by a set of weights,

$x_1,x_2\ldots x_n$

are first multiplied by a set of weights,

![]() $w_{i,j}$

, and then combined with a bias term,

$w_{i,j}$

, and then combined with a bias term,

![]() $b_j$

to obtain a pre-activation signal,

$b_j$

to obtain a pre-activation signal,

![]() $z_j$

. The bias is usually utilized to offset the pre-activation signal in case of an all-zero input; at times, this can alternatively be seen as an additional weight that is applied to an input, that is, a constant 1.

$z_j$

. The bias is usually utilized to offset the pre-activation signal in case of an all-zero input; at times, this can alternatively be seen as an additional weight that is applied to an input, that is, a constant 1.

Figure 5. Activation of a single neuron in the neural network

An activation function,

![]() $g_j$

is then applied to

$g_j$

is then applied to

![]() $z_j$

to obtain the final output signal of the neuron. The process is then repeated across all neurons of each layer one by one until the final output is received. Figure 6 shows the forward propagation in a NN with a single hidden layer of 3 neurons. The final output,

$z_j$

to obtain the final output signal of the neuron. The process is then repeated across all neurons of each layer one by one until the final output is received. Figure 6 shows the forward propagation in a NN with a single hidden layer of 3 neurons. The final output,

![]() $x_k$

, is the prediction made by the NN given the input. This completes the forward pass of the network.

$x_k$

, is the prediction made by the NN given the input. This completes the forward pass of the network.

Figure 6. Forward propagation in an artificial neural network with a single hidden layer

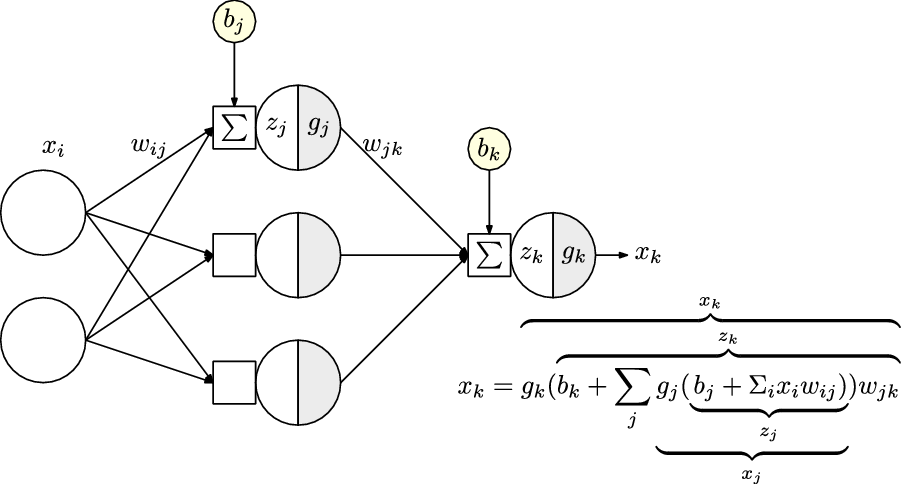

Backpropogation: When a neural network is created, the parameters,

![]() $\theta$

including the weights and biases, are often initialized randomly or else sampled from a distribution. The prediction of the NN from the forward pass is compared with the true output, computing an error, E, using a loss function. The resultant scalar value indicates how well the parameters of the NN perform the specific task.

$\theta$

including the weights and biases, are often initialized randomly or else sampled from a distribution. The prediction of the NN from the forward pass is compared with the true output, computing an error, E, using a loss function. The resultant scalar value indicates how well the parameters of the NN perform the specific task.

Training a NN involves adjusting the parameters of network

![]() $\theta$

, by first computing the derivative of the loss function and then using that information to update the parameters in the direction where the error is reduced. This is called gradient descent. Formally, the parameters are updated as:

$\theta$

, by first computing the derivative of the loss function and then using that information to update the parameters in the direction where the error is reduced. This is called gradient descent. Formally, the parameters are updated as:

where

![]() $\alpha$

is the learning rate. The term

$\alpha$

is the learning rate. The term

![]() $\frac{\partial E}{\partial \theta_{L}^{t}}$

represents the partial derivative of the error with respect to the parameters of a layer L. These gradients can be computed by applying chain rule (shown in Figure 7) and involves the gradients of subsequent neurons and layers. Repeated application of chain rule can be used to calculate these gradients at every layer, all the way from the final layer, by navigating through the network backward, hence the name backpropogation (Rumelhart et al., Reference Rumelhart, Hinton and Mcclelland1986). This is a particular case of Automatic Differentiation (Rall, Reference Rall1981).

$\frac{\partial E}{\partial \theta_{L}^{t}}$

represents the partial derivative of the error with respect to the parameters of a layer L. These gradients can be computed by applying chain rule (shown in Figure 7) and involves the gradients of subsequent neurons and layers. Repeated application of chain rule can be used to calculate these gradients at every layer, all the way from the final layer, by navigating through the network backward, hence the name backpropogation (Rumelhart et al., Reference Rumelhart, Hinton and Mcclelland1986). This is a particular case of Automatic Differentiation (Rall, Reference Rall1981).

Figure 7. Backpropagation of gradients by application of chain rule

A cost function is another name for a loss function when applied to either the entire training data or a smaller subset known as the batch or mini-batch. Some commonly used cost functions include mean squared error, mean absolute error, cross-entropy, hinge loss, Kullback-Leibler divergence (Kullback & Leibler, Reference Kullback and Leibler1951) etc.

2.4. Activation Function and Weight Initialization

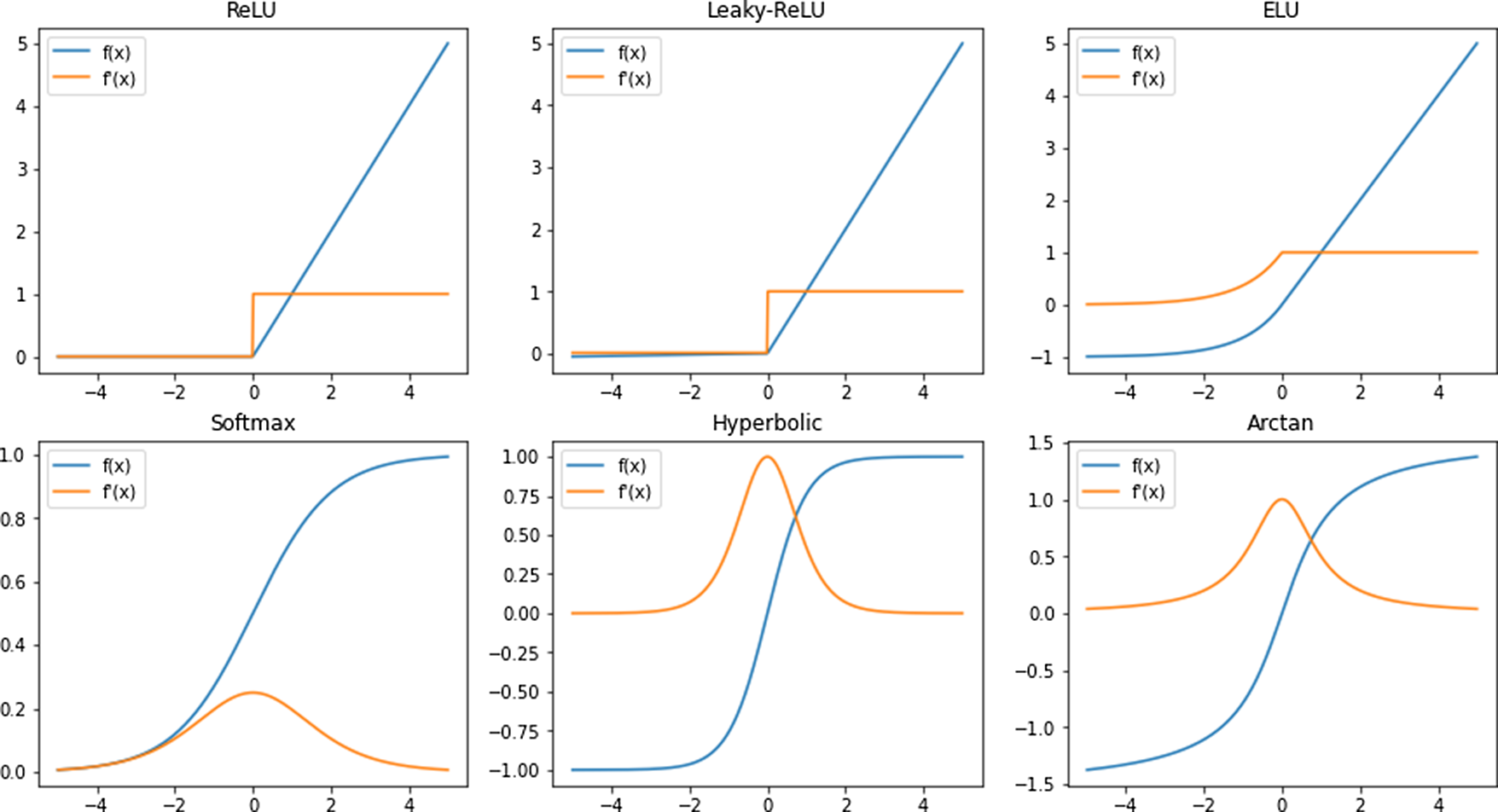

Neural Network (NN) with at least one hidden layer can serve as universal function approximators (Cybenko, Reference Cybenko1989). A NN without an activation function acts like a linear regressor, where the output is a linear combination of its inputs, which restricts its overall learning capacity. An activation function is often used to introduce nonlinearity in the network, which enables it to model much more evolved and complex inputs like images, videos (Ma et al., 2018), audio (Purwins et al., Reference Purwins2019), text (Radford et al., Reference Radford, Wu, Child, Luan, Amodei and Sutskever2019), etc. Some of the widely used nonlinear activation functions are shown in Figure 8.

Figure 8. Commonly used activation functions, f(x) and their derivatives,

![]() $f^{\,\prime}$

(x) for

$f^{\,\prime}$

(x) for

![]() $x \in [\!-5,5]$

$x \in [\!-5,5]$

The initialization of the network parameters, primarily the weights and biases of the different layers, plays a critical role when training a neural network. Situations where the activation output and the gradients explode (i.e., become significantly large) or vanish (i.e., become significantly small) due to these parameter values can significantly affect the convergence of the NN as highlighted in Sousa (Reference Sousa2016). Some of the widely used weight initialization strategies and their properties are summarized below:

-

• Normal: The values for the parameters are drawn from a normal distribution,

$\mathcal{N}\!\left(\mu, \sigma^{2}\right)$

, where

$\mathcal{N}\!\left(\mu, \sigma^{2}\right)$

, where

$\mu$

is the mean, and

$\mu$

is the mean, and

$\sigma^{2}$

is the variance. The probability distribution function for normal distribution is: (24)

$\sigma^{2}$

is the variance. The probability distribution function for normal distribution is: (24) \begin{equation} f(x)=\frac{1}{\sigma \sqrt{2 \pi}} e^{-\frac{1}{2}\!\left(\frac{x-\mu}{\sigma}\right)^{2}} \end{equation}

\begin{equation} f(x)=\frac{1}{\sigma \sqrt{2 \pi}} e^{-\frac{1}{2}\!\left(\frac{x-\mu}{\sigma}\right)^{2}} \end{equation}

-

• Uniform: The values for the parameters are drawn from a uniform distribution,

$\mathcal{U}(a, b)$

, where a is the lower bound and b is the upper bound for the distribution. The probability distribution function for continuous uniform distribution is: (25)

$\mathcal{U}(a, b)$

, where a is the lower bound and b is the upper bound for the distribution. The probability distribution function for continuous uniform distribution is: (25) \begin{equation} f(x)=\left\{\begin{array}{l@{\quad}l} \frac{1}{b-a} & \text { for } a \leq x \leq b, \\ \\[-8pt] 0 & \text { for } xb \end{array}\right. \end{equation}

\begin{equation} f(x)=\left\{\begin{array}{l@{\quad}l} \frac{1}{b-a} & \text { for } a \leq x \leq b, \\ \\[-8pt] 0 & \text { for } xb \end{array}\right. \end{equation}

-

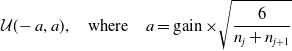

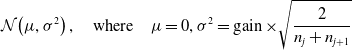

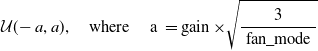

• Xavier: This is also known as Glorot initialization (Glorot & Bengio, Reference Glorot and Bengio2010) and overcomes the problem of vanishing, exploding gradients by adjusting the variance of the weights. Xavier uniform initialization samples the weight matrix, W, between the layer j and

$j+1$

from a uniform distribution. (26)The parameter gain is an optional scaling factor and, n is the number of neurons of the respective layer. Xavier normal initialization draws samples from a normal distribution.

$j+1$

from a uniform distribution. (26)The parameter gain is an optional scaling factor and, n is the number of neurons of the respective layer. Xavier normal initialization draws samples from a normal distribution. \begin{equation} \mathcal{U}(\!-a,a), \quad\text{where}\quad a=\operatorname{gain} \times \sqrt{\frac{6}{n_j+n_{j+1}}} \end{equation}

(27)

\begin{equation} \mathcal{U}(\!-a,a), \quad\text{where}\quad a=\operatorname{gain} \times \sqrt{\frac{6}{n_j+n_{j+1}}} \end{equation}

(27) \begin{equation} \mathcal{N}\!\left(\mu, \sigma^{2}\right), \quad\text{where}\quad \mu=0, \sigma^{2} = \operatorname{gain} \times \sqrt{\frac{2}{n_j+n_{j+1}}} \end{equation}

\begin{equation} \mathcal{N}\!\left(\mu, \sigma^{2}\right), \quad\text{where}\quad \mu=0, \sigma^{2} = \operatorname{gain} \times \sqrt{\frac{2}{n_j+n_{j+1}}} \end{equation}

-

• Kaiming: This is also known as He initialization (He et al., Reference He2015b), and is well suited for NN with asymmetric and nonlinear activation functions, for example, ReLU, Leaky-ReLU, ELU, etc. Kaiming uniform initialization samples the weight matrix, W, between the layer j and

$j+1$

from a uniform distribution. (28)The parameter gain is an optional scaling factor, and

$j+1$

from a uniform distribution. (28)The parameter gain is an optional scaling factor, and \begin{equation} \mathcal{U}(\!-a,a), \quad\text{where}\quad \text { a }=\operatorname{gain} \times \sqrt{\frac{3}{\text { fan_mode }}} \end{equation}

\begin{equation} \mathcal{U}(\!-a,a), \quad\text{where}\quad \text { a }=\operatorname{gain} \times \sqrt{\frac{3}{\text { fan_mode }}} \end{equation}

${\text { fan_mode }}$

is the number of neurons of the respective layer.

${\text { fan_mode }}$

is the number of neurons of the respective layer.

${\text { fan_mode }}=n_{j+1}$

preserves the magnitude of the variance for the weights during the backward pass whereas,

${\text { fan_mode }}=n_{j+1}$

preserves the magnitude of the variance for the weights during the backward pass whereas,

${\text { fan_mode }}=n_{j}$

preserves it in the forward pass. Kaiming normal initialization draws samples from a normal distribution. (29)

${\text { fan_mode }}=n_{j}$

preserves it in the forward pass. Kaiming normal initialization draws samples from a normal distribution. (29) \begin{equation} \begin{aligned} \mathcal{N}\!\left(\mu, \sigma^{2}\right), \quad\text{where}\quad \mu=0, \mathrm{std} & = \frac{\text { gain }}{\sqrt{\text { fan_mode }}} \end{aligned} \end{equation}

\begin{equation} \begin{aligned} \mathcal{N}\!\left(\mu, \sigma^{2}\right), \quad\text{where}\quad \mu=0, \mathrm{std} & = \frac{\text { gain }}{\sqrt{\text { fan_mode }}} \end{aligned} \end{equation}

2.5. Evolutionary computation

EC draws inspiration from nature and utilizes the same concepts to solve computational problems. This family of algorithms has its root spread out in computer science, artificial intelligence as well as evolutionary biology, Figure 9.

Figure 9. Evolutionary computation has its roots within computer science, artificial intelligence and evolutionary biology

EC belongs to a class of population-based optimization techniques where a set of solutions are generated and iteratively updated through genetic operators. A new generation of solutions is produced by stochastically eliminating ‘less fit’ solutions and introducing minor random variations in the population. At a high level, it is simply adapting to the changes within the environment by means of small random changes. The combined use of EC and DL has led to very auspicious results on several complex engineering tasks and drawn great academic interest (Salimans et al., Reference Salimans2017; Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018).

EC consists of several meta-heuristic optimization algorithms, some of which are explained in the following subsections.

2.5.1. Genetic algorithm

GAs, first developed in 1975 (Holland, Reference Holland1992a), is a widely used class of evolutionary algorithms (Mitchell, Reference Mitchell1996) that takes inspiration from Charles Darwin’s theory of evolution (Darwin, Reference Darwin1859). It is meta-heuristic-based optimization technique that follows the principle of ‘survival of the fittest’.

The key components of any GA (Mitchell, Reference Mitchell1996) are:

-

• Population: is the subset of all possible solutions to a problem. Every GA starts with an initialization of a set of solutions that become the first population. Each solution, individual from the population, is known as a chromosome which consists of several genes.

-

• Genotype: is the representation of the individuals of a population in the computation space. This is done to ensure efficient processing and manipulation by the computing system.

-

• Phenotype: is the actual representation of the population in the space of solutions.

-

• Encoding and Decoding: For more straightforward problems, the genotype and the phenotype may be the same. However, complex problems rely on a translation mechanism to encode a phenotype to a genotype and a decoder for the reverse task.

-

• Fitness function: is a tool to measure the performance of an individual on a specific problem.

-

• Genetic operators: are tools utilized by the algorithm to produce a new set of solutions from the existing ones.

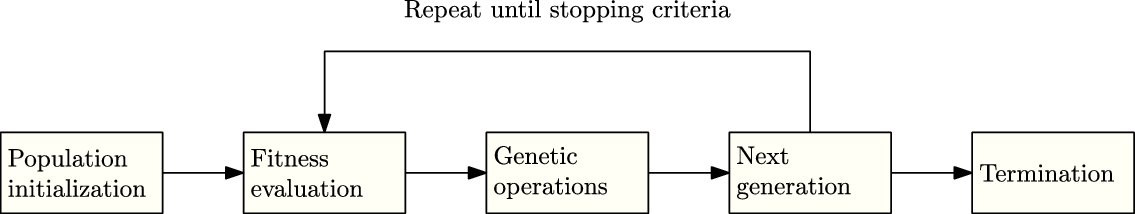

The algorithm starts by initializing a set of solutions, the population, which is also known as a generation. At each iteration, the fitness function is used to evaluate the performance of the entire population. The solutions with higher fitness (Baluja & Caruana, Reference Baluja and Caruana1995) are stochastically selected as the parents of the current generation. Genetic operators are applied to the parents to generate the next generation. This process is repeated until the required level of fitness is achieved. The complete workflow of a simple GA is shown in Figure 10.

Figure 10. Workflow of a simple genetic algorithm

The genetic operators are fundamental components of this algorithm that guides it toward a solution. The widely used genetic operators are summarized in the following subsections.

Selection Operators: The selection operator uses the fitness values as a measure of performance and picks a subset of the population as the current generation’s parents. A common strategy is to assign a probability value proportional to the fitness scores, with the goal that more fit individuals have a higher chance of being selected. Some other strategies include random selection, tournament selection, and roulette wheel selection, proportionate selection, steady-state selection, etc. (Haupt & Haupt, Reference Haupt and Haupt2003). The best performing individual from the current generation is selected and carried to the next generation without any alterations. This strategy is known as elitism (Baluja & Caruana, Reference Baluja and Caruana1995) and ensures that performance does not decrease over iterations.

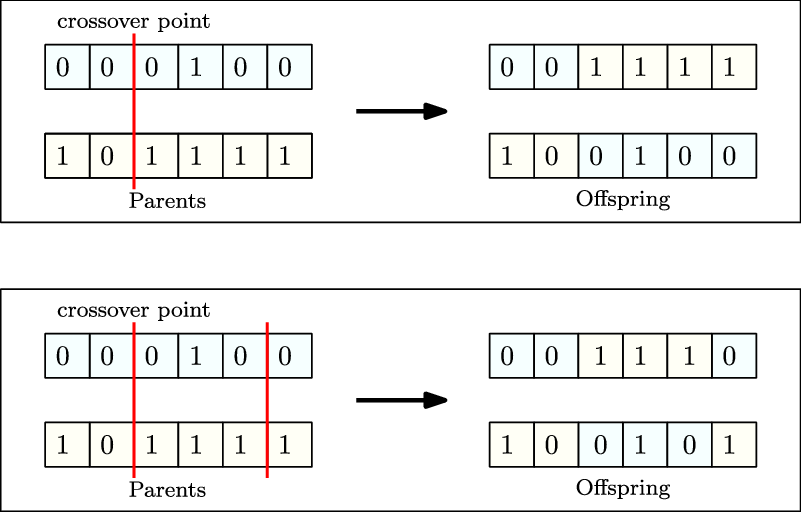

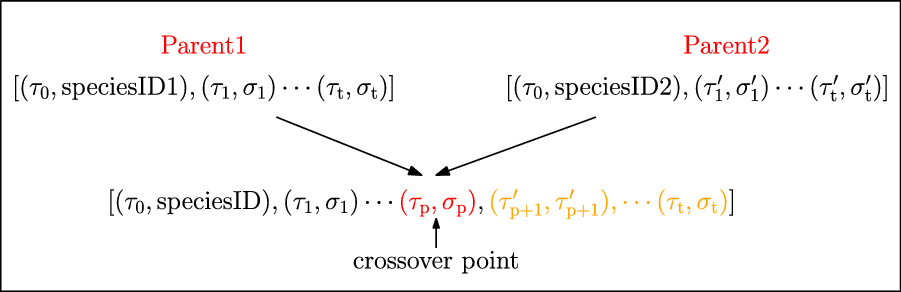

Crossover operator: This operator picks a pair from the solutions shortlisted by the selection operator. The selected pair then mates and generates new solutions, which are known as the offspring. Each offspring is produced stochastically and contains genetic information, that is, features from either parent which is analogous to the crossover of genes in biology.

Single-point crossover is a commonly used crossover operator. A random crossover point is picked on the encoding of both parents. The offspring are then produced by recombination of the left partition of one parent with the right split of the other and vice versa. The same strategy can be extended to more than one crossover point. Figure 11 shows single-point crossover and two-point crossover between two binary encoded parents. In situations, where the encoding is an ordered list (Larranaga et al., Reference Larranaga1999), the solutions generated with the methods mentioned above, may be invalid. To overcome such issues, specialized operators like order crossover (Larranaga et al., Reference Larranaga1996), cycle crossover (CX) (Larranaga et al., Reference Larranaga1999) and partially mapped crossover have been published.

Figure 11. Single-point crossover (top) and two-point crossover (bottom) between two parents to generate offsprings for the next generation

Mutation operator: The mutation operator makes small arbitrary changes to an individual or its encoded representation which promotes the algorithm to explore the search space and find new solutions. As a result, the diversity within the population is improved during successive generations, and the algorithm can avoid getting stuck at local minima. Some commonly used mutation operators include Gaussian operators, uniform operators, bit flip operators (binary encoding), and shrink operators (Da Ronco & Benini, Reference Da Ronco, Benini and Kim2014). These operators are applied with a relatively small probability known as the mutation rate and have a few hyperparameters that can be tuned to specific problems.

2.5.2. Evolution strategies

Evolution Strategy (ES) is a black-box optimization technique that draws inspiration from the theory of natural selection, a process in which individuals from a population adapt to the changes of the environment.

Consider a 2D function,

![]() $f\,{:}\, \mathbb{R}^{2} \rightarrow \mathbb{R}$

, for which the gradient,

$f\,{:}\, \mathbb{R}^{2} \rightarrow \mathbb{R}$

, for which the gradient,

![]() $\nabla f\,{:}\, \mathbb{R}^{n} \rightarrow \mathbb{R}^{n}$

, can not be computed directly, and we wish to obtain the parameters

$\nabla f\,{:}\, \mathbb{R}^{n} \rightarrow \mathbb{R}^{n}$

, can not be computed directly, and we wish to obtain the parameters

![]() $x^*$

and

$x^*$

and

![]() $y^*$

, such that

$y^*$

, such that

![]() $f(x^*,y^*)$

is the global maximum. The ES algorithm starts with a set of solutions for the problem, which are sampled from a parameterized distribution. An objective function then evaluates each solution and returns a single value as a fitness metric which is then utilized to update the parameters of the distribution.

$f(x^*,y^*)$

is the global maximum. The ES algorithm starts with a set of solutions for the problem, which are sampled from a parameterized distribution. An objective function then evaluates each solution and returns a single value as a fitness metric which is then utilized to update the parameters of the distribution.

Gaussian Evolution Strategy: One of the simplest techniques is to sample from a normal distribution,

![]() $\mathcal{N}\!\left(\mu, \sigma^{2}\right)$

, where

$\mathcal{N}\!\left(\mu, \sigma^{2}\right)$

, where

![]() $\mu$

is the mean and

$\mu$

is the mean and

![]() $\sigma^2$

is the variance. For the function defined above, one could start with

$\sigma^2$

is the variance. For the function defined above, one could start with

![]() $\mu=(0,0)$

and some constant,

$\mu=(0,0)$

and some constant,

![]() $\sigma=(\sigma_x,\sigma_y)$

. After one generation, the mean can be updated to the best performing solution from the population. Such a naive approach is only applicable to simple problems and also prone to getting stuck at local minima since it is greedy with respect to the best solution at each step of the training process.

$\sigma=(\sigma_x,\sigma_y)$

. After one generation, the mean can be updated to the best performing solution from the population. Such a naive approach is only applicable to simple problems and also prone to getting stuck at local minima since it is greedy with respect to the best solution at each step of the training process.

Covariance Matrix Adaptation Evolution Strategy: The simple Gaussian strategy explained above assumes a constant variance. In some applications, it is worthwhile to explore the search space by having a larger variance initially and fine-tuning it later on when we are close to a good solution. Covariance-Matrix Adaptation Evolution Strategy (CMA-ES) samples the solutions from a multivariate Gaussian distribution. It then adapts both the mean as well as the covariance matrix from the fitness metrics of the solutions. This algorithm is widely applicable to non-convex and nonlinear optimization problems (Hansen & Auger, Reference Hansen and Auger2011), especially in the continuous domain. There is no need to tune the parameters for a specific application since optimizing the parameters is incorporated within the design of this algorithm.

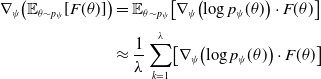

Natural Evolution Strategy: Simple Gaussian ES and CMA-ES both have the identical drawback of utilizing only the best individuals from the population and discarding all the others. At times, it might be beneficial to incorporate the information from low-scoring individuals as well, since they contain the vital information of what not to do. Natural Evolution Strategy (NES) (Wierstra et al., Reference Wierstra2008) utilize the information from the entire population to estimate the gradients, which are utilized to update the distribution from which the solutions were sampled. For an objective function, F with parameters

![]() $\theta$

which are sampled from a parameterized distribution

$\theta$

which are sampled from a parameterized distribution

![]() $p_\psi(\theta)$

. The algorithm’s goal is to optimize the average objective

$p_\psi(\theta)$

. The algorithm’s goal is to optimize the average objective

![]() $\mathbb{E}_{\theta \sim p_{\psi}}[F(\theta)]$

. The parameters of the distribution are iteratively updated using stochastic gradient ascent. The gradient of the objective is computed as follows:

$\mathbb{E}_{\theta \sim p_{\psi}}[F(\theta)]$

. The parameters of the distribution are iteratively updated using stochastic gradient ascent. The gradient of the objective is computed as follows:

\begin{align} \nabla_{\psi}\!\left(\mathbb{E}_{\theta \sim p_{\psi}}[F(\theta)]\right) & =\nabla_{\psi}\!\left(\int_{\theta} p_{\psi}(\theta) \cdot F(\theta) \cdot d \theta\right) \nonumber\\ & =\int_{\theta} \nabla_{\psi}\!\left(p_{\psi}(\theta)\right) \cdot F(\theta) \cdot d \theta \nonumber\\ &=\int_{\theta} p_{\psi}(\theta) \cdot \nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta) \cdot d \theta \nonumber\\ & =\mathbb{E}_{\theta\sim p_{\psi}}\!\left[\nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta)\right] \end{align}

\begin{align} \nabla_{\psi}\!\left(\mathbb{E}_{\theta \sim p_{\psi}}[F(\theta)]\right) & =\nabla_{\psi}\!\left(\int_{\theta} p_{\psi}(\theta) \cdot F(\theta) \cdot d \theta\right) \nonumber\\ & =\int_{\theta} \nabla_{\psi}\!\left(p_{\psi}(\theta)\right) \cdot F(\theta) \cdot d \theta \nonumber\\ &=\int_{\theta} p_{\psi}(\theta) \cdot \nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta) \cdot d \theta \nonumber\\ & =\mathbb{E}_{\theta\sim p_{\psi}}\!\left[\nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta)\right] \end{align}

Equation: 30 uses the same log-likelihood technique as the popular reinforce algorithm (Sutton & Barto, Reference Sutton and Barto2018). The expectation can be computed using Monte Carlo approximations by sampling points as shown in Equation (31).

\begin{equation}\begin{aligned}\nabla_{\psi}\!\left(\mathbb{E}_{\theta \sim p_{\psi}}[F(\theta)]\right) &=\mathbb{E}_{\theta\sim p_{\psi}}\!\left[\nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta)\right] \\[3pt] & \approx \frac{1}{\lambda} \sum_{k=1}^{\lambda} \!\left[\nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta)\right]\end{aligned}\end{equation}

\begin{equation}\begin{aligned}\nabla_{\psi}\!\left(\mathbb{E}_{\theta \sim p_{\psi}}[F(\theta)]\right) &=\mathbb{E}_{\theta\sim p_{\psi}}\!\left[\nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta)\right] \\[3pt] & \approx \frac{1}{\lambda} \sum_{k=1}^{\lambda} \!\left[\nabla_{\psi}\!\left(\log p_{\psi}(\theta)\right) \cdot F(\theta)\right]\end{aligned}\end{equation}

Evolution Strategy for RL: Researchers in Salimans et al. (Reference Salimans2017) utilized Natural Evolution Strategy to find the optimal parameters of a neural network for RL problems. The parameters represent the weights and biases, and the objective is the stochastic return from the environment. The parameterized distribution,

![]() $p_\psi(\theta)$

is assumed to be multivariate gaussian with mean

$p_\psi(\theta)$

is assumed to be multivariate gaussian with mean

![]() $\psi$

and a fixed noise of

$\psi$

and a fixed noise of

![]() $\sigma^2I$

.

$\sigma^2I$

.

This parametrization allows to rewrite the average objective as

![]() $\mathbb{E}_{\theta \sim p_{\psi}} F(\theta)=\mathbb{E}_{\epsilon \sim N(0, I)} F(\theta+\sigma \epsilon)$

. Given the revised definition of the objective function, the authors derive (Salimans et al., Reference Salimans2017) the gradient of the objective function in terms of

$\mathbb{E}_{\theta \sim p_{\psi}} F(\theta)=\mathbb{E}_{\epsilon \sim N(0, I)} F(\theta+\sigma \epsilon)$

. Given the revised definition of the objective function, the authors derive (Salimans et al., Reference Salimans2017) the gradient of the objective function in terms of

![]() $\theta$

as

$\theta$

as

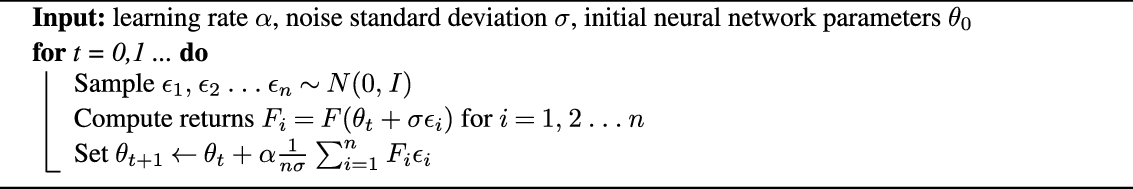

The gradients are approximated by generating samples of noise

![]() $\epsilon$

during training and the parameters are then optimized using stochastic gradient ascent as shown in Algorithm 1.

$\epsilon$

during training and the parameters are then optimized using stochastic gradient ascent as shown in Algorithm 1.

Algorithm 1: Evolution strategy for RL by OpenAI (Salimans et al., Reference Salimans2017)

2.5.3. Neuroevolution

Neuroevolution is a form of Artificial Intelligence (AI) that combines EC with NNs. The objective is to either learn the topology of the NN or its parameters, that is, weights and biases by utilizing algorithms discussed in Sections 2.5.1 and 2.5.2.

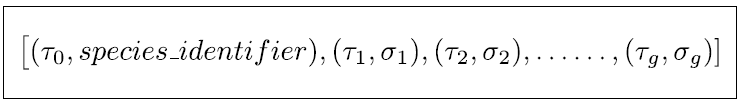

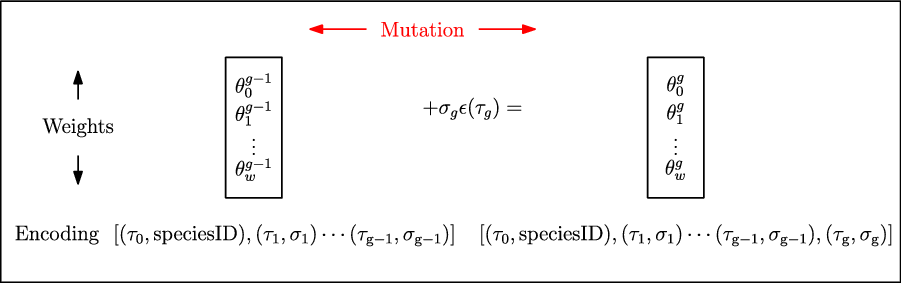

An individual within the population is a NN or its parameters—weights and biases. The trivial method of storing a NN, as a complex data structure, in the parameter space scales poorly in memory, especially with the increasing population size, and makes the application of genetic operators a computationally expensive task. Hence, the need for simplified encoding of the NN.

Direct encoding: In the notable work, ’Evolving neural networks through augmenting topologies’, NEAT (Stanley & Miikkulainen, Reference Stanley and Miikkulainen2002), the authors propose a direct encoding wherein the connections between each neuron are explicitly stored with all the weights and biases, as shown in Figure 12. Such an encoding gives a very high degree of flexibility to the network topology but has a massive search space due to a fine granularity of the encoding. This approach is not well suited for DNN, which consists of several hidden layers, each with thousands of neurons.

Figure 12. A simplified version of direct encoding proposed in NEAT (Stanley & Miikkulainen, Reference Stanley and Miikkulainen2002). Mapping of genotype, a neural network to a phenotype, its encoding

Indirect encoding: This type of encoding relies on a translation function or a mechanism that maps a neural network to its encoded representation. Unlike, direct encoding which is a quick way to encode or decode a neural network, this type of encoding has a processing overhead and limited granularity. Some implementations that employ an indirect encoding include Compositional Pattern Producing Networks (CPPNs) (Stanley, Reference Stanley2007), HyperNEAT (Stanley et al., Reference Stanley, D’Ambrosio and Gauci2009) and Deep Neuroevolution (Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018).

2.6. Related work

Computing the Q-value for complex problems with a large state-action space using traditional tabular RL algorithms is computationally infeasible. This led to the use of function approximations like neural networks for estimating the Q-values (Melo et al., Reference Melo, Meyn and Ribeiro2008), which is memory efficient and can also be applied to problems with continuous state and action spaces (Van Hasselt, Reference Van Hasselt2013).

The first significant breakthrough in deep reinforcement learning, the combined use of deep learning and reinforcement learning, was the introduction of DQN (Mnih et al., Reference Mnih and Kavukcuoglu2015) which incorporated two critical components in the training process, an experience replay buffer and a separate target network. The replay memory stores the agent’s experience, including the states, actions and rewards over a few episodes and the target network is a periodically updated copy of the main network which is used to decide what actions to perform. During training, samples are drawn from the replay memory to remove the correlations between the observations. The combined use of these two innovative techniques stabilized the learning of Q-values and achieved state-of-the-art performance on many Atari 2600 benchmarksFootnote 5.

Over the past few years, several extensions to DQN have been proposed. A known problem with Q-learning is its tendency to overestimate the targets since it relies on a single estimator for the Q-values. A technique called Double Q-learning (Hasselt, Reference Hasselt2010) tries to rectify this issue by utilizing a double estimator. An extension of DQN with double estimators, was implemented in Double DQN (van Hasselt et al., Reference van Hasselt, Guez and Silver2016). When sampling from the replay buffer as proposed in DQN, all samples, that is, transitions were given the same significance. Research on a prioritized experience replay (Schaul et al., Reference Schaul, Quan, Antonoglou and Silver2015) that offered a higher importance to more frequent transitions resulted in improved performance and was included in most subsequent research. A Dueling network architecture (Wang et al. Reference Wang, Schaul, Hessel, Hasselt, Lanctot and Freitas2016) with two estimators—one for Q-values and the other for state-dependent action advantage function, was proposed and shown to have better generalization properties. Deep Deterministic Policy Gradient (DDPG) (Lillicrap et al., Reference Lillicrap2015) combines the use of deterministic policy gradient and DQN and can be operated on a continuous action space making its application particularly useful in robotics (Dong & Zou, Reference Dong and Zou2020).

The slow rate of convergence and longer duration of training prompted the need for parallelizable algorithms. A distributed framework for RL, Gorilla (Nair et al., Reference Nair, Srinivasan, Blackwell, Alcicek, Fearon, De Maria, Panneershelvam, Suleyman, Beattie, Petersen and Legg2015) was proposed by researchers at Google which consists of several actors, all learning continuously in parallel and syncing their local gradients with a global parameter server after a few steps. This parallelized variant of DQN outperformed its standard counterpart on several benchmarks and reduced the training time.

Asynchronous Advantage Actor Critic (A3C) (Mnih et al., Reference Mnih, Badia, Balcan and Weinberger2016) is a parallelized policy gradient method that consists of several agents, independently interacting with copies of environments in parallel. The algorithm consists of one global network with shared parameters and uses asynchronous gradient descent for optimization. In Asynchronous Advantage Actor Critic (A3C), each agent communicates with the global network independently. The aggregated policy from different agents, when combined together may not be optimal. A2C (Mnih et al., Reference Mnih, Badia, Balcan and Weinberger2016) is deterministic and synchronous version of A3C, which resolves this inconsistency by using a coordinator that ensures a synchronous communication between agents and global network.

2.6.1. Evolution-based RL

The Q-learning-based methods try to solve the Bellman optimality equation whereas, policy gradient algorithms consist of parameterizing the policy itself to learn the probability of taking an action in a state. Regardless, they usually entail expensive computations, such as gradient calculations and back-propagation, which result in very lengthy (i.e., hours to even days) training in order to obtain desirable results, especially when solving complex problems with large state spaces (Lillicrap et al., Reference Lillicrap2015; Mnih et al., Reference Mnih and Kavukcuoglu2015; Mnih et al., Reference Mnih, Badia, Balcan and Weinberger2016).

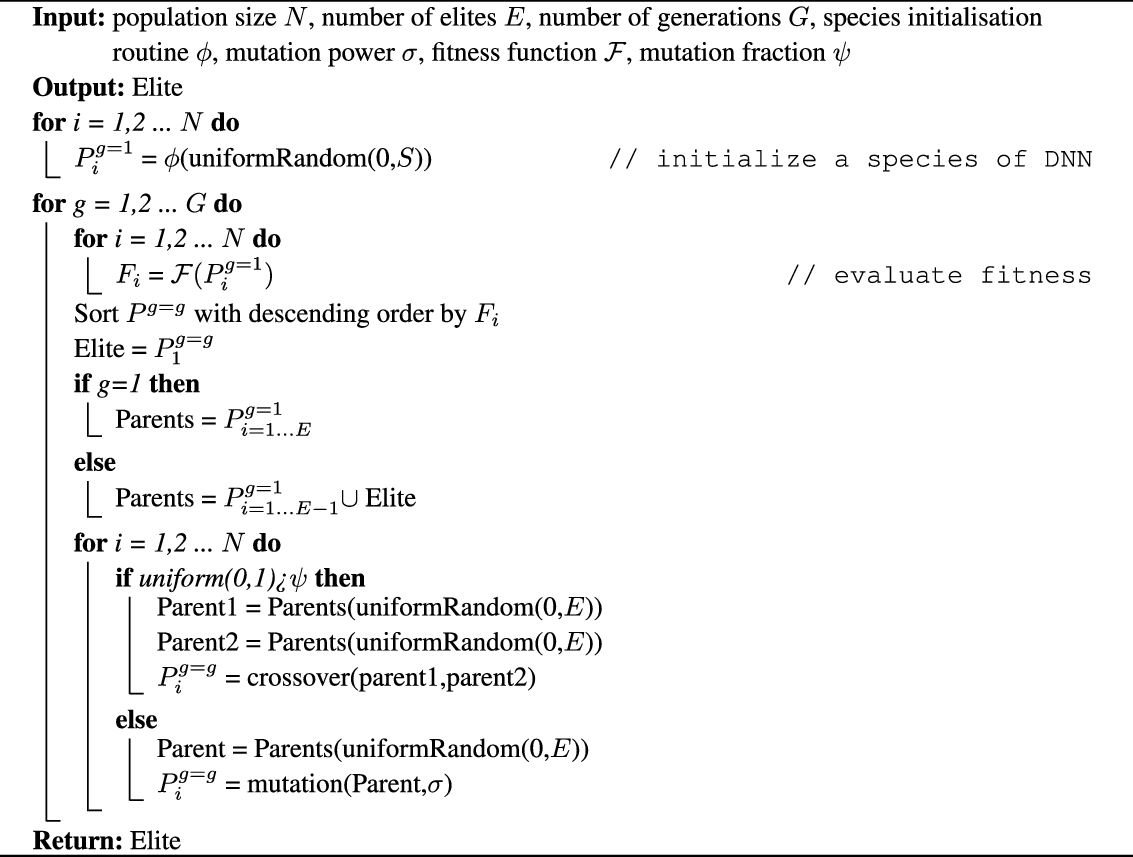

Recently we see a surge of a number of alternative approaches to solving RL problems, including the use of EC. These methods have been quite effective in discovering high-performing policies (Whiteson, Reference Whiteson2012). A notable contribution is the work by OpenAI on Evolution Strategy (ES) (Salimans et al., Reference Salimans2017) to train deep neural networks. ES does not calculate the gradients analytically, instead approximate the gradient of the reward function in the parameter space using minor random tweaks. This massively scalable algorithm, trained on several thousand workers was able to solve the 3D humanoid walking benchmark (Tassa et al., Reference Tassa, Erez and Todorov2012) within minutes and also outperformed several established deep reinforcement learning algorithms on many complex robotic control tasks and Atari 2600 benchmarks. Collaborative Evolutionary Reinforcement Learning (CERL) (Khadka et al., Reference Khadka, Majumdar, Chaudhuri and Salakhutdinov2019) is another scalable framework that was designed to simultaneously explore different regions of the search space and creating a portfolio of policies, the results on continuous control benchmarks highlighted improved sample efficiency of such approaches. Another breakthrough work by researchers at UberAI, is Deep Neuroevolution (Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018), a black-box optimization technique based on principles of evolutionary computation. Deep Neuroevolution utilizes a simple GA to train a convolutional neural network that approximates the Q-function.

Results from these publications highlight improved performance and significant speedup due to distributed training (Salimans et al., Reference Salimans2017; Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018). Moreover, these gradient-free approaches can be applied to non-differentiable domains (Cho et al., Reference Cho2014; Xu et al., Reference Xu2016; Liu et al., Reference Liu2018) and for training models that require low precision arithmetic—binary neural networks on low precision hardware. In a subsequent paper (Conti et al., Reference Conti, Madhavan, Such, Lehman, Stanley and Clune2018), the same researchers incorporated quality diversity (QD) (Cully et al., Reference Cully2015; Pugh et al., Reference Pugh, Soros and Stanley2016) and novelty search (NS) (Lehman & Stanley, Reference Lehman and Stanley2008) to ES, and proposed three different algorithms, which improved performance and also directed the exploration. Their work also highlighted the algorithm’s robustness against a local minima.

Several population-based evolutionary algorithms have been used to train neural networks or optimize their architecture (Jaderberg et al., Reference Jaderberg2017) and the hyperparameters (Liu et al., Reference Liu2017), but a rather interesting approach was proposed in Genetic Policy Optimization (GPO) (Gangwani & Peng, Reference Gangwani and Peng2018) algorithm which utilized imitation learning as crossover and policy gradient techniques for mutations. The algorithm had superior performance and improved sample efficiency in comparison to known policy gradient methods. Evolutionary Reinforcement Learning (ERL) (Khadka & Tumer, Reference Khadka and Tumer2018) is another hybrid approach that uses a population-based optimization strategy guided by policy gradients for challenging control problems.

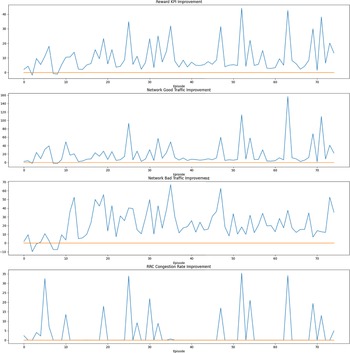

3. Species-based Genetic algorithm (Sp-GA)

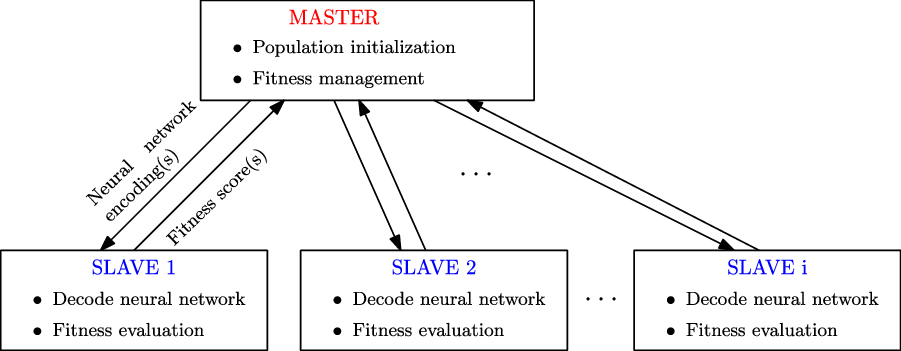

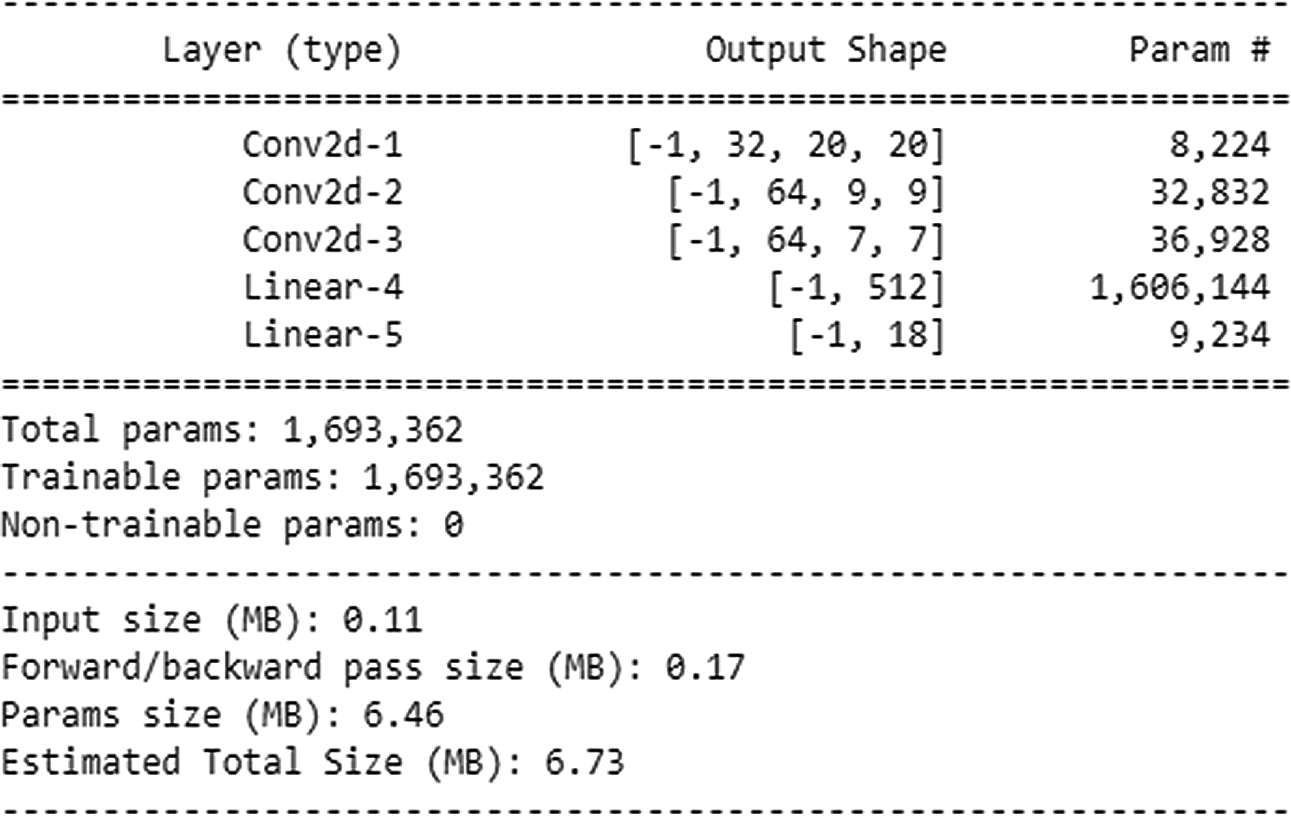

A typical GA (Section 2.5.1) consists of a population of individuals where each individual is a potential solution to the problem. In the context of RL, a solution may represent a policy, a neural network that estimates the Q-function (Mnih et al., Reference Mnih and Kavukcuoglu2015) or a neural network that predicts what action to take given the state as an input. In this work, we propose a GA wherein each individual is a neural network that estimates the Q-function for the specific RL problem. The algorithm initializes the population of such neural networks from a species-based initialization routine and iteratively evolves the population to search for the best solution. We call this algorithm Sp-GA.

3.1. Species initialization

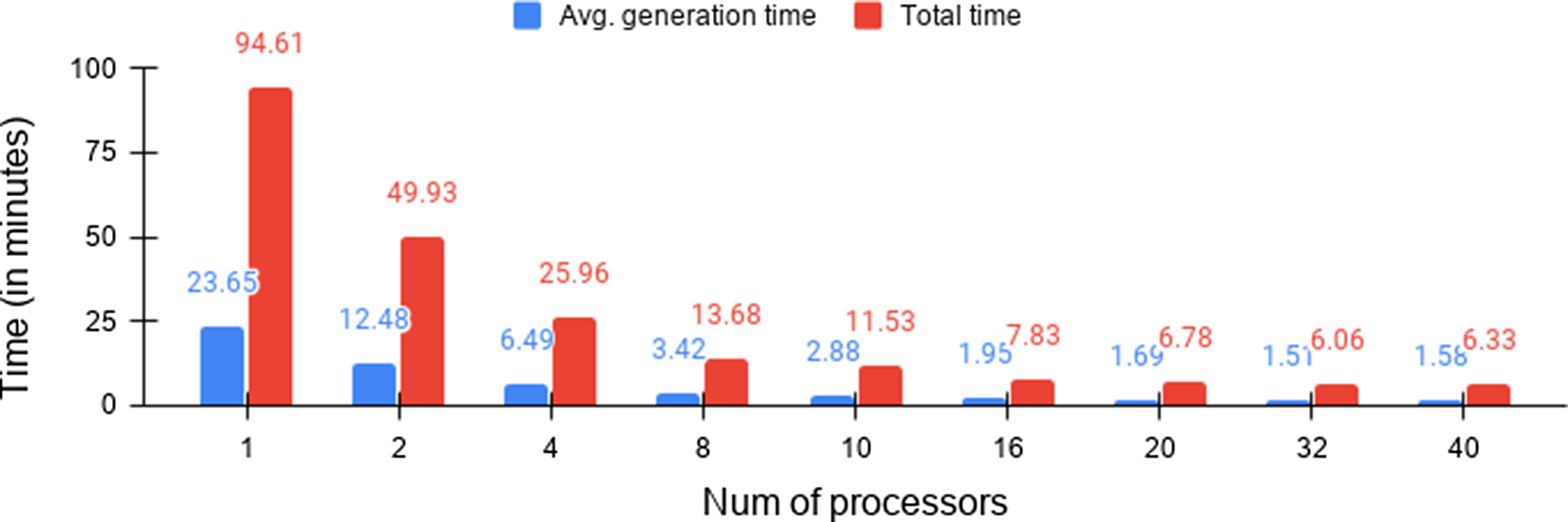

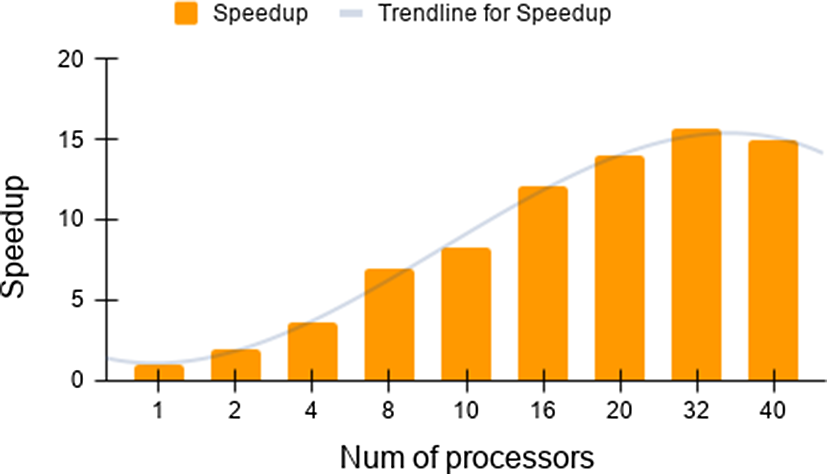

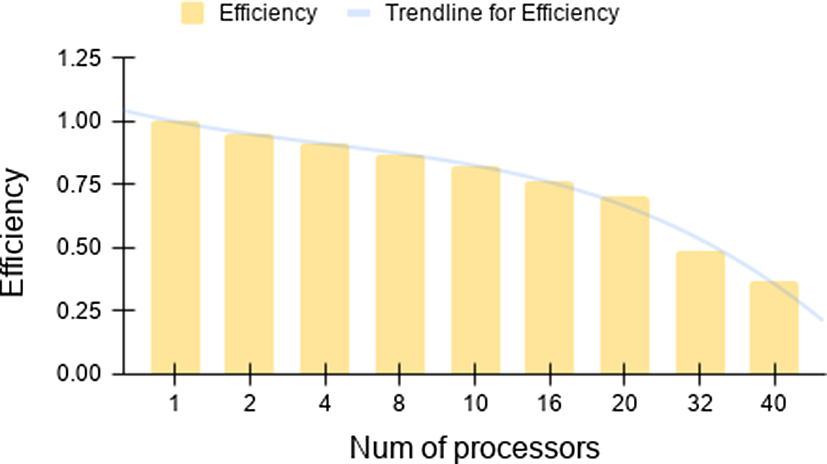

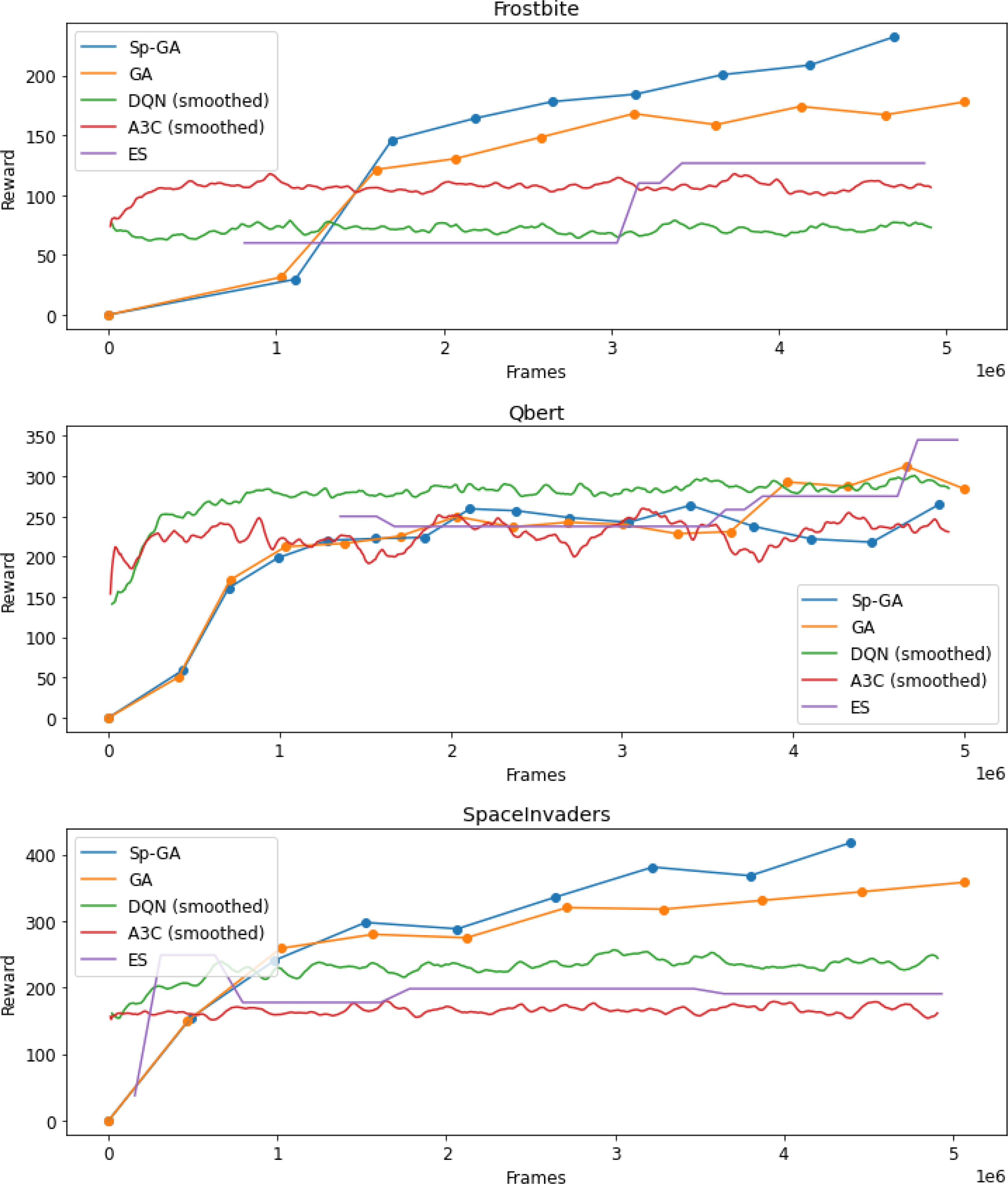

The notion of speciation was first investigated in NEAT (Stanley & Miikkulainen, Reference Stanley and Miikkulainen2002), which clustered neural networks with similar topologies into subclasses called species. The philosophy behind the approach is to give sufficient time for evolution to new solutions within a smaller subset before they compete with other species. As a result, innovation, novelty, and diversity are protected within the population.