1 Introduction

1.1 Corpora and Pragmatics

The role of corpora in pragmatic research has continually gained importance over time. The number of published handbooks (Aijmer & Rühlemann 2014), textbooks (Rühlemann 2019) and edited volumes (e.g. Romero-Trillo 2008; Jucker, Schreier & Hundt 2009; Suhr & Taavitsainen 2012; Taavitsainen, Jucker & Tuominen 2014), as well as the launch of a journal (Corpus Pragmatics, since 2017), clearly demonstrate that we have moved far beyond the point at which it has to be argued why corpus approaches are suitable and valuable for pragmatic studies. Corpus approaches have gained their place next to introspection, experimentation and non-corpus-based observational approaches (see Jucker, Schneider & Bublitz 2018). At the same time, many challenges remain for corpus approaches to pragmatic research questions. For instance, corpora still tend to be characterised by a lack of access to context, a privileging of quantitative results over qualitative interpretation and a focus on linguistic forms rather than their functions, all of which can hinder pragmatic studies. We believe that by taking a closer look at these challenges, we can identify new avenues along which to develop corpus pragmatics.

This introductory section presents a brief overview of the main challenges that corpus pragmatics faces. These challenges provide the starting point for the following sections, which deal with ways of overcoming some of them and highlight the many benefits corpora offer for pragmatics. In doing so, we focus on the wide variety of corpora that have become available to researchers, and we explore the opportunities that each type of corpus provides for pragmatic studies and the research avenues that can be pursued. We adopt a broad understanding of corpora, which includes not only prototypical large and publicly released corpora, but also small, purpose-built, ad hoc collections of data. Likewise, we discuss a wide range of approaches. What they all have in common is that they rely on digital data compilations and that they aim to identify, systematically search for and analyse linguistic patterns and their pragmatic functions with the help of computers. We place special emphasis on function-to-form approaches to corpus pragmatics. This is not to imply that form-to-function approaches are not suitable or relevant for pragmatics; they certainly are, as has been amply demonstrated. Our focus on function-to-form approaches is due to the unique challenge that they pose, given that they do not start with form-based search patterns (see Section 1.4). Since all authors of this Element work in the field of English linguistics, we focus our discussion on English language corpora. However, the number and variety of corpora are large and continuously growing for many other languages as well, and the methods we discuss can equally be applied to corpora of other languages. Due to space restrictions, we cannot present an extensive overview of the research field, but we refer to relevant resources where interested readers can find more detailed information and overviews of the field.

1.2 Context

Pragmatic meaning is influenced by all levels of context (see Garcés-Conejos Blitvich & Sifianou 2019). On the micro level, the immediate co-text of an expression influences its interpretation. On the meso level, pragmatic meaning depends on text types, genres and thematic domains. And on the macro level, the social, cultural and historical context affects pragmatic meaning as well. As a consequence, access to contextual information is often crucial for pragmatic studies. This reliance on access to context presents what is perhaps the biggest challenge for corpus pragmatics overall. While there are some corpora that provide excellent support for accessing contextual information, many corpora – especially those that were created for studying grammar – do not sufficiently satisfy this requirement.

At the level of micro-context, access to the co-text is restricted by many corpus interfaces. Search tools mostly present results in concordance views that show the search term with a minimal co-text of a few words to the left and right. While this word span is usually sufficient for disambiguating word meaning, it often does not provide enough information for a pragmatic analysis. Even corpus interfaces that support an expanded context view, such as the Corpus of Contemporary American English (COCA) and other corpora of the Davies corpus family, often restrict the expanded context to no more than a few lines (information on all corpora mentioned in this Element can be found in Appendix A). For some pragmatic interpretations, a few lines of context may be sufficient, but sometimes access to an entire text or conversation is crucial in order to understand how a specific utterance can be interpreted, or whether several interpretations are possible. Other popular corpus tools, like keyword analysis, collocation analysis and n-gram analysis, present results that are even more decontextualised than concordance lines.
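To make the notion of a minimal co-text concrete, the following Python sketch builds a simple keyword-in-context (KWIC) concordance over a tokenised text. It is an illustration under simplifying assumptions, not the interface of COCA or of any other corpus tool: the function names `tokenise` and `kwic`, the naive regular-expression tokeniser and the `window` parameter (the number of tokens shown on either side of the search term) are our own hypothetical choices, and the example exchange is invented.

```python
import re


def tokenise(text: str) -> list[str]:
    """Split text into word and punctuation tokens (a deliberately naive tokeniser)."""
    return re.findall(r"\w+|[^\w\s]", text)


def kwic(tokens: list[str], node: str, window: int = 5) -> list[tuple[str, str, str]]:
    """Return (left co-text, node, right co-text) for each case-insensitive match.

    `window` is the number of tokens shown on each side of the node; a small
    value mimics the narrow co-text of a typical concordance view.
    """
    lines = []
    for i, tok in enumerate(tokens):
        if tok.lower() == node.lower():
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            lines.append((" ".join(left), tok, " ".join(right)))
    return lines


# An invented two-speaker exchange, used only for illustration.
text = "A: I suppose you could open the window. B: Well, I could. A: Well, then do it, please."
for left, node, right in kwic(tokenise(text), "could", window=3):
    print(f"{left:>20} [{node}] {right}")
```

With `window=3`, the second concordance line shows only `Well , I` before and `. A :` after the node. Whether B's utterance works as agreement, reluctance or a refusal cannot be decided from this span; only a wider window, up to the whole exchange, supports such an interpretation.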

There are at least two reasons for the tendency to restrict access to the co-text in corpora. First, copyright restrictions often keep corpus compilers from sharing full texts with users. This is the case, for instance, with many corpora that are based on films and TV series, such as the Sydney Corpus of Television Dialogue (SydTV), as well as the SOAP Corpus, the Movie Corpus and the TV Corpus from the Davies corpus family (Bednarek et al. 2021: 2–3). Second, most corpora that are released to the research community were not built primarily for studying pragmatics. Instead, corpus compilers often focus on grammatical and lexical characteristics. The focus of analysis influences sampling procedures and the development of corpus tools. For instance, many balanced reference corpora include text samples of a limited size, rather than entire texts. For studies of grammar, this has advantages, since it limits the possibility of the corpus being biased due to overrepresentation of individuals. For studies of pragmatics, this is true as well, but at the same time the lack of access to the larger co-text creates problems for the analysis.

With respect to the meso context, i.e. information about the genre or text type and the thematic domain from which the corpus data was sampled, the information provided by corpora varies a great deal. Many corpora are built around one or more classifications that are relevant for studying variation across genres or text types. While some general information about this categorisation is often available, more fine-grained and detailed information may be more difficult to access. For instance, while many balanced reference corpora include a category of news texts, distinctions between hard news dealing with political topics and soft news dealing with human interest content are less common. However, this distinction can greatly affect the use of expressions, and such information can therefore be relevant for pragmatic studies. Meso-level classifications can become especially challenging in comparisons across different corpora, when the same label is applied but the data sets are compiled according to different principles. It is often not possible to create suitable classifications that can be generalised across different corpora and types of data, and classifications tend to vary between corpus compilers. The documentation of the sampling principles can provide valuable information on the kind of data that is included in the corpus, but sometimes this information is not easily available or not very detailed (see also Section 5).

When it comes to the macro context, the situation varies greatly across different types of corpora. Specialised corpora that include data from one specific domain are often published together with studies that present extensive discussions of the kind of data included in the corpus. In contrast, balanced reference corpora like the British National Corpus (BNC) and the COCA tend to assume that users are familiar with the variety that is represented. The challenge of lack of access to information about the macro context is perhaps greatest for historical corpora, where familiarity with the general socio-historical context can be taken for granted the least.

How strongly the research focus can affect the composition and metainformation of a corpus becomes apparent if we look at corpora that were compiled with pragmatic research questions in mind. Pragmatically annotated corpora, in which pragmatic information is added during the compilation process, are a case in point (see Section 2.6). A different example can be found in certain historical corpora which were compiled with a wide range of possible research aims in mind, including pragmatic questions. One such corpus is the three-part Corpus of Early English Medical Writing 1375–1800 (CEEM). The corpus was compiled as part of a long-running research project investigating changes in scientific thought-styles, hosted at the Research Unit for Variation, Contacts and Change in English at the University of Helsinki. The pragmatic nature of the research interest affected the corpus composition in a number of ways: the corpus includes long extracts of 10,000 words rather than short samples; it comes with its own software, which provides access to passages in the context of the entire extract; it includes extensive metainformation on each text and author; and it provides direct links to additional contextual information, such as hyperlinks to scanned manuscript pages on Early English Books Online (EEBO) (see Tyrkkö, Hickey & Marttila 2010). All of this makes the corpus very well suited for studies of pragmatic variables, while it can nevertheless be fruitfully used to investigate purely grammatical or lexicological research questions (e.g. Méndez-Naya & Pahta 2010).

1.3 Qualitative and Quantitative Analysis

Due to the various levels of context-dependency of pragmatic meaning, pragmatic analysis often relies quite strongly on interpretation and qualitative analysis. Researchers make judgements about the pragmatic functions of utterances by interpreting them in the context in which they are used, through what is sometimes referred to as horizontal reading (Rühlemann & Aijmer 2014: 3), and then classify them. This process is time-consuming and not easily reconciled with the most prototypical uses of standard corpus tools. Most corpora are developed with the aim of supporting quantitative analysis and so-called vertical reading of data. They offer tools such as keyword in context (KWIC) views, normalised frequencies, keywords, collocations and n-grams. All of these tools present decontextualised or only partially contextualised views of results that help establish broader patterns across the entire corpus. When these tools are used to carry out pragmatic analysis, researchers often need to find ways of combining them with more contextualised perspectives.

In this Element, we discuss different ways in which this tension between qualitative and quantitative approaches can be resolved. Section 4 focuses on corpus-assisted approaches that rely very heavily on qualitative analysis and, as a consequence, use small corpora, which nevertheless make it possible to quantify patterns in the data. In Sections 6 and 7, we focus on two areas in which we see a demand for new corpus methods that take pragmatic research questions into consideration. Section 6 deals with methods that help researchers apply qualitative methods to large corpora in meaningful ways, and Section 7 turns to the analysis of multimodal data, where there is a great need for new methods that support pragmatic analysis, especially when it comes to quantifying observations.

1.4 Form-to-Function and Function-to-Form Mapping

Most pragmatic research deals with the relationship between linguistic forms and their functions. This relationship can be studied in two different ways. In form-to-function approaches, researchers study the different pragmatic functions of a given form, whereas in function-to-form approaches the aim is to identify forms with which a given function can be expressed (Jacobs & Jucker 1995: 13). Corpus pragmatics is particularly well-suited for form-to-function approaches, since corpora make it possible for researchers to search for all surface forms within the data (O’Keeffe 2018: 587). As O’Keeffe et al. (2020: 47) note, corpus linguistics overall is form-to-function oriented: apart from special cases, such as pragmatically annotated corpora, corpus searches always start with forms. Thus, it is probably no surprise that much of the early research in corpus pragmatics adopted form-to-function approaches. The study of pragmatic markers (or discourse markers) proved especially productive. An influential example of such studies is Aijmer’s (2002) research monograph, which looked at a range of discourse particles, including now, oh and ah, and actually in the London-Lund Corpus. Other corpus-based studies on pragmatic markers have been carried out on a wide range of Present-day English (e.g. Aijmer 2008; Buysse 2012; Kirk 2015; Beeching 2016) and historical corpora (e.g. Culpeper & Kytö 2010: ch. 15; Lutzky 2012). Other examples of form-to-function approaches include the study of stance markers like I think (e.g. Aijmer 1997; Kärkkäinen 2003; Simon-Vandenbergen 2000) and the earlier form methinks (e.g. Palander-Collin 1999); the study of the pragmatic functions of basically (Butler 2008); the study of interjections (e.g. Norrick 2009) and the study of the planners uh and um (e.g. Tottie 2011, 2014, 2019; Tonetti Tübben & Landert 2022).

In contrast, function-to-form approaches are faced with the challenge that functions cannot be searched for automatically. This has not deterred researchers from investigating pragmatic functions in corpora, though. Speech acts in particular have been investigated with function-to-form approaches. An early example is Kohnen’s (2000) study of directive speech acts in the Lancaster–Oslo/Bergen Corpus and the London-Lund Corpus. The difficulty of overcoming the lack of one-to-one correspondence between form and function is discussed very prominently in this paper. In his conclusion, Kohnen (2000: 184) states: ‘It seems probable that the fundamental difficulty, the open relationship between form and function, cannot be solved … Perhaps a corpus-based study of speech acts will have to focus on the patterns representing the most typical and common manifestations of a speech act and will not seek to cover all the possible manifestations of that speech act.’ It would be overly optimistic to claim that these problems have been completely resolved in the meantime. However, research on speech acts and other function-to-form topics has made much progress (for an overview of types of approaches, see Jucker 2013). Approaches that have been developed include the search for illocutionary force indicating devices (IFIDs) (e.g. Deutschmann 2003; Jucker & Taavitsainen 2008), the search for known lexico-grammatical patterns (e.g. Adolphs 2008; Jucker et al. 2008) and the study of metacommunicative expressions (e.g. Jucker & Taavitsainen 2014; see also Haugh 2018). For research of stance expressions, the analysis of high-density passages that include clusters of known expressions has been shown to be an effective method, which may have potential for other research areas as well (Landert 2019).
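The general idea of locating high-density passages can be sketched in a few lines of Python. The sketch is a deliberate simplification and should not be read as the procedure used in Landert (2019): it assumes a hypothetical seed list of stance expressions (`STANCE_MARKERS`), splits a tokenised text into fixed-size, non-overlapping windows and ranks the windows by the number of listed expressions that begin in them. The function names and parameters are likewise our own. The top-ranked windows are candidates for close manual reading, not automatically identified instances of stance.

```python
# Hypothetical seed list of known stance expressions, stored as lowercase
# token sequences; a real study would derive such a list from prior research.
STANCE_MARKERS = [("i", "think"), ("probably",), ("of", "course"), ("clearly",)]


def marker_positions(tokens: list[str], markers: list[tuple[str, ...]]) -> list[int]:
    """Return the token indices at which any of the markers begins."""
    low = [t.lower() for t in tokens]
    positions = []
    for i in range(len(low)):
        if any(tuple(low[i:i + len(m)]) == m for m in markers):
            positions.append(i)
    return positions


def densest_windows(tokens: list[str], markers: list[tuple[str, ...]],
                    size: int = 50, top: int = 3) -> list[tuple[int, int]]:
    """Rank non-overlapping windows of `size` tokens by their number of marker hits.

    Returns up to `top` (window start index, hit count) pairs, densest first,
    skipping windows without any hits.
    """
    positions = marker_positions(tokens, markers)
    scored = []
    for start in range(0, len(tokens), size):
        count = sum(start <= p < start + size for p in positions)
        if count > 0:
            scored.append((start, count))
    # Sort by descending hit count; ties keep text order because sort is stable.
    scored.sort(key=lambda pair: -pair[1])
    return scored[:top]


tokens = "well I think it will probably rain but of course we went anyway".split()
print(densest_windows(tokens, STANCE_MARKERS, size=5))
```

For this invented sentence, the call returns `[(5, 2), (0, 1)]`: the window starting at token 5 contains two listed expressions (probably, of course) and is therefore ranked first. Fixed windows keep the sketch short; a sliding window would avoid splitting clusters at window boundaries, at the cost of overlapping results.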

Since automatic retrieval always takes place based on surface forms, even function-to-form approaches usually rely on the identification of forms in the corpus data. Exceptions to this can be found in pragmatically annotated corpora and in small-scale corpus-based approaches. In both cases, the corpus data is read and interpreted by a researcher, either as part of the compilation process (annotated corpora) or as the main focus of the analysis (small-scale corpus-based approaches). It remains an open question to what extent the identification of pragmatic functions will ever be possible without relying on predefined forms and manual evaluation.

1.5 Scalability

Scalability refers to the ease with which research methods can be applied to large sets of data. If a method is scalable, then processing a very large amount of data does not take considerably longer than processing a small amount of data. Generally speaking, quantitative methods scale much better than qualitative methods. For instance, if we are interested in comparing normalised frequencies across different sets of data, the size of the data only marginally affects the duration of the calculation, as long as the number of hits can be retrieved automatically. While the time needed to compute the number of hits increases with corpus size, the computation is still so fast that it takes up a very small proportion of the overall research time. In contrast, the time needed for the manual classification of pragmatic functions increases linearly with the size of the data. Classifying 10,000 instances takes roughly 100 times as long as classifying 100 instances. In other words, manual classification has very poor scalability.
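The contrast between the two kinds of effort can be illustrated with a small Python sketch. Computing a normalised frequency (here per million words, a common convention) is a single division whatever the size of the corpus, whereas the time needed for manual classification grows in proportion to the number of instances. The function names and the coding speed of one minute per instance are hypothetical figures chosen for illustration, not empirical estimates.

```python
def per_million(hits: int, corpus_size: int) -> float:
    """Normalised frequency: number of hits per million words of corpus text."""
    return hits * 1_000_000 / corpus_size


def manual_coding_hours(instances: int, seconds_per_instance: float) -> float:
    """Time for manual classification, assuming a constant (hypothetical) speed.

    The cost is linear: multiplying the number of instances by 100
    multiplies the time by 100.
    """
    return instances * seconds_per_instance / 3600


print(per_million(250, 5_000_000))        # 50.0 hits per million words
print(manual_coding_hours(100, 60))       # 100 instances at one minute each
print(manual_coding_hours(10_000, 60))    # 10,000 instances at the same speed
```

At the assumed one minute per instance, 100 instances take under two hours, whereas 10,000 instances take about 167 hours; the normalised frequency, by contrast, costs the same single division in both cases once the hits have been counted.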

Compared to the other challenges discussed in this section, scalability has received little attention so far. However, scalability is at the core of all of the previously discussed challenges: it is because context-dependent interpretation and the manual identification of pragmatic functions scale so poorly that corpora present challenges for pragmatic studies. This issue is becoming more pressing with the trend towards ever larger corpora. Larger corpora provide more data and, as a consequence, potentially more opportunities for insightful observations into pragmatic phenomena, but the large amount of work involved in qualitative analysis means that either only a small section of the data can be considered or the focus shifts even more towards quantitative evaluations. This does not have to be the case, though. While linguists have spent a great deal of effort on developing new quantitative methods for exploring corpus data, there has been far less exploration of the ways in which we can support the qualitative analysis of data in larger corpora. As we will discuss in Section 6, it is possible, for instance, to develop semi-automated methods which rely on automated procedures to retrieve particularly relevant instances of a phenomenon for further manual analysis. Such methods help make qualitative analysis more scalable and thus open up new research perspectives for working with large corpora, even when the phenomenon under investigation relies on manual analysis.

1.6 Corpus Pragmatics and its Advantages

Despite all these challenges, corpus pragmatics is a vibrant field of research that can yield meaningful insights, complementing findings on the same phenomena that were obtained with other methodologies. It addresses a wide variety of research questions and makes use of a large range of corpus resources, tools and approaches. In this Element, we focus on this variety and point out the areas in which we see the most potential for further developments. Throughout the Element, we highlight why it might be worth doing corpus pragmatics in the first place. Some of the advantages that corpus approaches offer can be summarised as follows:

– Pattern finding: Corpora present well-defined data sets that make it possible for researchers to identify recurring patterns.

– Systematicity: Corpora include samples that represent language use in a given variety or domain in a systematic way. This makes it possible to assess the extent to which corpus data is representative of the variety or domain overall.

– Generalisation: Observations of recurring patterns across different sets of data make it possible to generalise findings for the language use that is represented in the corpus, provided that the corpus is compiled in a way that such generalisations are legitimate (e.g. with respect to representative sampling).

– Reproducibility: To the extent that corpus data is accessible to other researchers, and provided that methodological explanations are presented with a sufficient amount of detail, results that are based on corpora can be verified by other researchers.

– Transparency: By making use of often well-established corpus linguistic methodology, corpus pragmatics can present findings in such a way that the methodological steps that lead to them are maximally transparent.

1.7 Outline of the Element

In this Element, we do not attempt to present a comprehensive overview of corpus pragmatics. There are already various handbooks and textbooks that present excellent overviews of how different types of pragmatic phenomena can be studied with corpus data and methods (see Section 1.1). Instead, we focus on the challenges that we introduced in this section and on possible ways of addressing and overcoming them. We also want to emphasise that the term corpus can be used to refer to vastly different types of data collections: from small, manually curated compilations of project-specific datasets to huge (semi-)automatically compiled collections of electronically available documents; from data formatted and annotated for a given research objective to corpora that are compiled to ensure maximum compatibility with existing and future resources; and from purely text-based corpora to corpora including audio and video files and visual resources. This Element is structured along these distinctions, emphasising that each of these types of corpora presents unique challenges and opportunities for corpus pragmatic research.

Section 2 gives an overview of different types of corpora and how their characteristics influence pragmatic research. Each type is illustrated with examples from corpora that have been used for pragmatic studies in the past. Appendices A and B complement this section by providing lists of all corpora (Appendix A) and all corpus tools (Appendix B) mentioned in the Element. Sections 3 and 4 discuss two different ways in which purpose-built corpora can be used. In Section 3, the focus lies on corpus-driven research on project-specific data. The section discusses the manifold benefits, as well as the challenges, of working with data compiled from online sources, including ethics, copyright issues and data documentation. While these issues of corpus composition are discussed in the context of corpus-driven research, many of them equally apply to corpus-based studies with self-compiled corpora. Section 4 turns to much smaller purpose-built corpora and the ways in which they can be used for corpus-assisted discourse analysis. The case study presented in the section illustrates the analytic steps needed to apply qualitative methods in such a way that quantifiable patterns can be identified in the data. Section 5 turns to the many practical challenges involved in combining different existing corpora within a single study. These challenges include, for instance, differences in data formats, annotation, metainformation and access to data, and are discussed under the keywords compatibility and comparability. While incompatibility of corpus formats can make it more difficult to work with different corpora side-by-side, the lack of comparability of data and results within or across corpora will negatively affect the reliability of results and interpretations. Section 6 deals with the issue of scalability and argues for the value of developing new scalable methods that make it possible to use large corpora even for research questions that rely on qualitative data analysis. In Section 7, we present an overview of the most recent developments in the area of multimodal corpora and discuss what is needed for pragmatic studies of such corpora. Section 8 concludes the Element with a summary of open issues and an outlook on possible future developments concerning new types of corpus resources, new methods and new research questions.

2 Corpora and their Characteristics: Challenges and Opportunities for Pragmatic Research

2.1 Introduction

In this Element, we adopt a broad perspective on corpus pragmatics and corpora. We present an overview of the many different ways in which corpora can be used for pragmatic research. In order to do so, it is necessary to point out the wide variety of linguistic corpora that exist today, which is the topic of this section. Our aim is not to present a comprehensive list of all corpora – not only would this be an impossible task but the list would be outdated very quickly – but rather to introduce distinctions between types of corpora as discussed in the literature, many of which will be relevant for the remaining sections in the Element.

In what follows, we introduce examples of the types of corpora that the research community has, at various times, identified as being of interest to pragmatics: balanced reference corpora, topic- and domain-specific corpora, spoken corpora, multimodal corpora, pragmatically annotated corpora, learner corpora, parallel multilingual corpora, corpora including unsystematic large text collections and purpose-built self-compiled corpora. However, the distinctions between these categories are in fact fuzzy and not mutually exclusive; in other words, corpora can belong to more than one group. For instance, there are balanced reference corpora that include multimodal spoken data in the form of audio recordings (e.g. BNC2014), topic-specific large text collections (e.g. News on the Web) and topic-specific learner corpora (e.g. the Giessen–Long Beach Chaplin Corpus). And, of course, other distinctions could be added to the list, such as diachronic corpora and corpora of child language acquisition (e.g. Child Language Data Exchange System (CHILDES)). Table 1 gives a rough and incomplete overview meant to highlight this fuzzy nature of the categories. As a consequence, rather than suggesting a fixed taxonomy-type list, we selected the distinctions to draw attention to those opportunities and challenges of corpus pragmatics where we see the most potential for research developments at the moment.

Table 1 Overview of a selection of corpora and the corpus categories they simultaneously belong to, ordered alphabetically according to the name of the corpus

| Corpus categories→ Name of corpus↓ | balanced reference corpus | topic- and domain-specific corpora | spoken corpora | multimodal corpora | pragmatically annotated corpora | learner corpora | unsystematic large text collections |
|---|---|---|---|---|---|---|---|
| BNC 1994 | x | (x) | (audio) | ||||
| CHILDES | x | x | audio/video | ||||
| CLMET3.0 | x | x | |||||
| EEBO | x | x | |||||
| Giessen–Long Beach Chaplin Corpus | x | x | audio | x | |||
| ICE | x | (x) | |||||
| LINDSEI | x | x | |||||
| Longman Corpus of Spoken and Written English | x | (x) | |||||
| News on the Web | x | x | |||||
| NMMC | x | x | audio/video | ||||
| SBC | x | audio | x | ||||
| SPICE-Ireland Corpus | x | x | |||||
| VOICE | x | (audio) |

All abbreviations of corpora mentioned in Table 1 can be found in the discussion in this section.

x: applies

(x): transcripts only

In Section 2.2, we start our discussion with a group that includes some of the oldest and still very common corpora, namely balanced reference corpora. Topic-specific corpora, their counterpoint, are discussed in Section 2.3. The next two sections deal with modality: in Section 2.4, we describe corpora that are based on spoken language, and in Section 2.5, we discuss the multimodal representation of data in corpora. Section 2.6 focuses on corpora that include pragmatic annotation. The following two sections deal with the language that is represented in a corpus: corpora of learner English are discussed in Section 2.7, and Section 2.8 introduces parallel multilingual corpora. The final two sections deal with size and data selection. Corpora that are based on unsystematic large text collections are discussed in Section 2.9, and Section 2.10 begins the discussion of purpose-built self-compiled corpora, which is continued in Sections 3 and 4. Each distinction is introduced briefly and a few examples of relevant corpora are mentioned. We also present some advantages and limitations of such corpora for pragmatic research and provide a few examples of pragmatic studies that have been carried out in the past.

The corpus landscape is changing quickly. For up-to-date information on existing corpora and their accessibility, electronic sources are far more suitable than printed publications. There is no single point of access for all existing linguistic corpus resources, although various attempts have been made to establish repositories of corpus data and/or information about corpora. Corpora can be found, for instance, in research infrastructures like the Common Language Resources and Technology Infrastructure (CLARIN), online repositories like the Oxford Text Archive (OTA) and corpus managers like Sketch Engine. A valuable database of information about English language corpora is the Corpus Resource Database (CoRD), which includes a corpus finder tool that filters corpora according to language period, word count, type of data and annotation, and availability.

2.2 Balanced Reference Corpora

Balanced reference corpora are corpora that include text samples from a variety of different contexts in order to provide an overall representation of a given language variety. One of the most frequently used reference corpora is the BNC. It includes different types of spoken and written language samples from British English. While the original corpus, the BNC1994, included data from the 1990s, the recently released BNC2014 adds data from around 2014, thus providing opportunities for investigations into recent language change. Other examples are COCA and the International Corpus of English (ICE). The latter consists of an entire collection of corpora that represent English varieties from around the world. Another group of reference corpora are the corpora used as a basis for compiling dictionaries and grammars, such as the Longman Corpus of Spoken and Written English. Important diachronic reference corpora of English are the Helsinki Corpus of English Texts (HC) and the ARCHER corpus, and, for American English, the Corpus of Historical American English (COHA).

Advantages: Balanced reference corpora provide a general-purpose perspective on a language variety and are particularly well-suited for investigating general characteristics of a language variety. They are also very suitable for studying register variation, i.e. the variation of language phenomena across different text types and genres.

Limitations: Balanced reference corpora are usually compiled to facilitate the study of lexico-grammatical characteristics. They typically support only form-based queries, and they often present decontextualised views of the data with limited access to context.

Examples of previous studies: Many form-to-function studies have been based on balanced reference corpora, e.g. the study of discourse markers (e.g. Aijmer 2002, 2008) and planners (Tottie 2011). Apologies have been studied in the BNC (Deutschmann 2003) and the COHA (Jucker 2018).

2.3 Topic- and Domain-Specific Corpora

Corpora can be compiled for any given topic or domain. Examples of recently released corpora include SydTV, based on transcripts of language used in television series; the Brexit Corpus, a 100-million-word collection of online texts relating to the UK referendum on Brexit; and the Corpus of Historical English Law Reports 1535–1999 (CHELAR). They are often compiled for a specific research project and, thus, their composition, annotation and suitability for pragmatic studies vary a great deal. While a single corpus provides insight into only one domain, different corpora can sometimes be combined to gain a broader perspective (see Section 5).

Advantages: Those topic- and domain-specific corpora that were compiled with pragmatic research questions in mind often offer annotation and contextual information that make them extremely suitable for pragmatic studies.

Limitations: Given that many domain-specific corpora were compiled for a specific research project, it can be challenging for other researchers to use them for different projects. The composition, markup and metainformation may be geared towards some research questions rather than others. Consulting the corpus documentation and learning about the corpus data is perhaps even more important than for other corpora. In some cases, it can be difficult to gain access to documentation, or the information provided may be insufficient. However, some domain-specific corpora are also among the corpora with the most detailed background information.

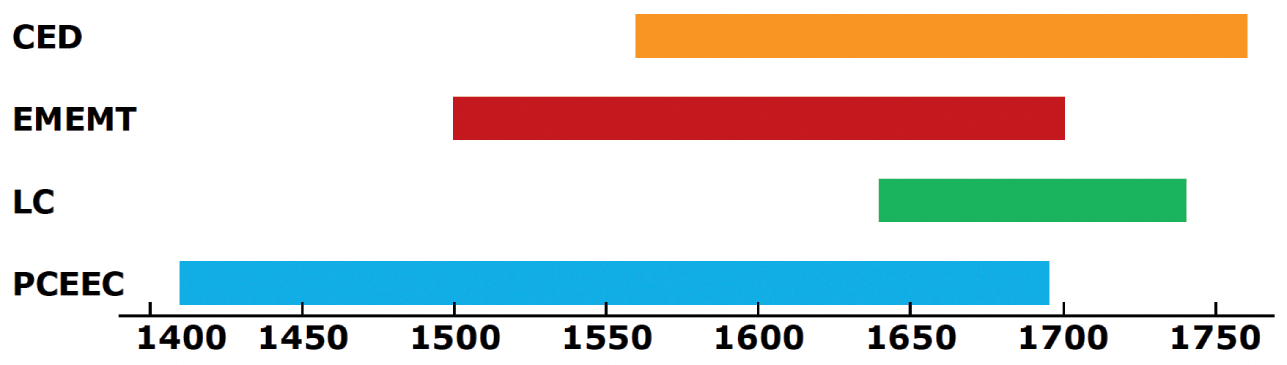

Examples of previous studies: Many different kinds of studies could be cited here. Lutzky and Kehoe’s (2017a, 2017b) studies of apologies in the Birmingham Blog Corpus (BBC) are an example of research based on contemporary data. Examples of studies on historical corpora are Traugott’s (2015) study of interjections in the Old Bailey Corpus (OBC), Culpeper and Kytö’s (2010) study of pragmatic noise in the Corpus of English Dialogues 1560–1760 (CED), and various studies of the development of scientific thought-styles that are based on the Corpus of Early English Medical Writing (CEEM) (e.g. Gray, Biber & Hiltunen 2011; Taavitsainen 2001, 2002, 2009; Whitt 2016).

2.4 Spoken Corpora

Early corpora based on spoken language data, such as the original London–Lund Corpus (LLC), often included only written transcriptions. In recent years, it has become increasingly common to provide access to audio recordings, which are often aligned with the written corpus data, thus turning these resources into multimodal corpora (see Section 2.5 and Section 7). Examples are the spoken section of the original British National Corpus (BNC1994), for which recordings have recently been made accessible, the recently released new corpus component BNC2014 and the soon-to-be-released London–Lund Corpus 2 (LLC2). The Santa Barbara Corpus of American English (SBC) is another important resource for the study of spoken language, consisting of sixty recordings and corresponding transcriptions. More specialised spoken corpora include the Cambridge and Nottingham Business English Corpus (CANBEC), the British Academic Spoken English Corpus (BASE) and the Vienna-Oxford International Corpus of English (VOICE), which includes spoken language data from speakers of English as a lingua franca. In addition to differences in the availability of audio recordings, the corpora vary greatly with respect to the degree of detail and the amount of prosodic information included in the transcriptions.

Advantages: In addition to presenting data from one important mode of language realisation, spoken language corpora that provide access to audio recordings can give insight into how paraverbal features like loudness, stress, speech rhythm and other prosodic features affect pragmatic functions, an aspect that has not been explored in great detail so far (see also Section 2.5).

Limitations: The integration of written transcriptions and audio recordings poses challenges. The new BNC interface has made great progress in this respect, even making it possible to search for phonological phenomena. Still, search queries usually rely on written transcriptions and the transcription conventions vary a great deal across different corpora.

Examples of previous studies: Adolphs & Carter (2013) present a very detailed discussion of spoken corpus linguistics, which includes studies of pragmatic aspects such as response tokens.

2.5 Multimodal Corpora

Multimodal corpora are corpora which, in addition to text, also include data in different modalities. For instance, this can take the form of audio recordings of spoken language or visual information in the form of still images or videos. There are many different ways in which data in different modalities is presented. The most basic form can be found in corpora that include separate files with the different modalities, such as the SBC. The SBC consists of a collection of discourse analytic transcriptions of spoken language recordings, and the recordings are provided as separate audio files. At the opposite end of the scale we can find corpus infrastructures that include multilayered annotation of data aligned across different modalities. An example of this latter type is the Nottingham Multimodal Corpus (NMMC), which includes video recordings, transcriptions of the spoken language and the annotation of non-verbal communication like gestures.

Advantages: Multimodal spoken corpora make it possible for researchers to study verbal, paraverbal and non-verbal features of language in combination. In written language, the combination of text with images, videos, layout and typographic elements can be studied. Thus, multimodal corpora open up new avenues of pragmatic research.

Limitations: By far the biggest limitation of multimodal corpora is that their compilation is very time-consuming and, as a consequence, they tend to be rather small. Corpora that include layers of annotation for non-textual information, such as gestures or eye-gaze, face the same challenges as transcriptions of spoken language, namely that the transcription of such information involves a considerable amount of qualitative interpretation.

Examples of previous studies: We discuss different examples of studies based on multimodal corpora in Section 7.

2.6 Pragmatically Annotated Corpora

Some corpora include annotation that is relevant for pragmatic analysis (for an overview, see O’Keeffe 2018: 599–605). In some cases, corpus annotations can inform pragmatic analysis, whereas in others, it is pragmatic information that is annotated. For instance, the Sociopragmatic Corpus (SPC) is a subsection of the CED, in which speaker information such as gender, social status, role and age group is annotated on the level of each utterance. This makes it possible to study, for instance, how the role relationship between interlocutors affects the realisation of pragmatic functions. An example of annotation of pragmatic information can be found in the SPICE-Ireland Corpus, a subsection of the spoken component of the ICE-Ireland corpus that is annotated for pragmatic and prosodic information. The annotation includes information about the speech-act function of utterances, discourse markers and quotatives.

Advantages: Depending on the kind of annotation, annotated corpora make it possible for researchers to search for pragmatic functions or to restrict their queries to factors that are relevant for pragmatic analysis.

Limitations: Since annotation is time-consuming, these corpora tend to be small. Attempts at automating the process have been made (see Weisser 2014 on the automatic annotation of speech acts), but it is doubtful whether manual classification will ever be fully replaced; thus, the time needed to add annotations to a corpus will likely continue to limit the size of annotated corpora. Moreover, studies are restricted to the kinds of information that have been annotated in the corpus, and given that the annotation of pragmatic information already involves a great deal of interpretation, the corpus compiler’s assessment of the data influences the results.

Examples of previous studies: Kirk’s (2015) study of the pragmatic markers kind of and sort of is based on the SPICE-Ireland Corpus. The Sociopragmatic Corpus has been used for a range of studies on pragmatic features in Early Modern English, including Archer’s (2005) studies of questions and answers, Culpeper and Archer’s (2008) study of requests and Lutzky’s (2012) study of discourse markers.

2.7 Learner Corpora

Learner corpora include language produced by non-native speakers of a language. Such corpora usually provide information on the level of proficiency and/or the amount of instruction of the speakers or writers that contribute data. Examples of learner corpora are the International Corpus of Learner English (ICLE), based on essays from learners from a large number of different language backgrounds, and the Louvain International Database of Spoken English Interlanguage (LINDSEI), the spoken counterpart to ICLE.

Advantages: Learner corpora make it possible to study pragmatic aspects of L2 language learning. They can also provide new perspectives for language teaching.

Limitations: The data in learner corpora is often derived from task-based language production. While written learner corpora may include essays that were produced in the ordinary course of teaching, spoken corpora often include task-based interaction that took place for the purpose of corpus compilation and that sometimes also includes language produced by interviewers involved in compiling the corpus. This distinguishes learner corpora from most other kinds of corpora, which tend to include language that was produced without elicitation for research purposes.

Examples of previous studies: Corpus studies of pragmatic features in learner corpora include studies of hesitation markers (Gilquin 2008) and discourse markers (Buysse 2012, 2017, 2020) based on the LINDSEI corpus. Another example is Huschová’s (2021) study of the pragmatic functions of can and could in speech acts in the Corpus of Czech Students’ Spoken English.

2.8 Parallel Multilingual Corpora

The term ‘parallel corpus’ refers to a corpus of a specific design, which includes related subcorpora aligned with one another according to certain criteria (these might be structural, e.g. sentence or timestamp alignment, or semantic, e.g. translation unit alignment). A multilingual parallel corpus contains source texts, or transcripts, in one language and their translation(s) into other language(s). The parallel aligned design makes it possible to locate an excerpt in the source text and its corresponding translation with a single query. Various parallel corpora are available to date (see Examples of previous studies). For researchers interested in compiling their own parallel multilingual corpus, Sketch Engine offers a corpus infrastructure that supports this.
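To illustrate what a single query over an aligned design involves, the following Python sketch (not the query interface of Sketch Engine or any other tool) stores a toy parallel corpus as a list of sentence-aligned pairs and returns every pair whose source or target side matches a regular expression. The sentence pairs and the function name `query_parallel` are invented for illustration; real parallel corpora add alignment identifiers and metadata.

```python
import re

# Toy sentence-aligned English-German pairs, invented purely for illustration.
ALIGNED = [
    ("I am sorry for the delay.", "Es tut mir leid wegen der Verspätung."),
    ("The meeting starts at nine.", "Die Sitzung beginnt um neun."),
    ("Sorry, could you repeat that?", "Entschuldigung, könnten Sie das wiederholen?"),
]


def query_parallel(pairs, pattern, side=0):
    """Return every aligned pair whose text on `side` (0 = source, 1 = target) matches `pattern`."""
    regex = re.compile(pattern, re.IGNORECASE)
    return [pair for pair in pairs if regex.search(pair[side])]


if __name__ == "__main__":
    # One query on the English side retrieves the excerpts together with their aligned translations.
    for source, target in query_parallel(ALIGNED, r"\bsorry\b"):
        print(f"{source}  <->  {target}")
```

Setting `side=1` runs the same kind of query from the translation side, which is how one might look for the source-language expressions that a given target-language form translates.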

Advantages: Parallel corpora marked a qualitatively new step in translation studies and cross-linguistic analysis, making it possible for the first time to test assumptions about translation universals empirically, to improve machine translation and to provide translators with a more reliable, up-to-date and usage-based alternative to conventional dictionaries.

Limitations: Parallel corpora are notoriously difficult to compile, since they require the additional step of aligning the subcorpora. This has prompted calls to reuse existing parallel corpora rather than invest in the creation of new purpose-built ones (Doval & Sánchez Nieto 2019). In addition, professional translators in large organisations often rely on shared translation memories such as DGT-TM, i.e. organisation-specific databases that store sentences, paragraphs or segments of text that have been translated before. This makes the resulting translation corpora uninteresting for research in contrastive linguistics (Cartoni et al. 2013).

Examples of previous studies: Key resources of this type, such as EUROPARL (the proceedings of the European Parliament in twenty-one languages; see Koehn 2005) or OPUS (subtitles and localisation resources covering 200 language variants; see Tiedemann 2012), have generated hundreds of studies. These range from classic issues in translation studies, e.g. testing the explicitation hypothesis (Zufferey & Cartoni 2014), to research questions in contrastive linguistics, e.g. how impersonalisation is expressed in English versus German (Gast 2015).

2.9 Unsystematic Large Text Collections

There are a number of interfaces that make it possible for researchers to search collections of often unannotated electronic documents that were not systematically selected to build a linguistic corpus. A prominent example is the Google Ngram Viewer, which can be used to compare the frequencies of word sequences in printed (and electronically available) sources since 1500. Similarly, Early English Books Online (EEBO) provides a search interface for almost 150,000 titles of historical printed books from 1475 to 1640. With EEBO, researchers can search some of the metainformation on the books and view images of scanned book pages. However, there is only limited access to the full text of EEBO, and more advanced corpus operations, e.g. searches for collocations and keywords, cannot be carried out. Similarly to EEBO, the Corpus of Late Modern English Texts (CLMET 3.0) provides access to the full texts of books published by British authors between 1710 and 1920.

Advantages: The main advantage of such search interfaces is that they often provide access to a massive amount of data. This is especially valuable for studying less frequent linguistic phenomena, for which large corpora are needed to find a sufficient number of attestations. In addition, large text collections can provide some insight into overall diachronic developments, especially when different expressions are compared to each other. Finally, large amounts of data are ideal for the training of machine learning language models, which can be used for the computational analysis of linguistic features, including pragmatic ones.

Limitations: Unsystematic text collections are not as reliable as smaller, manually compiled corpora. Documents can be misclassified, leading to wrong results, and automatic text recognition, which is often used in preparing the data, can misrepresent their content. These problems can be reduced by editing the data, but given the size of the data, this process is often time-consuming. In addition, contextualisation is often a problem. For instance, the Google Ngram Viewer only provides frequencies of word sequences, with no option of viewing instances of a sequence in context. These limitations mean that such data sources can usually provide only one piece of evidence, which has to be complemented with additional evidence through triangulation of methods.

Examples of previous studies: The Google Ngram Viewer has been used in various studies of metacommunicative expressions, such as Jucker and Kopaczyk’s (2017) account of historical (im)politeness. Likewise, Jucker (2020) presents results from CLMET, in addition to the Google Ngram Viewer, in tracing politeness expressions across time.

2.10 Purpose-Built Self-Compiled Corpora

Many corpus-based studies of pragmatics are based on corpora that are purpose-built for the study in question. Depending on the project for which they are created, such self-compiled corpora can vary greatly in size, in composition and in the metainformation they include. They can take the form of small, carefully selected and richly annotated collections of data or of vast compilations of electronically available documents. Likewise, they may or may not be made accessible to other researchers (see also Limitations).

Self-compiled corpora can be processed with generic corpus software, which includes freeware such as AntConc, TXM and the tools offered by the IMS Open Corpus Workbench (CWB), as well as paid solutions like WordSmith Tools or Sketch Engine. For smaller projects and qualitatively oriented research questions, tools for qualitative data analysis can be used, such as MAXQDA, NVivo and ATLAS.ti. For researchers comfortable with using programming languages, additional tools are available, such as Python’s Natural Language Toolkit (NLTK) and spaCy, or R’s quanteda and koRpus. For many purposes, a good text editor and pattern-matching with regular expressions can be sufficient to help researchers process text-based data. For more advanced analysis, XML-based corpora can be annotated and searched with XPath or CSS Queries, for instance using the Oxygen XML Editor. CWB corpora can be hosted on a server and made accessible via a graphical user interface (CQPweb).
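As a minimal illustration of how far pattern-matching with regular expressions can go, the sketch below produces a simple keyword-in-context (KWIC) concordance in Python using only the standard `re` module. The function name `kwic`, the default context width of 30 characters and the sample sentence are our own assumptions; dedicated tools such as AntConc offer far more options.

```python
import re


def kwic(text, pattern, width=30):
    """Return one keyword-in-context line for every match of `pattern` in `text`."""
    flat = " ".join(text.split())  # collapse line breaks and repeated spaces
    lines = []
    for match in re.finditer(pattern, flat, flags=re.IGNORECASE):
        left = flat[max(0, match.start() - width):match.start()]
        right = flat[match.end():match.end() + width]
        # Right-align the left context so that all matches line up in one column.
        lines.append(f"{left:>{width}} [{match.group(0)}] {right}")
    return lines


if __name__ == "__main__":
    sample = "Well, I think so.\nWell then, let us go. It went well."
    for line in kwic(sample, r"\bwell\b"):
        print(line)
```

The word boundaries in `\bwell\b` keep the query from matching forms such as "wellbeing"; refining such patterns is usually where most of the analytical work lies.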

Advantages: Purpose-built corpora present exactly the kind of data that is needed to answer a given research question. In addition, researchers who compile their own corpora tend to be extremely familiar with the data that is included, which is of great advantage for interpretation.

Limitations: The biggest drawback of self-compiled corpora is probably the amount of work needed for compiling them. This is one of the arguments in favour of making corpora accessible to other researchers, so that they can benefit from the work as well. Another argument is transparency. Only by providing data access to other researchers can results be verified independently. The FAIR Principles (www.go-fair.org/fair-principles/) discuss the relevant considerations behind this. Moreover, researchers need to deal not only with technical issues, such as suitable data repositories, standards of file formats and annotation, but also with a large range of ethical and legal questions concerning data collection and sharing (see Section 3.3). The latter concerns are, of course, also an issue for the corpus compilers of other corpus types but no longer for the users of the finished corpora, who rely on ethics and copyright having been taken care of by the compilers.

Examples of previous studies: We discuss different examples of studies using purpose-built self-compiled corpora in Sections 3 and 4.

2.11 Conclusion

The outline of corpus categories presented in this section makes it clear that corpora come in all kinds of sizes, forms and structures, and with a wide variety of different kinds of contents. As a consequence, corpus pragmatics is far from a homogeneous field of research. Methods that are well-suited for searching text in balanced sampler corpora cannot be applied directly to the analysis of gestures in multimodal corpora, and the skills needed by researchers for compiling and annotating their own handcrafted datasets will differ vastly from the skills needed to work with interfaces to unsystematic large text collections. Thus, any discussion of corpus pragmatic methods needs to first establish what kind of corpus data is involved in a given study. In the remainder of this Element, we take a closer look at some types of corpora and the kinds of challenges and opportunities they present for research.

3 Corpus-Driven Approaches with Self-Compiled Corpora

3.1 Introduction

The distinction between corpus-based and corpus-driven approaches to linguistics was introduced by Tognini-Bonelli (2001), who discussed the assumptions underlying corpus work with language data. She posited that while corpus-based studies use a systematically collected dataset, a corpus, in order to test or explore a pre-existing hypothesis, corpus-driven studies treat the corpus itself as the sole source of hypotheses about language. A corpus-driven approach to pragmatics, therefore, treats a corpus as more than a repository of examples or a reference for a bottom-up development of evolving taxonomies (as with the methods described in Section 4 of this Element).

While corpora are often created with the aim of allowing analyses relatively free from preconceived notions about language, it is important to note that corpus building is itself an analytical step and that no corpus is theory-free. This is especially true for corpus-driven pragmatics, since pragmatic meaning is necessarily more interpretative than, say, lexicogrammar. In order to minimise researchers’ bias, corpus-driven approaches rely on automatic annotation of pragmatic phenomena. As a result, corpus-driven research requires relatively large corpora and sophisticated automatic annotation. Pragmatic annotation layers may include classic pragmatic units (speech acts, deixis) and units at the interface between pragmatics and semantics (such as contextualisation cues or positive versus negative polarity). Some scholars draw on grammatical annotation for corpus pragmatics as well, arguing that the creation of communicative meaning involves all levels of language (for instance, Rühlemann & Clancy 2018, who study indicative and subjunctive verb forms as an expression of deixis).

Work on (semi-)automatic speech-act annotation of English language corpora includes the Speech Act Annotated Corpus project (SPAAC, Lancaster University; Leech & Weisser 2003) and the implementations of the Dialogue Annotation & Research Tool (DART; Weisser 2010, 2015). Of course, some speech acts lend themselves better to automatic annotation than others. For example, congratulations are strongly associated with predictable surface forms such as ‘congratulations’, ‘happy birthday’ and ‘merry Christmas’, the so-called illocutionary force indicating devices (IFIDs). The bulk of corpus pragmatic research within the corpus-driven approach has been done on speech acts with a stable list of IFIDs, for example, apologies (Lutzky & Kehoe 2017a; Jucker 2018). Some ambitious attempts in natural language processing have aimed to include more contextual factors when training an algorithm to recognise speech acts that do not include IFIDs, for example, flirting (see Ranganath et al. 2009).
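The reliance on IFIDs can be illustrated with a deliberately simple Python sketch that flags utterances containing a candidate apology IFID. The short pattern list is a hypothetical example, not the inventory used by DART or by the studies cited above, and every hit remains only a candidate: an utterance such as ‘I’m sorry to hear that’ contains an IFID without being an apology, which is why such retrievals usually need manual checking.

```python
import re

# A small, hypothetical inventory of apology IFIDs; published studies use longer, validated lists.
APOLOGY_IFIDS = [
    r"\bsorry\b",
    r"\bapologi[sz](?:e|es|ed|ing)\b",
    r"\bapologies\b",
    r"\bexcuse me\b",
]
IFID_RE = re.compile("|".join(APOLOGY_IFIDS), re.IGNORECASE)


def tag_apologies(utterances):
    """Pair each utterance with 'apology?' if it contains a candidate IFID, otherwise None."""
    return [(u, "apology?" if IFID_RE.search(u) else None) for u in utterances]


if __name__ == "__main__":
    sample = ["I apologise for the delay.", "See you tomorrow.", "Sorry, wrong number!"]
    for utterance, label in tag_apologies(sample):
        print(label, "|", utterance)
```

The question mark in the label is a reminder that surface matching yields candidates for apologies, not classified speech acts.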

In this section of the Element, we zero in on a special case of corpus-driven pragmatics: when researchers rely on fully automatic extraction of linguistic features but steer their interest through the process of corpus compilation. Much automatic corpus analysis relies on comparing subcorpora (for example, keyword analysis, contrasting lists of n-grams or ranking the collocates of an item). This means that through corpus design, researchers can manifest their interest in specific variables, while keeping the study of those variables entirely corpus-driven. For example, compiling a corpus of book reviews with different reader ratings enables the researcher to analyse how polarity and evaluation differ depending on whether a book is liked (Rebora et al. 2021: ii240–1).
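Keyword analysis is one such comparison of subcorpora. The Python sketch below ranks words by log-likelihood (G²), a keyness statistic widely used in corpus linguistics and offered by tools such as AntConc and WordSmith Tools; it is not necessarily the statistic used in the studies cited here. For a word with frequencies a and b in subcorpora of c1 and c2 tokens, the expected frequencies are E1 = c1(a + b)/(c1 + c2) and E2 = c2(a + b)/(c1 + c2), and G² = 2(a ln(a/E1) + b ln(b/E2)). The function names are our own, and tokenisation is assumed to have happened beforehand.

```python
import math
from collections import Counter


def log_likelihood(a, b, c1, c2):
    """G2 for a word occurring a times in corpus 1 (c1 tokens) and b times in corpus 2 (c2 tokens)."""
    e1 = c1 * (a + b) / (c1 + c2)  # expected frequency in corpus 1
    e2 = c2 * (a + b) / (c1 + c2)  # expected frequency in corpus 2
    g2 = 0.0
    if a > 0:  # a zero observed frequency contributes nothing (0 * log 0 is taken as 0)
        g2 += a * math.log(a / e1)
    if b > 0:
        g2 += b * math.log(b / e2)
    return 2 * g2


def keywords(target_tokens, reference_tokens, top=10):
    """Return the `top` words most strongly overused in the target relative to the reference subcorpus."""
    t, r = Counter(target_tokens), Counter(reference_tokens)
    c1, c2 = sum(t.values()), sum(r.values())
    scored = []
    for word, a in t.items():
        b = r.get(word, 0)
        if a / c1 > b / c2:  # keep positive keywords only
            scored.append((word, log_likelihood(a, b, c1, c2)))
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top]
```

Called with the tokens of two subcorpora, e.g. highly and poorly rated book reviews, `keywords` returns (word, G²) pairs in descending order; in practice, a significance threshold and an effect-size measure are usually applied as well.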

In this section, we discuss the process of creating such a corpus, and the benefits and challenges of this type of corpus pragmatic research, based on the example of the r/changemyview study of persuasion. The challenges and choices of the corpus creation process that are discussed in this section are not unique to corpus-driven pragmatic research and can be usefully applied in other research contexts, including those described in Section 4. The present corpus was created by downloading posts and replies from the popular online forum website Reddit (www.reddit.com/) (see Dayter & Messerli 2022). Reddit is a platform subdivided into thousands of thematic forum communities, many of which lend themselves well to the study of specific pragmatic phenomena. Below, we discuss a purpose-built corpus of the forum threads from the r/changemyview (CMV) subreddit (www.reddit.com/r/changemyview/), a community devoted to persuasive practices.

3.2 Case Study: Persuasion in a Corpus of Reddit Posts

3.2.1 Designing a Corpus for the Study of Persuasion

Persuasive discourse has been defined in linguistic research as all linguistic behaviour that attempts either to change the thinking or behaviour of an audience or to strengthen its beliefs, should the audience already agree (Virtanen & Halmari 2005). Since the judgement of whether a stretch of discourse is persuasive or not (i.e. has changed or strengthened the readers’ views) depends on the speaker’s or writer’s intent, the decision is ultimately interpretative and would normally have to be made through manual annotation.

However, through careful choice of data and corpus design, researchers can enable a corpus-driven pragmatic study. The first step in this process is to find a dataset that contains persuasive discourse recognised as such through both interaction-internal and interaction-external orientation. A good example of data of this kind can be found on the subreddit r/changemyview. Not only does the subreddit self-identify as a community dedicated to changing users’ views, but comments posted on it also include several language-external indicators of popularity and persuasiveness. One of these indicators, called karma, is Reddit’s equivalent to upvotes and likes on other platforms and social networks and points to the uptake of individual posts by other members of the community. Collectively, the community thus signals its approval by adding to the post’s and poster’s karma. In addition, and more importantly for the persuasion-oriented researcher, threads of comments on CMV award a great deal of interactional power to original posters (henceforth OPs). OPs first post a statement with an invitation for others to change their, the OP’s, view. When comments are posted, OPs then decide which of them succeeded in persuading them and reward such convincing posts with a delta (Δ), which signals to others that the OP found the arguments in the awarded post persuasive. Consequently, researchers who collect a corpus of CMV data and include delta awards as metadata can structure their data so that successfully persuasive comments (delta-awarded comments, DACs) and unsuccessful attempts at persuasion (non-DACs) can be contrasted. Without the limitations of automatic annotation of pragmatic features or the etic judgement of one or several trained coders, it is thus possible to create a large corpus of attempts at persuasion, with subcorpora of more and less persuasive comments (Dayter & Messerli 2022).
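In data terms, this design amounts to a simple partition on a metadata field. The Python sketch below assumes that each collected comment has already been assigned a hypothetical boolean field `delta_awarded`; how delta awards are detected in the raw Reddit data is outside the scope of the sketch, and the class name, field names and example comments are our own inventions.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    comment_id: str
    text: str
    delta_awarded: bool  # hypothetical metadata field: did the OP award a delta?


def split_by_delta(comments):
    """Partition comments into delta-awarded (DAC) and non-delta-awarded (non-DAC) subcorpora."""
    dacs = [c for c in comments if c.delta_awarded]
    non_dacs = [c for c in comments if not c.delta_awarded]
    return dacs, non_dacs


if __name__ == "__main__":
    # Invented example comments, for illustration only.
    comments = [
        Comment("c1", "Have you considered the long-term costs?", True),
        Comment("c2", "You're simply wrong.", False),
        Comment("c3", "Here is a source that complicates your view.", False),
    ]
    dacs, non_dacs = split_by_delta(comments)
    print(len(dacs), "DACs,", len(non_dacs), "non-DACs")
```

Because the split relies only on the OP’s own award, no researcher judgement enters at this stage; the two subcorpora can then be compared with corpus-driven methods such as keyword analysis.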

3.2.2 Building a Corpus: Ethics and Copyright

The first concern of any empirical project is the availability and researchability of data, which means that researchers must inevitably ask themselves first whether the data they want to study can be acquired in a manner that is both ethically sound and in agreement with existing copyright laws. While smaller qualitative studies primarily establish this by obtaining the explicit consent of the rights holders to the data they use (typically the authors themselves), research based on larger corpora cannot follow the same pattern, since it is not feasible to ask, say, hundreds of thousands of CMV users for permission to use their data. This has also been recognised in recent legislation, e.g. in Germany and Switzerland, where some of us work, which specifically allows big data research for scientific purposes without consent. Following these laws is the first step in ensuring sound research, but it does not exempt researchers from carefully assessing the ethical ramifications of their research for the people whose work they are scrutinising. Just as in studies based on consent, researchers also need to ask themselves whether their studies will be beneficial or potentially harmful for the authors represented in the corpus as well as for society at large. Useful guides for interpreting existing ethics guidelines with respect to corpus linguistic approaches include Koene and Adolphs (2015), focusing on internet data, and the AoIR Internet Research Ethics 3.0 (franzke et al. 2020), which specifically addresses the problem of informed consent in big data analyses.

In the example we have chosen, the researchers established that the population chosen for research is not vulnerable and that the textual data does not concern issues that might cause harm if disclosed in publication. Moreover, the subreddit r/changemyview encourages academic research and has a page dedicated to academic papers using CMV data. The researchers therefore concluded that the project posed no foreseeable harm to social media users and raised no ethical problems.

In addition to ethics concerning those whose work is included in the corpus, there are further ethical concerns and responsibilities towards the scientific community. In this sense, ethical research always attempts to be optimally transparent and to facilitate the reproducibility of its results. It is worth pointing out, however, that ethical obligations towards the research community and towards the researched population are often at odds. Researchers are confronted with the uncomfortable choice between increasing the potential for harmful outcomes for the communities they research and reducing the transparency of research, with the latter typically considered the lesser evil. Accordingly, the consensus in research ethics is that social media posts should not be redistributed as datasets, since this denies agency to individuals who have subsequently deleted their posts and who may be entirely unaware that their data are circulating in third-party datasets shared among researchers (see Proferes et al. 2021). This stance is enshrined both in Twitter’s Terms of Service and, more generally, in the European GDPR’s provision on the right to be forgotten. The compromise that satisfies the demand for reproducibility is to create ‘dehydrated datasets’, i.e. lists of unique post IDs instead of files with full textual data and metadata.
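A dehydrated dataset can be as simple as a one-column file of IDs. The Python sketch below writes the unique IDs of a collection of posts to CSV text and shows the corresponding rehydration step, in which a caller-supplied `fetch` function (a hypothetical stand-in for whatever platform API is used) is assumed to return a post, or `None` if the post is no longer available. Under that assumption, posts that their authors have since deleted are simply not restored, which is the point of the compromise. The function and field names are illustrative only.

```python
import csv
import io


def dehydrate(posts, id_field="id"):
    """Return CSV text listing each unique post ID once, in first-seen order, without any post text."""
    unique_ids = dict.fromkeys(post[id_field] for post in posts)  # order-preserving de-duplication
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow([id_field])
    for post_id in unique_ids:
        writer.writerow([post_id])
    return buffer.getvalue()


def rehydrate(post_ids, fetch):
    """Re-fetch posts by ID; `fetch` is assumed to return None for posts that are no longer available."""
    return [post for post in (fetch(pid) for pid in post_ids) if post is not None]
```

Reading the IDs back with `csv.reader` and passing them to `rehydrate` reconstructs whatever part of the corpus is still publicly available at that point.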

3.2.3 Building a Corpus: Technical Challenges

A corpus in the sense we are using it here is typically an operational representation of the linguistic practices of a community. In our example, the researchers treated the subreddit CMV as a speech community whose interactions, while perhaps similar to others on Reddit, in social media and elsewhere, can reasonably be modelled in a research corpus for internal as well as contrastive studies. Since the corpus stands in a particular relationship to what it models, i.e. the utterances that were and are accessible on r/changemyview, researchers are faced with decisions about how to represent this population in the corpus. Choices include such aspects as:

– The amount of data – how many posts should be included in the corpus?

– The exclusion of texts – which texts, if any, should be excluded from the corpus (e.g. duplicates)?

– The representation of each text, including typography, aesthetic presentation, and linguistic, paralinguistic, and non-linguistic context (see Section 7 for considerations concerning multimodality)

– The inclusion of internal and external metadata – e.g. delta, the time of posting or information about the individual posters

– The hierarchy of and relationship between texts in the corpus

– Additional annotations that should be added by the researchers to facilitate their research

In the case of the CMV corpus, the researchers chose to collect all posts that had ever been posted to r/changemyview, that is, all posts from May 2013 up to the time of data collection in May 2020. When it came to excluding texts, the corpus-building choices reflected what is and is not considered part of CMV communication: in some cases, posts marked as deleted can still be accessed through the pushshift.io Reddit API that was used to collect the data, as can posts marked as being in breach of the subreddit’s rules. Both types of posts were excluded from the final corpus. In addition, the cut-off date points to a problem concerning the fixity of the text (Jucker 2004). While the raw data of social media in particular may grow indefinitely (submissions to r/changemyview written before 2020 may still receive comments years later), the static representation in the corpus is usually fixed at the time of collection, thus forcing dynamically evolving discourse into a rigid snapshot. Corpora such as Davies’ NOW (News on the Web) corpus, by contrast, may be updated at regular intervals and are thus conceptually dynamic. Since published research is largely fixed at the time of writing, a more dynamic approach creates problems for reproducibility; faced with the inevitable choice between the two strategies, the researchers building the CMV corpus chose stability of results over representation of dynamic data.
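The exclusion criteria and the fixed cut-off described above amount to a simple filter over the collected records. The following Python sketch is illustrative only: it assumes each record carries a hypothetical `"status"` field (set by the collection script to `"deleted"` or `"removed"` where applicable) and a `"created_utc"` Unix timestamp (the field name used in Reddit’s own JSON). The exact cut-off day is also an assumption, since the text only gives May 2020.

```python
from datetime import datetime, timezone

# Hypothetical status labels: deleted posts and posts removed for rule breaches.
EXCLUDED_STATUSES = {"deleted", "removed"}
# Assumed end of the collection window (data were collected in May 2020).
CUTOFF = datetime(2020, 6, 1, tzinfo=timezone.utc)


def build_snapshot(posts, cutoff=CUTOFF):
    """Return a fixed snapshot: no excluded posts, nothing after cutoff, no duplicate IDs."""
    seen, kept = set(), []
    for post in posts:
        created = datetime.fromtimestamp(post["created_utc"], tz=timezone.utc)
        if post["status"] in EXCLUDED_STATUSES or created >= cutoff or post["id"] in seen:
            continue
        seen.add(post["id"])
        kept.append(post)
    return kept
```

Freezing the snapshot at a fixed cut-off is what makes later results reproducible, at the cost of ignoring comments that arrive after collection.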

In terms of the representation of the texts, the CMV corpus included only the roughly six million texts themselves, verbatim. It did not include their visual organisation into threads, any audiovisual materials users may have posted alongside their texts (of which there are very few, if any), or the typographic and other aspects of their graphic appearance on Reddit. The corpus includes metadata that is also visible on r/changemyview, such as the time of posting, the delta status of posts – were they awarded a delta or not? – and a unique user identifier for each comment. However, for ethical reasons, all user names were anonymised. The relationship between texts was also represented as textual metadata, with hierarchies flattened into annotations indicating which thread each comment belongs to and which post or comment it responds to. Finally, regarding additional annotations, the corpus was lemmatised and tagged for parts of speech.
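Flattening a thread hierarchy into per-comment metadata, while replacing user names with pseudonyms, might look like the following Python sketch. The nested input format (keys `"id"`, `"author"`, `"text"`, `"replies"`) is a hypothetical stand-in for the collected data, and sequential pseudonyms are only one possible anonymisation scheme; the `pseudonyms` dictionary is shared across threads so that the same user receives the same pseudonym throughout the corpus.

```python
def flatten_thread(submission, pseudonyms):
    """Turn a nested thread into flat rows with thread and parent IDs.

    pseudonyms maps original user names to stable pseudonyms and is shared
    across threads so a user keeps the same pseudonym corpus-wide.
    """
    rows = []
    stack = [(submission, None)]  # iterative depth-first walk avoids recursion limits
    while stack:
        node, parent_id = stack.pop()
        author = pseudonyms.setdefault(node["author"], f"user_{len(pseudonyms) + 1}")
        rows.append({
            "id": node["id"],
            "thread_id": submission["id"],  # which thread the text belongs to
            "parent_id": parent_id,         # which post or comment it responds to
            "author": author,
            "text": node["text"],
        })
        # Push replies in reverse so they are emitted in their original order.
        for child in reversed(node.get("replies", [])):
            stack.append((child, node["id"]))
    return rows
```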

While the CMV corpus exists in an initial form, encoded in the IMS Open Corpus Workbench (CWB; see Evert & Hardie 2011) and accessible locally through command-line tools as well as via the R package polmineR (Blaette 2020), it is important to point out that individual studies typically use corpora in particular ways, which often amounts to actually or virtually creating new corpora or subcorpora and thus new representations of aspects of communication in the original population. In the CMV project, an initial focus was a contrastive study of linguistic differences between more and less successfully persuasive posts (see Section 3.2.4). Accordingly, the corpus was divided into two subcorpora based on the delta marker: a 14.5-million-word delta subcorpus (54,000 texts) was compared to a 133.7-million-word non-delta subcorpus (500,000 texts). Here, the delta subcorpus contains all delta-awarded comments available in the corpus and thus on r/changemyview (in May 2020), whereas the non-delta subcorpus contains a sizeable sample of the population. In sampling the non-delta subcorpus, the researchers consciously chose to increase its similarity to the delta subcorpus: very short and very long texts were included until an almost identical average comment length was achieved (266–267 words per comment).
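One way to draw a sample whose mean comment length approximates that of a target subcorpus is a greedy procedure: whenever the running mean falls below the target, draw a longer text, otherwise a shorter one. The Python sketch below is a hypothetical illustration of this idea, not the procedure actually used in the CMV project; it works on a plain list of comment lengths in words and returns the indices of the sampled texts.

```python
import random


def length_matched_sample(lengths, target_mean, n, seed=0):
    """Pick n text indices whose mean length stays close to target_mean.

    Hypothetical greedy strategy: while the running mean is below the target,
    draw a text at least as long as the target; otherwise draw a shorter one.
    """
    rng = random.Random(seed)
    order = list(range(len(lengths)))
    rng.shuffle(order)  # randomise which texts are drawn from each pool
    long_pool = [i for i in order if lengths[i] >= target_mean]
    short_pool = [i for i in order if lengths[i] < target_mean]
    chosen, total = [], 0
    while len(chosen) < n and (long_pool or short_pool):
        below_target = total < target_mean * len(chosen)
        if (below_target and long_pool) or not short_pool:
            i = long_pool.pop()
        else:
            i = short_pool.pop()
        chosen.append(i)
        total += lengths[i]
    return chosen
```

As long as both pools remain non-empty, the running total never drifts from the target by more than one text’s deviation, so the sample mean converges on the target as the sample grows.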

3.2.4 Analysis of Formality and Persuasion

Based on the corpus design described above, the researchers in the CMV project conducted two studies targeting register differences in the CMV corpus. The detailed results of the first study are published in Dayter and Messerli (2022), with the second study forthcoming. Rather than reiterating these results in full, we offer a brief summary here and use Section 3.3 primarily to highlight the insights the studies offer for corpus-driven research more broadly.

Using a comparative subcorpus design, we investigated the linguistic differences between delta and non-delta comments along two dimensions: formality–informality and overt expression of persuasion. To operationalise the delta/non-delta distinction inherent in the population and the corpus for linguistic exploration, we used procedures established and documented in the existing literature. The first study identified twelve quantifiable linguistic markers (for example, the frequency of WH questions and of nominalisations) that are associated with either high-formality or low-formality language. The second study drew on Biber’s (1988) variationist analysis, which proposed and empirically validated seven linguistic features associated with the overt persuasion dimension of variation (such as the frequency of infinitives, prediction modals or suasive verbs).
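Markers of this kind are typically operationalised as normalised frequencies, i.e. counts per 1,000 words, so that texts of different lengths can be compared. The Python sketch below shows the principle for three of the features mentioned. The word lists are abbreviated and illustrative (an assumption, not Biber’s full inventories), nominalisations are approximated by a suffix heuristic (-tion, -ment, -ness, -ity), and because the tokens are not lemmatised, only base forms of the suasive verbs are matched.

```python
import re

TOKEN = re.compile(r"[a-z']+")
# Suffix heuristic for nominalisations; at least two letters must precede the suffix.
NOMINALISATION = re.compile(r"[a-z']{2,}(?:tion|ment|ness|ity)s?")
PREDICTION_MODALS = {"will", "would", "shall"}
# Abbreviated, illustrative list of suasive verbs (base forms only).
SUASIVE_VERBS = {"ask", "demand", "insist", "propose", "recommend", "request", "suggest", "urge"}


def feature_rates(text, per=1000):
    """Return frequencies of three features per `per` tokens of text."""
    tokens = TOKEN.findall(text.lower())
    if not tokens:
        return {"nominalisations": 0.0, "prediction_modals": 0.0, "suasive_verbs": 0.0}
    counts = {
        "nominalisations": sum(1 for t in tokens if NOMINALISATION.fullmatch(t)),
        "prediction_modals": sum(1 for t in tokens if t in PREDICTION_MODALS),
        "suasive_verbs": sum(1 for t in tokens if t in SUASIVE_VERBS),
    }
    return {name: count / len(tokens) * per for name, count in counts.items()}
```

Applied to every comment, this yields one value per feature and text, which is the kind of data the subsequent group comparison works on.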

After a statistical analysis (the method chosen here was the Mann–Whitney U test, a non-parametric test that compares two independent samples without assuming that the data are normally distributed), we concluded that there are no significant differences with a medium or large effect size between delta and non-delta posts. This was true both for the formality and for the overt persuasion dimension. The studies thus fell squarely into the category of negative results, i.e. cases in which the anticipated hypothesis is not borne out by the corpus data.
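For readers unfamiliar with the test, the following self-contained Python sketch computes the Mann–Whitney U statistic with average ranks for ties, a two-sided p-value from the tie-corrected normal approximation, and the rank-biserial correlation as an effect size. It is a minimal illustration rather than the implementation used in the CMV studies; in practice, a statistics library would normally be used, and exact p-values are preferable for very small samples.

```python
import math
from collections import Counter


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U for x, p-value, rank-biserial correlation as effect size).
    """
    n1, n2 = len(x), len(y)
    pooled = sorted(list(x) + list(y))
    # Assign average ranks to tied values.
    ranks, i = {}, 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j] == pooled[i]:
            j += 1
        ranks[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    u1 = sum(ranks[v] for v in x) - n1 * (n1 + 1) / 2
    effect = 2 * u1 / (n1 * n2) - 1  # +1: x always larger, -1: y always larger
    n = n1 + n2
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if variance <= 0:  # all values identical: no evidence of a difference
        return u1, 1.0, effect
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the standard normal
    return u1, p, effect
```

With hundreds of thousands of comments, even negligible differences can reach significance, which is why the effect size, and not only the p-value, decides whether a difference is considered meaningful.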

Taken in isolation, the results of this corpus-driven study did not contribute significantly to our understanding of persuasive language on CMV. However, it is precisely in this context that the benefits of combining corpus-driven methods with a closer inspection of the language data become apparent. To triangulate the quantitative findings, we designed our study to also include a manual analysis of the concordances of two category-bound actions, to persuade and to change someone’s view, as well as a study of the highest-frequency n-grams. This closer look at community discourses revealed an explicit orientation towards how, in the opinion of community members, a hypothetical ‘good user’ or ‘good persuader’ should write. Users frequently referred to a binary distinction between a personal opinion that cannot be changed by facts and evidence (an undesirable stance) and a malleable view that can be disproven in an objective manner (a desirable stance). The two examples below illustrate this community norm:

(3.1) We can’t really change your view of a personal opinion. If you don’t enjoy ET then you don’t enjoy ET.

(3.2) First, you can change your view multiple times, and you claimed secular buddhism is the most common, and I disproved that with numbers.

As Example 3.2 shows, ‘change your view’ is often understood as ‘provide evidence that your view is incorrect’. The members of the CMV community recognise the formal register as the appropriate linguistic resource for evidence-oriented discourse. Taken together, the corpus findings and the qualitative analysis indicate that r/changemyview has an established linguistic community norm: users across the board write in a formal, overtly persuasive style, perhaps reflecting the community’s affinity for academic-like argumentation. Such a shared norm would also account for the absence of differences between delta and non-delta comments along the two dimensions investigated.
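The n-gram part of the triangulation can be illustrated with a short Python sketch that counts word n-grams across a set of comments and returns the most frequent ones. Tokenisation here is a deliberately simple regular expression (an assumption; the CMV corpus itself was tokenised and lemmatised with dedicated tools), and n-grams are counted within texts only, never across text boundaries.

```python
import re
from collections import Counter


def top_ngrams(texts, n=3, k=10):
    """Return the k most frequent word n-grams across texts as (string, count) pairs."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z']+", text.lower())
        # zip over n staggered copies of the token list yields consecutive n-grams
        counts.update(zip(*(tokens[i:] for i in range(n))))
    return [(" ".join(gram), freq) for gram, freq in counts.most_common(k)]
```

Frequent n-grams such as *change your view* then serve as entry points for the manual concordance analysis.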

3.3 Conclusion

Corpus-driven approaches to pragmatics aim to be as unbiased by pre-existing assumptions about the dataset as possible. This stance is very useful when working with pragmatic categories that show a robust form-to-function correlation, for example deixis or discourse markers. However, adding an interpretative component in an analysis of higher-level ‘strategies’ or ‘moves’ inevitably requires a departure from an approach to data completely uninformed by any pre-existing theory. In this section, we demonstrated how a compromise may be achieved through careful preliminary corpus design, which is then used as the sole source of hypotheses about language in line with the corpus-driven pragmatics set-up. Despite the overall success of the approach, some interesting observations resulting from the study could only be put into sufficient context with the help of a qualitative close reading. The next section of this Element, Section 4, continues down this avenue of research and describes the benefits and limitations of corpus-based and corpus-assisted approaches to pragmatics.

4 Corpus-Assisted Approaches with Self-Compiled Corpora

4.1 Introduction: Corpora in Discourse Analysis

Corpus pragmatics is an important field of research that enables linguists to analyse public discourses about societal issues, such as gender politics and gender relations (Lutzky & Lawson 2019), voting behaviour (Ifukor 2010), right-wing activism and misogyny (Krendel et al. 2022), terrorism (Harrison et al. 2008) or the anti-vaccination movement (Quo VaDis Project 2022). However, traditional corpus-driven methods (such as those described in Section 3 of this Element) may lack the human analyst’s judgement, which is crucial for understanding discourse. The gap between an automatic analysis of moderate- to large-scale corpora and a close reading of each example by a human can be bridged by corpus-assisted approaches to pragmatics and discourse analysis.

More qualitatively oriented researchers make use of a number of corpus-assisted techniques that harness the power of comparatively large datasets in order to find patterns, quantify them descriptively or even simply check for the presence of posited forms in the data. One of the most prominent of these approaches is corpus-based discourse analysis, or CBDA (see, e.g., Baker 2006). A common criticism of close-reading approaches to discourse analysis is that they may be too deeply rooted in the researcher’s pre-existing beliefs. CBDA takes a step towards the benchmark of empirical, unbiased investigation by relying more on quantified and reproducible findings. Heinrich and Schäfer (2018: 135) emphasise the auxiliary role of the corpus, which should ‘enable hermeneutic researchers to analyze and qualitatively interpret huge amounts of textual data without excessive cherry-picking’. Corpus-based discourse analysis typically involves obtaining concordances of search terms using corpus software, and manually analysing and interpreting them to uncover discourse: ‘a set of meanings, metaphors, representations … that in some way together produce a particular version of events’ (Burr 1995: 48). This has been done for various issues and with different datasets; examples include Koteyko et al. (2008) for infectious diseases in UK media and government discourses, Dayter (2016) for self-representation of ballet apprentices online, Kim (2014) for the representation of North Korea in US media, Messerli and Locher (2021) for humour support in timed comments and Dayter and Rüdiger (2022) for manosphere discourses.
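The concordances on which CBDA relies are usually displayed in keyword-in-context (KWIC) format: each occurrence of the search term is shown with a fixed window of left and right context, so that the analyst can scan many instances at once. Corpus software provides this out of the box; the Python sketch below merely illustrates the principle for a single text, with a character-based window whose width is an arbitrary choice.

```python
import re


def kwic(text, node, width=30):
    """Return keyword-in-context lines for each case-insensitive match of node."""
    pattern = re.compile(r"\b" + re.escape(node) + r"\b", flags=re.IGNORECASE)
    lines = []
    for m in pattern.finditer(text):
        left = text[max(0, m.start() - width):m.start()].replace("\n", " ")
        right = text[m.end():m.end() + width].replace("\n", " ")
        # Right-align the left context so that all matches line up in one column.
        lines.append(f"{left:>{width}} [{m.group(0)}] {right}")
    return lines
```

The interpretative work of CBDA begins only once such lines are read, sorted and categorised by the analyst.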

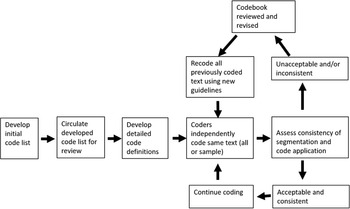

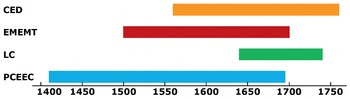

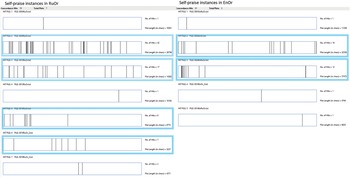

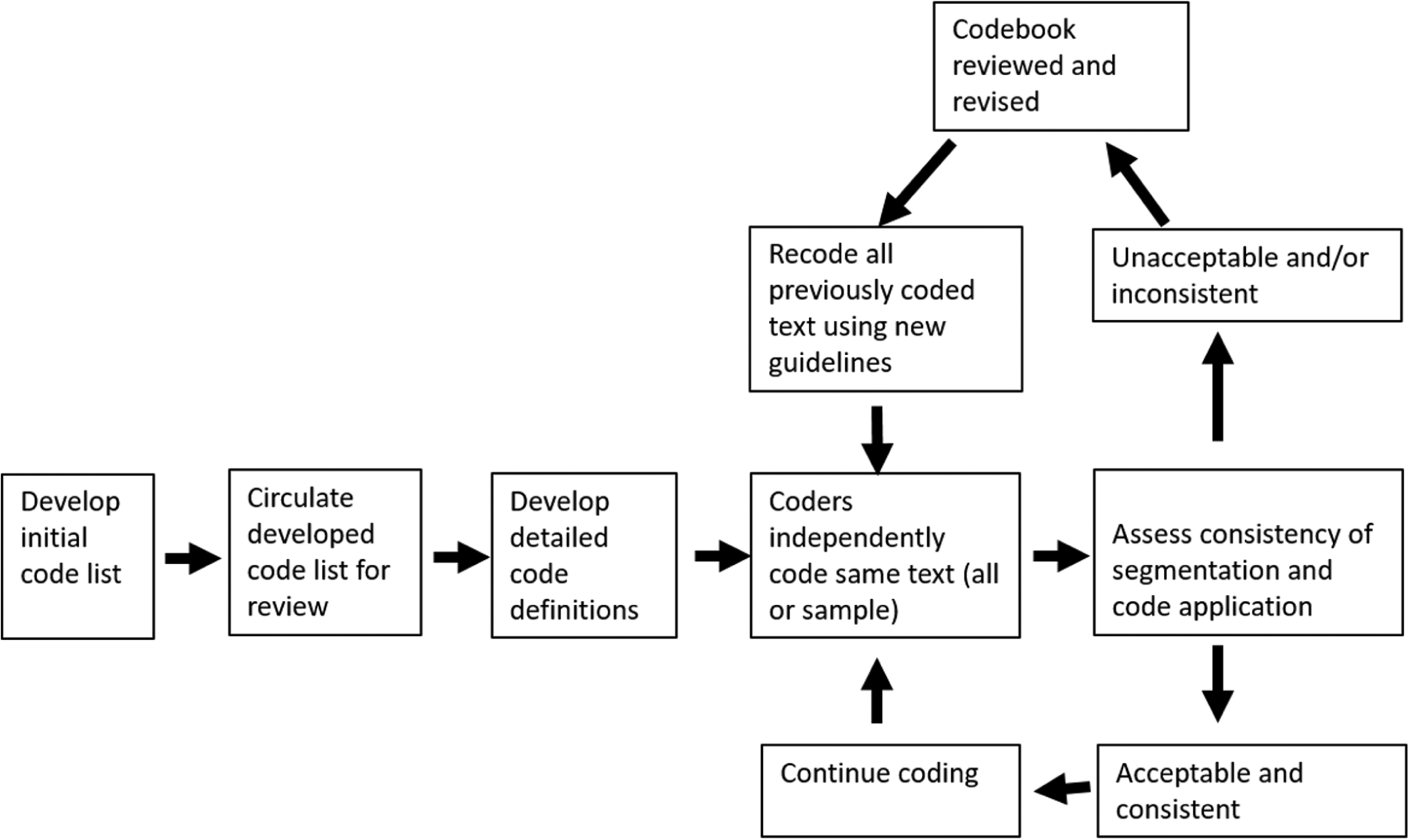

The family of analytical techniques known as corpus-assisted discourse studies (CADS) goes a step further towards incorporating the researcher’s pre-existing taxonomies, informed by literature, intuition and pilot studies. CADS research often relies on the critical discourse analysis framework and addresses politically laden issues, such as the construction of race (Krishnamurthy 1996), discourse about the Iraq war in the press (Marchi & Taylor 2009) or the representation of European citizenship (Bayley & Williams 2012). CADS differs from CBDA with respect to the degree of presuppositions and taxonomies that the researcher brings to the analysis. Love and Baker (2015), for instance, studied British parliamentary arguments against LGBT equality and how they evolved over time. On the one hand, the method they used is very similar to classic CBDA: they started with a keyword analysis and a search for the collocates of the relevant terms to identify the salient lexical items in the debate, and then manually examined the concordances for these items. On the other hand, the choice of search queries was informed by the purpose of the study: analysing the gradual shift from more to less explicit homophobic argumentation in the debates about same-sex marriage. Love and Baker (2015: 84) describe how their pre-existing agenda informed the study: ‘knowing that the speakers … voted against gay marriage, and that public attitudes towards homosexuality had altered enables a fuller interpretation and explanation of their language’.
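Keyword analysis compares the frequency of each word in a study corpus with its frequency in a reference corpus. One widely used keyness statistic is log-likelihood, sketched below in Python. This is an illustration of the general technique and not necessarily the statistic used by Love and Baker (2015); the function expects the word’s frequency in each corpus and the total token count of each corpus.

```python
import math


def log_likelihood(a, b, c, d):
    """Log-likelihood keyness of a word.

    a, b: frequency of the word in the study and reference corpus;
    c, d: total number of tokens in the study and reference corpus.
    """
    e1 = c * (a + b) / (c + d)  # expected frequency in the study corpus
    e2 = d * (a + b) / (c + d)  # expected frequency in the reference corpus
    ll = 0.0
    if a > 0:  # a zero observed frequency contributes nothing to the sum
        ll += a * math.log(a / e1)
    if b > 0:
        ll += b * math.log(b / e2)
    return 2 * ll
```

A value above 3.84 corresponds to p < 0.05 with one degree of freedom; in large corpora, researchers usually apply stricter thresholds or combine the statistic with an effect size measure before treating a word as key.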