Growing concern about online misinformation has given rise to debates about possible interventions to mitigate the spread of fake news. Some common solutions considered by scholars, journalists, and policymakers, such as fact-checking corrections and internet platform regulation, seek to reduce the dissemination of misinformation in the news market. While some argue that these solutions have limited effectiveness or tend to be controversial and unfeasible in the short run (McIntery 2018), less attention has been given to solutions that target citizens’ propensity to believe misinformation without providing corrective information or limiting the spread of information online. In this paper, we investigate a specific type of intervention against misinformation: preventive interventions. Such interventions, including awareness and media literacy campaigns, seek to motivate and train individuals to engage critically with news content and to distinguish between accurate and inaccurate information (Bulger and Davidson 2018). Because they do not attempt to correct specific rumors, which are often tied to political groups and identities, they may be effective at inoculating citizens against misinformation. Despite a growing body of experimental research on their effects against misinformation, field experiments testing these interventions remain uncommon (for exceptions, see Badrinathan 2021; Guess et al. 2020, among others).

We implement two experiments embedded in online survey panels to test the effectiveness of a campaign against misinformation. The first was carried out during the 2020 mayoral elections in São Paulo, Brazil. The intervention was designed in partnership with the largest Brazilian newspaper, Folha de São Paulo, which provided free subscriptions and email content used in the treatment stimuli. The second study, fielded in early 2022, replicated features of the first study and included some additional elements. Our findings show that the interventions decreased rumor acceptance, providing further evidence that awareness and media literacy campaigns can reduce people’s belief in misinformation. The results are particularly striking in the context of Brazil, where misinformation is widespread and evidence of fact-checking effectiveness is mixed (Batista Pereira et al. 2022; Carey et al. 2020; Machado et al. 2019; Porter et al. 2023).

Theory

Preemptive media literacy and awareness interventions seek to increase individuals’ motivation and skills to engage critically with media content (Bulger and Davidson 2018). While these interventions are most commonly carried out in educational settings, the growing use of social media among larger shares of the public has led to a number of initiatives that attempt to reach broader audiences (Lee 2018). Moreover, companies such as WhatsApp and Facebook have recently launched large-scale cross-national campaigns to educate their users about misinformation (Guess et al. 2020).

Much like a vaccine against a disease, preemptive messages aim to protect individuals from being persuaded by misinformation (McGuire 1961). Inoculation can operate through motivational or refutational mechanisms (Banas and Miller 2013). Motivational interventions warn individuals about their potential vulnerability to attitude change in the face of persuasion, whereas refutational preventive interventions provide informational content that undermines the effect of future persuasion attempts.

The theory underlying this type of intervention is consistent with classic dual-process models of attitude change and persuasion (Eagly and Chaiken 1993; Petty and Cacioppo 1996). According to this perspective, human cognition can be divided into intuitive/automatic and deliberate/analytical processing of stimuli and information. As Pennycook and Rand (2019) show, rumor acceptance is largely associated with intuitive/automatic processing. In this sense, preemptive interventions can reduce rumor acceptance by nudging individuals to rely on more deliberate/analytical forms of cognitive processing when they encounter new information. Consequently, preemptive interventions can increase individuals’ willingness to engage in effortful thinking to avoid accepting false information, especially when it reinforces prior beliefs (Pennycook and Rand 2019). These interventions, which can involve a variety of literacy skills for evaluating media content and online information, can promote the skill sets required to engage with media content and resist misinformation (Jones-Jang et al. 2021).

Extant scholarship provides experimental evidence of the effects of media literacy interventions on different skills related to engagement with media content, such as knowledge and self-efficacy (Jeong et al. 2012). Among observational studies, the evidence suggests that media literacy is associated with a lower probability of accepting rumors and conspiracy theories (Jones-Jang et al. 2021). Similar results are observed in experimental assessments focusing on misinformation related to climate change (Cook et al. 2017; Lutzke et al. 2019) and genetically modified foods (Tully et al. 2020).

Recent experimental scholarship (restricted to a few countries and settings) finds that preemptive interventions tend to reduce demand for misinformation, but the findings are somewhat mixed. On the one hand, Banas and Miller (2013) find that such interventions reduce acceptance of 9/11 conspiracy theories among college students. Similarly, Roozenbeek and van der Linden (2019) use an online game as an intervention and find a reduction in rumor acceptance among players across different countries. Guess et al. (2020) show that a Facebook media literacy initiative against misinformation reduces rumor acceptance in the USA and India.

On the other hand, Hameleers (2022) finds statistically significant but weak effects of a media literacy intervention in reducing the perceived accuracy of false news in the Netherlands and the USA. Clayton et al. (2020) find that a general “awareness warning” reduces rumor acceptance in a US sample, but that the effect is stronger when the warning is paired with fact-checking tags. Finally, Badrinathan (2021) does not find evidence that an educational intervention on information processing was sufficient to counter misinformation in India.

Assessing the effectiveness of preemptive messages carries particular normative relevance in the context of this study. In Brazil, as in several developing nations, misinformation spreads primarily through instant messaging applications on cell phones, such as WhatsApp (Rossini et al. 2021), which makes platform regulation and corrections by automated algorithms less viable as large-scale solutions in that context.

Hypotheses

We assess the effectiveness of preemptive interventions against misinformation using two field experiments in Brazil. We expect the intervention described in the next section to reduce rumor acceptance by providing individuals with the opportunity, motivation, and ability to reject inaccurate information. We also expect the intervention to have no effect on subjects’ propensity to reject factually accurate information.Footnote 1

Hypothesis 1 (H1): The preemptive intervention will reduce rumor acceptance among subjects in the treatment group relative to subjects in the control group.

Hypothesis 2 (H2): The preemptive intervention will not reduce acceptance of correct information among subjects in the treatment group relative to subjects in the control group.

Study 1

Study 1 uses a two-wave online survey combined with an experimental intervention conducted during the 2020 mayoral elections in São Paulo, Brazil.Footnote 2 The intervention was designed in partnership with Folha de São Paulo, a newspaper founded in 1921 that has the largest circulation and online presence in Brazil. Data collection was performed by Quaest Consultoria & Pesquisa, which maintains an online panel of approximately 150,000 people in more than 2,000 Brazilian cities. The first wave of the survey included 1,000 respondents and was conducted between November 19 and 24, a few days before the second round of the local elections (November 29). The second wave took place between December 8 and 16, and we were able to re-interview 731 respondents from the first wave.

After completing the first-wave questionnaire, respondents were randomly assigned, via simple randomization, to one of two conditions. Between the two survey waves, the treatment group (n = 575) received the main experimental stimulus: a multidimensional intervention seeking to reduce rumor acceptance. As the first component of the intervention, the treatment group received, at the end of the first-wave survey, a voucher for a free 3-month subscription to Folha de São Paulo. This component offered participants the opportunity to learn to distinguish between factually correct and incorrect information, since the newspaper provides professional news coverage and fact-checks the main rumors circulated in Brazil.Footnote 3 A week after the first survey wave (December 1), the treatment group also received an email from the survey company containing a message about the spread of fake news during the election. The message included a link to a Folha de São Paulo news piece describing the newspaper’s fact-checking tools available to respondents. The email also included a list of eight steps for spotting fake news. The email sought to foster both the motivation and the ability to reject misinformation: the warning message encouraged respondents to be careful with online content, and the eight-step recommendations provided skills to evaluate it.Footnote 4 The control group (n = 425) did not receive any stimuli related to the campaign.
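
Simple randomization assigns each respondent through an independent draw, so the sizes of the two groups are themselves random. The sketch below illustrates this under stated assumptions: the assignment probability `p_treat`, the seed, and the helper name are hypothetical, and the polling company's actual algorithm is not described here.

```python
import random


def simple_randomize(ids, p_treat=0.5, seed=None):
    """Assign each unit to treatment (1) or control (0) by an independent draw.

    Hypothetical helper: p_treat and seed are illustrative assumptions, not the
    values used by the survey company. Group sizes vary from draw to draw,
    unlike complete randomization, which fixes them in advance.
    """
    rng = random.Random(seed)  # seeded generator so the assignment is reproducible
    return {unit: int(rng.random() < p_treat) for unit in ids}


if __name__ == "__main__":
    assignment = simple_randomize(range(1000), p_treat=0.5, seed=2020)
    n_treated = sum(assignment.values())
    print("treated:", n_treated, "control:", len(assignment) - n_treated)
```

Complete randomization (shuffling a fixed list of treatment and control labels) is the usual alternative when exact group sizes are required.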

The survey measured rumor acceptance by presenting four rumors containing factually incorrect information (according to multiple professional fact-checkers) and asking respondents whether they believed each was true or false.Footnote 5 We used a different set of four rumors in each wave of the survey, with one rumor repeated across waves.Footnote 6 We assess whether repeated exposure influences treatment effects by analyzing treatment effects on the gain score (the change in response between the first and second waves) for the repeated rumor.Footnote 7 In addition to the new set of rumors and the repeated rumor, the second wave of Study 1 included questions on trust in the media and attitudes toward fact-checking.Footnote 8

Since subjects assigned to treatment could choose to ignore the newspaper voucher and the information sent via email (Gerber and Green 2012, 131), some did not comply. Of the 575 subjects assigned to treatment, 66 activated the newspaper voucher and completed their registration on the newspaper’s website, and 272 opened the follow-up email.Footnote 9 In total, 304 subjects complied by opening the email, accessing the newspaper’s website, or both.Footnote 10 We present both intent-to-treat (ITT) and complier average causal effect (CACE) estimates to measure the impact of the intervention on all study subjects and on the subgroup that complied with the assigned treatment, respectively.Footnote 11

We find that treatment assignment is statistically associated with a few pre-treatment covariates, including rumor acceptance. We performed three joint significance tests (F, chi-square, and Hotelling’s T²): the F-test was statistically significant at the 0.05 level, and all three had p-values at or below 0.10.Footnote 12 We further examined the randomization algorithm used by the polling company and found no systematic error in the randomization procedure.Footnote 13 We show the main results unadjusted for covariates, but results do not change when we control for pre-treatment variables.Footnote 14
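
The joint F, chi-square, and Hotelling tests check all covariates at once. As a simpler illustration of the same logic, the sketch below runs a randomization-inference (permutation) check for a single covariate: it reshuffles the observed assignment labels and asks how often a reshuffled difference in means is at least as large as the observed one. This is a swapped-in single-covariate technique, not the procedure reported in the paper, and the helper names are hypothetical.

```python
import random
from statistics import mean


def diff_in_means(x, z):
    """Mean of x among treated (z == 1) minus mean among controls (z == 0)."""
    treated = [xi for xi, zi in zip(x, z) if zi == 1]
    control = [xi for xi, zi in zip(x, z) if zi == 0]
    return mean(treated) - mean(control)


def permutation_balance_p(x, z, n_perm=2000, seed=0):
    """Two-sided permutation p-value for imbalance in one covariate x.

    Hypothetical helper for illustration. Shuffling the observed labels keeps
    the group sizes fixed, so the test conditions on the realized split.
    """
    rng = random.Random(seed)
    observed = abs(diff_in_means(x, z))
    z_perm = list(z)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(z_perm)
        # small tolerance so exact ties are not lost to floating-point error
        if abs(diff_in_means(x, z_perm)) >= observed - 1e-12:
            extreme += 1
    # add-one correction keeps the p-value strictly positive
    return (extreme + 1) / (n_perm + 1)
```

A small p-value flags a covariate whose imbalance would be unusual under the realized assignment; with many covariates, a joint test such as those reported above avoids multiple-testing problems.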

We were able to re-interview about 73% of our first-wave sample. However, we found evidence of differential panel attrition: respondents assigned to treatment were more likely to drop out before the second wave of the survey.Footnote 15 To account for potential biases from differential attrition, we also assess the results using different bounding approaches (Gerber and Green 2012, 226).Footnote 16
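
The specific bounding procedures are reported in the footnotes and supplement. As one example of this family, the sketch below computes extreme value (Manski-style) bounds on the ITT for an outcome known to lie in [0, 1], such as the rescaled rumor acceptance scale: missing second-wave outcomes are filled with the least and most favorable values in each arm. The helper name and the use of `None` to mark attriters are assumptions for illustration.

```python
from statistics import mean


def extreme_value_bounds(y, z, y_min=0.0, y_max=1.0):
    """Bounds on the ITT when some outcomes are missing because of attrition.

    Hypothetical helper. y: outcomes, with None for respondents lost between
    waves; z: assignment (1 = treatment, 0 = control).
    Lower bound: missing treated outcomes set to y_min and missing control
    outcomes set to y_max. The upper bound does the reverse.
    """
    def arm_mean(arm, fill):
        return mean([fill if yi is None else yi
                     for yi, zi in zip(y, z) if zi == arm])

    lower = arm_mean(1, y_min) - arm_mean(0, y_max)
    upper = arm_mean(1, y_max) - arm_mean(0, y_min)
    return lower, upper
```

Extreme value bounds are often wide; trimming bounds, which rely on a monotonicity assumption about who attrits, are a common narrower alternative.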

We present our results for two main dependent variables.Footnote 17 The first is an additive scale of rumor acceptance for the four rumors presented in the second wave of the survey (each coded as 0 for rejection and 1 for acceptance). The variable is re-scaled from 0 to 1 and indicates the extent to which subjects accept false rumors as true. The second dependent variable indicates the change in response to the repeated rumor, taking values −1 (believed in wave 1 but not in wave 2), 0 (did not change response between waves), and 1 (did not believe in wave 1 and believed in wave 2).
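
A minimal sketch of the two Study 1 outcomes as described above, assuming each rumor response is already coded 0 (rejected) or 1 (accepted). The function names are hypothetical.

```python
def rumor_acceptance_scale(responses):
    """Additive scale of accepted rumors, rescaled to run from 0 to 1."""
    if not responses or any(r not in (0, 1) for r in responses):
        raise ValueError("responses must be a non-empty list of 0/1 codes")
    return sum(responses) / len(responses)


def repeated_rumor_change(wave1, wave2):
    """-1: believed in wave 1 only; 0: unchanged; 1: believed in wave 2 only."""
    if wave1 not in (0, 1) or wave2 not in (0, 1):
        raise ValueError("responses must be coded 0 or 1")
    return wave2 - wave1


# Example: accepted two of the four wave-2 rumors; stopped believing the repeated one.
print(rumor_acceptance_scale([1, 0, 1, 0]))  # 0.5
print(repeated_rumor_change(1, 0))           # -1
```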

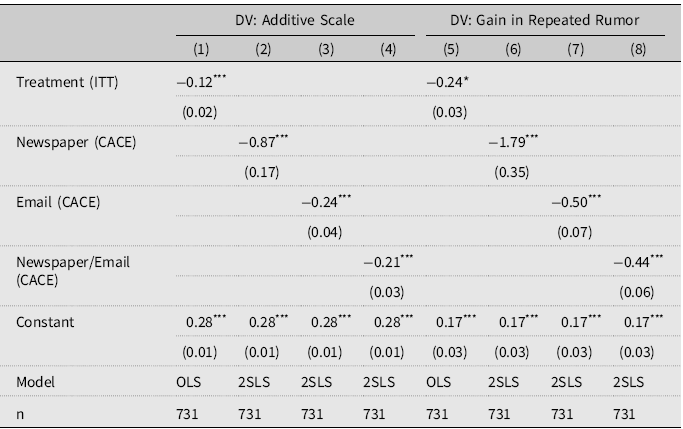

We estimate the effect of treatment assignment, or intent-to-treat (ITT) effect, using ordinary least squares (OLS) models with robust standard errors. To estimate complier average causal effects (CACE), we use two-stage least squares (2SLS) regressions with robust standard errors, in which each of three measures of compliance is treated as the endogenous regressor and treatment assignment serves as its instrument in the first-stage regression (Angrist et al. 1996; Gerber and Green 2012). The three compliance measures capture whether subjects accessed the newspaper’s website (activated the voucher and registered), whether they opened the follow-up email, and whether they complied by either accessing the newspaper or opening the email. The results are presented in Table 1.
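
With a single binary instrument (assignment) and no covariates, the 2SLS point estimate of the CACE equals the Wald ratio: the ITT on the outcome divided by the ITT on treatment receipt, which under one-sided noncompliance is the share of compliers. The sketch below shows that arithmetic on made-up data. It reproduces point estimates only, not the robust standard errors in Table 1, and the function names are hypothetical.

```python
from statistics import mean


def itt(y, z):
    """Intent-to-treat effect: mean outcome if assigned to treatment minus control."""
    treated = [yi for yi, zi in zip(y, z) if zi == 1]
    control = [yi for yi, zi in zip(y, z) if zi == 0]
    return mean(treated) - mean(control)


def cace_wald(y, d, z):
    """CACE as the ITT on the outcome divided by the ITT on treatment receipt d (0/1)."""
    itt_d = itt(d, z)  # equals the compliance rate when controls cannot take treatment
    if itt_d == 0:
        raise ValueError("assignment does not change treatment receipt")
    return itt(y, z) / itt_d


# Made-up data: 4 assigned to treatment (2 comply), 4 controls.
z = [1, 1, 1, 1, 0, 0, 0, 0]
d = [1, 1, 0, 0, 0, 0, 0, 0]
y = [0.0, 0.25, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
print(itt(y, z))           # -0.1875
print(cace_wald(y, d, z))  # -0.375
```

Because only half of those assigned to treatment comply in this toy example, the CACE is twice the ITT.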

The intent-to-treat estimates show that the intervention reduced rumor acceptance by 0.12 points on average on the additive scale (column 1)Footnote 18 and by 0.24 points on the change in acceptance of the repeated rumor (column 5).Footnote 19 The complier average causal effects also show the expected negative effects for both outcomes. All of our measures of compliance, whether subjects used the newspaper subscription (columns 2 and 6), opened the follow-up email (columns 3 and 7), or the combined measure (columns 4 and 8), indicate that the intervention decreased rumor acceptance among compliers. Overall, our results are consistent with the idea that the intervention makes subjects more likely to reject false rumors.

Regarding moderation, the ITT estimates show no consistent pattern, for either the additive scale or the repeated rumor, across pre-treatment levels of political knowledge, trust in the media, political interest, and support for the President. Hence, the intervention was not significantly less effective among subgroups that could theoretically be more prone to reject it.Footnote 20 We also find that the intervention has a significant effect on rumors favorable to President Bolsonaro but not on those against him. However, because only two rumors of each type were used, these results should be interpreted with caution, since they could depend on specific rumor characteristics. Finally, the intervention did not affect post-treatment levels of political knowledge, attitudes toward fact-checking corrections, or trust in the media.Footnote 21
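
One way to read the moderation analysis, assuming a binary moderator (for example, high versus low political interest coded 1/0), is as a difference between subgroup ITTs. In an OLS regression of the outcome on assignment, the moderator, and their product, the interaction coefficient equals this difference. The paper's moderators may be measured differently, and the helper names below are hypothetical.

```python
from statistics import mean


def subgroup_itt(y, z, m, level):
    """ITT within the subgroup whose binary moderator m equals level (hypothetical helper)."""
    treated = [yi for yi, zi, mi in zip(y, z, m) if zi == 1 and mi == level]
    control = [yi for yi, zi, mi in zip(y, z, m) if zi == 0 and mi == level]
    return mean(treated) - mean(control)


def itt_difference(y, z, m):
    """ITT when m == 1 minus ITT when m == 0 (the z-by-m interaction in a saturated OLS)."""
    return subgroup_itt(y, z, m, 1) - subgroup_itt(y, z, m, 0)
```

A difference close to zero, relative to its standard error, corresponds to the absence of moderation described above.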

Study 2

We conducted a second study using a two-wave online survey combined with a similar experimental intervention during the early months of 2022 in São Paulo. The first wave included 1,037 respondents and was conducted between February 2 and 14. The second wave occurred between February 23 and March 11, and re-interviewed 694 respondents.Footnote 22

Study 2 differs from Study 1 in a few important ways. First, it was not conducted during an electoral campaign. Second, because we did not partner with Folha de São Paulo in Study 2, the treatment group received only the email used as part of the treatment in the first study, not the newspaper subscription voucher. Third, to better parse out the roles of ability and motivation in rejecting rumors, we also included real news pieces as an additional dependent variable. The study included a larger battery of seven false rumors, three of which repeated between waves, and a battery of three factually accurate news pieces, one of which repeated between waves. Finally, the programming and randomization of Study 2 were conducted on the Qualtrics platform, rather than on the polling company’s platform, to avoid the imbalances we found in Study 1.Footnote 23

Table 1. Intent-to-Treat (ITT) and Complier Average Treatment Effects (CACE) of Intervention on Rumor Acceptance in Study 1

*** p < 0.01. **p < 0.05. *p < 0.10. Robust standard errors are in parentheses.

Variables instrumented in 2SLS models: Newspaper, Email, Newspaper/Email.

We do not find statistical associations between treatment assignment and pre-treatment covariates in Study 2. Of the wave 1 respondents, 521 were assigned to the treatment group (email) and 516 to the control group (no message). We also do not find evidence of differential attrition, as subjects in both experimental groups were equally likely to drop out between waves 1 and 2 of the survey.Footnote 24 With respect to compliance, 314 subjects in the treatment group opened the email, 275 of whom responded in wave 2. Overall, we re-interviewed 694 respondents in the second wave (67%).

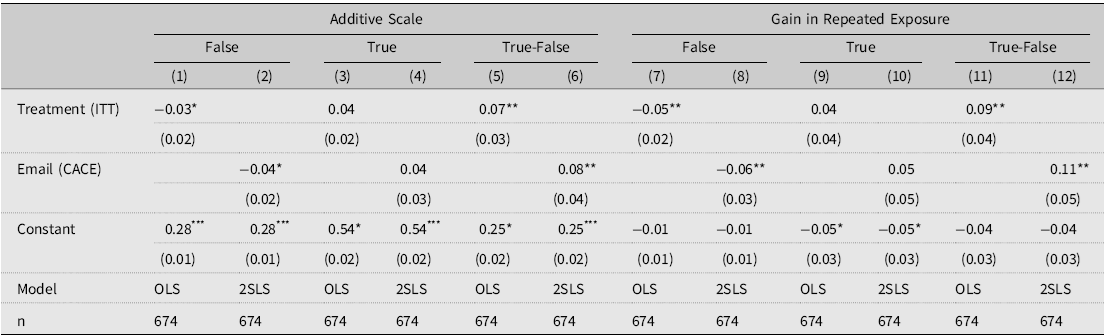

Table 2 shows ITT and CACE estimates for Study 2, unadjusted for covariates.Footnote 25 As in Study 1, the first dependent variable is the additive scale of rumor acceptance for the seven rumors presented in the second wave of Study 2 (each coded as 0 for rejection and 1 for acceptance). This variable is rescaled from 0 to 1 and indicates the extent to which subjects accept false rumors as true. The second is the additive scale of acceptance of the three true news pieces included in Study 2. The third is the difference between the additive scales of acceptance of true and false stories. Columns 1–6 show the estimates for these three additive scales. Columns 7–12 show the estimates for the change in each of the three measures between waves 1 and 2, using only the stories that repeated between waves. This variable takes the value −1 (believed in wave 1 but not in wave 2), 0 (did not change response between waves), or 1 (did not believe in wave 1 and believed in wave 2).
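
A minimal sketch of the third Study 2 outcome, assuming the same 0/1 coding. The direction (true-news acceptance minus false-rumor acceptance) is inferred from the results discussion, where lower rumor acceptance combined with unchanged true-news acceptance yields a positive net effect; the function names are hypothetical.

```python
def acceptance_scale(responses):
    """Share of stories accepted as true, on a 0-1 scale (responses coded 0/1)."""
    if not responses or any(r not in (0, 1) for r in responses):
        raise ValueError("responses must be a non-empty list of 0/1 codes")
    return sum(responses) / len(responses)


def discernment(true_news, false_rumors):
    """True-news acceptance minus false-rumor acceptance; ranges from -1 to 1."""
    return acceptance_scale(true_news) - acceptance_scale(false_rumors)


# Example: accepts 2 of 3 true stories and 1 of 7 false rumors.
print(round(discernment([1, 1, 0], [0, 0, 1, 0, 0, 0, 0]), 3))  # 0.524
```

Because the two scales are rescaled to the same 0 to 1 range before subtraction, the measure weights true and false stories equally even though there are more rumors than true stories.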

Table 2. Intent-to-Treat (ITT) and Complier Average Treatment Effects (CACE) of Intervention on Rumor Acceptance in Study 2

*** p < 0.01. **p < 0.05. *p < 0.10. Robust standard errors are in parentheses.

Variable instrumented in 2SLS models: Email.

For the wave 2 rumor acceptance scale, the intent-to-treat estimate is statistically significant only at the 10% level (p < 0.06, column 1). The same holds for the CACE estimate (p < 0.06, column 2). The treatment does not affect subjects’ acceptance of real news (columns 3 and 4). More notably, the treatment has a significant effect (both ITT and CACE) on the difference in acceptance between real and false stories (columns 5 and 6), which suggests that the intervention does not reduce rumor acceptance merely by fostering generalized skepticism toward news content. With respect to the repeated stories, both ITT and CACE estimates show that the treatment reduces rumor acceptance (columns 7 and 8) while having no effect on true news acceptance (columns 9 and 10), which results in a positive net effect (columns 11 and 12).Footnote 26

All in all, Studies 1 and 2 yield similar findings: preemptive interventions are effective at reducing belief in false stories. Although the Study 2 estimates are smaller than, and statistically different from, those in Study 1,Footnote 27 they are qualitatively similar.Footnote 28 Furthermore, the Study 2 finding that the intervention did not increase skepticism toward true news stories is consistent with Hypothesis 2 and provides additional insight into the effectiveness of the intervention.
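
The test behind the cross-study comparison is not detailed here. One standard approach, assuming the two studies' estimates are independent and approximately normal, is a z-test on their difference with standard error sqrt(se1² + se2²). The helper name is hypothetical, and the inputs in the example are placeholders, not estimates from Table 1 or Table 2.

```python
import math


def compare_independent_estimates(b1, se1, b2, se2):
    """z-test for the difference between two estimates from independent samples.

    Hypothetical helper. Returns (difference, z statistic, two-sided normal p-value).
    """
    diff = b1 - b2
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)  # variances add for independent estimates
    z = diff / se_diff
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return diff, z, p


# Placeholder inputs only, not the paper's estimates.
diff, z, p = compare_independent_estimates(-0.30, 0.10, -0.05, 0.05)
print(round(diff, 2), round(z, 2), round(p, 3))
```

The independence assumption is reasonable for two separately drawn samples; it would not hold for comparing, say, effects on two rumor types within the same sample.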

As in Study 1, we do not find that the treatment affects trust in the media.Footnote 29 The intervention again had a statistically significant effect on rumors favorable to President Bolsonaro, but not on those against him; however, in Study 2 these two estimates are not statistically different from each other. Nor do we observe moderation by variables such as education, political interest, media trust, and support for the president, consistent with the patterns observed in Study 1.Footnote 30

Conclusion

Interventions to lower citizens’ propensity to accept false rumors can be effective, as our findings suggest. The intervention during the 2020 mayoral elections in São Paulo reduced rumor acceptance among those assigned to the treatment group, as well as among those who complied with the attempt to treat. Moreover, the results from the second study show that the intervention reduced belief in fake news without reducing belief in real news.

It is important to note that our design does not directly test the different mechanisms through which preemptive interventions may inoculate individuals against misinformation. The intervention may reduce belief in misinformation because individuals gain access to resources needed to identify false stories, become more motivated to question and investigate their truthfulness, or become more skilled at detecting false claims. Each of these dimensions has distinct implications for how preemptive interventions can be designed. Future scholarship can therefore contribute to the debate by assessing how effectively distinct types of interventions foster each of these mechanisms in the political learning process.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/XPS.2023.11

Data availability statement

Support for Study 2 was provided by Emory University. The data, code, and any additional materials required to replicate all analyses in this article are available at the Journal of Experimental Political Science Dataverse within the Harvard Dataverse Network, at https://doi.org/10.7910/DVN/ZHWIWG.

Acknowledgements

We would like to thank Eduardo Scolese and Fábio Takahashi from Folha de São Paulo and Quaest’s operations team for the partnership in Study 1. We are also grateful to Cindy Kam and participants in the INSPER seminar series for their helpful comments and advice, and to João Pedro Oliveira dos Santos for excellent research assistance. Natália S. Bueno is a research associate at FGV/CEPESP and is grateful for FGV's support in conducting this study.

Competing interest

One of the authors (Felipe Nunes) is also the CEO of Quaest Consultoria & Pesquisa, the company that conducted the two studies presented in the paper. Study 1 resulted from a partnership between Quaest and the newspaper Folha de São Paulo and was fully funded by Quaest. Quaest did not receive any payment from Folha de São Paulo or the universities involved for conducting the two waves of the study, and Felipe Nunes did not receive any financial return from this research. Study 2 did not involve the partnership with Folha de São Paulo and was fully funded by research funds from Emory University.

Ethics statement

This research adheres to APSA’s Principles and Guidance for Human Subjects Research. Both studies were reviewed and approved by Institutional Review Boards at two U.S. institutions (UNCC and Emory University) and one Brazilian institution (Fundação Getúlio Vargas). Nevertheless, exposing subjects to misinformation raises ethical issues. We addressed these concerns by taking the following precautions: we used false stories that had circulated widely in Brazil and were not fabricated by the researchers, and all participants were debriefed at the end of the study. For more information on subjects and the recruitment process, see Section 22 of the Supplemental Appendix. A CONSORT flow diagram is included in Section 23 of the Supplemental Appendix.

Importantly, all subjects consented to take the survey, and it did not contain deception. The researchers did not have access to identifiable data.