Dynamic feature-based deep reinforcement learning for flow control of circular cylinder with sparse surface pressure sensing

Published online by Cambridge University Press: 28 May 2024

Abstract

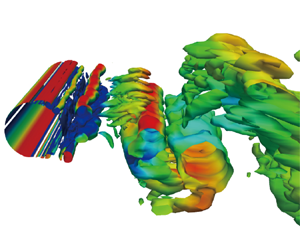

This study proposes a self-learning algorithm for closed-loop control of a circular cylinder wake. Taking deep reinforcement learning (DRL) as the starting point, it targets lower drag and smaller lift fluctuations under the additional challenge of sparse sensor information. DRL performance is significantly improved by lifting the sensor signals to dynamic features (DFs), which predict future flow states. The resulting DF-based DRL (DF-DRL) learns a feedback control law directly in the plant, without requiring a dynamic model. Results show that the drag coefficient achieved by the DF-DRL model is 25 % lower than that of the vanilla model based on direct sensor feedback. More importantly, using only one surface pressure sensor, DF-DRL reduces the drag coefficient by approximately 8 %, matching state-of-the-art performance, at Reynolds number $Re = 100$, and significantly mitigates lift coefficient fluctuations. Hence, DF-DRL allows the flow to be sensed sparsely without degrading control performance. The method also exhibits strong robustness in more complex flow scenarios, reducing the drag coefficient by 32.2 % and 46.55 % at $Re = 500$ and $1000$, respectively. Additionally, the drag coefficient decreases by 28.6 % in a three-dimensional turbulent flow at $Re = 10\,000$. Since surface pressure is easier to measure than flow velocity in realistic settings, this study provides a valuable reference for the experimental design of active flow control of a circular cylinder based on wall pressure signals, an essential step toward intelligent control of realistic multi-input multi-output systems.
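The abstract does not specify how the dynamic features are constructed or what reward the agent maximises. As a rough illustration only, the sketch below substitutes a plain time-delay embedding of a single pressure signal (one common way to give a sparse sensor access to the flow dynamics) and a drag-and-lift reward of the kind often used in DRL cylinder control. `DelayEmbedding`, `reward`, `n_delays` and the weight `lift_weight = 0.2` are hypothetical names and values, not the authors' method.

```python
from collections import deque

import numpy as np


class DelayEmbedding:
    """Hypothetical helper: lift a scalar sensor signal to a vector of its
    most recent `n_delays` samples (newest first), zero-padded until enough
    history has been collected. A stand-in for the paper's DFs, not the
    authors' construction."""

    def __init__(self, n_delays: int):
        if n_delays < 1:
            raise ValueError("n_delays must be >= 1")
        self.n_delays = n_delays
        # appendleft on a bounded deque drops the oldest sample from the right
        self.buffer = deque(maxlen=n_delays)

    def update(self, p: float) -> np.ndarray:
        """Push a new pressure sample and return the lifted feature vector."""
        self.buffer.appendleft(float(p))
        features = np.zeros(self.n_delays)
        features[: len(self.buffer)] = list(self.buffer)
        return features


def reward(c_d: float, c_l: float, c_d0: float, lift_weight: float = 0.2) -> float:
    """Hypothetical reward: drag reduction relative to the uncontrolled
    baseline c_d0, penalised by the lift magnitude. c_d and c_l are assumed
    to be averaged over one actuation step; lift_weight is an assumed value."""
    return (c_d0 - c_d) - lift_weight * abs(c_l)


if __name__ == "__main__":
    emb = DelayEmbedding(n_delays=3)
    for p in [1.0, 2.0, 3.0, 4.0]:
        obs = emb.update(p)  # observation that would be fed to the policy
    print(obs)
    print(reward(c_d=3.0, c_l=0.5, c_d0=3.2))
```

Stacking past samples lets a memoryless policy infer phase and growth rate of the shedding cycle from a single sensor, which a single instantaneous reading cannot convey; a learned predictor of future states, as the abstract describes, would replace the raw history with a more compact representation.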

- Type: JFM Papers
- Copyright: © The Author(s), 2024. Published by Cambridge University Press