1 Introduction

Automatic enrichment of semantic resources is an important problem (Biemann Reference Biemann2005; Jurgens and Pilehvar Reference Jurgens and Pilehvar2016) as it attempts to alleviate the extremely costly process of manual lexical resource (LR) construction. Distributional semantics (Turney and Pantel Reference Turney and Pantel2010; Baroni, Dinu and Kruszewski Reference Baroni, Dinu and Kruszewski2014; Clark Reference Clark, Lappin and Fox2015) provides an alternative automatic meaning representation framework that has been shown to benefit many text-understanding applications.

Recent years have witnessed an impressive amount of work on combining the symbolic semantic information available in manually constructed LRs with distributional information, where words are usually represented as dense numerical vectors, a.k.a. embeddings. Examples of such approaches include methods that modify the Skip-gram model (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013) to learn sense embeddings (Chen, Liu and Sun 2014) based on the sense inventory of WordNet, methods that learn embeddings of synsets as given in an LR (Rothe and Schütze Reference Rothe and Schütze2015) or methods that acquire word vectors by applying random walks over LRs to learn a neural language model (Goikoetxea, Soroa and Agirre 2015). Besides, alternative approaches like NASARI (Camacho-Collados, Pilehvar and Navigli 2015b) and MUFFIN (Camacho-Collados, Pilehvar and Navigli Reference Camacho-Collados, Pilehvar and Navigli2015a) looked at ways to produce joint lexical and semantic vectors for a common representation of word senses in text and in LRs. Retrofitting approaches, e.g. Faruqui et al. (Reference Faruqui, Dodge, Jauhar, Dyer, Hovy and Smith2015), efficiently ‘consume’ LRs as input in order to improve the quality of word embeddings, but do not add anything to the resource itself. Other approaches, such as AutoExtend (Rothe and Schütze Reference Rothe and Schütze2015), NASARI and MUFFIN, learn vector representations for existing synsets that can be added to the resource, however are not able to add missing senses discovered from text.

In these contributions, the benefits of such hybrid knowledge sources for tasks in computational semantics such as semantic similarity and Word Sense Disambiguation (WSD) (Navigli Reference Navigli2009) have been extensively demonstrated. However, none of the existing approaches, to date, have been designed to use distributional information for the enrichment of lexical semantic resources, i.e. adding new symbolic entries.

In this article, we consequently set out to filling this gap by developing a framework for enriching lexical semantic resources with distributional semantic models. The goal of such framework is the creation of a resource that brings together the ‘best of both worlds’, namely the precision and interpretability from the lexicographic tradition that describes senses and semantic relations manually, and the versatility of data-driven, corpus-based approaches that derive senses automatically.

An LR enriched with additional knowledge induced from text can boost the performance of natural language understanding tasks like WSD or Entity Linking (Mihalcea and Csomai Reference Mihalcea and Csomai2007; Rospocher et al. Reference Rospocher, van Erp, Vossen, Fokkens, Aldabe, Rigau, Soroa, Ploeger and Bogaard2016), where it is crucial to have a comprehensive list of word senses as well as the means to assign the correct of many possible senses for a given word in context.

Consider, for instance, the following sentence:

Regulator of calmodulin signaling (RCS) knockout mice display anxiety-like behavior and motivational deficits.Footnote 1

No synset for ‘RCS’ can be found in either WordNet (Fellbaum Reference Fellbaum1998) or BabelNet (Navigli and Ponzetto Reference Navigli and Ponzetto2012a), yet it is distributionally related to other biomedical concepts and thus can help to disambiguate the ambiguous term mice to its ‘animal’ meaning, as opposed to the ‘computer peripheral device’.

Our approach yields a hybrid resource that combines symbolic and statistical meaning representations while (i) staying purely on the lexical-symbolic level, (ii) explicitly distinguishing word senses and (iii) being human readable. These properties are crucial to be able to embed such a resource in an explicit semantic data space such as, for instance, the Linguistic Linked Open Data ecosystem (Chiarcos, Hellmann and Nordhoff 2012). According to Norvig (Reference Norvig2016), the semantic web and natural language understanding are placed at the heart of current efforts to understand the web on a large scale.

We take the current line of research on hybrid semantic representations one step forward by presenting a methodology for inducing distributionally based sense representations from text, and for linking them to a reference LR. Central to our method is a novel sense-based distributional representation that we call proto-conceptualization (PCZ). A PCZ is a repository of word senses induced from text, where each sense is represented with related senses, hypernymous senses and aggregated clues for contexts in which the respective sense occurs in text. Besides, to further improve interpretability, each sense has an associated image. We build a bridge between the PCZ and lexical semantic networks by establishing a mapping between these two kinds of resources.Footnote 2 This results in a new knowledge resource that we refer to as hybrid aligned resource (HAR); here, senses induced from text are aligned to a set of synsets from a reference LR, whereas induced senses that cannot be matched are included as additional synsets. By linking our distributional representations to a repository of symbolically encoded knowledge, we are able to complement wide-coverage statistical meaning representations with explicit relational knowledge as well as to extend the coverage of the reference LR based on the senses induced from a text collection. The main contributions of this article are listed as follows:

-

• A framework for enriching lexical semantic resources: We present a methodology for combining information from distributional semantic models with manually constructed lexical semantic resources.

-

• A hybrid lexical semantic resource: We apply our framework to produce a novel hybrid resource obtained by linking disambiguated distributional lexical semantic networks to WordNet and BabelNet.

-

• Applications of the hybrid resource: Besides intrinsic evaluations of our approach, we test the utility of our resource extrinsically on the tasks of WSD and taxonomy induction, demonstrating the benefits of combining distributional and symbolic lexical semantic knowledge.

The remainder of this article is organized as follows: we first review related work in Section 2 and provide an overview of our framework to build a HAR from distributional semantic vectors and a reference knowledge graph in Section 3. Next, we provide details on our methodology to construct PCZs and to link them to an LR in Sections 4 and 5.1, respectively. In Section 6, we benchmark the quality of our resource in different evaluation settings, including an intrinsic and an extrinsic evaluation on the task of knowledge-based WSD using a dataset from a SemEval task. Section 7 provides two application-based evaluations that demonstrate how our hybrid resource can be used for taxonomy induction. We conclude with final remarks and future directions in Section 8.

2 Related work

2.1 Automatic construction of lexical semantic resources

In the past decade, large efforts have been undertaken to research the automatic acquisition of machine-readable knowledge on a large scale by mining large repositories of textual data (Banko et al. Reference Banko, Cafarella, Soderland, Broadhead and Etzioni2007; Carlson et al. Reference Carlson, Betteridge, Kisiel, Settles, Hruschka and Mitchell2010; Fader, Soderland and Etzioni Reference Fader, Soderland and Etzioni2011; Faruqui and Kumar Reference Faruqui and Kumar2015). At this, collaboratively constructed resources have been exploited, used either in isolation (Bizer et al. Reference Bizer, Lehmann, Kobilarov, Auer, Becker, Cyganiak and Hellmann2009; Ponzetto and Strube Reference Ponzetto and Strube2011; Nastase and Strube Reference Nastase and Strube2013) or complemented with manually assembled knowledge sources (Suchanek, Kasneci and Weikum Reference Suchanek, Kasneci and Weikum2008; Gurevych et al. 2012; Navigli and Ponzetto Reference Navigli and Ponzetto2012a; Hoffart et al. 2013). As a result of this, recent years have seen a remarkable renaissance of knowledge-rich approaches for many different artificial intelligence tasks (Hovy, Navigli and Ponzetto Reference Hovy, Navigli and Ponzetto2013). Knowledge contained within these very large knowledge repositories, however, has major limitations in that these resources typically do not contain any linguistically grounded probabilistic representation of concepts, instances and their attributes – namely, the bridge between wide-coverage conceptual knowledge and its instantiation within natural language texts. While there are large-scale LRs derived from large corpora such as ProBase (Wu et al. Reference Wu, Li, Wang and Zhu2012), these are usually not sense aware but conflate the notions of term and concept. With this work, we provide a framework that aims at augmenting any of these wide-coverage knowledge sources with distributional semantic information, thus extending them with text-based contextual information.

Another line of research has looked at the problem of Knowledge Base Completion (Nickel et al. Reference Nickel, Murphy, Tresp and Gabrilovich2016). Many approaches to Knowledge Base Completion focus on exploiting other KBs (Wang et al. Reference Wang, Li, Wang and Tang2012; Bryl and Bizer Reference Bryl and Bizer2014) for acquiring additional knowledge, or rely on text corpora – either based on distant supervision (Snow, Jurafsky and Ng Reference Snow, Jurafsky and Ng2006; Mintz et al. Reference Mintz, Bills, Snow and Jurafsky2009; Aprosio, Giuliano and Lavelli Reference Aprosio, Giuliano and Lavelli2013) or by rephrasing KB relations as queries to a search engine (West et al. Reference West, Gabrilovich, Murphy, Sun, Gupta and Lin2014) that returns results from the web as corpus. Alternative methods primarily rely on existing information in the KB itself (Bordes et al. Reference Bordes, Weston, Collobert and Bengio2011; Jenatton et al. Reference Jenatton, Roux, Bordes and Obozinski2012; Socher et al. Reference Socher, Chen, Manning and Ng2013; Klein, Ponzetto and Glavaš Reference Klein, Ponzetto and Glavaš2017) to simultaneously learn continuous representations of KB concepts and KB relations by exploiting the KB structure as the ground truth for supervision, inferring additional relations from existing ones. Lexical semantic resources and text are synergistic sources, as shown by complementary work from Faruqui et al. (Reference Faruqui, Dodge, Jauhar, Dyer, Hovy and Smith2015), who improve the quality of semantic vectors based on lexicon-derived relational information.

Here, we follow this intuition of combining structured knowledge resources with distributional semantic information from text, but focus instead on providing hybrid semantic representations for KB concepts and entities, as opposed to the classification task of Knowledge Base Completion that aims at predicting additional semantic relations between known entities.

2.2 Combination of distributional semantics with lexical resources

Several prior approaches combined distributional information extracted from text with information available in LRs like e.g. WordNet. This includes a model (Yu and Dredze Reference Yu and Dredze2014) to learn word embeddings based on lexical relations of words from WordNet and PPDB (Ganitkevitch, Van Durme and Callison-Burch Reference Ganitkevitch, Van Durme and Callison-Burch2013). The objective function of this model combines that of the skip-gram model (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013) with a term that takes into account lexical relations of target words. In work aimed at retrofitting word vectors (Faruqui et al. Reference Faruqui, Dodge, Jauhar, Dyer, Hovy and Smith2015), a related approach was proposed that performs post-processing of word embeddings on the basis of lexical relations from LRs. Finally, Pham, Lazaridou and Baroni (Reference Pham, Lazaridou and Baroni2015) also aim at improving word vector representations by using lexical relations from WordNet, targeting similar representations of synonyms and dissimilar representations of antonyms. While all these three approaches show excellent performance on word relatedness evaluations, they do not model word senses – in contrast to other work aimed instead at learning sense embeddings using the word sense inventory of WordNet (Jauhar, Dyer and Hovy Reference Jauhar, Dyer and Hovy2015).

A parallel line of research has recently focused on learning unified statistical and symbolic semantic representations. Approaches aimed at providing unified semantic representations from distributional information and LRs have accordingly received an increasing level of attention (Chen et al. Reference Chen, Liu and Sun2014; Goikoetxea, Soroa and Agirre Reference Goikoetxea, Soroa and Agirre2015; Rothe and Schütze Reference Rothe and Schütze2015; Camacho-Collados et al. Reference Camacho-Collados, Pilehvar and Navigli2015b; Nieto Piña and Johansson Reference Nieto Piña and Johansson2016), inter alia (cf. also our introductory discussion in Section 1) and hybrid meaning representations have been shown to benefit challenging semantic tasks such as WSD and semantic similarity at word level and text level.

All these diverse contributions indicate the benefits of hybrid knowledge sources for learning word and sense representations; here, we further elaborate along this line of research and develop a new hybrid resource that combines information from the knowledge graph with distributional sense representations that are human readable and easy to interpret, in contrast to dense vector representations, a.k.a. word embeddings like GloVe (Pennington, Socher and Manning 2014) or word2vec (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013). As a result of this, we are able to provide, to the best of our knowledge, the first hybrid knowledge resource that is fully integrated and embedded within the semantic web ecosystem provided by the Linguistic Linked Open Data cloud (Chiarcos, Hellmann and Nordhoff Reference Chiarcos, Hellmann, Nordhoff, Chiarcos, Nordhoff and Hellmann2012). Note that this complementary to recent efforts aimed at linking natural language expressions in text with semantic relations found within LOD knowledge graphs (Krause et al. Reference Krause, Hennig, Gabryszak, Xu and Uszkoreit2015), in that we focus instead on combining explicit semantic information with statistical, distributional semantic representations of concepts and entities into an augmented resource.

3 Enriching lexical semantic resources with distributional semantics

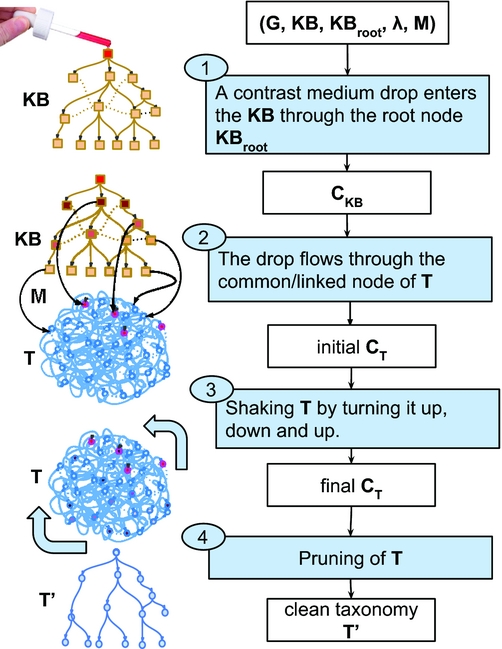

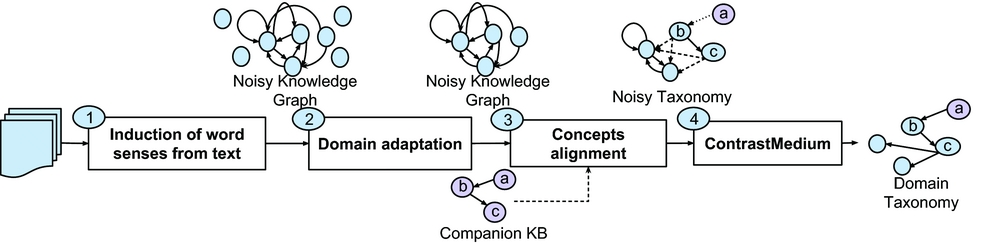

The construction of our HAR builds upon methods used to link various manually constructed LRs to construct BabelNet (Ponzetto and Navigli Reference Ponzetto and Navigli2010) and UBY (Gurevych et al. Reference Gurevych, Eckle-Kohler, Hartmann, Matuschek, Meyer and Wirth2012), among others. In our method, however, linking is performed between two networks that are structurally similar, but have been constructed in two completely different ways: one resource is built using an unsupervised bottom-up approach from text corpora, while the second is constructed in a top-down manner using manual labor, e.g. codified knowledge from human experts such as lexicographers (WordNet). In particular, the method consists of following two major phases, as illustrated in Figure 1:

-

(1) Construction of a PCZ from text. Initially, a symbolic disambiguated distributional lexical semantic network, called a PCZ, is induced from a text corpus. To this end, we first create a sense inventory from a large text collection using graph-based word sense induction as provided by the JoBimText project (Biemann and Riedl Reference Biemann and Riedl2013). The resulting structure contains induced word senses, their yet undisambiguated related terms, as well as context clues per term. First, we obtain sense representations by aggregating context clues over sense clusters. Second, we disambiguate related terms and hypernyms to produce a fully disambiguated resource where all terms have a sense identifier. Hence, the PCZ is a repository of corpus-induced word senses, where each sense is associated with a list of related senses, hypernymous senses, as well as aggregated contextual clues (Table 1).

-

(2) Linking a PCZ to an LR. Next, we align the PCZ with an existing LR. In our work, we make use of lexical semantic resources such as WordNet and BabelNet featuring large vocabularies of lexical units with explicit meaning representations as well as semantic relations between these. In this phase, we create a mapping between the two sense inventories from the PCZ and the LR, and combine them into a new extended sense inventory, our HAR. Finally, to obtain a complete unified resource, we link the ‘orphan’ PCZ senses for which no corresponding sense could be found by inferring their type (i.e. their most specific generalization) in the LR.

Fig. 1. Overview of the proposed framework for enriching lexical resources: a distributional semantic model is used to construct a disambiguated distributional lexical semantic network (a proto-conceptualization, PCZ), which is subsequently linked to the lexical resource.

Table 1. Examples of entries of the PCZ resource for words mouse and keyboard after disambiguation of their related terms and hypernyms (Section 4.4)

Context clues are represented as typed dependency relations to context words in the input corpus, e.g. keyboard:0 appears as direct object of ‘to play’. Trailing numbers indicate automatically induced sense identifiers.

In the following sections, we present each stage of our approach in detail.

4 Construction of a proto-conceptualization

Our method for PCZ construction consists of the four steps illustrated in the upper half of Figure 1, inducing a graph of semantically related words (Section 4.1), word sense induction (Section 4.2), labeling of clusters with hypernyms and images (Section 4.3) and disambiguation of related words and hypernyms with respect to the induced sense inventory (Section 4.4). Further, we describe an additional property of our PCZs, namely the availability of corpus-derived context clues (Section 4.5), as well as alternative ways to construct PCZs on the basis of dense vector representations (Section 4.6).

The PCZ resource with a pipeline as outlined in Figure 1 consists of word senses induced from a corpus. For each word sense, similar and superordinate terms are disambiguated with respect to the induced sense inventory: the structure of a PCZ resembles that of a lexical semantic resource. Sense distinctions and distributions depend on the training corpus, which causes the resource to adapt to its domain. In contrast to manually created resources, each sense also contains context clues that allow disambiguating polysemous terms in context. Table 1 shows example senses for the terms mouse and keyboard. Note that PCZs may contain many entries for the same word, e.g. mouse has two senses, the ‘animal’ and the ‘computer device’, respectively. The context clues are not disambiguated, since they are meant for directly matching (undisambiguated) textual context. PCZs can be interpreted by humans at two levels, as exemplified in Figure 2.

-

(1) The word sense inventory is interpretable due to the use of the hypernyms, images and related senses.

-

(2) The sense feature representation is interpretable due to the use of the sparse context clues relevant to the sense.

Fig. 2. Word sense representations of the word mouse induced from text generated using the online demo at http://jobimtext.org/wsd. The sense labels (device and animal) are obtained automatically based on cluster labeling with hypernyms. The images associated with the senses are retrieved with a search engine using the queries: mouse device and mouse animal. Note the ‘BabelNet Link’ button, leading to the sense in BabelNet linked to the induced sense with the algorithm described in Section 5.1.

Note that while in our experiments we rely on a count-based sparse distributional model, the PCZ is a symbolic structure that can be also constructed using alternative distributional models, e.g. word and sense embeddings (cf. Section 4.6).

4.1 Inducing a graph of semantically related words

The goal of this first stage is to build a graph of semantically related words, with edges such as (mouse, keyboard, 0.78), i.e. a distributional thesaurus (DT) (Lin Reference Lin1998). To induce such graph in an unsupervised way, we rely on a count-based approach to distributional semantics based on the JoBimText framework (Biemann and Riedl Reference Biemann and Riedl2013). Each word is represented by a bag of syntactic dependencies such as conj_and(rat, •) or prep_of(click, •) extracted from the Stanford Dependencies (De Marneffe, MacCartney and Manning Reference De Marneffe, MacCartney and Manning2006) obtained with the PCFG model of the Stanford parser (Klein and Manning Reference Klein and Manning2003).

Features of each word are weighted and ranked using the Local Mutual Information (LMI) metric (Evert Reference Evert2005). Subsequently, these word representations are pruned keeping 1,000 most salient features per word and 1,000 most salient words per feature. The pruning reduces computational complexity and noise (Riedl Reference Riedl2016). Finally, word similarities are computed as the number of common features for two words. This is, again, followed by a pruning step in which for every word, only the 200 most similar terms are kept. The resulting graph of word similarities is browsable online (Ruppert et al. Reference Ruppert, Kaufmann, Riedl and Biemann2015).Footnote 3

There are many possible ways to compute a graph of semantically similar words, including count-based approaches, such as Lin (Reference Lin1998), Curran (Reference Curran2002) or prediction-based approaches, such as word2vec (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013), GloVe (Pennington et al. Reference Pennington, Socher and Manning2014) and word2vecf (Levy and Goldberg Reference Levy and Goldberg2014). Here, we opt for a count-based approach to distributional semantics based on Local Mutual Information based on two considerations, namely their higher quality of similarity scores and their interpretability.

A thorough experimental comparison of different approaches to computing distributional semantic similarity to build a DT is presented by Riedl (Reference Riedl2016, Section 5.7.4) using the WordNet taxonomy as a gold standard. In this evaluation, different DTs are compared by computing, for each term, the average similarity between the term itself and its k most similar terms (based on the DT) using the WordNet path-based similarity measure (Pedersen, Patwardhan and Michelizzi Reference Pedersen, Patwardhan and Michelizzi2004). The overall similarity of the DT with the ground-truth taxonomy (e.g. WordNet) is then given by the average similarity score across all terms. Using this evaluation framework, Riedl is able to compare a wide range of different approaches for the construction of a weighted similarity graph, including state-of-the-art approaches based on sparse vector representations (Lin Reference Lin1998; Curran Reference Curran2002), as well as dense representations based on word2vec (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013) and word2vecf, which makes use of dependency-based features (Levy and Goldberg Reference Levy and Goldberg2014). In his experiment, all methods were trained on the same corpus, and all dependency-based models, including the Skip-gram approach trained with the word2vecf tool (Levy and Goldberg Reference Levy and Goldberg2014), used the same feature representations.

We report some of the results from Riedl’s experiments in Table 2. In this experiment, 1,000 infrequent and 1,000 frequent nouns proposed by Weeds, Weir and McCarthy (Reference Weeds, Weir and McCarthy2004) were used. Dependency-based models (all models except the Skip-gram) used syntactic features extracted using the Stanford Parser. In addition to this dependency-based model, we report results of the same model based on trigram features, where a context is formed by the concatenation of the two adjacent words. All models were trained on the 105 million sentences of newspaper data described in Section 6.1. Further details of the experiment, e.g. parameters of the models, are available in Riedl (Reference Riedl2016, Section 5.7.4).

Table 2. Comparison of state-of-the-art count- and prediction-based methods to distributional semantics on the basis of the average of the averaged similarity scores between each term in the DT and its top-10 most similar terms using the WordNet path similarity measure (higher means better) averaged over 1,000 high- and low-frequency words

In this article, we use ‘LMI with dependency features’ as the similarity function.

The performance figures indicate that the method we use here yields the overall best performance in terms of semantic similarity compared to other count-based or word-embedding approaches (including both word2vec and word2vecf). Besides, the results generally indicate the advantage of using dependency-based context representations over the bag-of-words representations. This is in line with prior studies on semantic similarity (Padó and Lapata Reference Padó and Lapata2007; Van de Cruys Reference Van de Cruys2010; Panchenko and Morozova Reference Panchenko and Morozova2012). For this reason, we use syntactic features in our experiments but would like to stress that the overall framework also allows simpler context representations, giving rise to its application to resource-poor languages.

The second reason for using the Local Mutual Information approach to compute a graph of semantically related words is the fact that the resulting word representations are human interpretable, since words are represented by sparse features – as opposed to dense features such as those found within word embeddings. Besides being a value on its own, this feature enables a straightforward implementation of word sense disambiguation methods on the basis of the learned representations (Panchenko et al. 2017a, 2017c).

4.2 Word sense induction

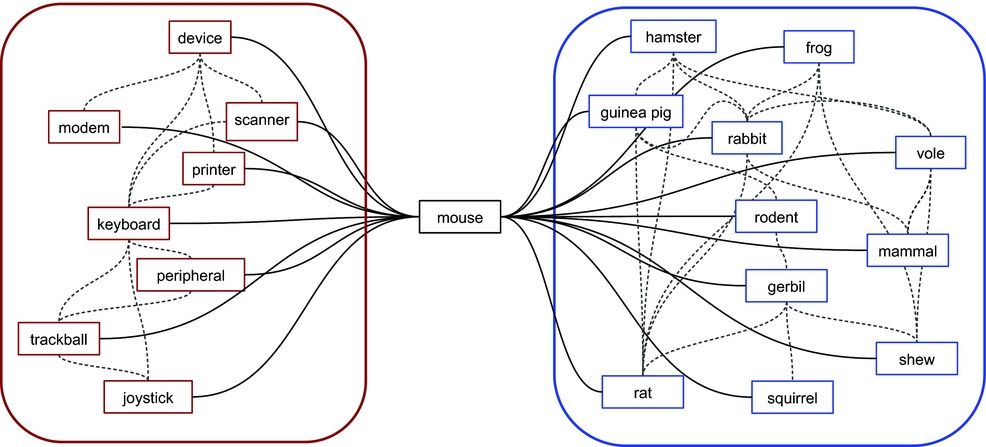

In the next stage, we induce a sense inventory on top of the DT by clustering ego-networks of similar words. In our case, an inventory represents senses by a word cluster, such as {rat, rodent, monkey, . . .} for the ‘animal’ sense of the word mouse.

Sense induction is conducted one word t at the time on the DT. First, we retrieve nodes of the ego-network G of t being the N most similar words of t according to the DT. Figure 3 presents a sample ego network of related words.Footnote 4 Note that the target word t itself is excluded during clustering. Second, we connect each node in G to its n most similar words according to DT. The n parameter regulates the granularity of the induced sense inventory: we experiment with n ∈ {200, 100, 50} and N = 200. Finally, the ego network is clustered with Chinese Whispers (Biemann Reference Biemann2006), a non-parametric algorithm that discovers the number of clusters (word senses, in our case) automatically. The algorithm is iterative and proceeds in a bottom-up fashion. Initially, all nodes have distinct cluster identifiers. At each step, a node obtains the cluster identifier from the dominant cluster in its direct neighborhood, which is the cluster with the highest sum of edge weights to the current node.

Fig. 3. Example of graph-based word sense induction for the word mouse: the two circles denote two induced word senses, as found by analysis of the ego graph of mouse.

The choice of Chinese Whispers among other algorithms, such as HyperLex (Véronis Reference Véronis2004) or Markov Cluster Algorithm (Van Dongen Reference Van Dongen2008), was motivated by the absence of meta-parameters, its state-of-the-art performance on Word Sense Induction tasks (Di Marco and Navigli Reference Di Marco and Navigli2013), as well as its efficiency (time-linear in the number of edges), see Cecchini, Riedl and Biemann (2017) for a comparative evaluation.

4.3 Labeling induced senses with hypernyms and images

At the third stage, each sense cluster is automatically labeled to characterize it in more detail and to improve its interpretability. First, we extract hypernyms from the input corpus. Here, we rely on the Hearst (Reference Hearst1992) patterns, yet the approach we use can benefit also from more advanced methods for extraction of hypernyms, e.g. HypeNet (Shwartz, Goldberg and Dagan 2016) or the Dual Tensor Model (Glavaš and Ponzetto Reference Glavaš and Ponzetto2017). Note that despite their simplicity, Hearst patterns still are a strong baseline, used for applications like, for instance, taxonomy induction (Panchenko et al. 2016; Bordea, Lefever and Buitelaar 2016).

Second, we rank the quality of a hypernym h to act as generalization for the meaning expressed by cluster c on the basis of the product of two scores, namely hypernym relevance and coverage:

$$

\begin{align*}

relevance (c,h) = \sum _{w \in c} rel(t,w) \cdot freq(w,h)\\

coverage(c,h) = \sum _{w \in c} \min (freq(w,h),1)

\end{align*}

$$

$$

\begin{align*}

relevance (c,h) = \sum _{w \in c} rel(t,w) \cdot freq(w,h)\\

coverage(c,h) = \sum _{w \in c} \min (freq(w,h),1)

\end{align*}

$$

where rel(t, w) is the relatedness of the cluster word w to the target word t (the ambiguous word in an ego network, cf. Figure 3) and freq(w, h) is the frequency of the hypernymy relation (w, h) as extracted via patterns. Thus, a highly ranked hypernym h needs to be observed frequently in a hypernym pattern, but also needs to be confirmed by several cluster words. This stage results in a ranked list of labels that specify the word sense, which we add to the PCZ. The highest scoring hypernym is further used in the title of the word sense, e.g. mouse (device) or mouse (animal).

Finally, to further improve the interpretability of the induced senses, we add images to our sense clusters as follows. Previous work (Faralli and Navigli Reference Faralli and Navigli2012) showed that web search engines can be used to bootstrap sense-related information. Consequently, we assign an image to each word in the cluster querying the Bing image search APIFootnote 5 using the query composed of the target word and its highest scoring hypernym, e.g. mouse device. The first image result of this query is selected to represent the induced word sense. This step is optional in our pipeline, and is primarily aimed at improving the user interaction with the word sense inventory.

The resulting sense representation is illustrated in Figure 2 for two induced senses of the word mouse. Providing to the user hypernyms, images, list of related senses and the list of the most salient context clues ensures interpretability of each sense. Note that all these elements are obtained without manual intervention, see Panchenko et al. (Reference Panchenko, Marten, Ruppert, Faralli, Ustalov, Ponzetto and Biemann2017b) for more details.

4.4 Disambiguation of related terms and hypernyms

Next, we disambiguate the lexical graphs induced in the previous step. Each word in the induced inventory has one or more senses; however, the list of related words and hypernyms of each induced sense does not carry sense information yet. In our example (Table 1), the sense of mouse for the entry keyboard:1 could have either referred to the ‘animal’ or the ‘electronic device’. Consequently, we apply a semantic closure procedure to arrive at a resource in which all terms get assigned a unique, best-fitting sense identifier. Our method assigns each disambiguation target word w – namely, a similar or superordinate term from each sense of the induced word sense inventory – the sense ŝ whose context (i.e. the list of similar or superordinate terms) has the maximal similarity with the target word’s context (i.e. the other words in the target word’s list of similar or superordinate items). We use the cosine similarity between context vectors to find the most appropriate sense ŝ matching the ‘context’ of an ambiguous word cluster one of its ‘definitions’ WSI(w′).cluster:

This way we are able to link, for instance, keyboard in the list of similar terms for mouse:1 to its ‘device’ sense (keyboard:1), since mouse:1 and keyboard:1 share a large amount of terms from the information technology domain. This simple, local approach is scalable (cf. the complexity analysis at the end of this section) and it performs well, as we show later in the evaluation.

Algorithm 1 presents our method to compute the semantic closure. The input is a JoBimText model as a set of tuples word, sense_id, cluster, isas), where cluster is a list of similar terms in the format (word i , sim i ) with sim i being the similarity value between word and word i , and isas is a list of hypernym terms in the same format. The algorithm outputs a PCZ in the form of a set of tuples word, sense_id, cluster′, isas′), where cluster′ is a list of disambiguated similar terms and isas′ is a list of disambiguated hypernym terms both in the format wordi, simi, sense_idi ). The algorithm starts by creating an empty PCZ structure PCZ. For each entry of an input JoBimText model, we disambiguate related words (cluster) and hypernym terms (isas) with the function Disambiguate (lines 3–4). This function retrieves for each word in a cluster the set of its senses with the GetSenses function. Next, we calculate similarity between the cluster of the word and the cluster of the candidate sense (denoted as dcluster). The word i obtains the sense_id of the candidate sense that maximizes this similarity (lines 8–13).

Our disambiguation approach is a rather straightforward algorithm based on similarity computations. Despite its simplicity, we are able to achieve a disambiguation accuracy in the high ninety per cent range for noun word senses, while at the same time having a time-linear complexity in the number of word senses, as we will show in the evaluation below (Section 6.2). We can assume, in fact, that the function GetSenses has a run-time complexity of O(1) and the function cos (Equation (1)) has complexity O(m), where m is the average number of neighbors of each word sense. Then, the run-time complexity of the algorithm is O(n*m 2*k), where n is the overall number of induced word senses, and k is the average polysemy of a word. Since k is small and m is bound by the maximum number of neighbors (200 in our case), the amortized run time is linear in the vocabulary size. This makes our approach highly scalable: in recent experiments, we have been accordingly able to apply our method at web scale on the CommonCrawl,Footnote 6 the largest existing public repository of web content.

4.5 Construction of sense feature representations

Finally, we calculate feature representations for each sense in the induced inventory – that is, grammatical dependency features that are meant to provide an aggregated representation of the contexts in which a word sense occurs.

We assume that a word sense is a composition of cluster words that represent the sense and accordingly define a sense vector as a function of word vectors representing cluster items. Let W be a set of all words in the training corpus and let S i = {w 1, . . ., w n }⊆W be a sense cluster obtained in a previous step. Consider a function vecw : W → ℝ m that maps words to their vectors and a function γi : W → ℝ that maps cluster words to their weight in the cluster S i . The sense vector representation (the context clues) is then a weighted average of word vectors:

Table 1 (column 4) provides an example of such feature representations. While the averaged word vectors are ambiguous and can contain features related to various senses, features with high weights tend to belong to the target sense as the secondary senses of the averaged words vectors rarely match semantically, hence the aggregation amplifies the correct sense.

This concludes the description of steps we use to construct PCZs from text corpora.

4.6 Inducing PCZs with dense vector representations

In this section, we briefly describe alternative routes to the construction of a PCZ from text in an unsupervised way. In the remainder of this article, we will rely on the results of the approach described above. The goal of this section is to show that our overall framework is agnostic to the type of underlying distributional semantic model. In this section, we consider three approaches to generating a PCZ using word or sense embeddings.

Option 1: Inducing PCZs using word embeddings with explicit disambiguation. As illustrated in Figure 1, the first stage of our approach involves the computation of a graph of semantically similar words. Above, the graph was induced using a count-based model, however, any of the models listed in Table 2 can be used to generate such a graph of ambiguous words including models based on dense vector representations, such as the Skip-gram model. In this strategy, one would need to generate top nearest neighbors of word on the basis of cosine similarity between word embeddings. Table 3 shows an excerpt of nearest neighbors generated using the JoBimText and word2vec toolkits. The obtained word graphs can be subsequently used to induce word senses using the graph-based approach described in Section 4.2. The obtained clusters can be labeled using a database of hypernyms exactly in the same way as for the models based on the count-based JoBimText framework (cf. Section 4.3). All further steps of the workflow presented in Figure 1 remain the same.

Table 3. Sense inventories derived from the Wikipedia corpus via a sparse count-based (JoBimText) and dense predict-based (Skip-gram) distributional models

The main difference between the approach described above and the methods based on dense representations of words is the representation of the context clues of PCZ (cf. Table 1). In the case of an underlying sparse count-based representation, context clues remain human readable and interpretable, whereas in case of dense representations, context clues are represented by a dense vector embedding, and it is not straightforward to aggregate context clues over sense clusters.

Option 2: Inducing PCZs using word embeddings without explicit disambiguation. The first two stages of this approach are the same compared to the previous strategy. Namely, first one needs to generate a graph of ambiguous semantically related words (Section 4.1) and then to run ego-network clustering to induce word senses (Section 4.2). However, instead of explicit disambiguation of nearest neighbors (Section 4.4), the third stage could obtain vector sense representations by averaging the word embeddings of sense clusters (Section 4.5). Finally, disambiguated nearest neighbors can be obtained by calculating nearest neighbors of each sense vector in the space of word sense embeddings. This step is equivalent to the computation of a DT (Section 4.1); however, it directly yields disambiguated nearest neighbors (cf. Table 4). Note, however that, disambiguation of hypernyms using Algorithm 1 is still required when using this approach.

Table 4. A Skip-gram-based PCZ model by Pelevina et al. (2016): Neighbors of the word mouse and the induced senses

The neighbors of the initial vector belong to both senses, while those of sense vectors are sense specific.

This approach was explored in our previous work (Pelevina et al. Reference Pelevina, Arefiev, Biemann and Panchenko2016), where we showed that words sense embeddings obtained in this way can be successfully used for unsupervised WSD, yielding results comparable to the state of the art.

Option 3: Inducing PCZ using word sense embeddings. Finally, a PCZ can be also induced using sparse (Reisinger and Mooney Reference Reisinger and Mooney2010) and dense (Neelakantan et al. Reference Neelakantan, Shankar, Passos and McCallum2014; Li and Jurafsky Reference Li and Jurafsky2015; Bartunov et al. Reference Bartunov, Kondrashkin, Osokin and Vetrov2016) multi-prototype vector space models (the latter are also known as word sense embeddings). These models directly induce sense vectors from a text corpus, not requiring the word sense induction step of our method (Section 4.2). Instead of ego-network-based sense induction, these methods rely on some form of context clustering, maintaining several vector representations for each word type during training. To construct a PCZ using such models within our framework, we need to compute a list of nearest neighbors (Section 4.1), label the obtained sense clusters with hypernyms (Section 4.3) and disambiguate these hypernyms using Algorithm 1. The sense vectors replace the aggregated context clues, so the stage described in Section 4.5 is superfluous for this option as well.

We also experimented in previous work with the construction of PCZs using this approach (Panchenko Reference Panchenko2016), showing how to use sense embeddings for building PCZs, reaching satisfactory levels of recall and precision of matching as compared to a mapping defined by human judges.

While an empirical comparison of these options would be interesting, it is beyond the scope of this paper, where our main point is to demonstrate the benefits of linking manually created LRs with models induced by distributional semantics (by example of a count-based model).

5 Linking a proto-conceptualization to a lexical semantic resource

This section describes how a corpus-induced semantic network (a PCZ) is linked to a manually created semantic network, represented by an LR.

5.1 Linking induced senses to senses of the lexical resource

Now, we link each sense in our PCZ to the most suitable sense (if any) of an LR (see Figure 1 step 3). There exist many algorithms for knowledge base linking (Pavel and Euzenat Reference Pavel and Euzenat2013); here, we build upon simple, yet high-performing previous approaches to linking LRs that achieved state-of-the-art performance. These rely at their core on computing the overlap between the bags of words built from the LRs’ concept lexicalizations, e.g. Navigli and Ponzetto (Reference Navigli and Ponzetto2012a) and Gurevych et al. (Reference Gurevych, Eckle-Kohler, Hartmann, Matuschek, Meyer and Wirth2012) (inter alia). Specifically, we develop (i) an iterative approach – so that the linking can benefit from the availability of linked senses from previous iterations – (ii) leveraging the lexical content of the source and target resources. Algorithm 2 takes as input

-

(1) a PCZ T = {(ji , R ji , H ji )} where j i is a sense identifier (i.e. mouse:1), R ji the set of its semantically related senses (i.e. R ji = {keyboard:1, computer:0, . . .} and H ji the set of its hypernym senses (i.e. H ji = {equipment:3, . . .};

-

(2) an LR W: we experiment with: WordNet, a lexical database for English and BabelNet, a very large multilingual ‘encyclopedic dictionary’;

-

(3) a threshold th over the similarity between pairs of concepts and a number m of iterations as a stopping criterion.

The algorithm outputs a mapping M, which consists of a set of pairs of the kind (source, target) where source ∈ T.senses is a sense of the input PCZ T and target ∈ W.senses∪source is the most suitable sense of W or source when no such sense has been identified.

The algorithm starts by creating an empty mapping M (line 2). Then for each monosemous sense (e.g. Einstein:0 is the only sense in the PCZ for the term Einstein) it searches for a candidate monosemous sense (lines 2–2). If such monosemous candidate senses exist (line 2), we compare the two senses (line 2) with the following similarity function:

where

-

(1) T.BoW(j, M, W) is the set of words containing all the terms extracted from related/hypernym senses of j and all the terms extracted from the related/ hypernym (i.e. already linked in M) synsets in W. For each synset from the LR, we use all synonyms and content words of the gloss.

-

(2) W.BoW(c) contains the synonyms and the gloss content words for the synset c and all the related synsets of c.

Then a new link pair (j i , c 0) is added to M if the similarity score between j i and c 0 meets or exceeds the threshold th (line 2). At this point, we collected a first set of disambiguated (monosemous) senses in M and start to iteratively disambiguate the remaining (polysemous) senses in T (lines 2–2). This iterative disambiguation process is similar to the one we described for the monosemous case (lines 2–2), with the main difference that, due to the polysemy of the candidates synsets, we instead use the similarity function to rank all candidate senses (lines 2–2) and select the top-ranked candidates for the mapping (lines 2–2). At the end of each iteration, we add all collected pairs to M (line 2). Finally, all unlinked j of T, i.e. induced senses that have no corresponding LR sense, are added to the mapping M (lines 2–2).

Comparison with other mapping algorithms. Previous work for the construction of BabelNet (Navigli and Ponzetto Reference Navigli and Ponzetto2012a) and UBY (Gurevych et al. Reference Gurevych, Eckle-Kohler, Hartmann, Matuschek, Meyer and Wirth2012) looked at the related problem of matching heterogeneous lexical semantic resources, i.e. Wikipedia and WordNet. In our scenario, however, we aim instead at establishing a bridge between any of these latter reference KBs and a PCZ – i.e. a fully disambiguated distributional semantic representation of distributionally induced word senses (cf. Section 4.5). Since we are working with a PCZ on the source side, as opposed to using Wikipedia, we cannot rely on graph-based algorithms such as PageRank (Niemann and Gurevych Reference Niemann and Gurevych2011) ‘out of the box’: while PCZs can be viewed as graphs, these are inherently noisy and require cleaning techniques in order to remove cycles and wrong relations (cf. Section 7 where we accordingly address the topic of taxonomy induction and cleaning within our framework). Similarly, the fact that PCZs are automatically induced from text – and hence potentially noisier that clean collaboratively generated content from Wikipedia – forces us to limit evidence for generating the mapping to local information, as opposed to, e.g. graph-based expansions used to boost the recall of BabelNet–WordNet mappings, cf. Navigli and Ponzetto (Reference Navigli and Ponzetto2012a). To overcome this ‘locality’ constraint, we develop an iterative approach to indirectly include non-local evidence based on previous mapping decisions. Our algorithm, in fact, uses previous mappings to additionally expand the bag-of-word of the candidate PCZ sense to be mapped, based on related/hypernym synsets linked in the previous iterations only (i.e. to keep the expansion ‘safe’, cf. Equation (3)).

5.2 Typing of the unmapped induced senses

An approach based on the bag-of-words from concept lexicalizations has the advantage of being simple, as well as high performing as we show later in the evaluation – cf. also findings from Navigli and Ponzetto (Reference Navigli and Ponzetto2012a). However, there could be still PCZ senses that cannot be mapped to the target LR, e.g. because of vocabulary mismatches, sparse concepts’ lexicalizations, or because they are simply absent in the resource.

Consequently, in the last phase of our resource creation pipeline, we link these ‘orphan’ PCZ senses (i.e. those from lines 2–2 of Algorithm 2), in order to obtain a unified resource, and propose a method to infer the type of those concepts that were not linked to the target LR. For example, so far we were not able to find a BabelNet sense for the PCZ item Roddenberry:10 (the author of ‘Star Trek’). However, by looking at the linked related concepts that share the same BabelNet hypernym – e.g. the PCZ items Asimov:3 is-a author BabelNet , Tolkien:7 is-a author BabelNet , Heinlein:8 is-a author BabelNet , etc. – we can infer that Roddenberry:10 is-a author:1, since the latter was linked to the Babel synset author BabelNet .

The input of Algorithm 3 consist of the mapping M of a PCZ to an LR W (cf. Algorithm 2). The output is a new mapping H containing pairs of the kind (source, type) where type is a type in W for the concept source ∈ PCZ. We first initialize the new mapping H as an empty set (line 3). Then for all the pairs (source, target) where the target is a concept not included in the target LR W (line 3), we compute a rank of all the ancestors of each related sense that has a counterpart trelated in W (lines 3–3). In other words, starting from linked related senses trelated, we traverse the taxonomy hierarchy (at most for three hops) in W and each time we encounter a sense ancestor we increment its rank by the inverse of the distance to trelated. Finally, we add the pairs (source, ntype) to H for all the ntype at the top top h in the Rank.

Finally, our final resource consists of (i) the PCZ; (ii) the mapping M of PCZ entries to the LR (e.g. WordNet or BabelNet); (iii) the mapping H of suggested types for the PCZ entries not mapped in M.

6 Experiments

In this section, we present results of four experiments, which intrinsically and extrinsically evaluate the quality of our HAR.

6.1 Corpora used for the induction of proto-conceptualizations

We evaluate our method using texts of different genres, namely standard newswire text vs. encyclopedic texts in order to examine performance in different settings. The corpora, described in Table 5, are a 105 million sentence news corpus composed of Gigaword (Parker et al. Reference Parker, Graff, Kong, Chen and Maeda2011) and the Leipzig Corpora Collection, (Richter et al. Reference Richter, Quasthoff, Hallsteinsdóttir and Biemann2006)Footnote 7 and a 35 million-sentence Wikipedia corpusFootnote 8 from a 2011 dump.

Table 5. Text corpora used in our experiments to induce distributional disambiguated semantic networks (proto-conceptualizations, PCZs)

We opt for these text collections because they were previously extensively used for the evaluation of distributional models based on the JoBimText framework (Biemann and Riedl Reference Biemann and Riedl2013; Riedl and Biemann Reference Riedl and Biemann2013). Specifically, previous work (Riedl and Biemann Reference Riedl and Biemann2013) experimented with the induction of distributional models on the basis of both corpora, and showed that the quality of semantic similarity (which, in turn, is used to build the DT, cf. Section 4.1) increases with corpus size. Since ‘more data’ helps, we experiment in this work with the full-sized corpora. Further description of the wiki and news text collections can be found in Riedl and Biemann (Reference Riedl and Biemann2013) and Riedl (Reference Riedl2016, p. 94).

We experiment with different parameterizations of the sense induction algorithm to obtain PCZs with different average sense granularities, since a priori, there is no clear evidence for what the ‘right’ sense granularity of a sense inventory should be. Chinese Whispers sense clustering with the default parameters (n = 200) produced an average number of 2.3 (news) and 1.8 (wiki) senses per word with the usual power-law distribution of sense cluster sizes. Decreasing connectivity of the ego network via the n parameter leads to more fine-grained inventories (cf. Table 6).

Table 6. Structural analysis of our five word sense inventories of the proto-conceptualizations (PCZs) used in our experiments

Finally, we use the method described in Riedl and Biemann (Reference Riedl and Biemann2015) to compute a dataset that includes automatically extracted multiword terms using the Wikipedia corpus (wiki-p1.6-mwe). Since most of the multiwords are monosemous, average polysemy of this dataset decreased from 1.8 to 1.6 for the analogous model without multiwords (wiki-p1.8). To obtain a feature representation of a multiword expression, we gathered all outgoing dependency relations of this term as illustrated in Figure 4.

Fig. 4. Extraction of distributional dependency features for a multiword expression Mickey Mouse: all outgoing dependencies are used as features. This image was created using the Stanford dependency visualizer (http://nlp.stanford.edu:8080/corenlp).

In Table 6, we present statistics for the five different resources we induce from our corpora. For each dataset, we report the counts of overall number of words (vocabulary size), including monosemous words and polysemous ones, respectively. For each PCZ, we report the cardinality, the average polysemy and the maximum polysemy. Finally, we report the overall and the average number of related senses and hypernyms. Numbers vary across datasets due to the different nature of the two source corpora and the selection of different parameter values for sense induction.

While inducing senses directly from corpus data allows for large coverage and flexibility, it also makes it difficult to evaluate the quality of the resulting sense clusters (Agirre and Soroa Reference Agirre and Soroa2007). Since we do not a priori know the sense granularity of the PCZs, the sense inventory input to our disambiguation and linking algorithms cannot be fixed in advance, e.g. in order to produce a static gold standard. Therefore, in our intrinsic evaluations (Sections 6.2–6.4), we assess the quality of our resources by manually validating a sample of the output of the different steps of our method. Later, in Section 6.5, we perform an extrinsic evaluation against a gold standard on a WSD benchmarking dataset from a SemEval task.

The PCZ described above were subsequently linked to WordNet 3.1 and BabelNet 2.5 using the method described above. All the models described above as well as the induced sense inventories and word similarity graphs can be accessed online (cf. Section 8).

6.2 Experiment 1: Quality of disambiguation of the related terms

Experimental setting. In this experiment, we evaluate the quality of Algorithm 1 for the disambiguation of related words (cf. Table 1) by performing a post-hoc evaluation using manual judgments on a sample of sense-disambiguated terms from one of our PCZ resources.

We manually selected a set of frequent nouns and proper nouns, such that each word has least two homonymous (as opposed to polysemous) word senses. We deliberately avoided words with polysemous senses, as word sense induction algorithms are known to robustly extract mostly coarse-grained inventories of homonymous words (Di Marco and Navigli Reference Di Marco and Navigli2013; Cecchini et al. Reference Cecchini, Riedl and Biemann2017). The words were selected according to two criteria. First, each of the two homonymous word senses should have a comparable frequency – compare, for instance, the fairly common senses of python (animal) and python (language), as opposed to boa (animal) and boa (language), where the ‘language’ sense of the word boa is much rarer as compared to its ‘animal’ sense. Second, each of these senses should be common enough to be recognizable without the need of consulting a dictionary by a non-native, graduate-level speaker of English. We tested for sense frequencies and popularity by checking that selected senses were found among the top ones as listed in BabelNet. Using these criteria, we manually selected a set of seventeen nouns, such as apple, java, python, etc.Footnote 9

Since our resources only partially overlap in terms of sense inventory, and there is no a priori reference sense granularity, we cannot perform evaluation on a shared gold standard. Consequently, we opt instead for a post-hoc evaluation of the accuracy of the disambiguation step, namely the fraction of correctly disambiguated related words among all disambiguated words. Post-hoc validations have major limitations in that they are time consuming, do not scale and hinder direct comparability across methods – nevertheless, they are commonly used in the field of knowledge acquisition to estimate the quality of knowledge resources (Banko et al. 2007; Suchanek et al. 2008; Carlson et al. 2010; Velardi, Faralli and Navigli Reference Velardi, Faralli and Navigli2013) (inter alia).

We performed manual validation as follows. We first collected all disambiguated entries of the wiki-p1.6 model (cf. Table 1), where these seventeen target words appear and randomly sampled 15% of these entries to make annotation feasible, resulting in a dataset of 2,884 ambiguous-related words in context. We restrict evaluation to the wiki-p1.6 model for two reasons: an encyclopedic source is expected to provide better sense coverage a priori, thus providing us with more evaluation instances, while a low number of clusters is in line with findings that graph-based sense induction methods can produce rather coarse high-quality clusterings (Cecchini et al. Reference Cecchini, Riedl and Biemann2017).

Table 7 presents statistics of our dataset: note that we gathered word senses of all parts of speech that correspond to the selected seventeen words, including verbs and adjectives, for the sake of completeness of our study. An annotator with previous experience in lexicographic annotation performed the judgment of the 2,884 contexts in a curated way (using several rounds of feedback on random samples of annotations). The annotator was presented with a table containing four columns: (i) the target word, (ii) a sense cluster defining the sense of the target word, (iii) a sense cluster that defines the context of the target word. The last column collected the binary answer on the question whether the ‘definition’ cluster is semantically compatible with the ‘context’ cluster. Table 8 illustrates two examples of semantically compatible and incompatible clusters. The reasons of incompatibility of sense clusters are either the absence of obvious semantic relations between the words in the clusters (cf. the ‘planet’ vs. ‘basketball’ sense of sun) or simply incoherence of one or both sense clusters – i.e. the case when the annotator cannot figure out the meaning of the sense denoted by a cluster, such as the case for the context cluster of tiger. The annotator was instructed to consider a sense cluster to be interpretable if it was possible to grasp a dominant meaning by looking at the top twenty words, while allowing for a small fraction of spurious terms (since sense clusters are automatically generated).

Table 7. Accuracy of Algorithm 1 for disambiguation of related words evaluated on a set of seventeen frequent words each having two non-marginal homonymous word senses, e.g. as in mouse (keyboard) and mouse (animal)

Table 8. Examples provided to the assessor participating in the study with correct judgments

The subject was asked to determine if the first sense cluster (representing a sense definition of the ambiguous-related word) is semantically related to the second sense cluster (representing the context of the ambiguous-related word).

Results and discussion. The results of the experiment are summarized in Table 7.Footnote 10 Performance of the disambiguation procedure for the proper names and nouns ranges from 0.85 to 0.94, thus indicating an overall high quality of the procedure. Note that the word senses of adjectives and verbs are mostly the result of part-of-speech tagging errors, since in the seed set of seventeen words, we added only nouns and proper nouns. Wrongly tagged words have in general more noisy, uninterpretable clusters.

To better understand the amount of spurious items in our sense clusters, we performed an additional manual evaluation where, for a sample of hundred randomly sampled noun PCZ items, we counted the ratio between wrong (e.g. rat for the computer sense of mouse) and correct (keyboard, computer, etc.) related sense that were found within the PCZs. We obtained a macro average of 0.0495 and a micro average of 0.0385 wrongly related senses within the PCZs. Moreover, eighty-three per cent of the above sample has no unrelated senses, and only two per cent have only a single unrelated sense with a macro average ratio between the wrong and correct related PCZs of 0.067. This indicates that, overall, the amount of spurious senses within clusters is indeed small, thus providing a high-quality context for an accurate disambiguation of noun DT clusters.

6.3 Experiment 2: Linking induced senses to lexical resources

Experimental setting. In this experiment, we evaluate the performance of our linking component (Section 5.1). For this, we choose two lexical-semantic networks: WordNet (Fellbaum Reference Fellbaum1998), which has a high coverage on English common nouns, verbs and adjectives, and BabelNet (Navigli and Ponzetto Reference Navigli and Ponzetto2012a), which also includes a large amount of proper nouns and senses gathered from multiple other sources, including Wikipedia.

We follow standard practices, e.g. Navigli and Ponzetto (Reference Navigli and Ponzetto2012a), and create five evaluation test sets, one for each dataset from Section 6.1, by randomly selecting a subset of 300 induced word senses for each dataset, and manually establishing a mapping from these senses to WordNet and BabelNet senses (senses that cannot be mapped are labeled as such in the gold standard).

We compare against the following two most frequent sense (MFS) baselines, which select from all the possible senses for a given term t:

-

(1) The MFS in WordNet, where frequencies of senses are observed on a manually annotated semantic concordance (Miller et al. Reference Miller, Leacock, Tengi and Bunker1993).

-

(2) The MFS in BabelNet. Since BabelNet combines WordNet and Wikipedia, this amounts to (i) the WordNet MFS for senses originally found in WordNet and (ii) the most cited (i.e. internally hyperlinked) Wikipedia page for senses derived from Wikipedia.

The quality and correctness of the mapping is estimated as accuracy on the ground-truth judgments, namely the amount of true mapping decisions among the total number of (potentially, empty) mappings in the gold standard. Each pair (j, c) in a mapping M created with Algorithm 2 is evaluated as (i) true positive (TP) when c is the most suitable sense in the LR for the induced word sense j; (ii) true negative (TN) when c refers to j itself and there are no senses in the LR to capture the meaning expressed by j; (iii) false positive (FP) when c is not the most suitable sense in the LR for the sense t; (iv) false negative (FN) when c refers to j itself and there is a sense in the LR that captures the same meaning of j.

We also evaluate our mapping by quantifying coverage and extra-coverage on the reference resource:

where A is the set of LR synsets or induced word senses mapped in M using Algorithm 2, and B is the whole set of LR synsets. That is, Coverage indicates the percentage of senses of the LR sense inventory covered by the mapping M, whereas ExtraCoverage indicates the ratio of senses in M not linked to the LR sense inventory over the total number of senses in an LR. That is, ExtraCoverage is a measure of novelty to quantify the amount of senses discovered in T and not represented by the LR: it indicates the amount of ‘added’ knowledge we gain with our resource based on the amount of senses that cannot be mapped and are thus included as novel senses.

Results and discussion. In Table 9, we present the results using the optimal parameter values (i.e. th = 0.0 and m = 5 of Algorithm 2).Footnote 11 For all datasets, the number of linked senses, Coverage and ExtraCoverage are directly proportional to the number of entries in the dataset – i.e. the finer the sense granularity, as given by a lower sense clustering n parameter, the lower the number of mapped senses, Coverage and ExtraCoverage.

Table 9. Results on linking to lexical semantic resource: number of linked induced word senses, Coverage, ExtraCoverage, accuracy of our method and of the MFS baseline for our five datasets

In general, we report rather low coverage figures: the coverage in WordNet is always lower than fifty per cent (thirty per cent in one setting) and coverage in BabelNet is in all settings lower than five per cent. Low coverage is due to different levels of granularities between the source and target resource. Our target LRs, in fact, have very fine-grained sense inventories. For instance, BabelNet lists seventeen senses of the word python including two (arguably obscure ones) referring to particular roller coasters. In contrast, word senses induced from text corpora tend to be coarse and corpus specific. Consequently, the low coverage comes from the fact that we connect a coarse and a fine-grained sense inventory – cf. also previous work (Faralli and Navigli Reference Faralli and Navigli2013) showing comparable proportions between coverage and extra-coverage of automatically acquired knowledge (i.e. glosses) from corpora.

Finally, our results indicate differences between the order of magnitude of the Coverage and ExtraCoverage when linking to WordNet and BabelNet. This high difference is rooted in the cardinality of the two sense inventories, whereas BabelNet encompasses millions of senses, WordNet contains hundreds of thousands – many of them not covered in our corpora. Please note that an ExtraCoverage of about three per cent in BabelNet corresponds to about 300k novel senses. Overall, we take our results to be promising in that, despite the relative simplicity of our approach (i.e. almost parameter-free unsupervised linking), we are able to reach high accuracy figures in the range of around 87–95 per cent for WordNet and accuracies consistently above eighty per cent for BabelNet. This compares well against a random linking baseline that is able to achieve 44.2 per cent and 40.6 per cent accuracy on average when mapping to WordNet and BabelNet, respectively. Also, we consistently outperform the strong performance exhibited by the MFS baselines, which, in line with previous findings on similar tasks (Suchanek et al. Reference Suchanek, Kasneci and Weikum2008; Ponzetto and Navigli Reference Ponzetto and Navigli2009) provide a hard-to-beat competitor. Thanks to our method, in fact, we are able to achieve an accuracy improvement over the MFS baseline ranging from 1.4 per cent to 14.3 per cent on WordNet mappings, and from 24.4 per cent to 45.4 per cent on BabelNet. Despite not being comparable, our accuracy figures are in the same ballpark as those reported by Navigli and Ponzetto (Reference Navigli and Ponzetto2012a) (cf. Table 1), who use a similar method for linking Wikipedia to WordNet.

Error analysis. To gain insights into the performance of our approach, as well as its limitations, we performed a manual error analysis of the output on the WordNet mappings, identifying a variety of sources of errors that impact the quality of the output resource. These include

-

• part-of-speech tagging errors, which may produce wrong senses such as non-existent ‘verbs’ (e.g. tortilla:VB) (about ten per cent of the errors);

-

• Hearst patterns errors that may extract wrong hypernyms such as issue for the entry emotionalism (about twenty per cent of the errors);

-

• linking errors where the accuracy strongly depends on the granularity of senses and relationships of the target LR (about seventy per cent of the errors).

More specifically, false positives are often caused by the selection of a synset that is slightly different from the most suitable one (i.e. semantic shift), whereas false negatives typically occur due to the lack of connectivity in the semantic network.

Even if the high values of the estimated accuracy (see Table 9) of our mapping approach indicate that we are generally performing well over all the classes of test examples (i.e. true positive, true negative, false positive and false negative), the performance figures exhibit a different order of magnitude between the count of true positives and true negatives. True negatives are senses in the ExtraCoverage that we estimate to be correct new senses not contained in the reference LR. For a sample of such senses, we performed an additional manual analysis, and identified the following reasons that explain our generally high ExtraCoverage scores:

-

• Named entities and domain-specific senses (about forty per cent of the true negatives): true negative senses are due to correct new senses not contained in the target LR. This holds in particular for WordNet, where encyclopedic content occurs in a spotty fashion in the form of a few examples for some classes.

-

• Sense granularity misalignment (about sixty per cent of the true negatives): true negatives that derive from excessively fine clustering, and should have been combined with other senses to represent a more generic sense.

6.4 Experiment 3: Typing of the unmapped induced senses

Experimental setting. The high ExtraCoverage rates from Section 6.3 show that our resource contains a large number of senses that are not contained in existing LRs such as WordNet and BabelNet. Besides, high accuracy scores in the evaluation of the quality of the sense clusters from Section 6.2 seem to indicate that such extra items are, in fact, of high quality. Crucially, for our purposes, information found among the extra coverage has enormous potential, e.g. to go beyond ‘Wikipedia-only’ sense spaces. Consequently, we next evaluate our semantic typing component (Section 5.2) to assess the quality of our method to include also these good extra clusters that, however, have no perfect mapping in the reference LR (WordNet, BabelNet).

Similarly to the experiments for the resource mapping (Section 6.3), we manually create five test sets, one for each dataset from Section 6.1, by randomly selecting 300 unmapped PCZ items for each dataset, and manually identifying the most appropriate type of each induced sense among WordNet or BabelNet senses. Given these gold standards, performance is then computed as standard accuracy on each dataset.

Results and discussion. In Table 10, we report the statistics and the estimated accuracy for the task of typing the previously unmapped senses found among the ExtraCoverage. For each dataset and LR, we report the number of senses in the ExtraCoverage, the number of senses for which we inferred the type, the number of senses for which we were not able to compute a type, and the estimated accuracy for the types inferred by our method on the basis of either the links generated using our approach from Section 5.1, or those created using the MFS linking baseline. The results show that accuracy decreases for those datasets with higher polysemy. In particular, we obtain a low accuracy of 41.2 per cent for the ‘wiki-p6.0’ where the disambiguated thesaurus contains only a low number of related senses, resulting in sparsity issues. For the other settings, the accuracy ranges from 78.8 per cent to 91.4 per cent (WordNet) and from 87.2 per cent to 91.2 per cent (BabelNet). The MFS baseline accuracies of typing the unmapped induced senses (see Section 6.3) are lower, scoring 0.2 per cent to 2.7 per cent less accuracy for WordNet and 0.5 per cent to 4.2 per cent less accuracy for BabelNet: these results corroborate the previous ones on linking, where the MFS was shown to be a tough baseline. Besides, the higher performance figures achieved by the MFS on typing when compared to linking indicate that the typing task has a lower degree of difficulty in the respect that popular (i.e. frequent) types provide generally good type recommendations.

Table 10. Statistics and performance on typing unmapped PCZ items: number of induced senses counting for ExtraCoverage, number of typed and untyped induced senses, accuracy of our method and accuracy of the MFS baseline for our five datasets

6.5 Experiment 4: Evaluation of enriched lexical semantic resources

Experimental setting. In our next experiment, we follow previous work (Navigli and Ponzetto Reference Navigli and Ponzetto2012a) and benchmark the quality of our resources by making use of the evaluation framework provided by the SemEval-2007 task 16 (Cuadros and Rigau Reference Cuadros and Rigau2007) on the ‘Evaluation of wide-coverage knowledge resources’. This SemEval task is meant to provide an evaluation benchmark to assess wide-coverage LRs on the basis of a traditional lexical understanding task, namely WSD (Navigli Reference Navigli2009). The evaluation framework consists of the following two main phases:

-

(1) Generation of sense representations. From each LR, sense representations, also known as ‘topic signatures’, are generated, which are sets of terms that are taken to be highly correlated with a set of target senses. In practice, a sense representation consists of a weighted vector, where each element corresponds to a term that is deemed to be related to the sense, and the corresponding weight quantifies its strength of association.

-

(2) WSD evaluation. Next, sense representations are used as weighted bags of words in order to perform monolingual WSD using a Lesk-like method (cf. Lesk 1986) applied to standard lexical sample datasets. Given a target word in context and the sense representations for each of the target word’s senses, the WSD algorithm selects the sense with the highest lexical overlap (i.e. the largest number of words in common) between the sense representation and the target word’s textual context.

This SemEval benchmark utilizes performance on the WSD task as an indicator of the quality of the employed LR. This approach makes it possible to extrinsically compare the quality of different knowledge resources, while making as few assumptions as possible over their specific properties – this is because knowledge resources are simply viewed as sense representations, namely weighted bags of words. Besides, to keep the comparison fair, it uses a common and straightforward disambiguation strategy (i.e. Lesk-like word overlap) and a knowledge representation formalism (i.e. sense representations) that is equally shared across all LRs when evaluating them on the same reference dataset. Specifically, the evaluation is performed on two lexical sample datasets, from the Senseval-3 (Mihalcea, Chklovski and Kilgarriff Reference Mihalcea, Chklovski and Kilgarriff2004) and SemEval-2007 Task 17 (Pradhan et al. Reference Pradhan, Loper, Dligach and Palmer2007) evaluation campaigns. The first dataset has coarse-grained and fine-grained sense annotations, while the second contains only fine-grained annotations. In all experiments, we follow the original task formulation and quantify WSD performance using standard metrics of recall, precision and balanced F-measure.

Here, we use the SemEval task to benchmark the ‘added value’ in knowledge applicable for WSD that can be achieved by enriching a standard resource like WordNet with disambiguated distributional information from our PCZs on the basis of our linking. To this end, we experiment with different ways of enriching WordNet-based sense representations with contextual information from our HAR. For each WordNet sense of a disambiguation target, we first build a ‘core’ sense representation from the content and structure of WordNet, and then expand it with different kinds of information that can be collected from the PCZ sense that is linked to it (cf. Table 11):

-

• WordNet. The baseline model relies solely on the WordNet LR. It builds a sense representation for each sense of interest by collecting synonyms and definition terms from the corresponding WordNet synset, as well as all synsets directly connected to it (we remove stop words and weigh words with term frequency).

-

• WordNet + Related (news). We augment the WordNet-based representation with related terms from the PCZ. That is, if the WordNet sense is linked to a corresponding induced sense in our resource, we add all related terms found in the linked PCZ sense to the sense representation.

-

• WordNet + Related (news) + Context (news/wiki). Sense representations of this model are built by taking the previously generated ones, and additionally including terms obtained from the context clues of either the news (+ Context (news)) or Wikipedia (+ Context (wiki)) corpora we use (see Section 6.1).

Table 11. Sample entries of the hybrid aligned resource (HAR) for the words mouse and keyboard

Trailing numbers indicate sense identifiers. To enrich WordNet sense representations, we rely on related terms and context clues.

In the last class of models, we used up to 5,000 most relevant context clues per word sense. This value was set experimentally: performance of the WSD system gradually increased with the number of context clues, reaching a plateau at the value of 5,000. During aggregation, we excluded stop words and numbers from context clues. Besides, we transformed syntactic context clues to terms, stripping the dependency type, so they can be added to other lexical representations. For instance, the context clue rat:conj_and of the entry mouse:0 was reduced to the feature rat.

Table 12 shows a complete example from our dataset that demonstrate how, thanks to our HAR, we are able to expand WordNet-based sense representations with many relevant terms from the related terms of our sense-disambiguated PCZs.

Table 12. WordNet-only and PCZ-enriched sense representations for the fourth WordNet sense of the word disk (i.e. the ‘computer science’ one): the ‘core’ WordNet sense representation is additionally enriched with related words from our hybrid aligned resource