1. Introduction

Let $\mu$ be a probability measure on the unit square $[0,1]^2$. Following [Reference Dawid, DeGroot and Mortera12], this measure is called coherent if it is the joint distribution of a two-variate random vector (X, Y) defined on some arbitrary probability space $(\Omega, \mathcal{F}, \mathbb{P})$, such that $X=\mathbb{P}(E\mid\mathcal{G})$ and $Y=\mathbb{P}(E\mid\mathcal{H})$ almost surely for some measurable event $E\in \mathcal{F}$ and two sub-$\sigma$-fields $\mathcal{G}, \mathcal{H} \subset \mathcal{F}$. Throughout the text, the class of all coherent probability measures will be denoted by $\mathcal{C}$; for the sake of convenience (and with a slight abuse of notation), we will also write $(X, Y) \in \mathcal{C}$ to indicate that the distribution of a random vector (X, Y) is coherent.

Coherent measures enjoy the following nice interpretation. Suppose that two experts provide their personal estimates on the likelihood of some random event E, and assume that the knowledge of the first and the second expert is represented by the $\sigma$-algebras $\mathcal{G}$ and $\mathcal{H}$, respectively. Then a natural idea to model the predictions of the experts is to use conditional expectations: this leads to the random variables X and Y as above.

The importance of coherent distributions stems from their numerous applications in statistics (cf. [Reference Dawid, DeGroot and Mortera12, Reference DeGroot13, Reference Ranjan and Gneiting17, Reference Satopää, Pemantle and Ungar19]) and economics [Reference Arieli and Babichenko1–Reference Arieli, Babichenko, Sandomirskiy and Tamuz3, Reference He, Sandomirskiy and Tamuz15]. Coherent distributions are also closely related to graph theory and combinatorial matrix theory; see, for instance, [Reference Boza, Křepela and Soria4, Reference Cichomski7, Reference Cichomski and Petrov11, Reference Tao20]. Moreover, there has been substantial purely probabilistic advancement on this subject during the last decade [Reference Burdzy and Pal5, Reference Burdzy and Pitman6, Reference Cichomski and Osękowski8–Reference Cichomski and Osękowski10, Reference Zhu21]. The main interest, both in applied and theoretical considerations, involves bounding the maximal discrepancy of coherent vectors measured by different functionals. A canonical result of this type is the following threshold bound [Reference Burdzy and Pal5].

Theorem 1.1. For any parameter $\delta \in \big(\frac{1}{2},1\big]$,

For a generalisation of this equation to n-variate coherent vectors, consult [Reference Cichomski and Osękowski9]. Another important example is the expectation bound established independently in [Reference Arieli, Babichenko, Sandomirskiy and Tamuz3, Reference Cichomski7].

Theorem 1.2. For any exponent $\alpha\in (0,2]$, $\sup_{(X,Y)\in \mathcal{C}}\mathbb{E}|X-Y|^{\alpha} = 2^{-\alpha}$.
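The value $2^{-\alpha}$ in Theorem 1.2 can be seen to be attained on a small example. The sketch below assumes an event E with probability 1/2, a trivial $\sigma$-field for the first expert (so X = 1/2) and full knowledge of E for the second (so Y is the indicator of E). This pair is chosen here purely for illustration and is not taken from the cited proofs; the helper name `moment_abs_diff` is hypothetical.

```python
from fractions import Fraction

# Joint law of (X, Y) under the illustrative assumptions:
# X = P(E | trivial sigma-field) = 1/2, Y = P(E | sigma(E)) = 1_E, P(E) = 1/2.
half = Fraction(1, 2)
law = {(half, Fraction(1)): half, (half, Fraction(0)): half}

def moment_abs_diff(law, alpha):
    """Return E|X - Y|^alpha for a finitely supported joint law {(x, y): mass}."""
    return sum(mass * abs(x - y) ** alpha for (x, y), mass in law.items())

# Compare the moment with 2^{-alpha} for two integer exponents in (0, 2].
for alpha in (1, 2):
    print(alpha, moment_abs_diff(law, alpha), Fraction(1, 2 ** alpha))
```

Since |X - Y| equals 1/2 with probability one here, the moment is exactly $2^{-\alpha}$ for every exponent, so the example only shows that the supremum is at least $2^{-\alpha}$; the matching upper bound is the substance of the theorem.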

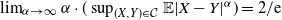

Analysis of the left-hand side of the equation in Theorem 1.2 for $\alpha>2$ remains a major open problem and constitutes one of the main motivations for this paper. Accordingly, we investigate the asymptotic behaviour of this expression and derive an appropriate sharp estimate.

Theorem 1.3. $\lim_{\alpha \to \infty}\alpha\cdot(\sup_{(X,Y)\in \mathcal{C}}\mathbb{E}|X-Y|^{\alpha}) = {2}/{\mathrm{e}}$.

The proof of Theorem 1.3 that we present below rests on a novel, geometric-type approach. As verified in [Reference Burdzy and Pitman6], the family of coherent distributions is a convex, compact subset of the space of probability distributions on $[0,1]^2$ equipped with the usual weak topology. One of the main results of this paper is to provide a characterisation of the extremal points of $\mathcal{C}$, which is considered to be one of the major challenges of the topic [Reference Burdzy and Pitman6, Reference Zhu21].

It is instructive to take a look at the corresponding problem arising in the theory of martingales, the solution to which is well known. Namely (see [Reference Dubins and Schwarz14]), fix $N\in \mathbb{N}$ and consider the class of all finite martingales $(M_1,M_2,\dots, M_N)$ and the induced distributions on $\mathbb R^N$. The extremal distributions can be characterised as follows:

- (i) $M_1$ is concentrated in one point;
- (ii) for any $n=2,3,\ldots,N$, the conditional distribution of $M_n$ given $(M_i)_{i=1}^{n-1}$ is concentrated on a set of cardinality at most two.

In particular, the support of a two-variate martingale with an extremal distribution cannot exceed two points. Surprisingly, the structure of $\mathrm{ext}(\mathcal{C})$ (the set of extreme points of $\mathcal{C}$) is much more complex, as there exist extremal coherent measures with arbitrarily large or even countably infinite numbers of atoms [Reference Arieli, Babichenko, Sandomirskiy and Tamuz3, Reference Zhu21]. Conversely, as proved in [Reference Arieli, Babichenko, Sandomirskiy and Tamuz3], elements of $\mathrm{ext}(\mathcal{C})$ are always supported on sets of Lebesgue measure zero. The existence of nonatomic extreme points remains yet another open problem.

For further discussion, we need to introduce some additional background and notation. For a measure $\mu$ supported on $[0,1]^2$, we write $\mu^x$ and $\mu^y$ for the marginal measures of $\mu$ on [0, 1], i.e. for the measures obtained by projecting $\mu$ on the first and the second coordinate, respectively.

Definition 1.1. Introduce the family $\mathcal{R}$, which consists of all ordered pairs $(\mu, \nu)$ of nonnegative Borel measures on $[0,1]^2$ for which $\int_{A}(1-x)\,\mathrm{d}\mu^x = \int_{A}x\,\mathrm{d}\nu^x$ and $\int_{B}(1-y)\,\mathrm{d}\mu^y = \int_{B}y\,\mathrm{d}\nu^y$, for any Borel subsets $A,B \in \mathcal{B}([0,1])$.

It turns out that the family $\mathcal{R}$ is very closely related to the class of coherent distributions. We will prove the following statement (a slightly different formulation can be found in [Reference Arieli, Babichenko, Sandomirskiy and Tamuz3]).

Proposition 1.1. Let m be a probability measure on $[0,1]^2$. Then m is coherent if and only if there exists $(\mu, \nu)\in \mathcal{R}$ such that $m=\mu+\nu$.
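For finitely supported measures, the two integral identities of Definition 1.1 reduce to an atom-by-atom comparison of the marginals, which makes Proposition 1.1 easy to test numerically. The following is a minimal sketch under that finite-support assumption; the function names `marginal` and `in_family_R` and the dictionary encoding `{(x, y): mass}` are hypothetical choices made here, not notation from the paper.

```python
from collections import defaultdict
from fractions import Fraction

def marginal(measure, axis):
    """Project a finitely supported measure {(x, y): mass} onto one coordinate."""
    out = defaultdict(Fraction)
    for point, mass in measure.items():
        out[point[axis]] += mass
    return out

def in_family_R(mu, nu):
    """Check (1 - t) * mu_marginal({t}) == t * nu_marginal({t}) at every atom t.

    For nonnegative, finitely supported measures this atom-wise test is
    equivalent to the identities of Definition 1.1 holding for all Borel sets.
    """
    for axis in (0, 1):
        mu_m, nu_m = marginal(mu, axis), marginal(nu, axis)
        for t in set(mu_m) | set(nu_m):
            if (1 - t) * mu_m.get(t, 0) != t * nu_m.get(t, 0):
                return False
    return True

# Illustrative law: mass 1/2 at (1/2, 1) and mass 1/2 at (1/2, 0).
half = Fraction(1, 2)
mu = {(half, Fraction(1)): half}   # part of m carried by the event E
nu = {(half, Fraction(0)): half}   # part of m carried by the complement of E

print(in_family_R(mu, nu))  # the pair (mu, nu) satisfies both identities
print(in_family_R(nu, mu))  # swapping the roles breaks the second identity
```

The order of the pair matters: $\mu$ plays the role of the part of m on which the event occurs, so exchanging $\mu$ and $\nu$ generally leaves the family $\mathcal{R}$.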

Proposition 1.1 motivates the following.

Definition 1.2. For a fixed $m\in\mathcal{C}$, consider the class $\mathcal{R}(m) = \{(\mu,\nu)\in\mathcal{R}\colon m=\mu+\nu\}$. Any element $(\mu,\nu)\in\mathcal{R}(m)$ will be called a representation of a coherent distribution m.

By the very definition, both $\mathcal{C}$ and $\mathcal{R}$, and hence also $\mathcal{R}(m)$, are convex sets. To proceed, let us distinguish the ordering in the class of measures, which will often be used in our considerations below. Namely, for two Borel measures $\mu_1, \mu_2$ supported on the unit square, we write $\mu_1\leq \mu_2$ if we have $\mu_1(A)\le \mu_2(A)$ for all $A\in \mathcal{B}([0,1]^2)$.

Definition 1.3. Let $m\in\mathcal{C}$. We say that the representation $(\mu,\nu)$ of m is

- unique if, for every $(\tilde{\mu}, \tilde{\nu})\in \mathcal{R}$ with $m=\tilde{\mu}+\tilde{\nu}$, we have $\tilde{\mu}=\mu$ and $\tilde{\nu}=\nu$;
- minimal if, for all $(\tilde{\mu}, \tilde{\nu})\in \mathcal{R}$ with $\tilde{\mu}\le \mu$ and $\tilde{\nu}\le \nu$, there exists $\alpha \in [0,1]$ such that $(\tilde{\mu}, \tilde{\nu}) = \alpha \cdot (\mu, \nu)$.

In practice, we are interested only in the minimality of those representations that have been previously verified to be unique. In such a case, the minimality of $(\mu, \nu)$ is just an indecomposability condition for m: we are asking whether every ‘coherent subsystem’ $(\tilde{\mu}, \tilde{\nu})$ contained in m is necessarily just a smaller copy of m. To gain some intuition about the above concepts, let us briefly discuss the following example.

Example 1.1. Consider an arbitrary probability distribution m supported on the diagonal. This distribution is coherent. To see this, let $\xi$ be a random variable on some probability space $(\Omega,\mathcal{F},\mathbb{P})$ such that $\xi\sim m^x$. Consider the product space $(\tilde{\Omega},\tilde{\mathcal{F}},\tilde{\mathbb{P}}) = (\Omega\times[0,1],\mathcal{F}\otimes \mathcal{B}([0,1]),\mathbb{P}\otimes|\cdot|)$, where $|\cdot|$ denotes the Lebesgue measure. Then $\xi$ has the same distribution as $\tilde{\mathbb{P}}(E\mid\mathcal{G})$, where $\mathcal{G}$ is the sub-$\sigma$-algebra of $\tilde{\mathcal{F}}$ consisting of all sets of the form $A\times [0,1]$, with $A\in \mathcal{F}$, and the event $E\in \tilde{\mathcal{F}}$ is given by $E = \{(\omega,y)\in\Omega\times[0,1]\colon y\leq\xi(\omega)\}$. Consequently, we have $(\tilde{\mathbb{P}}(E\mid\mathcal{G}),\tilde{\mathbb{P}}(E\mid\mathcal{G}))\sim(\xi,\xi)\sim m$ and hence m is coherent.

Next, let us describe the representation $(\mu,\nu)$ of m. Since the measure is supported on the diagonal, both components $\mu$ and $\nu$ (if they exist) must also have this property and hence, when checking the conditions in the definition of the family $\mathcal{R}$, it is enough to verify the first of them. But the first condition is equivalent to saying that $\mathrm{d} \mu^x = x\,\mathrm{d} m^x$ and $\mathrm{d} \nu^x = (1-x)\,\mathrm{d} m^x$; this gives the existence and uniqueness of the representation.

Finally, let us discuss the minimality of the representation of m. If m is concentrated at a single point $(\delta,\delta)$, then the same is true for $\mu$ and $\nu$, and hence also for $\tilde{\mu}$ and $\tilde{\nu}$, where $(\tilde{\mu},\tilde{\nu})\in\mathcal{R}$ is a pair as in the definition of minimality. Now, we can easily verify that $(\tilde{\mu},\tilde{\nu})$ is proportional to $(\mu,\nu)$, directly applying the equations in the definition of the class $\mathcal{R}$ with $A=B=\{\delta\}$; thus, the representation is minimal. It remains to study the case in which m is not concentrated at a single point. Then there is a measure $\tilde{m}$ satisfying $\tilde{m}\leq m$, which is not proportional to m. Repeating the above argument with m replaced by $\tilde{m}$, we see that $\tilde{m}$ can be decomposed as the sum $\tilde{\mu}+\tilde{\nu}$, where $(\tilde{\mu},\tilde{\nu})\in\mathcal{R}$ is a pair of measures supported on the diagonal uniquely determined by $\mathrm{d}\tilde{\mu}^x = x\,\mathrm{d}\tilde{m}^x$ and $\mathrm{d}\tilde{\nu}^x = (1-x)\,\mathrm{d}\tilde{m}^x$. Since $\tilde{m}\leq m$, we also have $\tilde{\mu}\leq\mu$ and $\tilde{\nu}\leq\nu$. It remains to note that $(\tilde{\mu},\tilde{\nu})$ is not proportional to $(\mu,\nu)$, since the same is true for $\tilde{m}$ and m. This proves that the representation $(\mu,\nu)$ is not minimal.
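The argument of Example 1.1 can be traced on a diagonal law with finitely many atoms. The sketch below encodes such a law by its first marginal `{x: mass}` and uses the formulas $\mathrm{d}\mu^x = x\,\mathrm{d}m^x$ and $\mathrm{d}\nu^x = (1-x)\,\mathrm{d}m^x$ from the example; the helper names and the specific atoms 1/4, 3/4 and 1/3 are assumptions made for illustration only.

```python
from fractions import Fraction

def diagonal_representation(m_x):
    """Split a diagonal law with first marginal {x: mass} into (mu, nu)."""
    mu = {x: x * w for x, w in m_x.items()}
    nu = {x: (1 - x) * w for x, w in m_x.items()}
    return mu, nu

def dominated(small, big):
    """True if small <= big atom by atom."""
    return all(w <= big.get(x, 0) for x, w in small.items())

def proportional(pair_small, pair_big):
    """True if pair_small == c * pair_big for one constant c.

    Assumes pair_small is dominated by pair_big, so it has no extra atoms.
    """
    ratios = set()
    for small, big in zip(pair_small, pair_big):
        for x, w in big.items():
            if w != 0:
                ratios.add(small.get(x, Fraction(0)) / w)
    return len(ratios) <= 1

# Two atoms on the diagonal: restricting m to one atom gives a sub-pair
# in R that is dominated by (mu, nu) but is not a multiple of it.
m_x = {Fraction(1, 4): Fraction(1, 2), Fraction(3, 4): Fraction(1, 2)}
m_tilde_x = {Fraction(1, 4): Fraction(1, 2)}
rep = diagonal_representation(m_x)
rep_tilde = diagonal_representation(m_tilde_x)
print(dominated(rep_tilde[0], rep[0]), dominated(rep_tilde[1], rep[1]))
print(proportional(rep_tilde, rep))

# A single atom: every dominated sub-pair of this form is a multiple.
one = diagonal_representation({Fraction(1, 3): Fraction(1)})
one_tilde = diagonal_representation({Fraction(1, 3): Fraction(1, 2)})
print(proportional(one_tilde, one))
```

In the two-atom case the sub-pair is dominated but not proportional, so the representation is not minimal; in the one-atom case proportionality holds, in line with the example.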

With these notions at hand, we give the following general characterisation of $\textrm{ext}(\mathcal{C})$.

Theorem 1.4. Let m be a coherent distribution on $[0,1]^2$. Then m is extremal if and only if the representation of m is unique and minimal.

This statement will be established in the next section. Then, in Section 3, we concentrate on extremal coherent measures with finite support. Let $\textrm{ext}_\mathrm{f}(\mathcal{C}) = \{\eta\in\textrm{ext}(\mathcal{C})\colon|\mathrm{supp}(\eta)|<\infty\}$. Theorem 1.4 enables us to deduce several structural properties of $\textrm{ext}_\mathrm{f}(\mathcal{C})$; most importantly, as conjectured in [Reference Zhu21], we show that the support of $\eta\in\textrm{ext}_\mathrm{f}(\mathcal{C})$ cannot contain any axial cycles. Here is the definition.

Definition 1.4. The sequence $\big((x_i,y_i)\big)_{i=1}^{2n}$ with values in $[0,1]^2$ is called an axial cycle if all the points $(x_i, y_i)$ are distinct, the endpoint coordinates $x_1$ and $x_{2n}$ coincide, and $x_{2i} = x_{2i+1}$ and $y_{2i-1} = y_{2i}$ for all i.
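Definition 1.4 translates directly into a check on a finite list of points. The sketch below is one possible reading, under the assumption that "for all i" means every index for which both subscripts lie in the range 1 to 2n (so i = 1, ..., n for the y-condition and i = 1, ..., n-1 for the x-condition); the function name `is_axial_cycle` is hypothetical.

```python
def is_axial_cycle(points):
    """Check Definition 1.4 for a list of 2n points (x_i, y_i), i = 1..2n."""
    if len(points) < 2 or len(points) % 2 != 0:
        return False
    if len(set(points)) != len(points):      # all points must be distinct
        return False
    n = len(points) // 2
    x = [p[0] for p in points]
    y = [p[1] for p in points]
    if x[0] != x[-1]:                        # x_1 == x_{2n}
        return False
    # y_{2i-1} == y_{2i} for i = 1..n (0-based positions 2i-2 and 2i-1)
    horizontal = all(y[2 * i - 2] == y[2 * i - 1] for i in range(1, n + 1))
    # x_{2i} == x_{2i+1} for i = 1..n-1 (0-based positions 2i-1 and 2i)
    vertical = all(x[2 * i - 1] == x[2 * i] for i in range(1, n))
    return horizontal and vertical

# The four corners of the unit square, traversed along the axes (n = 2).
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(is_axial_cycle(square))

# Moving the last point off the line x = 0 breaks the closing condition.
broken = [(0, 0), (1, 0), (1, 1), (0.5, 1)]
print(is_axial_cycle(broken))
```

Geometrically, consecutive points alternate between sharing a y-coordinate and sharing an x-coordinate, and the last point returns to the vertical line through the first, so the path closes up using only axis-parallel steps.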

Remarkably, the same ‘no axial cycle’ property holds true for extremal doubly stochastic measures (permutons); for the relevant discussion, see [Reference Hestir and Williams16]. Next, in Section 4, we apply our previous results and obtain the following reduction towards Theorem 1.3: for all $\alpha \ge1$,

Here, the supremum is taken over all n and all sequences $\tilde{\textbf{z}} = (z_0, z_1, \dots, z_{n+1})$ such that $z_0=z_{n+1}=0$, $z_i> 0$ for all $i=1,2,\ldots,n$, and $\sum_{i=1}^{n}z_i=1$. Finally, using several combinatorial arguments and reductions, we prove Theorem 1.3 by direct analysis of the right-hand side of (1.1).

2. Coherent measures, representations

Let $\mathcal{M}([0,1]^2)$ and $\mathcal{M}([0,1])$ denote the spaces of nonnegative Borel measures on $[0,1]^2$ and [0, 1], respectively. For $\mu \in \mathcal{M}([0,1]^2)$, let $\mu^x, \mu^y \in \mathcal{M}([0,1])$ be defined by $\mu^x(A) = \mu(A\times [0,1])$ and $\mu^y(B)=\mu([0,1]\times B)$ for all Borel subsets $A,B\in\mathcal{B}([0,1])$. We begin with the following characterisation of $\mathcal{C}$.

Proposition 2.1. Let $m \in \mathcal{M}([0,1]^2)$. The measure m is a coherent distribution if and only if it is the joint distribution of a two-variate random vector (X,Y) such that $X=\mathbb{E}(Z\mid X)$ and $Y=\mathbb{E}(Z\mid Y)$ almost surely for some random variable Z with $0\le Z\le 1$.

Proof. This is straightforward. See [Reference Burdzy and Pitman6, Reference Cichomski7].

Recall the definition of the class $\mathcal{R}$ formulated in the previous section. Let us study the connection between this class and the family of all coherent distributions.

Proof of Proposition 1.1. First, we show that the decomposition $m=\mu+\nu$ exists for all $m\in \mathcal{C}$. Indeed, by virtue of Proposition 2.1, we can find a random vector $(X,Y)\sim m$ defined on some probability space $(\Omega, \mathcal{F}, \mathbb{P})$ such that $X=\mathbb{E}(Z\mid X)$ and $Y=\mathbb{E}(Z\mid Y)$ for some random variable $Z\in[0,1]$. For a set $C\in\mathcal{B}([0,1]^2)$, we put

\begin{align} \mu(C) = \int_{\{(X,Y)\in C\}}Z\,\mathrm{d}\mathbb{P}, \qquad \nu(C) = \int_{\{(X,Y)\in C\}}(1-Z)\,\mathrm{d}\mathbb{P}. \tag{2.1} \end{align}

Then the equality $m=\mu+\nu$ is evident. Furthermore, for a fixed $A\in \mathcal{B}([0,1])$,

\begin{align} \int_{\{X\in A\}}X\,\mathrm{d}\mathbb{P} = \int_{\{X\in A\}}Z\,\mathrm{d}\mathbb{P} = \int_{A}1\,\mathrm{d}\mu^x, \tag{2.2} \end{align}

where the first equality is due to $X=\mathbb{E}(Z\mid X)$ and the second is a consequence of (2.1). Moreover, we may also write

\begin{align} \int_{\{X\in A\}}X\,\mathrm{d}\mathbb{P} = \int_{A}x\,\mathrm{d}m^x = \int_{A}x\,\mathrm{d}\mu^x + \int_{A}x\,\mathrm{d}\nu^x. \tag{2.3} \end{align}

Combining (2.2) and (2.3), we get $\int_{A}(1-x)\,\mathrm{d}\mu^x = \int_{A}x\,\mathrm{d}\nu^x$ for all $A\in \mathcal{B}([0,1])$. The symmetric condition (the second requirement in Definition 1.1) is shown analogously. This completes the first part of the proof.

Now, pick a probability measure m on $[0,1]^2$ such that $m=\mu+\nu$ for some $(\mu, \nu) \in \mathcal{R}$. We need to show that m is coherent. To this end, consider the probability space $([0,1]^2, \mathcal{B}([0,1]^2),m)$ and the random variables $X,Y\colon[0,1]^2 \rightarrow [0,1]$ defined by $X(x,y)=x$ and $Y(x,y)=y$, $x,y\in [0,1]$. Additionally, let Z denote the Radon–Nikodym derivative of $\mu$ with respect to m: we have $0\le Z \le 1$ m-almost surely and $\mu(C) = \int_{C}Z\,\mathrm{d}m$ for all $C\in\mathcal{B}([0,1]^2)$. Again by Proposition 2.1, it is sufficient to verify that $X=\mathbb{E}(Z\mid X)$ and $Y=\mathbb{E}(Z\mid Y)$. By symmetry, it is enough to show the first equality. Fix $A\in \mathcal{B}([0,1])$ and note that

\begin{align} \int_{\{X\in A\}}X\,\mathrm{d}m = \int_{A}x\,\mathrm{d}m^x = \int_{A}x\,\mathrm{d}\mu^x + \int_{A}x\,\mathrm{d}\nu^x. \tag{2.4} \end{align}

Similarly, we also have

\begin{align} \int_{\{X\in A\}}Z\,\mathrm{d}m = \mu(A\times[0,1]) = \int_{A}1\,\mathrm{d}\mu^x = \int_{A}x\,\mathrm{d}\mu^x + \int_{A}(1-x)\,\mathrm{d}\mu^x. \tag{2.5} \end{align}

Finally, note that by $(\mu, \nu) \in \mathcal{R}$, the right-hand sides of (2.4) and (2.5) are equal. Therefore, we obtain the identity $\int_{\{X\in A\}}X\,\mathrm{d}m = \int_{\{X\in A\}}Z\,\mathrm{d}m$ for arbitrary $A\in \mathcal{B}([0,1])$. This yields the claim.

We turn our attention to the characterisation of $\mathrm{ext}(\mathcal{C})$ stated in the previous section.

Proof of Theorem 1.4. For the implication ‘$\Rightarrow$’, let m be an extremal coherent measure and suppose, on the contrary, that $(\mu_1, \nu_1)$ and $(\mu_2, \nu_2)$ are two different elements of $\mathcal{R}(m)$. We will prove that $m-\mu_1+\mu_2$ and $m-\mu_2+\mu_1$ are also coherent distributions. Because

\begin{align*} m = \tfrac{1}{2}(m-\mu_1+\mu_2) + \tfrac{1}{2}(m-\mu_2+\mu_1), \end{align*}

we obtain a contradiction with the assumed extremality of m. By symmetry, it is enough to show that $(m-\mu_1+\mu_2) \in \mathcal{C}$. To this end, by virtue of Proposition 1.1, it suffices to check that $m-\mu_1+\mu_2$ is a probability measure and $(\mu_2, m-\mu_1) \in \mathcal{R}$. First, note that $\nu_1=m-\mu_1$ is nonnegative, and fix an arbitrary $A\in \mathcal{B}([0,1])$. As $(\mu_1, \nu_1)$ and $(\mu_2, \nu_2)$ are representations of m, Definition 1.1 gives

\begin{align} \int_{A}1\,\mathrm{d}\mu_1^x = \int_{A}x\,(\mathrm{d}\nu_1^x+\mathrm{d}\mu_1^x) = \int_{A}x\,\mathrm{d}m^x, \nonumber \\[5pt] \int_{A}1\,\mathrm{d}\mu_2^x = \int_{A}x\,(\mathrm{d}\nu_2^x+\mathrm{d}\mu_2^x) = \int_{A}x\,\mathrm{d}m^x, \tag{2.6} \end{align}

so $\mu_1^x(A)=\mu_2^x(A)$. Similarly, we can deduce that $\mu_1^y=\mu_2^y$, which means that the marginal distributions of $\mu_1$ and $\mu_2$ are equal. This, together with $m-\mu_1\ge 0$, proves that $m-\mu_1+\mu_2$ is a probability measure. Next, using (2.6) and $\mu_1^x=\mu_2^x$, we can also write

\begin{align} \int_{A}(1-x)\,\mathrm{d}\mu_2^x = \int_{A}x\,\mathrm{d}m^x - \int_{A}x\,\mathrm{d}\mu_1^x = \int_{A}x\,\mathrm{d}(m-\mu_1)^x. \tag{2.7} \end{align}

In the same way, we get

\begin{align} \int_{B}(1-y)\,\mathrm{d}\mu_2^y = \int_{B}y\,\mathrm{d}m^y - \int_{B}y\,\mathrm{d}\mu_1^y = \int_{B}y\,\mathrm{d}(m-\mu_1)^y \tag{2.8} \end{align}

for all $B\in \mathcal{B}([0,1])$. By (2.7) and (2.8), we obtain $(\mu_2, m-\mu_1) \in \mathcal{R}$, and this completes the proof of uniqueness.
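The mechanism of the uniqueness argument can be observed on a small example. The sketch below assumes m is the uniform law on the four-point grid $\{1/3, 2/3\}^2$, an example chosen here for illustration and not taken from the paper. Solving the marginal identities of Definition 1.1 by hand leaves one free parameter `z11` in [0, 1/3] for the density of $\mu$ with respect to m, so the representation is not unique. All function and variable names are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction as F

def marginal(measure, axis):
    """Project a finitely supported measure {(x, y): mass} onto one coordinate."""
    out = defaultdict(F)
    for point, mass in measure.items():
        out[point[axis]] += mass
    return out

def in_family_R(mu, nu):
    """Atom-wise form of Definition 1.1 for finitely supported measures."""
    for axis in (0, 1):
        mu_m, nu_m = marginal(mu, axis), marginal(nu, axis)
        for t in set(mu_m) | set(nu_m):
            if (1 - t) * mu_m.get(t, 0) != t * nu_m.get(t, 0):
                return False
    return True

a, b = F(1, 3), F(2, 3)
grid = [(a, a), (a, b), (b, a), (b, b)]
m = {p: F(1, 4) for p in grid}          # assumed example: uniform on the grid

def representation(z11):
    """Pair (mu, nu) with density d(mu)/dm fixed by the parameter z11."""
    z = dict(zip(grid, (z11, b - z11, b - z11, b + z11)))
    mu = {p: m[p] * z[p] for p in grid}
    nu = {p: m[p] - mu[p] for p in grid}
    return mu, nu

mu1, nu1 = representation(F(0))
mu2, nu2 = representation(F(1, 3))

# The two measures from the proof: m is their midpoint.
m_plus = {p: m[p] - mu1[p] + mu2[p] for p in grid}
m_minus = {p: m[p] - mu2[p] + mu1[p] for p in grid}

print(in_family_R(mu1, nu1), in_family_R(mu2, nu2))   # two representations
print(in_family_R(mu2, nu1), in_family_R(mu1, nu2))   # the mixed pairs
print(m_plus != m_minus)
```

Because both mixed pairs lie in $\mathcal{R}$, Proposition 1.1 shows that `m_plus` and `m_minus` are coherent, and since they differ while averaging to m, this particular m is not extremal.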

To show the minimality, let m be an extremal coherent measure with the representation $(\mu,\nu)$ (which is unique, as we have just proved). Consider any nonzero $(\tilde{\mu},\tilde{\nu})\in\mathcal{R}$ with $\tilde{\mu}\le \mu$ and $\tilde{\nu}\le \nu$. Then, by the very definition of $\mathcal{R}$, $(\mu-\tilde{\mu},\nu-\tilde{\nu})\in\mathcal{R}$. Therefore, by Proposition 1.1, we get $\alpha^{-1}(\tilde{\mu}+\tilde{\nu}),(1-\alpha)^{-1}(m-\tilde{\mu}-\tilde{\nu}) \in \mathcal{C}$, where $\alpha=(\tilde{\mu}+\tilde{\nu})([0,1]^2) \in (0,1]$. We have the identity

\begin{align*} m = \alpha\cdot\big(\alpha^{-1}(\tilde{\mu}+\tilde{\nu})\big) + (1-\alpha)\cdot\big((1-\alpha)^{-1}(m-\tilde{\mu}-\tilde{\nu})\big), \end{align*}

which, combined with the extremality of m, yields $m=\alpha^{-1}(\tilde{\mu}+\tilde{\nu})=\alpha^{-1}\tilde{\mu}+\alpha^{-1}\tilde{\nu}$. But $(\alpha^{-1}\tilde{\mu},\alpha^{-1}\tilde{\nu})$ belongs to $\mathcal{R}$, since $(\tilde{\mu},\tilde{\nu})$ does, and hence $(\alpha^{-1}\tilde{\mu},\alpha^{-1}\tilde{\nu})$ is a representation of m. By the uniqueness, we deduce that $(\tilde{\mu}, \tilde{\nu}) = \alpha\cdot(\mu,\nu)$.

For the implication ‘$\Leftarrow$’, let m be a coherent distribution with the unique and minimal representation $(\mu, \nu)$. To show that m is extremal, consider the decomposition $m=\beta \cdot m_1+(1-\beta)\cdot m_2$ for some $m_1, m_2 \in \mathcal{C}$ and $\beta \in (0,1)$. Moreover, let $(\mu_1, \nu_1)\in \mathcal{R}(m_1)$ and $(\mu_2, \nu_2) \in \mathcal{R}(m_2)$. By the convexity of $\mathcal{R}$, we have

\begin{align*} (\mu', \nu') \;:\!=\; \beta\cdot(\mu_1, \nu_1)+(1-\beta)\cdot(\mu_2, \nu_2) \in \mathcal{R}(m), \end{align*}

and hence, by the uniqueness, we get $(\mu', \nu')=(\mu, \nu)$. Then, directly from the previous equation, we have $\beta \mu_1\le \mu$ and $\beta \nu_1 \le \nu$. Combining this with the minimality of $(\mu, \nu)$, we get $(\beta\mu_1,\beta\nu_1)=\alpha (\mu,\nu)$ for some $\alpha\in [0,1]$. Since $m=\mu+\nu$ and $m_1=\mu_1+\nu_1$ are probability measures, this gives $\alpha=\beta$ and hence $(\mu_1, \nu_1)=(\mu, \nu)$. This implies $m=m_1$ and completes the proof.

3. Extreme points with finite support

In this section we study the geometric structure of the supports of measures belonging to $\mathrm{ext}_\mathrm{f}(\mathcal{C}) = \{\eta \in \mathrm{ext}(\mathcal{C}) \colon |\mathrm{supp}(\eta)|<\infty\}$. Our key result is presented in Theorem 3.1: we prove that the support of an extremal coherent distribution cannot contain any axial cycles (see Definition 1.4). Let us emphasise that this property was originally conjectured in [Reference Zhu21]. We start with a simple combinatorial observation: it is straightforward to check that certain special ‘alternating’ cycles are forbidden.

Definition 3.1. Let $\eta$ be a coherent distribution with a unique representation $(\mu, \nu)$, and let $\big((x_i,y_i)\big)_{i=1}^{2n}$ be an axial cycle contained in $\mathrm{supp}(\eta)$. Then $\big((x_i,y_i)\big)_{i=1}^{2n}$ is an alternating cycle if $(x_{2i+1},y_{2i+1})\in\mathrm{supp}(\mu)$ and $(x_{2i}, y_{2i})\in\mathrm{supp}(\nu)$, for all $i=1,2,\dots, n$ (with the convention that $x_{2n+1}=x_1$, $y_{2n+1}=y_1$).

An example of such an alternating cycle is shown in Figure 1.

Figure 1. An example of an alternating cycle. Red points represent probability masses in $\mathrm{supp}(\mu)$, while blue points indicate probability masses in $\mathrm{supp}(\nu)$. Arrows outline a possible transformation of the representation $(\mu,\nu)$.

Proposition 3.1. If $\eta \in \mathrm{ext}_\mathrm{f}(\mathcal{C})$, then $\mathrm{supp}(\eta)$ does not contain any alternating cycles.

Proof. Let $\eta$ be a coherent distribution with a unique representation $(\mu,\nu)$ and a finite support. Additionally, assume that $\big((x_i,y_i)\big)_{i=1}^{2n}$ is an alternating cycle contained in $\mathrm{supp}(\eta)$. Let $\delta$ be the smaller of the two numbers $\min_{0\le i \le n-1}\mu(x_{2i+1}, y_{2i+1})$ and $\min_{1\le i \le n}\nu(x_{2i}, y_{2i})$ (for brevity, in what follows we will skip the braces and write $\mu(a,b)$, $\nu(a,b)$ instead of $\mu(\{(a,b)\})$, $\nu(\{(a,b)\})$, respectively). By Definition 3.1, we have $\delta>0$. Now, consider the transformation $(\mu, \nu) \mapsto (\mu', \nu')$ described by the following requirements:

1. for $i=0,1,\dots, n-1$, put

\begin{align*}\mu'(x_{2i+1},y_{2i+1}) \;:\!=\; \mu(x_{2i+1},y_{2i+1}) - \delta, \qquad \nu'(x_{2i+1},y_{2i+1}) \;:\!=\; \nu(x_{2i+1},y_{2i+1}) + \delta;\end{align*}

2. for $i=1,2,\dots, n$, put

\begin{align*}\mu'(x_{2i}, y_{2i}) \;:\!=\; \mu(x_{2i}, y_{2i})+\delta, \qquad \nu'(x_{2i}, y_{2i}) \;:\!=\; \nu(x_{2i}, y_{2i})-\delta;\end{align*}

3. for $(x,y)\not \in \{ (x_i,y_i)\colon 1\le i \le 2n\}$, set $\mu'(x,y)=\mu(x,y)$, $\nu'(x,y)=\nu(x,y)$.

Note that $\mu$ and $\mu'$, as well as $\nu$ and $\nu'$, have the same marginal distributions and hence $(\mu', \nu')\in \mathcal{R}$. We also have $\mu'+\nu'=\mu+\nu=\eta$ and thus $(\mu',\nu')\in\mathcal{R}(\eta)$. This contradicts the uniqueness of the representation $(\mu,\nu)$ and shows that $\mathrm{supp}(\eta)$ cannot contain an alternating cycle. By Theorem 1.4, this ends the proof.
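The mass shift used in the proof above can be tried on a small example. The following is only a sketch: the four-point rectangle, the weights, and the helper names `marginals` and `shift_along_cycle` are hypothetical, and the toy pair is not claimed to belong to $\mathcal{R}$. The point is merely that moving $\delta$ along an alternating cycle leaves the marginals of each measure and the sum $\mu+\nu$ unchanged while changing the pair itself.

```python
from collections import defaultdict
from fractions import Fraction as F


def marginals(measure):
    """Return the x- and y-marginals of a finitely supported measure (zero entries dropped)."""
    mx, my = defaultdict(F), defaultdict(F)
    for (x, y), w in measure.items():
        mx[x] += w
        my[y] += w
    drop_zeros = lambda d: {k: v for k, v in d.items() if v != 0}
    return drop_zeros(mx), drop_zeros(my)


def shift_along_cycle(mu, nu, cycle):
    """Apply requirements 1-3: odd-indexed cycle points lose delta in mu and gain it in nu,
    even-indexed points do the opposite, all other points are untouched."""
    odd, even = cycle[0::2], cycle[1::2]  # (x_1,y_1),(x_3,y_3),... and (x_2,y_2),(x_4,y_4),...
    delta = min(min(mu[p] for p in odd), min(nu[p] for p in even))
    mu2, nu2 = defaultdict(F, mu), defaultdict(F, nu)
    for p in odd:
        mu2[p] -= delta
        nu2[p] += delta
    for p in even:
        mu2[p] += delta
        nu2[p] -= delta
    return dict(mu2), dict(nu2), delta


# Hypothetical toy data: an alternating cycle on the corners of a rectangle.
a, b = F(1, 4), F(3, 4)
cycle = [(a, a), (a, b), (b, b), (b, a)]
mu = {(a, a): F(1, 8), (b, b): F(1, 8)}   # masses at odd-indexed points
nu = {(a, b): F(1, 4), (b, a): F(1, 2)}   # masses at even-indexed points

mu2, nu2, delta = shift_along_cycle(mu, nu, cycle)
same_marginals = marginals(mu) == marginals(mu2) and marginals(nu) == marginals(nu2)
same_sum = all(mu.get(p, 0) + nu.get(p, 0) == mu2[p] + nu2[p] for p in cycle)
print(delta, same_marginals, same_sum)
```

Exact rational arithmetic (`fractions.Fraction`) is used so that the equality checks are not affected by rounding.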

Before the further combinatorial analysis, we need to introduce some useful auxiliary notation. For $\mu, \nu \in \mathcal{M}([0,1]^2)$ with $|\mathrm{supp}(\mu+\nu)|<\infty$, we define a quotient function $q_{(\mu, \nu)}\colon \mathrm{supp}(\mu+\nu) \rightarrow [0,1]$ by

\begin{align*} q_{(\mu,\nu)}(x,y) = \frac{\mu(x,y)}{\mu(x,y)+\nu(x,y)}. \end{align*}

In what follows, we omit the subscripts and write q for $q_{(\mu,\nu)}$ whenever the choice for $(\mu,\nu)$ is clear from the context.

Proposition 3.2. Let $\mu, \nu \in \mathcal{M}([0,1]^2)$ and $|\mathrm{supp}(\mu+\nu)|<\infty$. Then $(\mu, \nu) \in \mathcal{R}$ if and only if the following conditions hold simultaneously:

- for every x satisfying $\mu(\{x\}\times[0,1])+\nu(\{x\}\times [0,1])>0$,

\begin{equation} \sum_{\substack{y\in[0,1],\\ (x,y)\in\mathrm{supp}(\mu+\nu)}}q(x,y) \frac{\mu(x,y)+\nu(x,y)}{\mu(\{x\}\times[0,1])+\nu(\{x\}\times [0,1])} = x; \tag{3.1} \end{equation}

- for every y satisfying $\mu([0,1]\times \{y\})+\nu([0,1]\times \{y\})>0$,

\begin{equation} \sum_{\substack{x\in[0,1],\\ (x,y)\in\mathrm{supp}(\mu+\nu)}}q(x,y) \frac{\mu(x,y)+\nu(x,y)}{\mu([0,1]\times \{y\})+\nu([0,1]\times \{y\})} = y, \tag{3.2} \end{equation}

where the sums in (3.1) and (3.2) are well defined: in both cases, there is only a finite number of nonzero summands.

Proof. Due to $|\mathrm{supp}(\mu+\nu)|<\infty$, this is a simple consequence of Definition 1.1.

Next, we will require an additional distinction between three different types of points.

Definition 3.2. Let $(\mu, \nu)\in \mathcal{R}$. A point $(x,y)\in \mathrm{supp}(\mu+\nu)$ is said to be

- (i) a lower out point if $q(x,y)< \min(x,y)$;
- (ii) an upper out point if $q(x,y)> \max(x,y)$;
- (iii) a cut point if it is not an out point, i.e. $x\le q(x,y)\le y$ or $y\le q(x,y) \le x$.

Finally, for the sake of completeness, we include a formal definition of an axial path.

Definition 3.3. The sequence $\big((x_i,y_i)\big)_{i=1}^{n}$ with terms in $[0,1]^2$ is called an axial path if all the points $(x_i, y_i)$ are distinct, and either $x_{2i} = x_{2i+1}$ and $y_{2i-1} = y_{2i}$ for all i, or $y_{2i} = y_{2i+1}$ and $x_{2i-1} = x_{2i}$ for all i.
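Definition 3.3 can be turned into a short membership test. The sketch below assumes the reading that consecutive points alternately share a coordinate (x, then y, then x, and so on, or the same pattern starting with y); the function name `is_axial_path` is hypothetical and not taken from the paper.

```python
from fractions import Fraction as F


def is_axial_path(points):
    """Check that the points are distinct and that consecutive points
    alternately share the x-coordinate (index 0) and the y-coordinate (index 1)."""
    if len(set(points)) != len(points):
        return False

    def alternates(first_shared):
        # The pair (points[j], points[j+1]) must agree on coordinate (first_shared + j) % 2.
        return all(
            points[j][(first_shared + j) % 2] == points[j + 1][(first_shared + j) % 2]
            for j in range(len(points) - 1)
        )

    return alternates(0) or alternates(1)


# Four points that alternately share y, x, y.
path = [(F(1, 8), F(1, 4)), (F(1, 2), F(1, 4)), (F(1, 2), F(3, 4)), (F(7, 8), F(3, 4))]
print(is_axial_path(path))
print(is_axial_path([(0, 0), (0, 1), (0, 2)]))  # three consecutive points on one vertical line
```

The test only encodes the alternation condition; detecting axial cycles in the sense of Definition 1.4 is not attempted here.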

To develop some intuition, it is convenient to inspect the following example.

Example 3.1. Let m be a probability measure given by

\begin{align*} m\bigg(\frac{1}{8},\frac{1}{4}\bigg)=\frac{84}{196}, \qquad m\bigg(\frac{1}{2},\frac{1}{4}\bigg)=\frac{14}{196}, \qquad m\bigg(\frac{1}{2},\frac{3}{4}\bigg)=\frac{14}{196}, \qquad m\bigg(\frac{7}{8},\frac{3}{4}\bigg)=\frac{84}{196}. \end{align*}

There are five observations, which we discuss separately.

- (i) Consider the decomposition $m=\mu+\nu$, where $(\mu,\nu)$ is determined by the quotient function

\begin{align*} q\bigg(\frac{1}{8},\frac{1}{4}\bigg)=\frac{1}{8}, \qquad q\bigg(\frac{1}{2},\frac{1}{4}\bigg)=1, \qquad q\bigg(\frac{1}{2},\frac{3}{4}\bigg)=0, \qquad q\bigg(\frac{7}{8},\frac{3}{4}\bigg)=\frac{7}{8}. \end{align*}

Using Proposition 3.2, we can check that $(\mu,\nu)\in\mathcal{R}$. For instance, for $y=\frac{1}{4}$ we get

\begin{equation} \frac{q\big(\frac{1}{8},\frac{1}{4}\big)\cdot m\big(\frac{1}{8},\frac{1}{4}\big) + q\big(\frac{1}{2},\frac{1}{4}\big)\cdot m\big(\frac{1}{2},\frac{1}{4}\big)} {m\big(\frac{1}{8}, \frac{1}{4}\big)+m\big(\frac{1}{2}, \frac{1}{4}\big)} = \frac{\frac{1}{8}\cdot \frac{84}{196}+1\cdot \frac{14}{196}}{\frac{84}{196}+\frac{14}{196}} = \frac{1}{4}, \tag{3.3} \end{equation}

which agrees with (3.2). As a direct consequence, by Proposition 1.1, we have $m\in \mathcal{C}$.

- (ii) Observe that $\big(\frac{1}{8}, \frac{1}{4}\big)$ and $\big(\frac{7}{8}, \frac{3}{4}\big)$ are cut points, $\big(\frac{1}{2}, \frac{1}{4}\big)$ is an upper out point, and $\big(\frac{1}{2}, \frac{3}{4}\big)$ is a lower out point. Moreover, $\mathrm{supp}(m)$ is an axial path without cycles (see Figure 2).

Figure 2. Support of a coherent distribution m. Purple points (endpoints of the path) are cut points. The red point represents a mass in $\mathrm{supp}(\mu)$ and is an upper out point. The blue point indicates a mass in $\mathrm{supp}(\nu)$ and it is a lower out point.

- (iii) Notably, $(\mu, \nu)$ is a unique representation of m. Indeed, $\big(\frac{1}{8},\frac{1}{4}\big)$ is the only point in $\mathrm{supp}(m)$ with x-coordinate equal to $\frac{1}{8}$ and hence $q\big(\frac{1}{8}, \frac{1}{4}\big)=\frac{1}{8}$. Accordingly, $q\big(\frac{1}{2}, \frac{1}{4}\big)=1$ is now a consequence of (3.3). The derivation of $q\big(\frac{1}{2}, \frac{3}{4}\big)=0$ and $q\big(\frac{7}{8}, \frac{3}{4}\big)=\frac{7}{8}$ follows from an analogous computation.
- (iv) Finally, the representation $(\mu, \nu)$ is minimal; let $(\tilde{\mu},\tilde{\nu})\in\mathcal{R}$ satisfy $\tilde{\mu}\le\mu$ and $\tilde{\nu}\le\nu$. Suppose that $\big(\frac{1}{8}, \frac{1}{4}\big)\in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$. Again, as $\big(\frac{1}{8}, \frac{1}{4}\big)$ is the only point in $\mathrm{supp}(m)$ with x-coordinate equal to $\frac{1}{8}$, we get $q_{(\tilde{\mu},\tilde{\nu})}\big(\frac{1}{8},\frac{1}{4}\big)=\frac{1}{8}$. Next, assume that $\big(\frac{1}{2}, \frac{1}{4}\big)\in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$. As $\tilde{\nu}\big(\frac{1}{2}, \frac{1}{4}\big) \le \nu\big(\frac{1}{2}, \frac{1}{4}\big)=0$, we have $q_{(\tilde{\mu},\tilde{\nu})}\big(\frac{1}{2}, \frac{1}{4}\big)=1$. Likewise, we can check that $q_{(\tilde{\mu},\tilde{\nu})}(x,y) = q_{(\mu, \nu)}(x,y)$ for all $(x,y)\in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$. From this and Proposition 3.2, we easily obtain that $\tilde{\mu} +\tilde{\nu}=0$ or $\mathrm{supp}(\tilde{\mu}+\tilde{\nu})=\mathrm{supp}(m)$. For example,

  - if $\big(\frac{1}{2}, \frac{1}{4}\big)\in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$, then (3.1) gives $\big(\frac{1}{2}, \frac{3}{4}\big) \in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$;
  - if $\big(\frac{1}{2}, \frac{3}{4}\big)\in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$, then (3.2) yields $\big(\frac{7}{8}, \frac{3}{4}\big) \in \mathrm{supp}(\tilde{\mu}+\tilde{\nu})$.

  Therefore, if $\tilde{\mu}+\tilde{\nu}\not=0$, then the measure $\tilde{\mu}+\tilde{\nu}$ is supported on the same set as m and $q_{(\tilde{\mu},\tilde{\nu})} \equiv q_{(\mu, \nu)}$. For the same reason, i.e. using Proposition 3.2 and the path structure of $\mathrm{supp}(m)$, it follows that $\tilde{\mu}+ \tilde{\nu} = \alpha \cdot m$ for some $\alpha \in [0,1]$. For instance, by (3.2) for $y=\frac{1}{4}$, we get

  \begin{align*} \frac{\frac{1}{8}\cdot\tilde{m}\big(\frac{1}{8},\frac{1}{4}\big) + 1\cdot\tilde{m}\big(\frac{1}{2}, \frac{1}{4}\big)} {\tilde{m}\big(\frac{1}{8},\frac{1}{4}\big) + \tilde{m}\big(\frac{1}{2},\frac{1}{4}\big)} = \frac{1}{4}, \end{align*}

  where $\tilde{m}= \tilde{\mu}+\tilde{\nu}.$ Hence $\tilde{m}\big(\frac{1}{8}, \frac{1}{4}\big)\tilde{m}\big(\frac{1}{2},\frac{1}{4}\big)^{-1} = m\big(\frac{1}{8}, \frac{1}{4}\big)m\big(\frac{1}{2}, \frac{1}{4}\big)^{-1}=\frac{84}{14}$.

- (v) By the above analysis and Theorem 1.4, we conclude that $m\in\mathrm{ext}_\mathrm{f}(\mathcal{C})$.
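The computations in Example 3.1 can be checked mechanically. The sketch below is an illustration under stated assumptions: the masses $\frac{84}{196}$ and $\frac{14}{196}$ at the first two points are those appearing in (3.3), while the masses $\frac{14}{196}$ and $\frac{84}{196}$ at the remaining two points are the values forced by (3.1) at $x=\frac{1}{2}$ and by (3.2) at $y=\frac{3}{4}$. The helper names `line_average` and `point_type` are hypothetical.

```python
from fractions import Fraction as F

# Masses of m on its four support points (see the lead-in for their origin).
m = {
    (F(1, 8), F(1, 4)): F(84, 196),
    (F(1, 2), F(1, 4)): F(14, 196),
    (F(1, 2), F(3, 4)): F(14, 196),
    (F(7, 8), F(3, 4)): F(84, 196),
}
# Quotient function q from observation (i).
q = {
    (F(1, 8), F(1, 4)): F(1, 8),
    (F(1, 2), F(1, 4)): F(1),
    (F(1, 2), F(3, 4)): F(0),
    (F(7, 8), F(3, 4)): F(7, 8),
}


def line_average(axis, c):
    """Left-hand side of (3.1) (axis=0) or (3.2) (axis=1) on the line {coordinate = c}."""
    pts = [p for p in m if p[axis] == c]
    return sum(q[p] * m[p] for p in pts) / sum(m[p] for p in pts)


def point_type(p):
    """Classify a support point according to Definition 3.2."""
    x, y = p
    if q[p] < min(x, y):
        return "lower out"
    if q[p] > max(x, y):
        return "upper out"
    return "cut"


# (3.1) and (3.2) must hold on every vertical and horizontal line meeting the support.
in_R = all(line_average(axis, p[axis]) == p[axis] for p in m for axis in (0, 1))
types = {p: point_type(p) for p in m}

print("total mass:", sum(m.values()), "| conditions (3.1), (3.2) hold:", in_R)
for p, t in types.items():
    print(tuple(str(c) for c in p), t)
```

The classification printed at the end can be compared with observation (ii).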

To clarify the main reasoning, we first record an obvious geometric lemma.

Lemma 3.1. Let $\big((x_i,y_i)\big)_{i=1}^{n}$ be an axial path without cycles.

- (i) If $x_{n-1}=x_n$ (or $y_{n-1}=y_n$), then $y_n \not= y_j$ (or $x_n\not = x_j$) for all $j<n$.
- (ii) For every $x,y \in [0,1]$, $\max\{|\{i\colon x_i=x\}|,\,|\{j\colon y_j=y\}|\} < 3$.

Proof. Part (i) can be verified by induction. Part (ii) follows from (i).

We are now ready to demonstrate the central result of this section.

Theorem 3.1. If $\eta \in \mathrm{ext}_\mathrm{f}(\mathcal{C})$, then $\mathrm{supp}(\eta)$ is an axial path without cycles.

Let us briefly explain the main idea of the proof. For $\eta \in \mathrm{ext}_\mathrm{f}(\mathcal{C})$, we inductively construct a special axial path contained in $\mathrm{supp}(\eta)$, which does not contain any cut points (apart from the endpoints). We show that the axial path obtained in this process is acyclic and involves all the points from $\mathrm{supp}(\eta)$.

Proof of Theorem 3.1. Fix $\eta \in \mathrm{ext}_\mathrm{f}(\mathcal{C})$ and let $(\mu, \nu)$ be the unique representation of $\eta$. By $\mathcal{L}(\eta)$ and $\mathcal{U}(\eta)$ denote the sets of lower and upper out points. Choose any $(x_0,y_0)\in \mathrm{supp}(\eta)$. We consider two separate cases.

Case I: $(x_0, y_0)$ is an out point. With no loss of generality, we can assume that $(x_0, y_0) \in \mathcal{L}(\eta)$. We then use the following inductive procedure.

- (i) Suppose we have successfully found $(x_n, y_n)\in \mathcal{L}(\eta)$ and it is the first time we have chosen a point with the x-coordinate equal to $x_n$. Since $(x_n,y_n)\in\mathcal{L}(\eta)$, we have $q(x_n, y_n)<x_n$. By (3.1), there must exist a point $(x_{n+1},y_{n+1})\in \mathrm{supp}(\eta)$ such that $x_{n+1}=x_n$ and $q(x_{n+1}, y_{n+1})>x_n$. We pick one such point and add it at the end of the path. If $(x_{n+1}, y_{n+1})$ is a cut point or an axial cycle was just created, we exit the loop. Otherwise, note that $(x_{n+1},y_{n+1})\in\mathcal{U}(\eta)$ and $y_{n+1}\not=y_j$ for all $j<n+1$ (by Lemma 3.1(i)). Go to (ii).
- (ii) Assume we have successfully found $(x_n, y_n)\in \mathcal{U}(\eta)$ and it is the first time we have chosen a point with the y-coordinate equal to $y_n$. Since $(x_n, y_n)\in \mathcal{U}(\eta)$, we have $q(x_n, y_n)>y_n$. By (3.2), there must exist a point $(x_{n+1},y_{n+1})\in \mathrm{supp}(\eta)$ such that $y_{n+1}=y_n$ and $q(x_{n+1}, y_{n+1})<y_n$. We pick one such point and add it at the end of the path. If $(x_{n+1}, y_{n+1})$ is a cut point or an axial cycle was just created, we exit the loop. Otherwise, note that $(x_{n+1},y_{n+1})\in\mathcal{L}(\eta)$ and $x_{n+1}\not=x_j$ for all $j<n+1$ (by Lemma 3.1(i)). Go to (i).

As $|\mathrm{supp}(\eta)|<\infty$, the procedure terminates after a finite number of steps (denote it by k) and produces an axial path $\big((x_i,y_i)\big)_{i=0}^{k}$ contained in $\mathrm{supp}(\eta)$. Notice that it is possible that $(x_k,y_k)$ is a third point on some horizontal or vertical line; in such a case, by Lemma 3.1(ii), the sequence $\big((x_i,y_i)\big)_{i=0}^{k}$ contains an axial cycle. Now, by the construction of the loop, point $(x_k, y_k)$ is either an endpoint of an axial cycle or a cut point. Let us show that the first alternative is impossible. First, we clearly have $\mathcal{L}(\eta)\subset\mathrm{supp}(\nu)$ and $\mathcal{U}(\eta)\subset\mathrm{supp}(\mu)$ (see Figure 3). Next, assume that $(x_{k-1}, y_{k-1})\in \mathcal{U}(\eta)$. This means that $(x_k, y_k)$ was found in step (ii) and $q(x_k, y_k)<y_{k-1}\le 1$. Therefore $(x_k, y_k)\in \mathrm{supp}(\nu)$ and there exists an alternating cycle in $\mathrm{supp}(\eta)$. However, this is not possible because of Proposition 3.1. If $(x_{k-1}, y_{k-1})\in \mathcal{L}(\eta)$, the argument is analogous.

Figure 3. An example of an axial path constructed by the algorithm. The symbols $\vee, \wedge$ are placed next to lower ($\vee$) and upper ($\wedge$) out points. The purple point $(x_k, y_k)$ is the endpoint of the path. The red points represent probability masses in $\mathrm{supp}(\mu)$, while the blue points indicate probability masses in $\mathrm{supp}(\nu)$.

We have shown that $(x_{k}, y_k)$ is a cut point. Set $\Gamma_+=\bigcup_{i=1}^k\{(x_i,y_i)\}$. Moving on, we can return to the starting point $(x_0,y_0)$ and repeat the above construction in the reverse direction. By switching the roles of the x- and y-coordinates in steps (i) and (ii), we produce another axial path $(x_i,y_i)_{i=0}^{-l}$. Set $\Gamma_-=\bigcup_{i=-1}^{-l}\{(x_i,y_i)\}$ and $\Gamma = \Gamma_+ \cup \{(x_0,y_0)\} \cup \Gamma_-$. Repeating the same arguments as before, we show that $(x_{-l}, y_{-l})$ is a cut point and $\Gamma$ is an axial path without cycles (see Figure 4).
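The two runs of the inductive procedure can be sketched in code for Case I. This is a simplified illustration, not the construction of the proof itself: it assumes the starting point is an out point, it uses the quotient function of Example 3.1 as input, and it replaces the test ‘an axial cycle was just created’ with the cruder check that the new point lands on a line already visited. The names `kind` and `extend` are hypothetical.

```python
from fractions import Fraction as F

# Quotient function of Example 3.1, keyed by support point (x, y).
q = {
    (F(1, 8), F(1, 4)): F(1, 8),
    (F(1, 2), F(1, 4)): F(1),
    (F(1, 2), F(3, 4)): F(0),
    (F(7, 8), F(3, 4)): F(7, 8),
}


def kind(p):
    """Classify p as a lower out point, an upper out point, or a cut point."""
    x, y = p
    if q[p] < min(x, y):
        return "lower"
    if q[p] > max(x, y):
        return "upper"
    return "cut"


def extend(start, first_axis):
    """Alternate steps (i) and (ii) from an out point `start`.
    first_axis=0 first moves along the vertical line x = x_0, first_axis=1 along y = y_0."""
    path, axis = [start], first_axis
    while True:
        p = path[-1]
        c = p[axis]          # coordinate shared with the next point
        below = q[p] < c     # p is an out point, so q[p] differs from c
        # By (3.1)/(3.2) some point on the same line has its q-value on the other side of c.
        candidates = [r for r in q if r[axis] == c and (q[r] > c if below else q[r] < c)]
        if not candidates:
            raise ValueError("conditions (3.1)/(3.2) fail on this line")
        nxt = candidates[0]
        path.append(nxt)
        other = 1 - axis
        revisits_line = any(r[other] == nxt[other] for r in path[:-1])
        if kind(nxt) == "cut" or revisits_line:
            return path
        axis = other


start = (F(1, 2), F(3, 4))                # a lower out point
forward = extend(start, first_axis=0)     # first run
backward = extend(start, first_axis=1)    # second run, with the roles of x and y switched
gamma = backward[:0:-1] + forward         # concatenate, keeping the start only once
print([tuple(str(c) for c in p) for p in gamma])
```

On this input both runs stop at cut points and the resulting path passes through every point of the support, in line with the claim that $\Gamma$ exhausts $\mathrm{supp}(\eta)$.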

It remains to verify that $\mathrm{supp}(\eta)=\Gamma$. This is accomplished by showing that there exists $(\tilde{\mu}, \tilde{\nu})\in \mathcal{R}$ with $\tilde{\mu}\le \mu$, $\tilde{\nu}\le \nu$, and $\mathrm{supp}(\tilde{\mu}+\tilde{\nu})=\Gamma$. This will give the claim: by the minimality of the representation $(\mu, \nu)$, we deduce that $\tilde{\mu}+ \tilde{\nu} = \alpha \cdot \eta$ for some $\alpha \in (0,1]$, and hence $\mathrm{supp}(\tilde{\mu}+\tilde{\nu})=\mathrm{supp}(\eta)$.

Figure 4. An example of an axial path $\Gamma$ constructed after the second run of the algorithm. The purple points $(x_k, y_k)$ and $(x_{-l}, y_{-l})$ (endpoints of $\Gamma$) are cut points. The red points represent probability masses in $\mathrm{supp}(\mu)$, while the blue points indicate probability masses in $\mathrm{supp}(\nu)$.

We begin with the endpoints of $\Gamma$. As $(x_k, y_k)$ is a cut point, there exists $\gamma \in [0,1]$ such that $q(x_k, y_k)=\gamma x_k + (1-\gamma)y_k$. We can write

where $\eta'(x_k, y_k)=\gamma\eta(x_k, y_k)$ and $\eta''(x_k, y_k)=(1-\gamma)\eta(x_k, y_k)$. Set

Equations (3.4) and (3.6) have a clear and convenient interpretation. Namely, we can visualize them as ‘cutting’ the point $(x_k, y_k)$ into two separate points: $(x_k, y_k)'$ with mass $\eta'(x_k, y_k)$ and $(x_k, y_k)''$ with mass $\eta''(x_k, y_k)$. Moreover, calculating their quotient functions independently, we get $q'(x_k, y_k)=x_k$ and $q''(x_k, y_k)=y_k$. Performing the same ‘cut’ operation on $(x_{-l}, y_{-l})$, we can divide this point into $(x_{-l}, y_{-l})'$ and $(x_{-l}, y_{-l})''$ such that $q'(x_{-l}, y_{-l})=x_{-l}$ and $q''(x_{-l}, y_{-l})=y_{-l}$.
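The ‘cut’ operation can be checked numerically. The following is a minimal sketch, not part of the original argument: it assumes that the quotient function is the density of $\mu$ with respect to $\eta$, so that quotients of split atoms combine as mass-weighted averages. The helper name `cut_point` and the numerical values are hypothetical and chosen only for illustration.

```python
from fractions import Fraction


def cut_point(x, y, q, mass):
    """Split an atom at (x, y) with quotient q into two atoms (hypothetical helper).

    Assumes x != y and q = gamma * x + (1 - gamma) * y for some gamma in [0, 1].
    Returns gamma and the pairs (mass', q') and (mass'', q'').
    """
    gamma = (q - y) / (x - y)
    if not 0 <= gamma <= 1:
        raise ValueError("q is not a convex combination of x and y")
    first = (gamma * mass, x)         # eta' = gamma * eta, with q' = x
    second = ((1 - gamma) * mass, y)  # eta'' = (1 - gamma) * eta, with q'' = y
    return gamma, first, second


# Illustrative numbers only; they are not taken from the paper.
x_k, y_k = Fraction(1, 5), Fraction(4, 5)
q_k, eta_k = Fraction(1, 2), Fraction(1, 3)
gamma, (eta1, q1), (eta2, q2) = cut_point(x_k, y_k, q_k, eta_k)

# The two pieces carry the original mass and reproduce the original quotient.
assert eta1 + eta2 == eta_k
assert eta1 * q1 + eta2 * q2 == q_k * eta_k
```

Exact rational arithmetic is used so that the two identities hold without rounding error.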

Observe that $(x_k,y_k)$ and $(x_{k-1},y_{k-1})$ have exactly one common coordinate, say $y_k=y_{k-1}$. Consequently, $(x_k, y_k)$ is the only point in $\Gamma$ with x-coordinate equal to $x_k$. Additionally, by (3.2) and $(x_{k-1}, y_{k-1}) \in \mathcal{U}(\eta)$, this means that $q(x_k, y_k)\not=y_{k}$ and $\gamma>0$. Hence $\eta'(x_k, y_k)>0$. Similarly, suppose that $y_{-l}=y_{-l+1}$ (as presented in Figure 4; for other configurations of endpoints, we proceed by analogy). Thus, $(x_{-l}, y_{-l})$ is the only point in $\Gamma$ with x-coordinate equal to $x_{-l}$. By (3.2) and $(x_{-l+1}, y_{-l+1}) \in \mathcal{L}(\eta)$, we have $\eta'(x_{-l}, y_{-l})>0$.
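The passage from $q(x_k, y_k)\not=y_k$ to $\gamma>0$ can be spelled out in one line. The display below is only a restatement, using the convex-combination identity $q(x_k, y_k)=\gamma x_k+(1-\gamma)y_k$ for the cut point; it additionally assumes that $\eta(x_k, y_k)>0$, as for any atom on the path.

```latex
q(x_k, y_k) - y_k
  = \gamma x_k + (1-\gamma)\,y_k - y_k
  = \gamma\,(x_k - y_k).
```

Hence $q(x_k, y_k)\not=y_k$ forces $\gamma\not=0$. Since $\gamma\in[0,1]$, this gives $\gamma>0$, and therefore $\eta'(x_k, y_k)=\gamma\,\eta(x_k, y_k)>0$.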

Next, consider the function $\tilde{q}\colon\Gamma \rightarrow [0,1]$ uniquely determined by the following requirements:

-