1 Introduction

Phonetics can be defined as the science which studies the characteristics of human speech and provides methods for their description, classification, and transcription (Reference CrystalCrystal, 2003, p. 349). Of course, a detailed description of the production of speech is immensely complex: speech involves, for example, the coordination of over eighty different muscles (Reference LaverLaver, 1994, p. 1); furthermore, a comprehensive understanding of phonetics involves a wide range of different disciplines (Reference LaverLaver, 1994, p. 2). Indeed, scientific research on phonetics can involve many different techniques such as magnetic resonance imaging (MRI) and electropalatography (EPG) (Reference Stone, Hardcastle, Laver and GibbonStone, 2010), and even introductory texts on phonetics tend to now make extensive reference to the acoustic analysis of speech (e.g. Reference Ladefoged and JohnsonLadefoged & Johnson, 2011), the interpretation of which can be quite technical.

This Element explores ways in which language teachers, especially teachers of English, can benefit from knowledge of phonetics. Language teachers do not need a detailed knowledge of all aspects of scientific research into phonetics or an extensive understanding of acoustics; but we assert that a basic understanding of the production of speech sounds, knowledge about how they can be described, and skills in their transcription are essential tools for the proficient language teacher. This Element provides an overview of this knowledge and these skills. We also offer recommendations for introducing pronunciation teaching in the classroom, and we hope that teachers will find some of these suggestions helpful.

In this Element, we connect phonetic knowledge and language teaching with the aim of providing an overview of phonetics that can be invaluable for teachers. Phonetic knowledge is closely related to multiple language skills including listening, speaking, and pronunciation. In particular, the study of phonetics covers all aspects of pronunciation teaching, which, in the past had what has been described as a “Cinderella status,” being denied a proper role in language teaching despite the crucial importance of the work it does (Reference LevisLevis, 2018, p. 217). This Element thus focuses on how the status of pronunciation teaching can be improved, so that teachers and their students can make use of knowledge about and skills in the description, classification, and transcription of spoken language. We focus in Section 2 on phonetic transcription using the International Phonetic Alphabet (IPA), a skill which we believe is exceptionally valuable for language teachers and learners. In Section 3, we discuss the segmental articulation of consonants and vowels. Section 4 deals with consonants and vowels in context and connected speech processes (CSPs). Section 5 focuses on prosody, and Section 6 considers the state of the art including models of language teaching and use of computers to contribute to the teaching of listening, speaking, and pronunciation.

While we hope that this Element is useful to instructors of all languages, the majority of the examples will come from North American English (NAE) and the English language classroom, because English is the language that most readers will be familiar with and also because there is an extensive range of research on the pronunciation of English for teachers (e.g. Reference BrownBrown, 1991; Reference JonesJones, 2016; Reference Kang, Thomson and MurphyKang et al., 2018; Reference Reed and LevisReed & Levis, 2015). Furthermore, focusing on English allows us to provide a substantial overview of aspects of the phonetics of the language, such as its transcription and articulation. At the same time, we acknowledge that English language teaching is rather different from the teaching of other languages in one important respect: nowadays, most interactions around the world in English do not involve a native speaker, and use of English as a lingua franca (ELF) – which can be defined as English used as the language of choice between speakers who do not share a common first language (Reference SeidlhoferSeidlhofer, 2011, p. 7) – has become widespread. When a speaker from Brazil is talking to someone from Japan using English, they are principally concerned about being understood and usually do not care very much how closely their pronunciation is aligned with native-speaker norms of speech (Reference JenkinsJenkins, 2007). While knowledge about pronunciation is invaluable for language teachers and their pupils, teachers of English should be aware that their students may not want to mimic all aspects of native-speaker pronunciation; and indeed, some native-speaker patterns of speech, such as the extensive simplification and omission of sounds that will be discussed in Section 4.5, may not be helpful in enhancing intelligibility. We consider the issue of intelligibility further in Section 1.1 before we discuss some models of learning pronunciation in Section 1.2, offer a brief overview of approaches to language teaching in Section 1.3, and then summarize the role of phonetics in language teaching in Section 1.4.

1.1 The Intelligibility Principle

In the past, learning a foreign language usually involved closely imitating the speech patterns of native speakers. For learning English, this raises the issue of which native speakers should be imitated. Speakers of NAE? Or speakers of standard southern British English (SSBE), the standard model of British English, which was formerly called received pronunciation and is also sometimes referred to as BBC English (Reference RoachRoach, 2009)? Or maybe another accent, such as that of Australia?

Quite apart from the selection of a pronunciation model, we should note that after the age of about twelve, it is difficult for most learners to develop native-like pronunciation (Reference Abrahamsson and HyltenstamAbrahamsson & Hyltenstam, 2009). Furthermore, we should acknowledge that many learners of English, particularly those in ELF contexts, want to communicate effectively using the language without trying to pretend that they come from the USA, the UK, or Australia (Reference JenkinsJenkins, 2007). In other words, they are concerned with achieving a high level of intelligibility, and closely mimicking a native-speaker model is not necessarily the most effective way of achieving this.

Before we discuss the implications for teaching pronunciation, we consider what is meant by the concepts of intelligibility, comprehensibility, and accentedness. Reference Derwing and MunroDerwing and Munro (2005) have suggested definitions differentiating these three concepts, as summarized in Table 1.

Table 1 Definitions of intelligibility, comprehensibility, and accentedness

| Concepts | Definition |

|---|---|

| Intelligibility | the extent to which a listener actually understands an utterance |

| Comprehensibility | a listener’s perception of how difficult it is to understand an utterance |

| Accentedness | a listener’s perception of how different a speaker’s accent is from that of the local L1 community |

The intelligibility principle holds that the goal of pronunciation teaching and learning is intelligible speech, regardless of how native-like it sounds (Reference Derwing and MunroDerwing & Munro, 2015, p. 6), and most current pronunciation instruction strives for the practical goal of a high level of intelligibility, that is learners producing speech that can be understood by a range of different listeners. Comprehensibility is also crucial, as it is important not just to be understood but also to be easily understood, but accentedness is less important for many students. For the overwhelming majority of learners of English, close imitation of native-speech accents is not important, while being easily understood by people around the world is.

At the same time, however, there certainly are some learners who do want to try to fully integrate into a native-speaker society, and for them, close imitation of the local patterns of speech may be important. “Even if people can understand what [a speaker is] saying, an off-target pronunciation may still sound comical, irritating, or distracting to listeners” (Reference Carley and MeesCarley & Mees, 2021, p. 1). For those who are not trying to integrate into a native-speaker society, it is important to ensure that their pronunciation is not comical or irritating, so they need to ensure that their accent is not a distraction. Teachers need to evaluate the priorities and aspirations of their students and adjust their teaching appropriately.

Quite apart from this evaluation of the needs and aspirations of learners, a key issue for teachers involves determining which aspects of pronunciation contribute most to enhancing intelligibility, and this is an ongoing research issue (Reference DeterdingDeterding, 2013; Reference PickeringPickering, 2006). Many language educators and researchers refer to the segmentals – the consonant and vowel sounds – as the building blocks for larger units, such as syllables, words, and phrases (Reference Rogerson-Revell and KangRogerson-Revell, 2018, p. 93). When students do not have a strong grasp of these building blocks, their ability to speak in the target language is severely impaired. In addition to consonants and vowels, language teachers and learners need to consider suprasegmental features, those features that extend beyond individual segments, including stress, rhythm, and intonation. The relative contribution of segmentals and suprasegmentals to improvement in intelligibility remains a topic of discussion, but it is generally agreed that both areas are important (Reference McNerney, Mendelsohn, Avery and EhrlichMcNerney & Mendelsohn,1992; Reference Zielinski, Reed and LevisZielinski, 2015).

Reference JenkinsJenkins (2000) investigated the relative importance of various features of pronunciation. She proposed a lingua franca core (LFC), an inventory of those features of pronunciation that are important for international intelligibility, and she suggests that noncore features do not need to be taught. Included in the LFC are all the consonants of English apart from /θ/ and /ð/ (the sounds at the start of think and that respectively), word-initial and medial consonant clusters, the distinction between tense and lax vowels (also often referred to as long and short vowels), and sentence stress; noncore features include /θ/ and /ð/, vowel quality (e.g. the exact distinction in vowel height of different vowels), the weak forms of function words, stress-timed rhythm, and the specific tunes associated with intonation (Reference JenkinsJenkins, 2000, pp. 23–24). Many of these, such as the exclusion of vowel quality, remain controversial. Issues related to segments will be discussed further in Sections 3 and 4 of this Element, while rhythm and intonation will be covered in Section 5.

Even though the exact features of the LFC are subject to ongoing research, the concept that achieving a high level of intelligibility is key for learners of a language is a well-established principle of language teaching. One other key concept is the distinction between perception and production: while many learners of English may not aspire to imitate native-speaker patterns closely, they do need to be able to understand those patterns. In practical terms, they do not have to adopt all the features associated with fast, fluent utterances produced by native speakers, including assimilation, simplification, and omission of sounds, but they must be able to understand speech that includes that kind of speech. This will be discussed further in Section 4.5.

1.2 Models of Speech Learning

Here we briefly discuss three models which relate the potential for accurate perception and production of new sounds, or phones, to the degree of similarity between L1 and L2 sounds: the contrastive analysis hypothesis (CAH) originally proposed by Reference LadoLado (1957); the speech learning model (SLM) suggested by Reference Flege and StrangeFlege (1995), and the perceptual assimilation model (PAM) developed by Reference Best and StrangeBest (1995).

The CAH was an extension of contrastive analysis (CA), a comparative linguistics methodology utilizing structural linguistics to predict error that sought to establish similarities and differences between languages as a basis for improving foreign language instruction. Adapting the CA approach to a focus on predicting learner difficulty, Reference LadoLado (1957) formulated the CAH which, in its strong form, attempted to predict (a priori) which features learners will find difficult to acquire, starting with pronunciation of sounds. The CAH is based on a comparison between the native and target language, under the assumption that similar “elements” will be easy whereas those that differ will be difficult. Specifically, it was predicted that L2 phonemes with no L1 counterpart would be difficult to learn, while equivalent L1-L2 phonemes would be relatively easy to learn. Although intended to account for learner difficulty, in actual practice the CAH, as with all prevailing CA approaches, was used to predict errors, perhaps on the unconscious assumption that difficulty and error can be equated. It was also wrongly assumed that pronunciation errors resulted from faulty production, that is incorrect articulation of the speech sound, as opposed to faulty perception. This approach also failed to take into account that while two languages may share the same sound, that phoneme may differ at the phonetic level, that is in how it is articulated depending on its phonological environment. To illustrate, consider the voiceless phonemes /p/, /t/, and /k/, referred to as stop consonants or plosives because their bilabial, alveolar, or velar place of articulation stops the airflow from the lungs. In English, these phonemes, when released in word-initial or stressed syllable-initial position, are accompanied by a burst of air referred to as aspiration. The resulting conditioned variants or allophones – aspirated [ph], [th], or [kh] – occur in predictable phonological environments in English but do not occur in these positions in other languages, such as Spanish, which share the same sounds at the phonemic level. Further, the CA approach overlooked individual differences, including among learners with a shared L1 at comparable levels of proficiency. Failure to take into account crosslinguistic differences in the allophonic distribution of the “same” phonemes at the phonetic level or to recognize the role of conditioned variants of phonemes in L1 acquisition, along with evidence that not all predicted errors occur and that not all speakers of a particular L1 make the same errors in their L2 speech all combined to weaken the strong form of the CAH. As empirical evidence failed to support the major claims of the hypothesis, particularly that the closer the target phone is to an L1 category the easier it is to perceive and produce, the CAH eventually fell out of favor.

The SLM (Reference Flege and StrangeFlege, 1995) addresses a major failure of CA to account for L2 speech learning, namely reliance on phoneme inventories without respect to phonetic realization, that is positional allophonic variation of the phonemes. The SLM, a model of the influence of the L1 on L2 speech learning, also takes issue with the critical period hypothesis, which presumed discontinuity in postpuberty language learning ability (Reference LennebergLenneberg, 1967). Assuming instead that phonetic category formation is not age limited, the SLM addresses how phonetic systems reorganize over the life span and whether highly experienced L2 learners will eventually “master” L2 sounds. It stipulates which L2 sounds are most challenging to perceive and produce on the basis of equivalence classification. The SLM claims that similar but not identical sounds are perceived as instances of L1 sounds through a subconscious cognitive mechanism of interlingual identification, and that this perceptual linking to L1 sounds initially forestalls establishment of new phonetic categories. Flege’s model, which concerns the requisite mental representation for perceiving new target sounds, accounts for early stage nontarget realization of new L2 sounds; that is the degree of accuracy with which L2 segments are perceived establishes and constrains the degree of accuracy with which they can be produced. The greater the perceived dissimilarity or distance between the L1 sound and a target sound, the more readily learners will establish a new target-like category. Proposing a perceptual basis for production errors challenges the assumption that we lose neurocognitive plasticity by suggesting eventual category and motoric implementation alignment for some, though not necessarily all L2 sounds that differ audibly from the closest L1 sound.

The SLM accounts for improved discrimination as the result of phonetic category formation, considered essential for phonetic reorganization, claiming “the processes and mechanisms used in learning the L1 sound system, including category formation, remain intact over the life-span and can be applied to L2 learning” (Reference Best, Tyler, Munro and BohnBest & Tyler, 2007, p. 24). Retention of speech-learning capacity allows L2 learners to “gradually discern” phonetic differences between close but not identical sounds – described by SLM as a slow process, but one that is sensitive to phonetic input during L2 learning.

In its revised formulation (Reference Flege, Bohn and WaylandFlege & Bohn, 2021), the SLM-r adjusts its focus from eventual mastery or ultimate attainment to L2 speech development across the life span, with no presumed end state, consistent with L1 speech-development literature. SLM-r considers it unproductive and no longer of theoretical interest to determine whether or the extent to which an L2 learner’s speech perception or production is distinguishable from that of a native speaker. Further, whereas the SLM focused on between-group differences, for example highly experienced versus inexperienced L2 learners or child versus adult learners, SLM-r focuses on individual differences, with emphasis on factors such as the quality and quantity of input L2 learners receive, learners’ auditory acuity, and working auditory memory. It acknowledges that the frequency of target language use is a stronger predictor of foreign accent than critical period effects. Moreover, where the original SLM looked broadly at the factors chronological age at first exposure to an L2 and length of residence, SLM-r more deeply considers how precisely developed the learner’s L1 phonetic categories are at time of first L2 exposure. The L1 category precision assumption states that the more precisely defined L1 categories are, the more readily differences between an L1 sound and the closest L2 sound will be detected, allowing for a new phonetic category to be formed. With respect to interactions between perception and production, the former unidirectional claim that accurate L2 segmental perception constrains L2 speech production has been replaced by a “co-evolution” hypothesis on the basis of empirical evidence supporting a bidirectional relationship between perception and production. With these and other refinements, the SLM-r seeks to continue the investigation into how speech is learned across the life span while taking into account individual differences in L2 attainment.

The PAM, an articulatory framework developed by Reference Best and StrangeBest (1995), examined the role of the native language in shaping consonant and vowel perception. The focus of this model is the mechanism by which learners’ perceptual systems attempt to match, or assimilate, new or unfamiliar speech sounds to known or established L1 categories. The PAM draws information on how native phonological categories develop from research in infant speech perception. Native perceptual systems develop in correspondence with the inventory of L1 sounds, with discriminatory ability for sounds that are not in the L1 input gradually diminishing between the ages of eight to twelve months, a phenomenon known as perceptual narrowing (Reference Werker and TeesWerker & Tees, 1983). Native categories are established on the basis of contrast between phonemes, the consonant and vowel building blocks of languages, such that the English consonant phonemes /t/ and /d/ are separate categories, capable of creating minimal-pair distinctions, for example to/do or bat/bad. Native speakers tend not to discriminate consonants from within a single category, as when native speakers of English hear allophones (phonologically conditioned variants) of the phoneme /t/, for example the glottal stop in words like kitten or the flap in words like water, as one sound, /t/.

The PAM draws on research in cross-language speech perception to determine the potential of adult L2 learners to discriminate nonnative contrasts. The model predicts that accurate perceptual discrimination of previously unheard nonnative sounds, or phones, depends on whether and to what degree they are assimilated to native phonological categories. Whether pairs of phonologically contrasting never-before-heard nonnative phones will be assimilated depends on whether the listener can detect a native phonological distinction between the nonnative phones. Reference Best and StrangeBest (1995) established empirically what types of phoneme contrasts are easy for L2 learning and which pose challenges. A good discrimination is predicted if an L1 phonological contrast is detected between contrasting nonnative phones, such that each of the pair assimilates to a different L1 phonological category, a two-category assimilation. A poor discrimination is predicted in the case where no difference is detected between two nonnative phones, which then map onto one L1 phone, a single-category assimilation.

Having attributed differences to the ways nonnative sounds align with gestural constellations of native phonological categories, PAM related disparities in perception of foreign contrasts to disparities in native phonological knowledge gained through linguistic experience. Building upon PAM, PAM-L2 (Reference Best, Tyler, Munro and BohnBest & Tyler, 2007) expanded the scope of perceptual learning to encompass the role of attentional focus in detecting phonological contrasts as a basis for forming a new L2 category. Ability to detect a phonetic difference between contrasting L2 phonemes is claimed to facilitate perceptual learning.

To illustrate the challenges of perceiving and then producing unfamiliar speech sounds as modeled by PAM, consider the following case of a student from Venezuela. During self-introductions in a L2 classroom, he used /β/, a voiced bilabial fricative that exists in his native language Spanish, but not in English. To an English native speaker, this rendered his native country sounding like “Benezuela” and his profession sounding like “diborce” attorney. The Spanish phoneme shares two features with the English target /v/ phoneme: the fricative manner of articulation, and voicing; but it shares bilabial place of articulation with the perceived phoneme /b/, instead of the expected labio-dental place of articulation for /v/. Granting that pronunciation of country names can be regarded as the purview of the native speaker, the problematic pronunciation of his profession by this English-language learner may be more worthy of attention from the English as a second language (ESL) instructor. Because naive English-speaking listeners with whom this student expects to interact will perceptually associate /β/ with the closest English phoneme /b/, and cognizant that self-introductions provide just one chance to make a good first impression, it can be argued that the student would benefit from explicit instruction focused on the place of articulation of /v/.

1.3 Phonetics in Language Teaching

Language teaching has a long history and has adopted various methods, including the grammar-translation method, which involved substantial knowledge of grammar and was based on translation from the source language to the target language, and the audiolingual method, which depended on extensive use of recordings in language laboratories in the belief that multiple repetition of words led to perfection in their production. More recently, communicative language teaching (CLT) became the dominant approach; it advocates a focus on the use of language for purposes of genuine interpersonal communication.

In each of these methods, phonetic skills had a different status. The grammar-translation method was mostly focused on written texts; phonetic skills were not regarded as important. In contrast, phonetic skills were central in the audiolingual method. In CLT, explicit pronunciation instruction is often given a less prominent role, in the belief that phonetic accuracy is not essential for communication of the message. However, many people now believe that the exclusion of phonetics knowledge from the language teaching curriculum is misguided, as it has been shown that unexpected pronunciation, even in ELF contexts, is the factor that most often leads to a loss of intelligibility and a breakdown in communication (Reference DeterdingDeterding, 2013).

Phonetics in language teaching is both an art and a science. It is an art because it is creative, adaptive, and innovative in its nature. Phonetics in language teaching often involves creative adaptation of teaching materials according to different instructional contexts and settings; consideration of learners’ objectives, age, proficiency levels, motivations, aspirations, and many other factors; and application of innovative and emerging technology that can be used imaginatively to enhance language teaching. As a science, it requires interdisciplinary knowledge, for in order to teach listening, speaking, and pronunciation effectively, instructors need to have content knowledge about phonetics, background knowledge about second-language development, and pedagogical knowledge about language teaching. They need to be able to identify, transcribe, and describe sounds based on phonetic knowledge, to select optimal teaching approaches based on an understanding of second language development, and to design effective teaching methods based on their experience of language teaching.

Clearly, phonetics benefits language learners, even in CLT-based classrooms, when they are provided with instruction in and practice with the description and classification of sounds and the perception and production of target-language phonemes. Unfortunately, a number of studies report that many educators, including English language-teaching professionals, receive insufficient training in pronunciation teaching, so they feel ill-prepared to deal with it in the classroom (Reference BakerBaker, 2014; Reference Foote, Holtby and DerwingFoote et al., 2011; Reference Murphy and GrantMurphy, 2014). Even those who have received some training in phonetics often rely on their own intuition when conveying the concepts to language learners (Reference LevisLevis, 2005), which may initially result in teacher-centered pronunciation instruction with limited communicative application (Reference Burri and BakerBurri & Baker, 2021). The gaps in knowledge affect all areas of phonetics, a topic which many teachers find daunting (Reference CouperCouper, 2017, p. 830), with the result that many feel that it is simply too difficult for them to teach pronunciation (Reference Zielinski, Yates and GrantZielinski & Yates, 2014, p. 57).

Fortunately, when language teachers feel empowered to teach pronunciation, explicit instruction in the articulation of target-language phonemes has the potential to make a substantial difference in students’ intelligibility (Reference CouperCouper, 2006).

Educators often report a desire for practical tips and techniques that will help them introduce target-language sounds. As a result, this Element attempts to bridge the divide between what language teachers may already know about teaching phonetics and what they need to know in order to provide the most effective pronunciation instruction to their students. It also offers some ideas about how skills in pronunciation can help their students. Specifically, the following aspects are discussed:

1. Sounds are abstract and transient in nature. To teach sounds and to provide feedback to students, teachers need to be able to describe sounds. Crucially, accurate description of sounds involves transcription, and we deal with this in Section 2.

2. Learners need to know how phonemes in the target language are similar to and different from those in their first languages (Reference Best, McRoberts and GoodellBest et al., 2001; Reference VihmanVihman, 1993). Further, learners benefit from understanding how the positioning and use of speech organs affect the production of sounds (Reference Howard and MessumHoward & Messum, 2011; Reference Mompean, Solé and RecansensMompean, 2003). Issues related to the description and articulation of phonemes are addressed in Section 3.

3. Learners need to know how sounds can change when they occur in close proximity to other sounds in a stream of speech (Reference Lowie, Bultena and MaidmanLowie & Bultena, 2007). Sounds do not occur in isolation, and they vary depending on where they occur. Furthermore, they can undergo substantial change when produced in fluent speech. These areas are considered in greater detail in Section 4.

4. The study of prosody includes rhythm (the regular occurrence in time of a unit of speech) and intonation (the tunes associated with utterances), and learners should be familiar with these areas of phonetics and how they affect the intelligibility of their speech. This is discussed in Section 5.

5. Finally, there are many computer-based tools that can enhance pronunciation teaching, and all language learners can benefit from these. In Section 6 we review some resources for pronunciation teaching that can be usefully introduced in the language classroom.

2 Phonetic Transcription

Before we discuss the symbols and systems used for phonetic transcription, we would like to first invite you to a language class that took place several years ago in an institute in the United States. This is a case that demonstrates how some teachers integrate phonetic transcription in language teaching. The setting was an English for Academic Purposes class, and the students were high-intermediate adult learners who were preparing to study in a US university. The teacher had been working in the language institution for many years and the classes she taught included listening, speaking, and integrated skills classes.

At the beginning of the class, the teacher wrote some IPA symbols on the whiteboard and asked students to describe those symbols using three parameters she had introduced in the previous class to represent consonants: voicing, place of articulation, and manner of articulation. She produced these sounds, one at a time, and asked her students to repeat after her. Then, she gave out some practice exercises and asked students to transcribe them using IPA symbols and provide a phonetic description of their articulation. Although students were able to complete the transcription exercise, they did not enjoy the activity. Also, students mispronounced sounds in the following classes despite successfully completing the transcription activities.

This example raises two questions:

1. Do we need a set of symbols to describe and transcribe sounds?

2. Does successful description and transcription of sounds lead to correct production?

To answer the first question, we review the development of phonetic transcription. In later sections, we describe approaches that can help address the second question.

2.1 The Role of Phonetic Transcription in Language Teaching

One essential aspect of phonetics for language teaching is the knowledge of phonetic transcription. Unlike spelling, phonetic transcription uses symbols that match each sound to a unique symbol so that reading the symbols shows the teacher and learners the actual pronunciation of a word or phrase. Knowledge of phonetic transcription is a critical content skill for language teachers, and such knowledge helps them to identify how words are pronounced in the target language and how their learners are missing the mark in their own pronunciation. Furthermore, it can be helpful in explaining how to make new sounds.

One widely used system of transcription involves the IPA, which was originally developed in 1889 with the intention of describing the sounds of all the world’s languages and has remained remarkably consistent since then (Reference Esling, Hardcastle., Laver and GibbonEsling, 2010). At its most basic, the IPA can be used to represent the sounds of consonants in terms of their voicing, place, and manner of articulation, and to represent vowels in terms of lip rounding and the height and position of the tongue (IPA, 1999). These ways of describing the pronunciation of consonants and vowels will be adopted in Section 3 of this Element.

The convention for representing the phonemes of a language – the meaningful units that differentiate sounds in the language – is to enclose them in slashes: a broad transcription. For example, English has three voiceless plosive consonant phonemes – /p, t, k/ – which are produced with no vocal cord vibration and are often referred to as stops because they briefly stop the airflow. These phonemes vary in their articulation according to their phonological context, and these variants, known as allophones, are represented in square brackets: a narrow transcription. For instance, while the phoneme /p/ appears in both pit and spit, the pronunciation differs slightly: while the /p/ in the initial position in pit is released with an accompanying puff of air, known as aspiration, there is no aspiration associated with the /p/ in spit because of the influence of the fricative /s/ at the start of the word. The aspirated and unaspirated allophones of /p/ are represented as [pʰ] and [p] respectively.

One reason for the crucial need for a system of transcription such as the IPA is the mismatch between spelling and sound in any language. In languages like Spanish, the mismatch is smaller and the pronunciation is relatively predictable from the spelling. In a language like English, the mismatch is very noticeable. English letters may correspond to multiple sounds; for example the letter <a> is pronounced differently in apple, father, walk, and about. Using IPA transcription, these vowel sounds are shown as /æ, ɑ, ɔ, ə/ respectively. Because there are only five vowel letters in English, the written system is insufficient to show the range of vowel sounds in the language. In addition, different letters in English may represent the same sound: the letters <c> in cat and <k> in kitten are pronounced with the same sound, and the letter <f> in fish and the letter combination <ph> in Phillip similarly have the same sound. Using IPA transcription, the same sound is always transcribed using a consistent symbol, so the sound at the start of cat and kitten is shown as /k/ and that at the start of fish and Phillip is /f/.

When instructors are teaching and explaining pronunciation to language learners, it is difficult to refer to different sounds properly without a systematic transcription system such as the IPA. There are several issues that make learning the IPA, or a similar phonetic transcription system, beneficial.

1. Phonemes and allophones usually differ substantially between the first language of learners and the language they are learning (Reference LevisLevis, 1999, p. 65). For instance, Mandarin Chinese has the voiceless palatal fricative /ɕ/ at the start of words such as 西, xī (west), Spanish has the voiceless velar fricative /x/ in the middle of words such as ojo (eye) and French has the voiced uvular fricative /ʁ/ at the start of rouge (red) but none of these sounds occur in English. Use of the IPA enables students to appreciate differences between the sounds of their languages and the target language.

2. Learners cannot easily perceive sounds that do not exist in their L1 sound inventories. For example, Mandarin Chinese does not have the voiceless dental fricative (Reference DuanmuDuanmu, 2007), the sound which is transcribed as /θ/ and occurs at the start of thank, and so learners of English in China may use /s/ in place of /θ/, producing sank you instead of thank you (Reference DeterdingDeterding, 2006). Some Chinese learners of English find it challenging to discriminate between /s/ and /θ/, and use of IPA transcription helps highlight this distinction.

3. The transient nature of speech and limited time available for classroom instruction leads to few classroom practice opportunities. In the classroom, a teacher may try to elicit a target sound production from a student by modeling it, and sometimes, after multiple attempts, either the student ends up producing the right sound accidentally or the instructor simply gives up because there is not enough time in the lesson to ensure the student can hear and produce the sound accurately. Use of the IPA can help fix sounds in the memory of students.

2.2 Phonetic Transcription and Notation in Textbooks

Although the IPA has a long and consistent history (Reference Esling, Hardcastle., Laver and GibbonEsling, 2010), it is not the only transcription system. In the field of language teaching, there are variations in the system and symbols used in different textbooks and resources. For example, one of the most widely used coursebooks for teachers of NAE, Reference Celce-Murcia, Brinton and GoodwinCelce-Murcia et al. (2010), sometimes deviates from the IPA. For instance, the IPA symbol /y/ represents a front rounded vowel (the vowel which occurs in tu, “you,” in French and is represented as <ü> both in German words such as über, “over,” and in the Pinyin system for Mandarin Chinese, as in 女, nǚ, [woman]), but Reference Celce-Murcia, Brinton and GoodwinCelce-Murcia et al. (2010) use /y/ for the sound at the start of words such as yes. Table 2 shows some of the different symbols that are used in the IPA and three pronunciation teaching textbooks. The first two, Reference Celce-Murcia, Brinton and GoodwinCelce-Murcia et al. (2010) and Reference GilbertGilbert (2012), present American English while Reference RoachRoach (2009) deals with SSBE (though he refers to it as “BBC pronunciation”). Symbols not shown in Table 2 are generally consistently used in the IPA and in various textbooks.

Table 2 Different symbols used in the IPA and three textbooks

| Sample Words | IPA | Reference Celce-Murcia, Brinton and GoodwinCelce-Murcia et al. (2010) | Reference GilbertGilbert (2012) | Reference RoachRoach (2009) |

|---|---|---|---|---|

| yes | /j/ | /y/ | /y/ | /j/ |

| cake, pay | /eɪ/ | /ey/ | /ey/ | /eɪ/ |

| tea, key | /i/ | /iy/ | /iy/ | /iː/ |

| ice, pie | /aɪ/ | /ay/ | /ay/ | /aɪ/ |

| road, know | /oʊ/ | /ow/ | /ow/ | /əʊ/ |

| blue, school | /u/ | /uw/ | /uw/ | /uː/ |

| house, cow | /aʊ/ | /aw/ | /aw/ | /aʊ/ |

| boy, join | /ɔɪ/ | /ɔy/ | /ɔy/ | /ɔɪ/ |

Given these differences, teachers naturally will have questions about which transcription system they should use; thus, we need to address the following two issues:

1. What are the sources of the IPA symbols?

2. Why do some textbook authors use alternative symbols?

Most IPA symbols originate from an extended Roman alphabet; a few, such as /θ/, are from the Greek alphabet; some symbols, such as /ɪ/ (the vowel in kit), are modified versions of Roman letters; and finally, there are symbols derived from more specialized alphabets, such as /ʃ/ (the sound at the start of she), which is like the integral symbol in mathematics (Reference MacMahon, Daniels and BrightMacMahon, 1996, p. 823).

Because of the multiple origins of symbols, some will be more familiar to students than others. It is therefore important to point out that mastering a phonetic transcription system is not learning a whole new set of symbols of which the students have no prior knowledge. In fact, the majority of the symbols, like the initial consonants /b/, /d/, and /s/ in the words boy, dance, and sing, are likely to be familiar to learners and learning the symbols for these consonant sounds should be fairly straightforward for them. Symbols like /θ/ and /ʃ/ may be less familiar. The IPA relies on symbols such as these because, as mentioned above, there are not enough letters in the Roman alphabet for all the sounds in the languages of the world. As every symbol should uniquely represent one sound, new symbols must be introduced. Table 3 shows some of the non-Roman symbols and sample words:

Table 3 Sample non-Roman symbols in the IPA

| Consonants | Sample Words | Vowels | Sample Words |

|---|---|---|---|

| /θ/ | thank, both | /ɪ/ | Sit |

| /ð/ | the, bathe | /æ/ | Bat |

| /ʃ/ | show, wash | /ɔ/ | Talk |

| /ʒ/ | measure, beige | /ʌ/ | But |

| /ʧ/ | choose, rich | /ʊ/ | Book |

| /ʤ/ | jump, bridge | /ɝ/ | herd |

| /ŋ/ | sink, sing | /ə/ | about |

In an attempt to reduce the number of unfamiliar symbols, many textbook authors adopt alternatives to the IPA system. For example, English does not have the close front rounded vowel /y/. Thus, some textbook writers use /y/ instead of the IPA symbol /j/ to represent the sound at the start of words such as yes. In this case the phonetic symbol is the same as the letter, and the burden of memorizing an extra symbol due to a mismatch between letters and phonetic transcription has been reduced.

If we limit the scope to English, the modified textbook notation system is advantageous in that it is more straightforward and requires less time and effort to learn. However, it is useful for teachers to have familiarity with the IPA, as such knowledge allows them to refer to specific sounds in learners’ L1s that may not exist in English. It is recommended that teachers make the selection of the transcription system they adopt based on their teaching contexts.

When implementing this decision, consistency is important because shifting between two systems will introduce discrepancies to the material, which many students already regard as complex. Teachers also need to be aware that students may have used a different system previously. Thus, knowing what transcription system(s) and symbols students already know before introducing the IPA is helpful. Finally, it is useful to develop a chart that places side by side the words, the symbols that are going to be used in the class, and any other symbols students may be familiar with.

2.3 Phonetic Transcription in Teacher Preparation and Language Classrooms

It has been reported that teachers receive insufficient training in phonetics and pronunciation teaching (Reference Breitkreutz, Derwing and RossiterBreitkreutz et al., 2001; Reference Derwing and MunroDerwing & Munro, 2005). Recent years, though, have witnessed an increased coverage of these areas in teacher training programs (Reference Foote, Holtby and DerwingFoote et al., 2011). However, researchers have found that, despite widespread agreement that phonetic knowledge and transcription are useful, many educators lack interest in these areas. Furthermore, teachers have reported challenges when attempting to transfer phonetic knowledge into teaching practice, as they often lack confidence with use of the symbols (Reference CouperCouper, 2017).

Explicit phonetic instruction is effective in improving learners’ pronunciation proficiency when it is adopted in classroom teaching, particularly in English as a foreign language (EFL) settings (Reference SaitoSaito, 2007, Reference Saito2012). There are two aspects involved in the classroom use of phonetic transcription. First, teachers need to explicitly teach the transcription system itself, including a set of symbols as discussed previously, as well as the parameters used to categorize and describe the articulation of the various sounds. Second, they need to integrate phonetic transcription with the use of the new language, adopting phonetic transcription when teaching new vocabulary to students and using phonetic knowledge when providing feedback on students’ pronunciation errors. In Section 3, we will review the use of transcription in teaching activities and offer suggestions for approaches that teachers might adopt when raising awareness about articulation.

Crucial to the teaching of pronunciation is linking a transcription system such as the IPA to the articulation of sounds, including where and how they are produced. It is more important to explain to students how sounds are produced than to get them to memorize technical terms, for example that /b/ is a voiced bilabial plosive. As we discuss in Section 3, teachers may even develop a more learner-friendly terminology system for the places of articulation, for instance talking about the lips instead of bilabial.

With increased attention to the field of pronunciation teaching, several studies have been conducted to review practices and the effectiveness of methods of instruction, including read-aloud, drills, imitation, picture narratives, and spontaneous speaking (Reference Lee, Jang and PlonskyLee et al., 2015; Reference SaitoSaito, 2012; Reference Thomson and DerwingThomson & Derwing, 2015). While phonetic transcription is not directly involved in most of these activities, it serves a fundamental role in that it increases learners’ metalinguistic awareness of the sounds. It also offers a set of tools for teachers to distinguish between correct and incorrect pronunciation and provide feedback.

Incorporating IPA transcription practice into English lessons can be done fairly seamlessly. As students learn target phonemes, they can also learn the phonetic symbol for the sound. As a review activity, teachers can dictate words and have the students write them using the phonetic symbols. This can be transformed into a game by distributing pieces of paper and markers and encouraging pairs of students to race against other pairs to do the phonetic transcriptions quickly and accurately. Educators who want to infuse their lessons with some movement can create sets of matching index cards with words on half of the cards and the phonetic symbols on the other half, telling the students to mingle around the classroom and read the words on their cards until they find their match. If teachers want to help students practice the quick identification of a phoneme, they might write several phonetic symbols on the board and divide the class into teams, giving each team a different colored flyswatter. As they call out a word, students from each team race to hit the phonetic symbol for the initial sound of the word. Finally, of course, teachers can have their students phonetically transcribe new vocabulary as they learn it. This is perhaps the most useful practice for students because it helps them pronounce new words accurately.

2.4 Questions about Phonetic Transcription in Language Classrooms

We have reviewed the development, needs, purpose, and uses of a phonetic transcription system for segmental sounds. The use of such a system is beneficial in language classrooms. Here, we would like to address some common questions from preservice and in-service teachers with regard to the uses of phonetic transcription in classrooms.

Question 1: Can I teach English sounds without using the IPA or other transcription system?

It is important to adopt some kind of consistent phonetic transcription system, either the IPA or some other system. Phonetic transcription can be beneficial in two ways: 1. it helps learners to become aware that, in English, one sound can correspond to multiple spellings and one letter or letter combination can have different sounds; 2. when learners have difficulty discriminating two sounds, teachers can explicitly indicate the differences by using the phonetic symbols and parameters.

However, it is important to understand that teaching the IPA or a transcription of sounds does not necessarily lead to the improvement of learners’ language skills. Some pronunciation-related instruction such as internalizing transcription conventions and understanding the articulatory or acoustic correlates of specific segmental and suprasegmental features of English are theoretical and not pedagogical in nature (Reference Tsunemoto, Trofimovich and KennedyTsunemoto et al., 2020). While these activities may improve learners’ explicit knowledge, they may not have a direct impact on learners’ spontaneous production.

It is also important to point out that the same functions that teachers typically use IPA for can be achieved by using alternative tools such as the Color Vowel Chart (Reference Taylor and ThompsonTaylor & Thompson, 2015) or phonics, which is commonly used for teaching reading to preschoolers (Reference Ehri, Nunes, Stahl and WillowsEhri et al., 2001). Additionally, research shows that both phonetic symbols and keywords can be used to improve learners’ perception, and the two techniques can be equally beneficial (Reference Fouz-González and MompeanFouz-González & Mompean, 2021; Reference Mompean and Fouz-GonzálezMompean & Fouz-González, 2021).

Question 2: Should I use broad or narrow transcription?

Broad transcription is usually sufficient, but narrow transcription may sometimes be helpful, because it provides details of the exact pronunciation of words. For example, as described earlier, to show the difference between two allophones such as the /p/ in spit and pit, we can show the first as [p] and the second as [ph].

The choice of broad versus narrow transcription depends on the purposes of teaching activities. Reference Mompean, Cutillas Espinosa, Hernández Campoy, Manchón Ruiz and Mena MartínezMompean (2017) suggests combining a broad phonemic transcription with narrow transcription of salient allophonic variants and/or CSPs for language teaching purposes. Using NAE, this would entail a basic broad transcription but with further detail for variants such as the glottal stop [ʔ] that can occur as an allophone of /t/ in words like kitten.

Question 3: I teach in an ELF setting – How does this affect the use of phonetic transcription in my class?

English as a lingua franca interactions involve speakers with different L1s using English to communicate (Reference SeidlhoferSeidlhofer, 2011, p. 7), and this contrasts with L2 contexts in which students are trying to integrate into a native-English society. The key in ELF settings is the enhancement of intelligibility, and mimicking native-speaker norms is not necessary in such contexts. However, it has also been shown that pronunciation is the source of most cases of misunderstanding in ELF contexts (Reference DeterdingDeterding, 2013), and reference to a transcription system such as the IPA is crucial in improving intelligibility.

At the same time, it may not be necessary to learn all the symbols or become familiar with all aspects of native-speaker pronunciation. Instructors of ELF may want to consider Reference JenkinsJenkins’ (2000) LFC, the inventory of those sounds that are necessary for maintaining international intelligibility in English. In such settings, teachers do not need to address details of pronunciation that are outside the LFC, such as the dark /l/ at the end of a word such as pill (which can be shown as [ɫ]).

Question 4: With limited time in class, which sound(s) should I prioritize?

The sounds to prioritize should depend on the functional load of the sounds and also the L1 background of the students. For classes involving students with a range of L1s, use of personalized training tools may be helpful.

Functional load estimates the importance of a phonetic contrast for communication by referring to the number of minimal pairs between two sounds (Reference BrownBrown, 1988). For instance, berry, /beri/, and very, /veri/, constitute a minimal pair because they are only distinguished by the two initial sounds /b/ and /v/. The functional load for /b/ and /v/ is quite high, because there are many minimal pairs for them, and it is vitally important that students differentiate between them. Simply put, “The most important sounds are the ones that can change the meaning of words” (Reference Carley and MeesCarley & Mees, 2021, p. 1).

It is also useful to consider learners’ L1s when determining the sounds to focus on. For instance, if a teacher is teaching a class in which most students are Japanese English learners, then focusing on the contrast between /ɹ/ and /l/ (the sounds at the start of right and light) would be an effective strategy, because Japanese does not distinguish these sounds and Japanese learners of English often have problems with them.

In a class composed of students of various language backgrounds, however, instructors may want to leverage personalized training with technology. For example, they may provide high variability pronunciation training by using English Accent Coach (www.englishaccentcoach.com/) for perceptual training. They may also use apps like Sounds of Speech (https://soundsofspeech.uiowa.edu/) to visualize the use of speech organs and speech processes and help learners to establish connections between sounds, phonetic symbols, and the articulation processes.

3 Articulation of Segments

To help students become proficient in producing the phonemes of a language, it is valuable for instructors to be familiar with the anatomical and physical processes involved in forming them. Because of this, teaching the sound inventory is different from instruction on other aspects of a language. Reference Celce-Murcia, Brinton and GoodwinCelce-Murcia et al. (2010, p. 43) note that, as pronunciation is a motor activity that involves sensory and physiological challenges, teachers can use tactile and kinesthetic methods to help their students master these challenges.

An important aspect of these approaches involves familiarity with the anatomy responsible for sound production – the vocal tract. In addition, educators need to understand how proficient speakers manipulate their speech organs to produce sounds. Finally, since teaching all of the phonemes and allophones of a language is an unreasonable expectation in most educational contexts, instructors also benefit from identifying which target-language sounds will prove the most challenging for their particular students and also which ones are the most important for them to learn to maximize their intelligibility.

One crucial aspect of a needs analysis involves determining if the learners want to try to approximate a native-speaker accent as nearly as possible, perhaps in order to assimilate into an English-speaking society (though they should be made aware that attaining a truly native-speaker accent is not achievable by most students and is not necessary in most contexts); alternatively, they may prefer to focus on achieving a high level of intelligibility in order to interact in English with a range of people around the world. This needs analysis may be determined by asking the students what they want to achieve, possibly by using a questionnaire, at the start of the course.

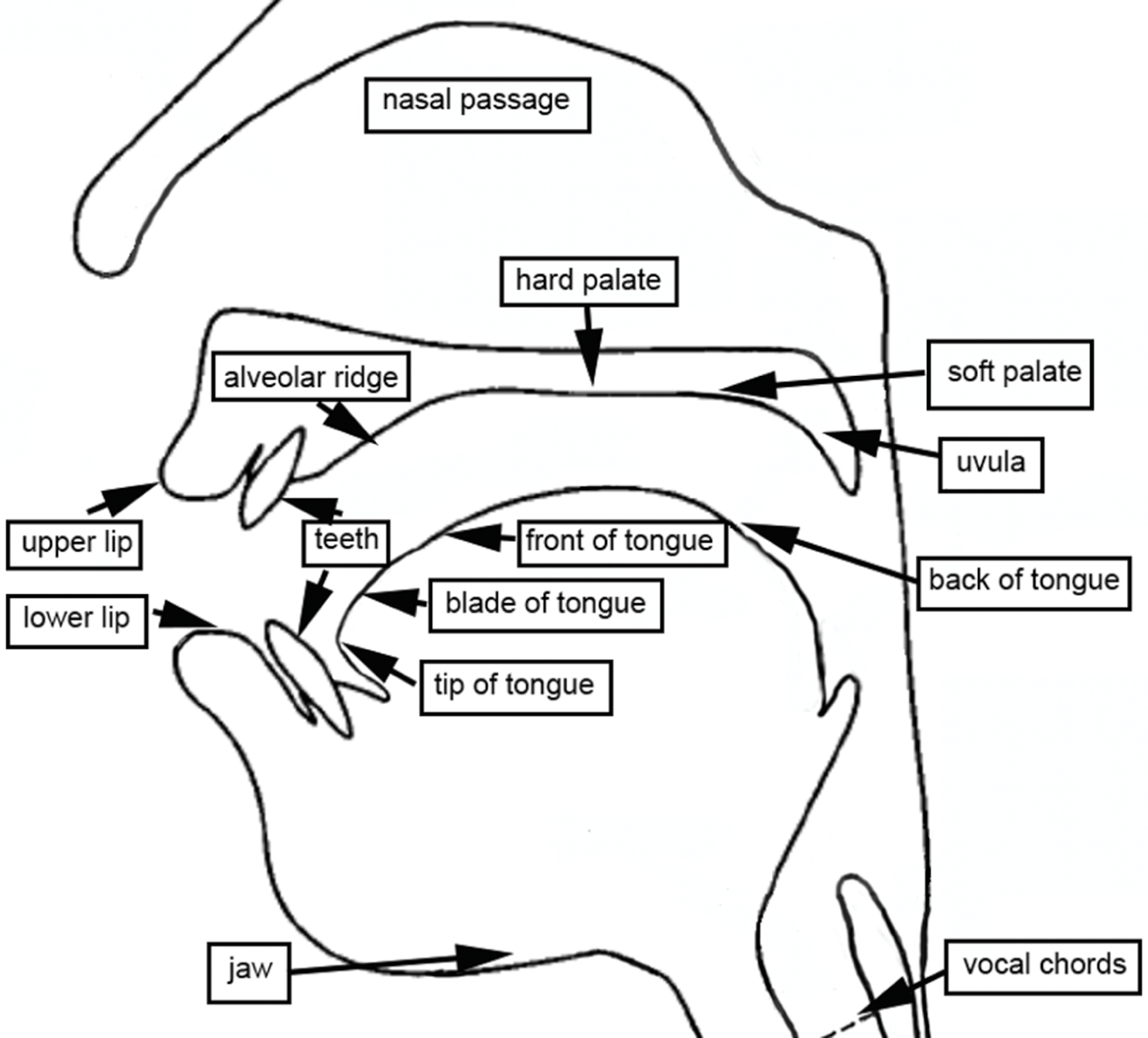

3.1 The Vocal Tract

Teaching or reviewing essential parts of the vocal tract with students at the beginning of a language course can help them access the descriptions of consonant and vowel sound production later in the course. While some phonetics publications, such as Reference LaverLaver (1994) and Reference LadefogedLadefoged (2001), contain detailed descriptions of the speech organs, it is not necessary to introduce the vocal tract in minute detail to most language learners. Rather, instructors might just identify the anatomical parts that are essential to the production of the phonemes in the target language. This is most helpfully done by displaying a sagittal diagram, a cross-sectional view of the head, such as in Figure 1.

Figure 1 The vocal tract

In addition to visual support, lower-level students may benefit from the use of simplified labels. For example, rather than teaching the technical term alveolar ridge to English learners, educators may prefer to use the more accessible term tooth ridge. An interactive activity in which students work together to label copies of a sagittal diagram can be a fun way to cover this key vocabulary in a classroom setting. Alternatively, teachers can provide students with cardboard, plastic tubes, and a pump and have them work in small groups to build models of the vocal tract.

For learners who have not previously given much thought to their vocal tracts, other tools can achieve awareness raising. Mirrors can help them identify both external and internal parts of the vocal tract, such as the lips, teeth, and parts of the tongue (Reference LadefogedLadefoged, 2001, p. 100). Moving small lollipops around their mouths can familiarize students with anatomical features that are harder to see, such as the alveolar ridge, hard palate, and velum. In addition, feathers or small slips of paper can help them see the movement of air as it is exhaled through the mouth and nose. Since learning about phonemes is a physical as well as a mental process, techniques which utilize auditory, visual, and kinesthetic modalities can raise awareness about aspects of English pronunciation that might otherwise go unnoticed (Reference Acton, Baker, Burri, Teaman, Levis and LeVelleActon et al., 2013; Reference Zielinski, Yates and GrantZielinski & Yates, 2014, p. 68).

It is also helpful to combine learning about articulation with phonetic transcription, as previously discussed in Section 2. For example, it can be useful to get students to identify the speech organs in their vocal tract starting from the lips at the front, produce sounds in different locations, and transcribe those sounds using the IPA. Teachers can put students in pairs, asking one partner to produce a sound and the other to identify the articulation process and write down the symbol for the sound.

3.2 Articulation: Consonants

Most consonants are formed by bringing parts of the vocal tract, known as articulators, in contact in order to obstruct the air flow, either partially or fully. The point at which the articulators touch or nearly touch is called the place of articulation. The places of articulation include the lips, teeth, tongue, alveolar ridge, hard palate, and velum (soft palate).

3.2.1 Place of Articulation

In order to accurately pronounce the consonant phonemes of the target language, students need to learn how to position their articulators appropriately. This may be challenging for them for three reasons. First, they may not perceive the sounds accurately. In other words, if the phoneme does not exist in their L1, learners may not hear it in the same way that a proficient L1 user hears it – something Reference Hayes-Harb and MasudaHayes-Harb and Masuda (2008) refer to as foreign-accented listening. For instance, many Japanese-speaking learners hear /ɹ/ and /l/ as the same sound because they are not distinguished in Japanese, so they may be unable to differentiate between words such as right and light (Reference Haslam and LiontasHaslam, 2018, p. 2). Second, leaners may not be accustomed to positioning their articulators in certain ways. For example, learners of English whose first languages do not contain the /θ/ or /ð/ sounds may not be accustomed to positioning their tongues between their teeth, and they may need explicit instruction and repeated practice to master this placement. Third, while consonant sounds sometimes seem similar across languages, the place of articulation may differ slightly. If students are unaware of this difference, they may have problems accurately pronouncing the target phoneme. For example, the Spanish /t/ sound is dental in articulation, and native speakers touch the tip and front of the tongue on the back of the upper teeth (Reference SalcedoSalcedo, 2010). This position is different from the English /t/, in which only the tip of the tongue touches the alveolar ridge (Reference LadefogedLadefoged, 2001).

In English, there are seven places of articulation for consonant sounds, and students may need to be familiar with other places of articulation (such as uvular; see Figure 1) to understand how the sounds of other languages are produced, and how their own articulation of English may differ from what is expected. It should be noted that, while learning the terms associated with the place of articulation may be helpful for teachers, language learners may not benefit from memorizing these terms. Instead, educators might want to present the sounds using simple explanations, visuals, and example words. Table 4 includes sagittal diagrams to illustrate the articulation of English consonants. Note that these sagittal diagrams are not completely accurate for the nasal sounds /m, n, ŋ/, in which the velum is lowered to allow air to pass out through the nose.

Table 4 Place of articulation for English consonants (based on Reference Celce-Murcia, Brinton and GoodwinCelce-Murcia et al., 2010, p. 61)

Sagittal images such as those included in Table 4 do not always illuminate the place of articulation as efficiently as one might hope because, as Reference Gilbert and BrownGilbert (1991, p. 312) reminds us, the crucial mouth relationships are actually three dimensional. A few techniques may facilitate the learning of articulation:

1. Instructors can provide a model of the place of articulation using their own face and encourage students to mimic it. A mirror can help students determine the configuration of the lips and the tip of the tongue (Reference LadefogedLadefoged, 2001, p. 100). Alternatively, they may use online resources such as Sounds of Speech, mentioned earlier, which contains animated sagittal diagrams.

2. For consonant sounds that involve placement of the tongue, Reference MillerMiller (2011) recommends teachers use a red sock on their hand to highlight the correct position for the tongue. To perfect the mouth position for pronouncing the dental phonemes with an interdental articulation, students can hold a lollipop or their finger (Reference NollNoll, 2007) in front of their mouth and stick their tongues out far enough to touch it.

3. Reference Gilbert and BrownGilbert (1991) suggests bringing dental molds into the classroom to demonstrate alveolar sounds, though presumably this tool could also be used for phonemes produced further back in the mouth.

4. Other strategies for helping students identify different locations in their mouths can also be useful. For example, English learners with varying L1s who pronounce /d/ or /t/ in place of /θ/ and /ð/ can dip a cotton swab into lemon juice and rub it on their alveolar ridge (Reference NollNoll, 2007). If they taste lemon as they are repeating words beginning with the dental phonemes, they are touching their tongue to the tooth ridge instead of pressing it up against the top teeth or inserting it between the teeth.

3.2.2 Manner of Articulation

In addition to being conscious about where consonant sounds occur in their mouths, language learners also need to know how air is released as the sounds are produced. This is known as the manner of articulation. Reference LadefogedLadefoged (2001, p. 101) compares the production of sounds to water passing through a garden hose, in that you can do various things to the hose to influence the flow of the water.

Language learners may struggle to produce consonant sounds correctly because they are accustomed to restricting their airflow in different ways. For example, while Spanish has an /r/ sound, it tends to be trilled, with the tip of the tongue tapping rapidly on the alveolar ridge (Reference Avery, Ehrlich, Avery and EhrlichAvery & Ehrlich, 1992c; Reference Coe, Swan and SmithCoe, 2001); in contrast, the English /ɹ/ is formed without touching the alveolar ridge at all, and while use of a Spanish trill is unlikely to cause problems in intelligibility, it results in a noticeable foreign accent (Reference Rogerson-Revell and KangRogerson-Revell, 2018, p. 97). However, in many cases, the substitution of a target-language phoneme with a more familiar L1 sound can be problematic for intelligibility. For instance, Korean does not contain /f/, a sound that involves a kind of hissing that we call a fricative, and rather than narrowing their airstream by connecting their top teeth and lower lips in the labio-dental position, Korean speakers may say /p/, closing both lips in the bilabial position (Reference Lee, Swan and SmithLee, 2001) and producing a stop instead of a fricative. This is problematic because the contrast between /p/ and /f/ carries a high functional load in English.

For the consonants of English, there are three categories describing how restricted the speaker’s airflow may be: complete closure, where there is a complete stoppage of their airflow; partial closure, where the air stream is narrowed by articulators nearly touching, resulting in a fricative; and approximation, where the air stream is narrowed by articulators coming close to one another but not as close as for a fricative (Reference Rogerson-Revell and KangRogerson-Revell, 2018). In addition, there are two subcategories of approximant: liquids and glides.

The consonant sounds phoneticians refer to as stops (or sometimes plosives), /p, b, t, d, k, g/, are formed when the airflow is stopped completely by contact between articulators, either with a bilabial articulation, an alveolar articulation, or a velar articulation, and the velum is raised so air cannot exit through the nasal passage. This stoppage of air is brief and how it is released depends on where it appears in a word. If the stop is at the beginning of a word (as with the /t/ in tack) or the beginning of a stressed syllable in the middle of a word (as with the /t/ in attack), the release involves a puff of air. The duration of this puff of air before the onset of voicing of the following segment is referred to as voice onset time (VOT). For /p, t, k/, the puff of air is audible and is often referred to as aspiration; however, for /b, d, g/, the puff of air is weaker and less noticeable. The contrast between the strength of the aspiration is occasionally referred to as fortis (strong) for /p, t, k/ and lenis (weak) for /b, d, g/. When these sounds appear at the beginning of an unstressed syllable (as with the /t/ in attic) or at the end of a syllable (as with the /t/ in hat), the plosion “is very weak and often not audible” (Reference RoachRoach, 2009, p. 28) regardless of whether they are fortis or lenis, and English listeners tend to distinguish between word-final fortis and lenis stops based on the length of the preceding vowel sound. The vowel before word-final /p, t, k/ is held for a shorter period of time than the same vowel sound before /b, d, g/. For instance, the /æ/ sound before /t/ in pat is shorter than the /æ/ sound in pad.

Fricatives are produced when the articulators are touching or nearly touching, but the passage of air through the mouth is not completely closed off. In English, there are nine fricatives: /f, v, θ, ð, s, z, ʃ, ʒ, h/. The restricted air passage in the vocal tract can have labio-dental articulation, dental articulation, alveolar articulation, palatal articulation, or glottal articulation, and a hissing sound occurs as a result of the turbulence in the airstream as it passes between the articulators in close contact. English learners may conflate stops for fricatives, as in berry instead of very or copy instead of coffee. When this happens, teachers can use pictures or themselves as models to draw learners’ attention to the narrow opening associated with the target fricative and compare that with the closed mouth position of the stop consonant, while students use mirrors to see and correct their own articulation. Encouraging students to hold their hands in front of their mouths to compare the sustained stream of air of a fricative with the burst of air of a stop can also help clarify the difference between fricatives and stops.

In English, there are two sounds that are a combination of a stop and a fricative, /ʧ/ and /ʤ/, and they are known as affricates. When speakers make these sounds, their tongues touch the alveolar ridge, stopping the airflow. Then, instead of a sharp release to produce a stop, the contact between the articulators is slowly released, and there is a fricative following the stop (Reference Ladefoged and JohnsonLadefoged & Johnson, 2011, p. 15). Some English students, particularly Spanish and Portuguese speakers, have trouble contrasting the fricative /ʃ/ and the affricate /ʧ/ (Reference Avery, Ehrlich, Avery and EhrlichAvery & Ehrlich, 1992c). Teachers might give Spanish-speaking students small plastic spoons to put upside down over their tongues (Reference NollNoll, 2007). As they start to make the /ʧ/ sound, the spoon may block the tongue from touching the alveolar ridge, producing the /ʃ/ sound instead. Alternately, Portuguese-speaking learners might benefit from the lemon juice trick if they are substituting /ʃ/ for /ʧ/. If they taste the lemon juice, they have touched their tongue to their alveolar ridge, forming the target sound correctly.

Although most consonant sounds are formed by air passing through the oral cavity, three English sounds occur when air passes through the nasal cavity. These sounds, /m, n, ŋ/, are called nasals, and students are often amused to discover that it is impossible to produce these sounds when their noses are plugged. The places of articulation are different for each of these sounds (/m/ is bilabial, /n/ is alveolar and /ŋ/ is velar), but the air is stopped at each of the points of articulation in the same way that it is for a stop consonant. The difference is that the velum is lowered, and the air passes out through the nose, and some students may have difficulty producing a clear nasal in some circumstances. For example, speakers of English in Hong Kong may confuse /n/ and /l/ (Reference Deterding, Wong and KirkpatrickDeterding et al., 2008), and Russian speakers may produce /ŋ/ as /ɡ/, so wing sounds like wig (Reference Monk, Burak, Swan and SmithMonk & Burak, 2001). If students show difficulty producing a nasal sound, Reference Gilbert and BrownGilbert (1991) suggests having them hold a mirror under their nose and say /n/ or /ŋ/. The mirror will fog up if they are correctly allowing air through their nasal passage but not if they are incorrectly saying /l/ or /g/.

Approximants are formed when the articulators come into close contact, but they do not actually touch. In other words, “the space between the articulators is wide enough to allow the airstream through with no audible friction” (Reference Collins and MeesCollins & Mees, 2009, p. 48). There are two types of approximants in English: the liquids, /l/ and /ɹ/ (the latter being the approximant at the start of red); and the glides, /w/ and /j/ (the latter being the sound at the start of yet).

The liquids are some of the most challenging sounds for many English learners. /l/ is an alveolar sound, in which the air escapes along the sides of the tongue (Reference RoachRoach, 2009, p. 48). For this reason, /l/ is sometimes called a lateral consonant. /ɹ/ is another challenge for learners because in NAE the tongue does not touch the alveolar ridge or roof of the mouth; most speakers produce a retroflex sound by curling the tip of their tongues behind the alveolar ridge and rounding the lips slightly. Another fairly common way of pronouncing /ɹ/ is referred to as bunched /r/ (Reference Carley and MeesCarley & Mees, 2021, p. 30), in which the center of the tongue rises up toward the back of the velum and the lips are slightly rounded. In addition to these ways of articulating /ɹ/, the pronunciation of the sound also differs from dialect to dialect. Furthermore, in most dialects of British English, /ɹ/ is not normally pronounced unless it comes before a vowel sound, so while most North Americans would say farm as /fɑɹm/ and far as /fɑɹ/, in SSBE, the standard accent spoken in Britain, speakers would say these words with no /ɹ/ after the vowel (Reference WellsWells, 1982, p. 76). English instructors will want to teach the pronunciation that is most useful for their learners.

As stated, /ɹ/ is notoriously difficult for some students and many substitute /l/ for /ɹ/ because they touch their tongue to their alveolar ridge or roof of their mouths. In addition to using sagittal diagrams, in order to help students feel the difference between the two sounds, teachers can get them to place a pen horizontally in their mouths between their teeth, as shown in Figure 2. When students say /ɹ/, they should not touch the pencil in their mouths; instead, the tip should curl around the pencil.

Figure 2 The pencil trick

Lemon juice on the alveolar ridge can also help students identify when they are touching it with their tongue inadvertently and mispronouncing the /ɹ/ sound. English learners typically need a great deal of practice with this challenging contrast, and they often greatly appreciate any tangible help they can get.

The glides /w/ and /j/ are phonetically like vowels, but phonologically they behave like consonants (Reference RoachRoach, 2009, p. 50): they are articulated in ways similar to vowels, so the position for /j/ is similar to /i/ and the position for /w/ is similar in /u/; but they are used like consonants because they can occur before a vowel at the start of a syllable in words such as wet and yet. When English learners have trouble with these sounds, it may involve place and/or manner of articulation. For instance, Arabic and Turkish students may pronounce /v/ (or possibly [ʋ], a labio-dental approximant) instead of /w/ (Reference Nilsen and Pace NilsenNilsen & Pace Nilsen, 2010). Having students look into a mirror and compare their own mouth position with that of a model – either a picture, a video, or the instructor – is often enlightening.

3.2.3 Voicing

A final way to classify consonants is to distinguish between sounds that are created by vibrating the vocal chords, or voiced, and sounds that are created without the vocal chords, or unvoiced. More specifically, when a speaker makes a voiced sound, the vocal folds in the larynx come close together and vibrate, whereas when a speaker makes an unvoiced sound, the vocal folds are held apart. In English, the unvoiced consonant sounds are /p, t, k, f, θ, s, ʃ, ʧ h/. All others are voiced. All of the unvoiced sounds, with the exception of /h/, have an equivalent voiced pair in which the place and manner of articulation is identical; the only difference is whether the vocal chords vibrate or not. For instance, the mouth position for /f/ and /v/ are exactly the same; however, /f/ is not voiced and /v/ is voiced.

This voicing contrast can be challenging for some English-language learners because, according to Reference Maddieson, Dryer and HaspelmathMaddieson (2013), approximately one-third of the world’s languages do not contain a similar distinction. Arabic speakers are accustomed to accepting quite a wide range of sounds as /b/, and, in learning English, they face the task of differentiating /b/ from /p/ (Reference Field and GrantField, 2014, p. 83). Because [p] and [b] are allophones of the same phoneme in Arabic, they do not distinguish words, and as a result, when speaking English, Arabic speakers may produce what sounds like /b/ instead of /p/ at the beginning of words, so parking lot may be pronounced as barking lot (Reference Smith, Swan and SmithSmith, 2001). In this case, teachers can have students hold fluffy feathers or small, thin strips of paper in front of their mouths while they are repeating minimal pairs such as pit and bit. The goal is for the feathers or paper to move more visibly when the initial sound is /p/ than it should for /b/. Alternatively, students can hold their hands in front of their mouths to feel the difference in aspiration with the contrast between the sounds.

Furthermore, when students’ L1s contain voiced and unvoiced sounds, they may be constrained by rules most speakers are not even aware of. For example, speakers of some languages have trouble voicing consonants at the ends of words because voiced consonants do not occur at the ends of words in their native language. In addition, some languages have no voiced fricatives while others do not have voiced stops (Reference Avery, Ehrlich, Avery and EhrlichAvery & Ehrlich, 1992a, p. 26). Some of these differences carry a substantial functional load. In other words, if a speaker pronounces thank as /ðæŋk/ instead of the expected /θæŋk/, most proficient listeners will be able to recognize this word without trouble because there are very few minimal pairs (for instance, thigh and thy) for /θ/ and /ð/. However, the possibility for misunderstanding is far greater for /p/ and /b/, /t/ and /d/, /k/ and /g/, /s/ and /z/, and /f/ and /v/ (Reference BrownBrown, 1988). To teach voicing, the difference can be demonstrated by placing the palm of the hand on the larynx when articulating contrasting sounds such as /s/ and /z/ and feeling the vibration in the latter. Alternatively, one can cover one’s ears and feel the sensation of the resonance (Reference Przedlacka and KangPrzedlacka, 2018, p. 40). In addition, since the unvoiced stops of English have more aspiration (the puff of air that is produced while saying them is greater) than the voiced stops, using a feather or a slip of paper may help students feel and visualize the difference between troublesome contrasts in syllable-initial position.

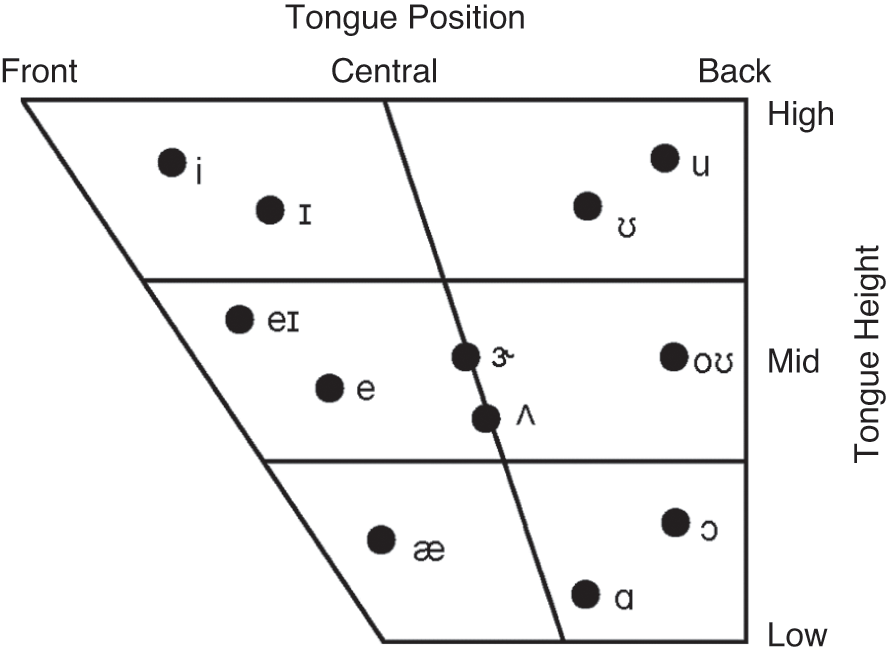

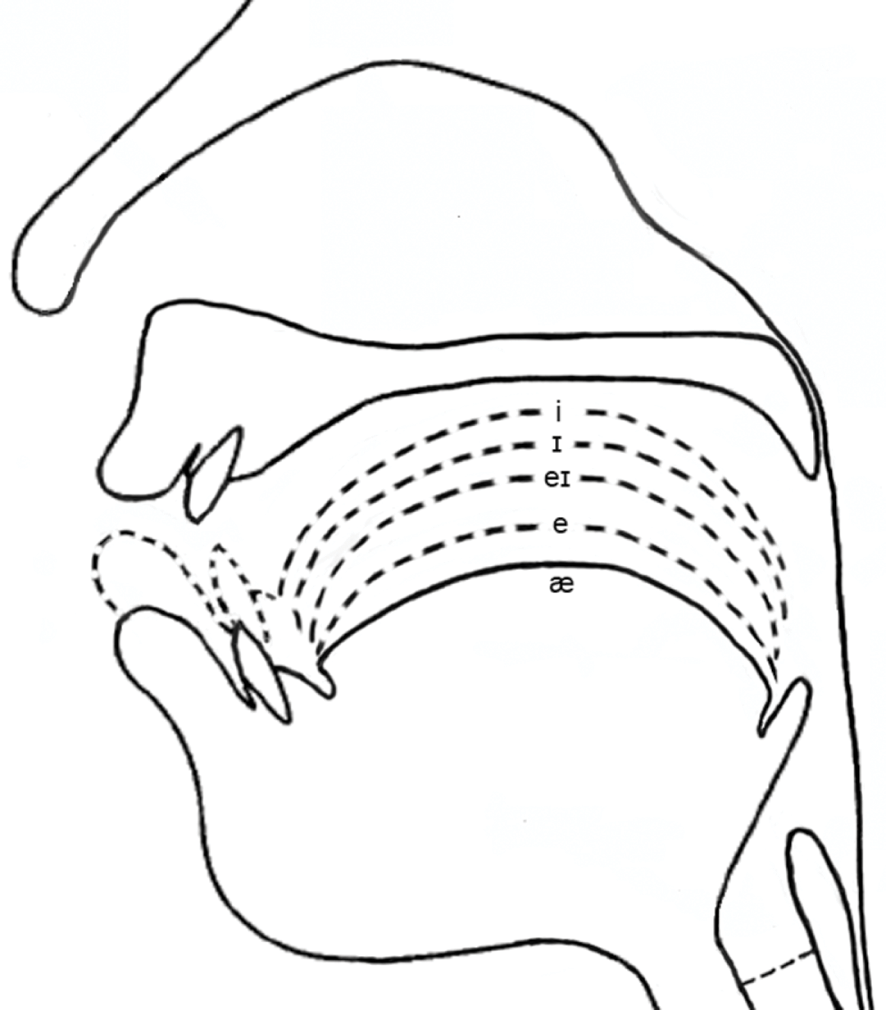

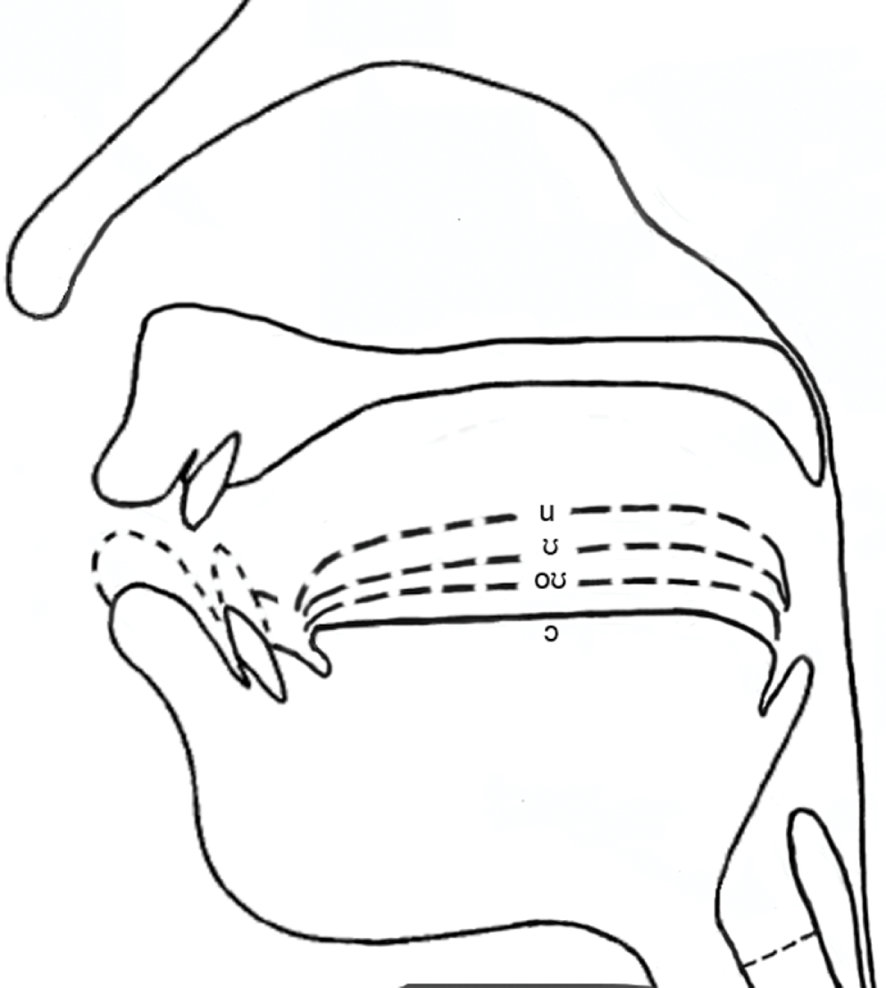

3.3 Articulation: Vowels