Book contents

- Frontmatter

- Contents

- Preface

- Nomenclature

- 1 Introduction

- 2 Survey of Distributions

- 3 Monte Carlo

- 4 Discrete Random Variables

- 5 The Normal Distribution

- 6 Handling Experimental Data

- 7 Mathematics of Random Variables

- 8 Bayes

- 9 Entropy

- 10 Collective Behaviour

- 11 Markov Chains

- 12 Stochastic Processes

- A Answers to Exercises

- B Probability Distributions

- Bibliography

- Index

- References

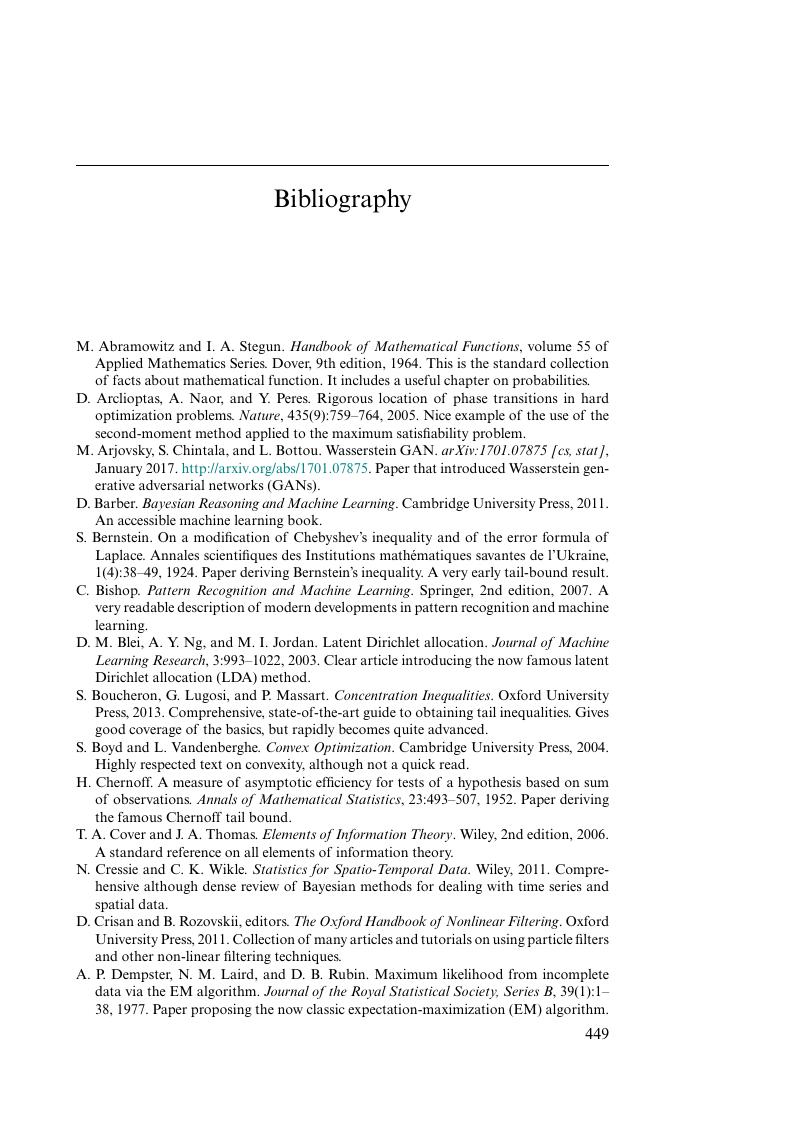

Bibliography

Published online by Cambridge University Press: 03 January 2020

- Type: Chapter

- Information: The Probability Companion for Engineering and Computer Science, pp. 449-453

- Publisher: Cambridge University Press

- Print publication year: 2020