Introduction

Consider this scenario: You would like to buy a new pair of shoes. You have a particular pair in mind, but you are not yet convinced that you really need or want it. You go online to check the shoes out. However, once you find them on a shopping website, you are confronted with: ‘Low supply. Buy now!’ Almost instantly, you feel an impulse to buy the shoes immediately, dreading that if you take the time to responsibly consider whether you really need the shoes or can even afford them, you run the risk of the shoes selling out. Sound familiar? Limited-quantity scarcity messages like this one, and others like them (e.g., countdown timers, confirmshaming, hidden subscriptions), are common ‘dark patterns’ found on online retail stores, generally with the goal of inducing impulse buying. Beyond online shopping, dark patterns have been implemented in other settings. Examples include TurboTax hiding and redirecting consumers away from the legally mandated option to file taxes for free toward its paid services (Elliott & Kiel, 2019) and Uber implementing ‘dark nudges’ to prod drivers to work harder and longer (Scheiber, 2017).

The term ‘dark patterns’, coined by Brignull (2010), refers to subtle design features embedded in websites that prey on human psychology to steer consumers into making decisions that, if fully informed or under optimal conditions, they might not make. From a behavioral science perspective, dark patterns are designed to prompt System 1 thinking in consumers, rather than the more deliberate and thoughtful System 2 thinking, by exploiting cognitive biases like scarcity bias or social proof (Stanovich & West, 2000; Kahneman, 2011). Central to why online vendors incorporate dark patterns is their attendant impact on impulse buying.

Impulse buying, generally defined as sudden and unplanned purchases that are emotionally driven and hedonically complex (Stern, 1962; Rook, 1987), has been on the rise alongside the unabated growth of e-commerce. A recent survey found that more than five in six Americans have made impulse purchases, with each person spending an average of $81.75 per session, amounting to almost $18 billion in total (McDermott, 2021). Other studies found that online impulse purchases amounted to approximately 40% of consumers’ online expenditure (Liu et al., 2013). This trend was exacerbated by COVID-19: online shopping grew dramatically during the pandemic, at an estimated 39.1% year-over-year growth in Q1 2021 (Census Bureau, 2021). Slickdeals (an online shopping website) administered two surveys, one before the pandemic in January and another in April during the stay-at-home restrictions, and found that impulse purchases had grown by 18% (Cain, 2020). Indeed, the propensity toward impulse buying contributes in no small part to the broader issue of overspending in America today.

Related Literature on Dark Patterns

Generally, there are three bodies of literature. The first and dominant literature builds upon Brignull's (2010) work to uncover, identify, and typologize different dark patterns (Bösch et al., 2016; Mathur et al., 2019; Di Geronimo et al., 2020). For example, Mathur and his colleagues developed an automated web crawler to extract dark patterns from a set of more than 11,000 shopping websites. They uncovered almost 2000 distinct dark patterns, which were then recategorized into a seven-category taxonomy, each category generally with a distinct underlying psychological mechanism.

The second body of research moves the focus away from identifying dark patterns to assessing their impact on consumers (Nouwens et al., 2020; Bongard-Blanchy et al., 2021; Luguri & Strahilevitz, 2021). Nouwens and his colleagues ran an experiment to understand the impacts of different dark patterns on obtaining users’ consent for personal data collection. They found that not all dark patterns are equally effective. Notification style (barriers that prevent users from interacting until a consent response is received vs. banners that ask for consent but do not block access) does not affect the consent rate, whereas not showing a ‘reject all’ button on the first page increases the consent rate by 22–23 percentage points. In this body of work, more research is needed to better understand which dark patterns are effective, under what conditions, and among whom when it comes to online purchase impulsivity. In Study 1, we conduct a ‘sludge audit’ (Sunstein, 2020) by experimentally testing three dark patterns commonly seen on e-commerce websites to assess their respective effectiveness at increasing online purchase impulsivity.

Building upon the earlier two bodies of research, which establish dark patterns as ubiquitous with varying effectiveness, the third is a small but burgeoning body of research that evaluates interventions to mitigate the impacts of dark patterns (Mills, 2020; Moser, 2020). Though not explicitly examining the impact of price anchoring on pre-discounted cost as a dark pattern, Moser (2020) conducted a series of experiments to test the efficacy of different interventions on purchase impulsivity and purchase intent on a list of discounted shopping items. She found that both reflection (list three reasons why you should/should not buy) and distraction (count the number of red squares in two 10 × 15 tables) interventions reduce purchase impulsivity and intent significantly, relative to the control group. A gap in this literature is the lack of research on which intervention is effective against which dark pattern. Accordingly, in Study 2, we examine the efficacy of different interventions on different dark patterns within the context of online shopping as it relates to impulse buying.

The outline of the article is as follows: first, we introduce Study 1 with a theoretical discussion of dark patterns as sludge, followed by the hypotheses, experimental design, and results. We then discuss Study 2 in a similar fashion, but delve into the theoretical considerations of the interventions tested instead. Lastly, we address the limitations of both studies and offer a nuanced but in-depth discussion of the policy implications.

Study 1: Do Dark Patterns Work?

The Sludging Impact of Dark Patterns

Conventionally, sludges are defined as excessive friction with the intent to inhibit more deliberative System 2 thinking (Thaler, 2018; Sunstein, 2020). More recently, Shahab and Lades (2021) integrated the concept of sludge with transaction cost economics to develop a typology of the costs incurred from sludges, namely search, evaluation, implementation, and psychological costs. Accordingly, dark patterns are sludges intentionally incorporated into the choice architecture of decision-makers to increase search costs (e.g., misdirection), evaluation costs (e.g., concealment), and psychological costs (e.g., induced anxiety brought about by scarcity bias, as illustrated in the introductory scenario). However, are all dark patterns equally effective? Or are some more effective than others, as suggested by the literature? As documented by Mathur et al. (2019), there are almost 2000 different types of dark patterns, making it impossible to test them all. In Study 1, we limit our tests to three commonly experienced dark patterns.

Social norms are among the most commonly used nudges and have been applied to a wide variety of settings ranging from energy conservation (Allcott, 2011) to voting (Gerber & Rogers, 2009). The central reasoning is that when making decisions under uncertainty, most people rely on descriptive norms (‘what most people do’) and/or injunctive norms (‘what you should do’) to guide their behavior (Cialdini, 2006). As a testament to the popularity of social norms, a recent global survey of behavioral practitioners in corporate settings revealed that 83% of them had used social influence (a broad umbrella term that encompasses social norms and social proof) as a behavioral change technique at work (Wendel, 2020). In the online retail space, customer testimonials are common instantiations of social proof. A large body of research on reviews, ratings, and recommendations has found evidence affirming the effectiveness of social proof in improving sales (Amblee & Bui, 2011; Luca, 2016; Gavilan et al., 2018). An unintended consequence of this success in leveraging social proof to drive business growth is the emergence and proliferation of fake reviews as a type of dark pattern (Luca & Zervas, 2016).

H1: Consumers exposed to social proof dark pattern (a customer testimonial) will have significantly higher buying impulse than the control group

Exploiting scarcity bias is another common dark pattern deployed in the retail space. The scarcer a product is perceived to be, the more valuable it is in the eyes of consumers (Cialdini, 2006; Mullainathan & Shafir, 2013). Because product scarcity can influence product price and popularity, limited-edition products and time-limited offers are now part and parcel of most companies’ marketing strategies (Shi et al., 2020). Even though three types of scarcity messaging are typically used in the marketplace, namely time scarcity (e.g., ‘limited time only’), quantity scarcity (e.g., ‘only 10 left in stock’), and demand-related scarcity (e.g., ‘in high demand’), the majority of research focuses on evaluating the first two, to the exclusion of the third (Aggarwal et al., 2011; Luo et al., 2021; Wu et al., 2021). For example, in an experiment using hypothetical advertisements, Aggarwal and his colleagues (2011) found that, relative to the control, the average purchase intent of participants exposed to either limited-quantity (‘First 100 customers only’) or limited-time (‘For six days only’) scarcity messages was significantly higher. Interestingly, not all scarcity messaging is the same: the average intent to purchase was higher for those exposed to the limited-quantity advertisement than for those exposed to the limited-time advertisement. Similarly, Wu et al. (2021) examined both limited-time and limited-quantity messaging in a field experiment and found both to be effective in stimulating impulse purchases. A notable exception is a study that compared demand-related scarcity (‘in high demand’) with supply-related scarcity (‘limited supply only’) messaging and found that the former was more effective than the latter (Aguirre-Rodriguez, 2013). Similarly, in Study 1, we test the effectiveness of both demand-related and supply-related scarcity messaging.

H2a: Consumers exposed to supply-related scarcity messaging (‘Only 5 left in stock – Order soon’) will have significantly higher buying impulse than the control group

H2b: Consumers exposed to demand-related scarcity messaging (‘Item in high demand – Order soon’) will have significantly higher buying impulse than the control group

H2c: Consumers exposed to supply-related scarcity messaging will have significantly lower buying impulse than those exposed to the demand-related scarcity messaging.

Experimental Design and Methodology

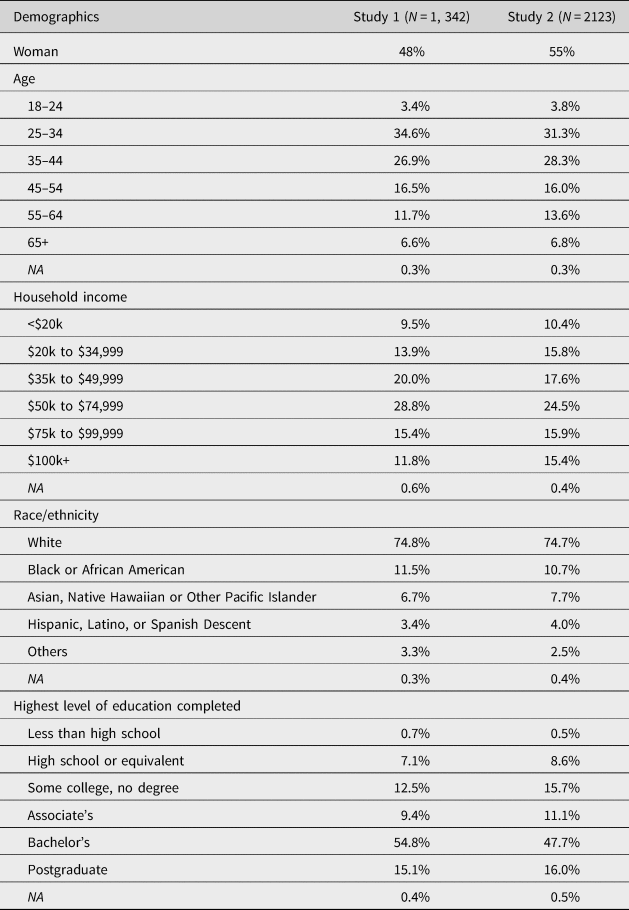

To recap, Study 1 experimentally assesses the impact of three dark patterns (limited-quantity scarcity, testimonials, and high-demand messaging) against a control group on purchase impulsivity within a hypothetical online shopping experience involving only one product. An a priori power analysis at a 5% significance level (95% confidence), targeting 80% power and assuming a small effect size (f = 0.1), determined that a minimum of 274 respondents were required per condition, for a total minimum sample size of 1096. To ensure sufficient power, we administered an online experimental survey to 1342 respondents from Amazon Mechanical Turk (MTurk). Using IQR completion time (Footnote 1) and attention checks (Footnote 2) as criteria, 122 respondents (9%) were identified as non-serious and subsequently dropped from the analysis. Sample characteristics are presented in Table 1.
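The sample-size figures above can be cross-checked with a short calculation. The sketch below is not the authors' procedure (the article does not say which software was used); it assumes the standard fixed-effects one-way ANOVA setup, in which the test statistic follows a noncentral F distribution with noncentrality λ = f² × N. All function names are illustrative, and only the Python standard library is used.

```python
from math import exp, lgamma, log


def _betacf(a, b, x, max_iter=1000, eps=1e-12):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        steps = (
            m * (b - m) * x / ((qam + m2) * (a + m2)),           # even step
            -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2)),  # odd step
        )
        for aa in steps:
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = exp(lgamma(a + b) - lgamma(a) - lgamma(b)
                + a * log(x) + b * log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_cdf(f_value, df1, df2, nc=0.0):
    """CDF of the (non)central F distribution as a Poisson mixture of betas."""
    x = df1 * f_value / (df1 * f_value + df2)
    half = nc / 2.0
    total, weight, j = 0.0, exp(-half), 0
    while j < 1000:
        total += weight * reg_inc_beta(df1 / 2.0 + j, df2 / 2.0, x)
        j += 1
        weight *= half / j
        if weight < 1e-16 and j > half:
            break
    return total


def f_crit(alpha, df1, df2):
    """Critical F value found by bisection on the central F CDF."""
    lo, hi = 0.0, 100.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, df1, df2) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return hi


def anova_power(n_total, k_groups, effect_f, alpha=0.05):
    """Power of a one-way ANOVA for Cohen's f, assuming equal group sizes."""
    df1, df2 = k_groups - 1, n_total - k_groups
    crit = f_crit(alpha, df1, df2)
    return 1.0 - f_cdf(crit, df1, df2, nc=effect_f ** 2 * n_total)


# Study 1 design: 4 conditions x 274 respondents, small effect (f = 0.1).
print(round(anova_power(4 * 274, 4, 0.1), 3))
```

Under these assumptions the printed power should land near the 80% target; a different software package or a slightly different noncentrality convention could shift the result marginally.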

Table 1. Sample characteristics, studies 1 and 2.

Participants were presented with a hypothetical online shopping experience involving only one product: Red Yeast Rice, a supplement purported to reduce cholesterol. We chose Red Yeast Rice for two main reasons: (1) even though the product does exist, it may be obscure enough to minimize the impact of prior preference as a potential confound; (2) supplements, including Red Yeast Rice, are not regulated by the FDA, nor are their uniformity and safety independently verified (see Klimek et al., 2009), a controversy in which the manipulative nature of dark patterns may be exploited. Participants were presented with three pieces of information:

• A fact sheet about Red Yeast Rice, adapted from the National Institutes of Health, to minimize variation in prior knowledge as a potential confound (Figure 1).

• Real product information about Red Yeast Rice, taken from Amazon.com (Figure 2).

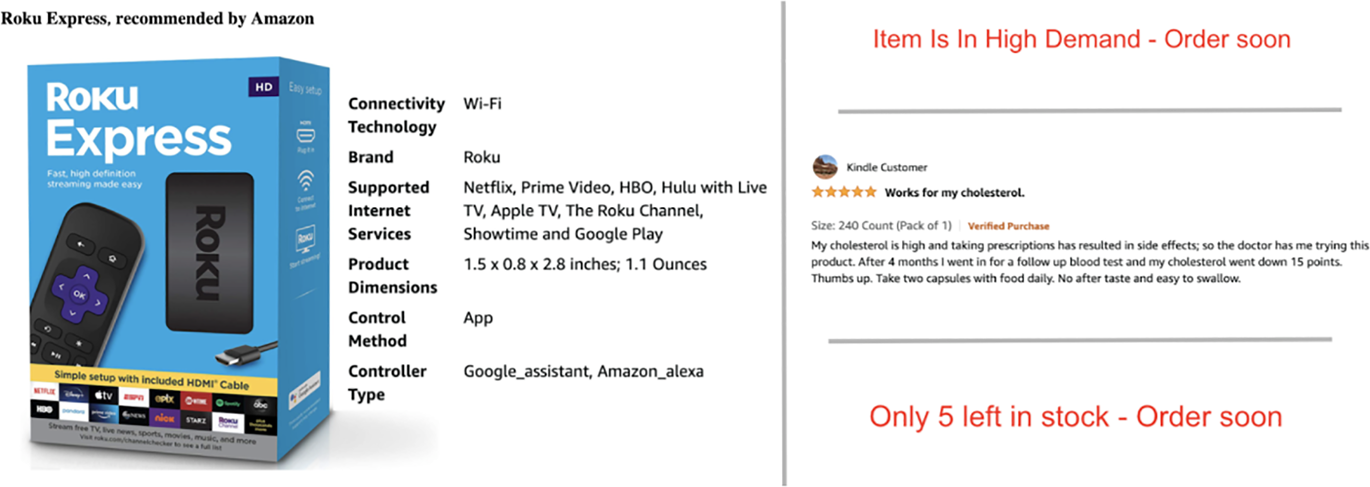

• One out of three dark patterns, randomly selected, or a control (information on an unrelated product recommendation, Roku Express, taken from Amazon.com) (Figure 3).

Figure 1. Fact Sheet About Red Yeast Rice, Adapted From the National Institutes of Health.

Figure 2. Product Information About Red Yeast Rice, Taken From Amazon.com.

Figure 3. (L) Control; (R) Dark Patterns: (T) High-Demand, (M) Positive Testimonials, (B) Limited-Quantity.

After viewing each piece of information in sequential order, participants were asked to rate, on a scale from one to seven, their urge to purchase Red Yeast Rice.

Measuring impulse buying is notoriously challenging (Wells et al., 2011; Chan et al., 2017). Because of social desirability bias, most consumers may be reluctant to admit to indulging in impulse buying, a behavior many regard as undesirable. Beatty and Ferrell (1998) point out that capturing impulse purchases in a timely fashion and in situ can be difficult. In addition, research on online impulse buying has had limited success in capturing actual impulse purchase behavior (Koufaris, 2002). As a result, ‘felt urge to buy impulsively’ is the dominant surrogate outcome used to measure impulse buying (for a review, see Chan et al., 2017). Consistent with the literature, we use this outcome variable as an imperfect but adequate proxy for impulse buying.

Results

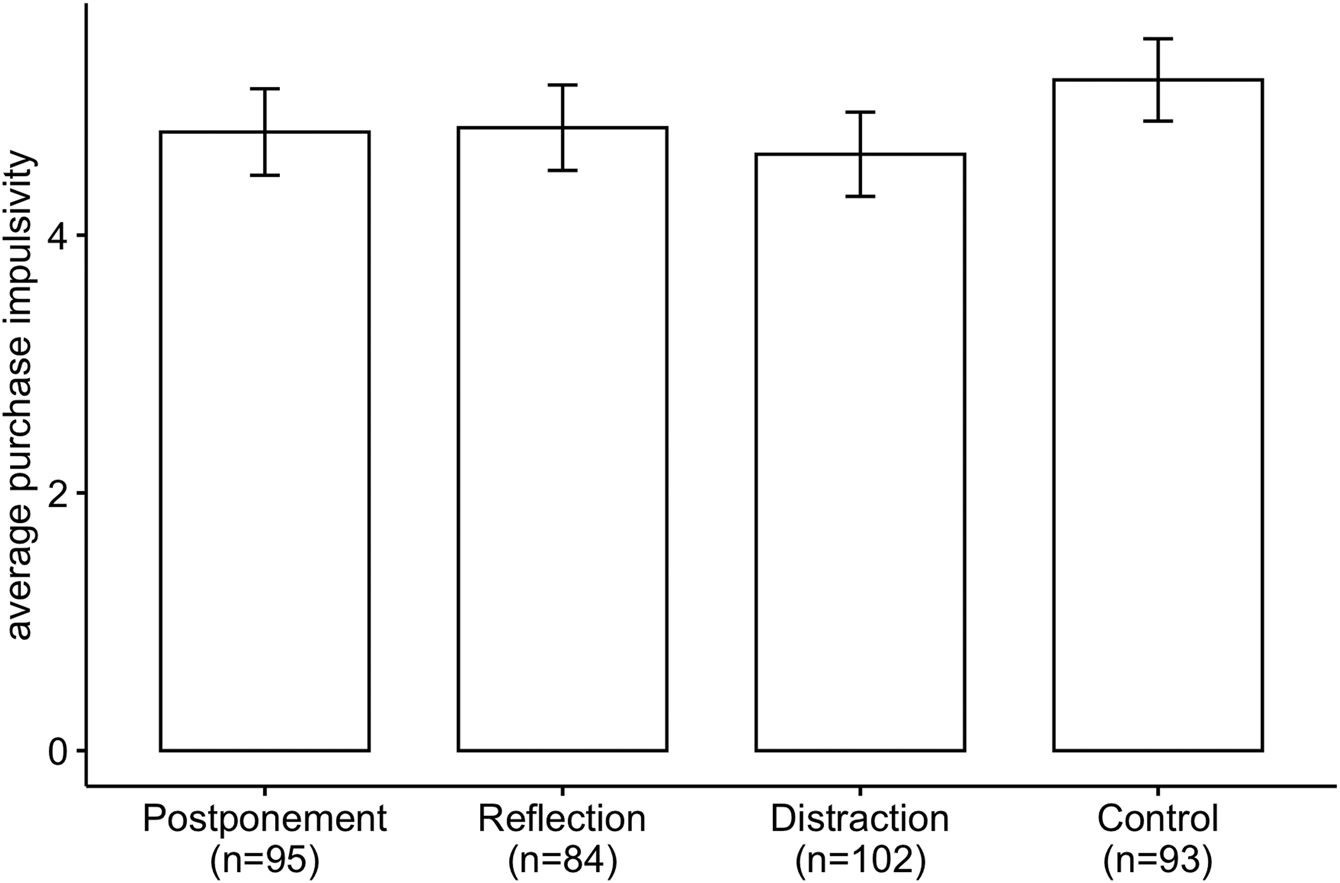

Results from a one-way ANOVA were significant, indicating that the average purchase impulsivity of at least one experimental condition is significantly different from the rest [F(3, 1222) = 4.83, p < 0.01], with a small effect size (ηp² = 0.01). A post hoc pairwise comparison with FDR-adjusted p-values using the BH procedure (Benjamini & Hochberg, 1995) revealed that average purchase impulsivity for the control group (M = 3.78; SD = 2.21) is significantly lower than for each of the treatment groups [high-demand (M = 4.31; SD = 2.14), limited-quantity (M = 4.22; SD = 2.11), and social proof (M = 4.38; SD = 2.09)]. Hypotheses 1, 2a, and 2b are supported. Additionally, no statistically significant difference was detected between the treatment conditions, indicating no support for hypothesis 2c. The results are summarized in Figure 4. Departing from previous studies that found that not all dark patterns are equally effective, our results suggest that all the dark patterns we tested increase average purchase impulsivity, albeit with a small effect size. Having established that dark patterns do affect purchase impulsivity within an online shopping environment, in Study 2 we investigate which interventions may help attenuate the negative influence of each dark pattern.

Figure 4. Results From Pairwise t-Test of Dark Patterns and Average Purchase Impulsivity, Separated by Experimental Conditions. *p < 0.05, **p < 0.01, ***p < 0.001.
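For readers unfamiliar with the BH procedure used in the post hoc comparisons, the following is a minimal sketch of the Benjamini–Hochberg adjustment. The p-values in the usage line are hypothetical placeholders, not values from this study, and the function name is illustrative.

```python
def bh_adjust(p_values):
    """Return Benjamini-Hochberg FDR-adjusted p-values in the input order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, scaling each by m / rank and
    # enforcing monotonicity with a running minimum.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical raw p-values from four pairwise comparisons.
raw = [0.01, 0.04, 0.03, 0.005]
print(bh_adjust(raw))
```

A comparison is then declared significant when its adjusted p-value falls below the chosen FDR threshold (for example, 0.05).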

Study 2: Can Nudges Help Mitigate the Impact of Dark Patterns?

Study 2 builds off Moser's (2020) work to test the efficacy of three different interventions (postponement, reflection, and distraction) against four distinct dark patterns (limited-time scarcity, limited-quantity scarcity, high-demand scarcity, and positive testimonials) within the context of online shopping.

Intervention #1: Postponement

More commonly referred to as ‘cooling-off periods,’ postponement interventions operate like a circuit-breaker that interrupts rash decision-making. Postponements are typically implemented in the period between when a provisional decision has been made and when that decision becomes binding or permanent. Studies show that when people are in a viscerally charged state (i.e., experiencing strong emotions), they tend to make suboptimal decisions (Loewenstein, 1996; Kahneman, 2011). Having some time for these strong emotions to wane gives people an opportunity to reconsider and, perhaps, make better decisions. Similarly, when discussing consumer behavior, Hoch and Loewenstein (1991) argue that establishing temporal distance, that is, giving time for hot heads to cool, has the potential to reduce purchase impulsivity. Hence, it is little wonder that postponement interventions have been implemented across domains ranging from negotiations (Oechssler et al., 2015) to divorce (Wie & Kim, 2015), and are now almost standard practice in consumer protection regulations (Camerer et al., 2003). Testing this hypothesis, Moser (2020) ran a series of experiments and found mixed results on the effectiveness of postponement with regard to purchase impulsivity. In sum, the literature suggests that postponement may be an effective nudge to attenuate the impact of dark patterns in online shopping, though the evidence is inconclusive.

H1a: Average purchase impulsivity is significantly lower for groups exposed to the postponement intervention than the control group.

H1b: Average purchase impulsivity is significantly lower for participants after exposure to postponement intervention than before.

Intervention #2: Reflection

Another common debiasing technique is the consider-the-opposite strategy (Lord et al., 1984), also referred to simply as ‘reflection’ (Moser, 2020). Generally, reflection tasks ask participants to justify why their position is valid and also to consider why the opposite position may be equally valid. Stemming from the concept of disfluency (Mills, 2020), reflection increases the cognitive load of decision-making, forcing participants to confront the assumptions behind their pre-existing position while opening the door to considering alternatives. Experimental studies have shown the effectiveness of reflection across different contexts, such as managerial decision-making (Nagtegaal et al., 2020) and estimating car prices and the probabilities of election outcomes (Mussweiler et al., 2000). Moser (2020) found that reflection does significantly reduce purchase impulsivity and intent, but she tested it only against anchoring on discounted pricing. This study tests reflection against other forms of dark patterns within online shopping.

H2a: Average purchase impulsivity is significantly lower for groups exposed to the reflection intervention than the control group.

H2b: Average purchase impulsivity is significantly lower for participants after exposure to reflection intervention than before.

Intervention #3: Distraction

Contrary to the popular belief that distractions are anathema to optimal decision-making, experimental studies have found the opposite. In a phenomenon known as ‘deliberation-without-attention,’ researchers found that those who were distracted with an unrelated task before rendering a decision made a better choice than both those who made their decision immediately and those who spent some time thinking deeply about it (Dijksterhuis, 2004; Dijksterhuis et al., 2006). To elaborate, consumers who were randomly assigned distraction tasks were more competent at differentiating between attractive and unattractive product alternatives (Dijksterhuis et al., 2006) and reported more satisfaction with their selections (Dijksterhuis & van Olden, 2006), relative to other groups who either evaluated the products immediately or thought consciously about them. Even though some subsequent studies on distraction did not find an impact (e.g., Acker, 2008), a meta-analysis found that the effect of distraction is statistically significant (though admittedly small) and that moderators such as mind-set help explain the discrepancies between studies (Strick et al., 2011).

Alternatively, distraction tasks may lead to cognitive depletion that inhibits consumers from engaging in more deliberative processing, increasing their susceptibility to dark patterns (Stroop, 1935; Pocheptsova et al., 2009; Vonasch et al., 2017; see Footnote 3). In other words, contrary to the theoretical prediction above, distraction tasks could backfire. As such, we test the effectiveness of distraction as a digital intervention aimed at reducing purchase impulsivity.

H3a: Average purchase impulsivity is significantly lower for groups exposed to the distraction intervention than the control group.

H3b: Average purchase impulsivity is significantly higher for groups exposed to the distraction intervention than the control group.

H3c: Average purchase impulsivity is significantly lower for participants after exposure to distraction intervention than before.

In sum, Study 2 contributes to the literature by examining the efficacy of different interventions on different dark patterns within the context of online shopping. This study also contributes to the growing body of behavioral science research that moves away from adding yet another entry to the ever-growing list of cognitive biases toward the more actionable direction of proposing and experimentally testing interventions that may help people make better decisions (e.g., Thaler & Sunstein, 2009; Sin et al., 2019; Milkman, 2021).

Differences Between Studies 1 and 2

There are three main differences between Studies 1 and 2. The first is the focus: Study 1 is interested in understanding the impact of dark patterns, while Study 2 focuses on experimentally testing interventions against dark patterns. The second difference is that we added one more dark pattern, ‘limited-time’ scarcity, increasing the number of dark patterns tested from three in Study 1 to four in Study 2. The last difference is the decision to raise the level of realism in Study 2 relative to Study 1, which we achieved in two ways. First, unlike Study 1, where we presented only one product to participants, we presented ten products in Study 2. The advantage of doing this is that it makes the experience of online shopping more realistic and less like a laboratory setting. Second, all ten products were top-rated best-seller items that we carefully curated from Amazon.com across different categories, ranging from Home & Kitchen to Electronics. Examples include a blender, a Roku Stick, and a laptop backpack. In other words, we intentionally replaced an obscure product like Red Yeast Rice with popular ones, in the hope of maximizing the probability that at least one of the items is of interest to participants. However, the disadvantage of raising realism is that we unavoidably introduce confounds such as prior preferences, a potential issue that is compounded by introducing ten products instead of one. As such, it would be hard to rule out other unexpected confounds and external influences. McGrath (1995) notes that all research designs are essentially a satisficing process between generalizability, precision, and realism; no one design can maximize all three. In designing this study, we intentionally increased the level of realism, while acknowledging that in doing so, precision and generalizability may not be optimal.

Experimental Design and Methodology

The results from our power analysis revealed that a minimum of 104 respondents were required per condition to achieve statistical power of 80% at a 5% significance level (95% confidence) for a small effect size (f = 0.1), for a total minimum sample size of 2080. The demographics of participants from both studies are presented in Table 1. Accordingly, a 5 × 4 between- and within-subject experimental online survey was administered to 2123 respondents, also from MTurk. As in Study 1, we used IQR completion time and attention checks to identify ‘non-serious’ respondents, and a total of 178 participants (8%) were dropped from the analysis. The final sample size is 1945. Participants were randomly assigned, at the start, to 1 of 20 possible arms. With the exception of the baseline and control groups, each arm is a combination of one of four dark patterns (limited-quantity scarcity, limited-time scarcity, high demand, and positive testimonials) and one of three interventions (reflection, distraction, and postponement). The arm assigned neither an intervention nor a dark pattern constituted the baseline, and arms with only a dark pattern but no intervention, or vice versa, constituted the control groups. Table 2 illustrates the overall experimental design. For the sake of brevity, and to stay focused on the research question, we limit our discussion to comparisons of each combination of dark pattern and intervention against the control group (e.g., testimonials + postponement vs. control).

Table 2. Experimental design with 20 conditions, enumerated.
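The 5 × 4 design described above can be enumerated programmatically. The sketch below is illustrative only: the labels, the `arm_role` helper, and the use of simple uniform random assignment are assumptions, since the article states only that participants were randomly assigned to 1 of 20 arms.

```python
import random
from collections import Counter
from itertools import product

# Five dark-pattern levels (four patterns plus none) and four
# intervention levels (three interventions plus none). Labels are illustrative.
DARK_PATTERNS = ["none", "limited-quantity", "limited-time",
                 "high-demand", "testimonial"]
INTERVENTIONS = ["none", "postponement", "reflection", "distraction"]

ARMS = list(product(DARK_PATTERNS, INTERVENTIONS))


def arm_role(dark_pattern, intervention):
    """Classify an arm as baseline, control, or treatment."""
    if dark_pattern == "none" and intervention == "none":
        return "baseline"   # neither a dark pattern nor an intervention
    if dark_pattern == "none" or intervention == "none":
        return "control"    # only one of the two factors is present
    return "treatment"      # a dark pattern paired with an intervention


def assign_arm(rng):
    """Assumed mechanism: draw one of the 20 arms uniformly at random."""
    return rng.choice(ARMS)


role_counts = Counter(arm_role(dp, iv) for dp, iv in ARMS)
print(len(ARMS), dict(role_counts))
print(assign_arm(random.Random(2021)))
```

Enumerating the arms this way makes the structure of Table 2 explicit: one baseline arm, seven control arms (four with a dark pattern only and three with an intervention only), and twelve dark pattern and intervention combinations.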

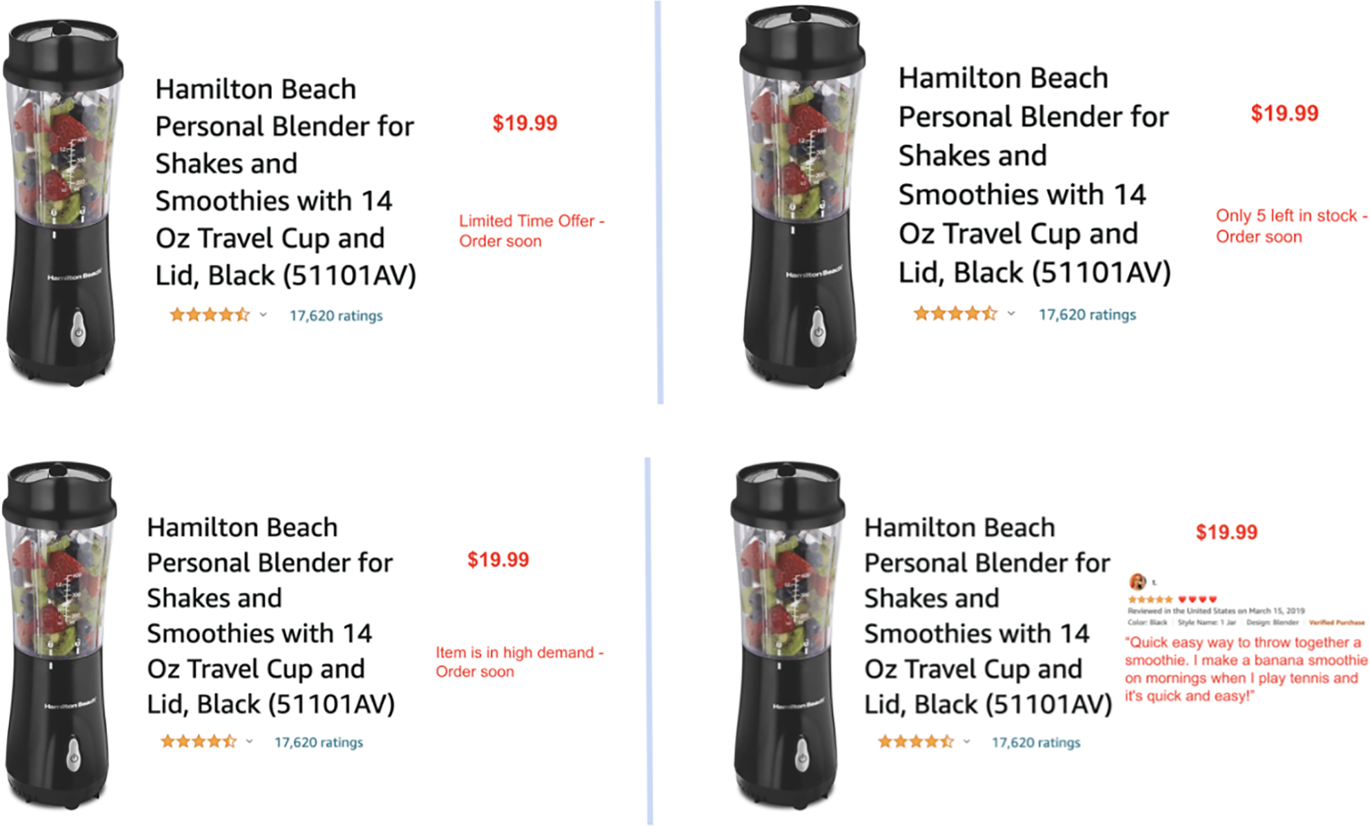

At the start of the experiment, all participants were presented with a list of ten highly rated best-seller products that were carefully curated across different categories from Amazon.com. The list showed a picture of each item and a short description of it, both taken verbatim from Amazon. From the list of ten products, displayed in random order, we asked all participants to click on the item that they felt the strongest urge to purchase. Once selected, the item was accompanied by one of four possible dark patterns, or none at all (representing a control or the baseline, as mentioned earlier; see Figure 5). The four dark patterns are:

• limited-quantity scarcity (‘Only 5 Left in Stock – Order Soon’),

• limited-time scarcity (‘Limited Time Offer – Order Soon’),

• high demand (‘Item is in High Demand – Order Soon’),

• positive testimonials (one real review taken from Amazon.com).

Figure 5. Example of an Item with Limited-Time (Top Left), Limited-Quantity (Top Right), High-Demand (Bottom Left) and Positive Testimonials (Bottom Right) Dark Patterns. The Control Group Does Not Have Any Accompanying Dark Patterns.

Participants were also given the option to continue or to go back to the list and select another item, if they so chose. If participants selected a different product, they were still exposed to the same dark pattern to ensure continuity.

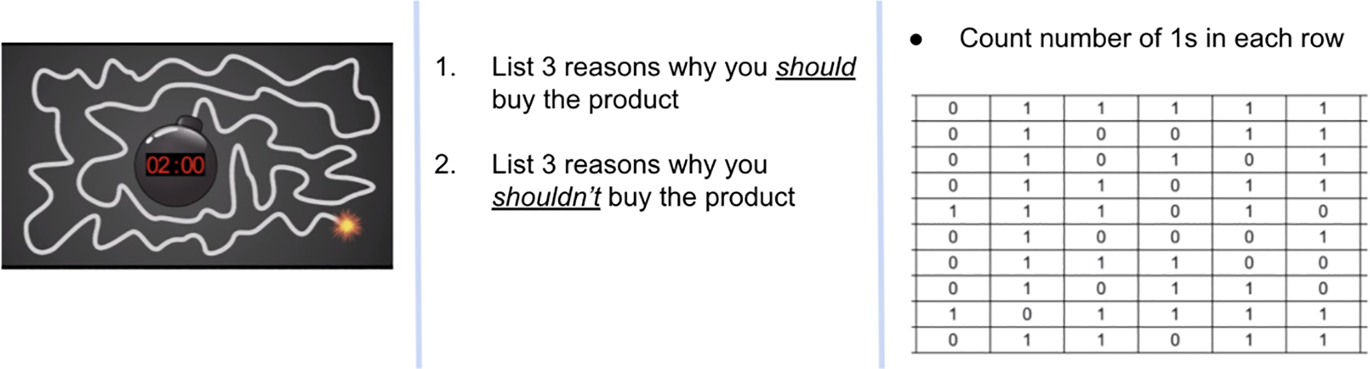

Once participants finalized their product selection, we asked them to self-report their purchase impulsivity on a scale from 1 (no urge) to 7 (very strong urge) (‘at this moment, the urge to buy the product that I selected can be described as’), the same outcome variable as in Study 1. Participants then engaged with the interventions; that is, they had to complete one of three randomly assigned tasks before continuing (see Figure 6):

• postponement (watching a 2-minute delay video before continuing),

• reflection (listing three reasons why they should/should not purchase the item selected),

• distraction (counting the number of ‘1’s in a 10 × 10 grid filled with ones and zeros).

Figure 6. Three Types of Intervention Tasks: Two Minute Delay Postponement (Left), Reflection (Middle) and 10 × 10 Distraction Grid Task (Right). Control Groups Were Not Asked to Complete Any Tasks.
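As an illustration of the distraction task listed above, the sketch below generates a 10 × 10 grid of ones and zeros and computes the answer a participant would need to report. How the authors actually generated their grid is not stated; the uniform random fill and the function names here are assumptions.

```python
import random


def make_grid(rng, size=10):
    """Build a size x size grid of randomly chosen 0s and 1s."""
    return [[rng.randint(0, 1) for _ in range(size)] for _ in range(size)]


def count_ones(grid):
    """Correct answer for the counting task: the total number of 1s."""
    return sum(cell for row in grid for cell in row)


def render(grid):
    """Format the grid as text, one row per line."""
    return "\n".join(" ".join(str(cell) for cell in row) for row in grid)


grid = make_grid(random.Random(7))
print(render(grid))
print("Number of 1s:", count_ones(grid))
```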

Control and baseline groups proceeded with the study without any interventions. After engaging with the interventions, participants (with the exception of those in the baseline and non-intervention groups) had their purchase impulsivity re-measured using the exact same wording (Footnote 4).

Two-Way Mixed ANOVA on Purchase Impulsivity

We ran a two-way mixed ANOVA to compare the main effects of experimental arm (between-subject) and intervention (within-subject), as well as their interaction, on purchase impulsivity (results summarized in Table 3). The results show that the interaction between intervention and experimental arm was significant (F(19, 1945) = 9.01, p < 0.0001, η² = 0.01), suggesting that at least one arm had a significant change in average purchase impulsivity from pre- to post-intervention. Following convention after finding a significant interaction in a mixed ANOVA, we proceeded to examine the simple main effects.

Table 3. Two-way mixed ANOVA results on purchase impulsivity.

Separate one-way ANOVAs were conducted on purchase impulsivity before and after the intervention. Purchase impulsivity was not significantly different between arms pre-intervention (F(19, 1945) = 1.32, p = 0.16, η² = 0.01). However, purchase impulsivity was significantly different between arms post-intervention (F(19, 1945) = 3.48, p < 0.001, η² = 0.03), suggesting that at least one combination of dark pattern and digital intervention was effective in reducing purchase impulsivity, relative to another combination, post-intervention. As in Study 1, a post hoc pairwise comparison with FDR-adjusted p-values using the BH procedure (Benjamini & Hochberg, 1995) revealed that at least one intervention is effective in significantly reducing average purchase impulsivity for three out of four dark patterns, relative to control groups. Due to space constraints, we limit our discussion to experimental conditions that were meaningfully significant (Footnote 5).
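The effect sizes reported for the one-way ANOVAs can be recovered from the F statistics and their degrees of freedom. The sketch below uses the standard identity for a one-way design, η² = (F × df_effect) / (F × df_effect + df_error), and the conversion to Cohen's f used in the power analysis. Function names are illustrative, and the degrees of freedom are taken as reported in the text.

```python
from math import sqrt


def eta_squared_from_f(f_value, df_effect, df_error):
    """Eta squared implied by a one-way ANOVA F statistic."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def cohens_f_from_eta_squared(eta_sq):
    """Convert eta squared to Cohen's f."""
    return sqrt(eta_sq / (1.0 - eta_sq))


# Pre- and post-intervention one-way ANOVAs, df as reported in the text.
pre = eta_squared_from_f(1.32, 19, 1945)
post = eta_squared_from_f(3.48, 19, 1945)
print(round(pre, 2), round(post, 2))
print(round(cohens_f_from_eta_squared(post), 2))
```

The same identity applied to the Study 1 result, F(3, 1222) = 4.83, gives a value that rounds to the reported 0.01. It does not carry over directly to the interaction term of the mixed ANOVA, where η² is computed against a different total sum of squares.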

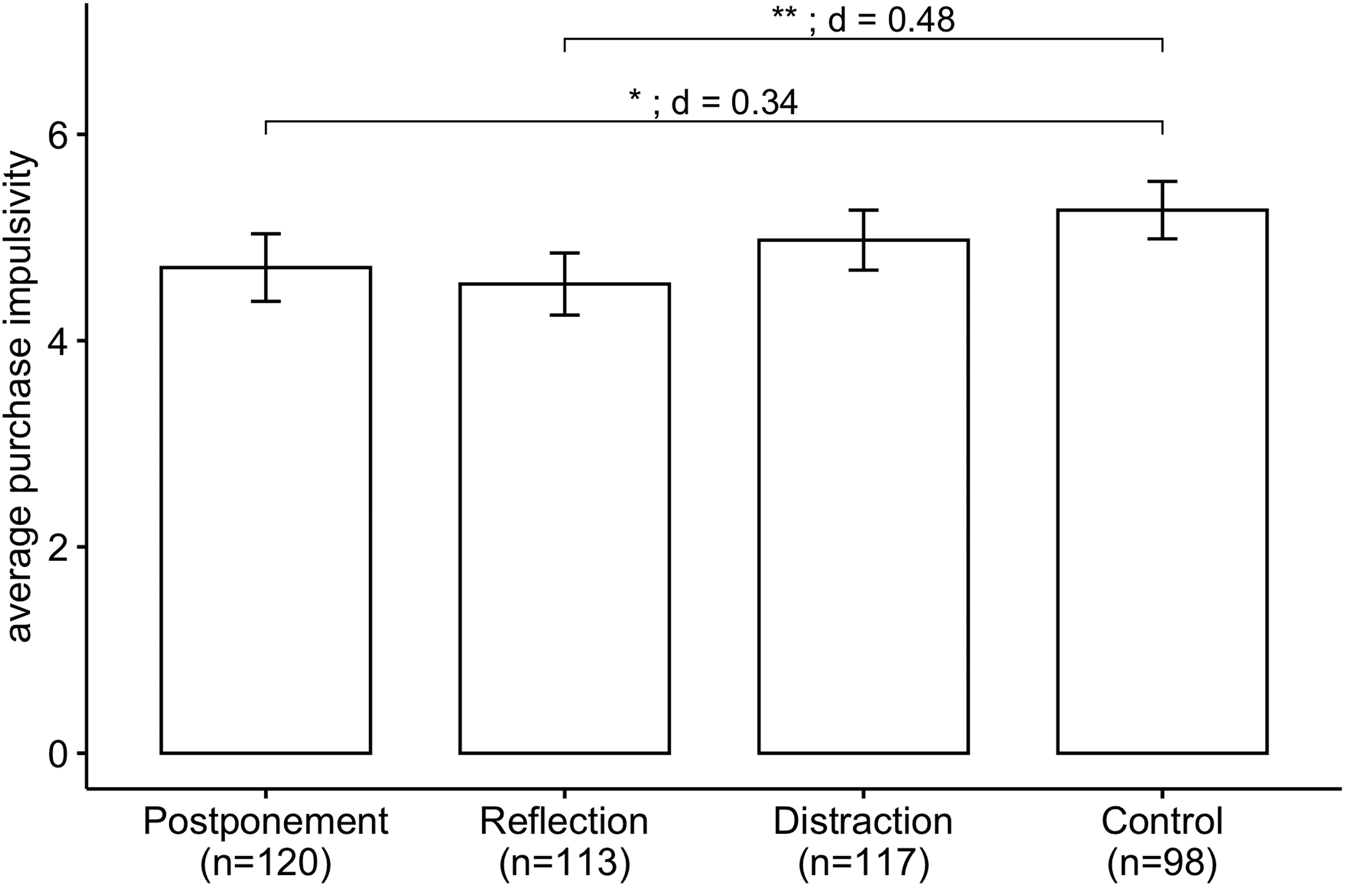

For participants who experienced limited-time scarcity messaging, all interventions [postponement (M = 4.32, SD = 1.89); reflection (M = 4.66, SD = 1.65); distraction (M = 4.70, SD = 1.83)] significantly reduced average purchase impulsivity relative to control (M = 5.30, SD = 1.40) (Figure 7). In contrast, as illustrated in Figure 8, none of the interventions had a significant effect against limited-quantity scarcity messaging [postponement (M = 4.80, SD = 1.65); reflection (M = 4.83, SD = 1.53); distraction (M = 4.63, SD = 1.67); control (M = 5.20, SD = 1.55)]. For the remaining two dark patterns, not all interventions were equally effective.

Figure 7. Limited-Time Dark Pattern: Results of Pairwise Comparison t-Test on Average Purchase Impulsivity, Separated by Interventions. *p < 0.05, **p < 0.01, ***p < 0.001.

Figure 8. Limited-Quantity Dark Pattern: Results of Pairwise Comparison t-Test on Average Purchase Impulsivity, Separated by Interventions. *p < 0.05, **p < 0.01, ***p < 0.001.
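For a rough sense of magnitude, the group means and standard deviations reported above for limited-time scarcity can be converted into a standardized mean difference. The sketch below computes Cohen's d against control under the assumption of equal group sizes, which the text does not report. The function name is ours, and this calculation is an illustration rather than part of the original analysis.

```python
from math import sqrt


def cohens_d_equal_n(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d for two groups, ASSUMING equal group sizes.

    With equal n, the pooled SD is the root mean square of the two SDs.
    """
    pooled_sd = sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# (mean, SD) pairs as reported in the text for limited-time scarcity.
control = (5.30, 1.40)
interventions = {
    "postponement": (4.32, 1.89),
    "reflection": (4.66, 1.65),
    "distraction": (4.70, 1.83),
}

# Positive d means the control group reported higher purchase impulsivity.
effects = {
    name: cohens_d_equal_n(*control, *stats)
    for name, stats in interventions.items()
}

for name, d in effects.items():
    print(f"{name}: d = {d:.2f}")
```

If the actual group sizes differ, the pooled standard deviation should be weighted by degrees of freedom instead, so these values are approximate.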

Among the participants who were exposed to positive testimonials as a dark pattern (Figure 9), only the reflection intervention led to significantly lower average purchase impulsivity (M = 4.36, SD = 1.80) than the control group (M = 5.30, SD = 1.47). When it comes to combating high-demand messaging (Figure 10), participants who engaged with postponement (M = 4.71, SD = 1.81) and reflection (M = 4.55, SD = 1.62) had significantly lower average purchase impulsivity than the control group (M = 5.27, SD = 1.39).

Figure 9. Positive Testimonials Dark Pattern: Results of Pairwise Comparison t-Test on Average Purchase Impulsivity, Separated by Interventions. *p < 0.05, **p < 0.01, ***p < 0.001.

Figure 10. High-Demand Dark Pattern: Results of Pairwise Comparison t-Test on Average Purchase Impulsivity, Separated by Interventions. *p < 0.05, **p < 0.01, ***p < 0.001.

Overall, similar to previous studies, we found that no one size fits all. In other words, not all interventions are equally effective across all dark patterns: some interventions are effective against certain dark patterns while others are not. For example, reflection significantly reduces average purchase impulsivity for all dark patterns except limited-quantity scarcity, whereas distraction is effective against limited-time scarcity only. As a result, we find support for hypotheses 1a, 2a, 3a, and 3b.

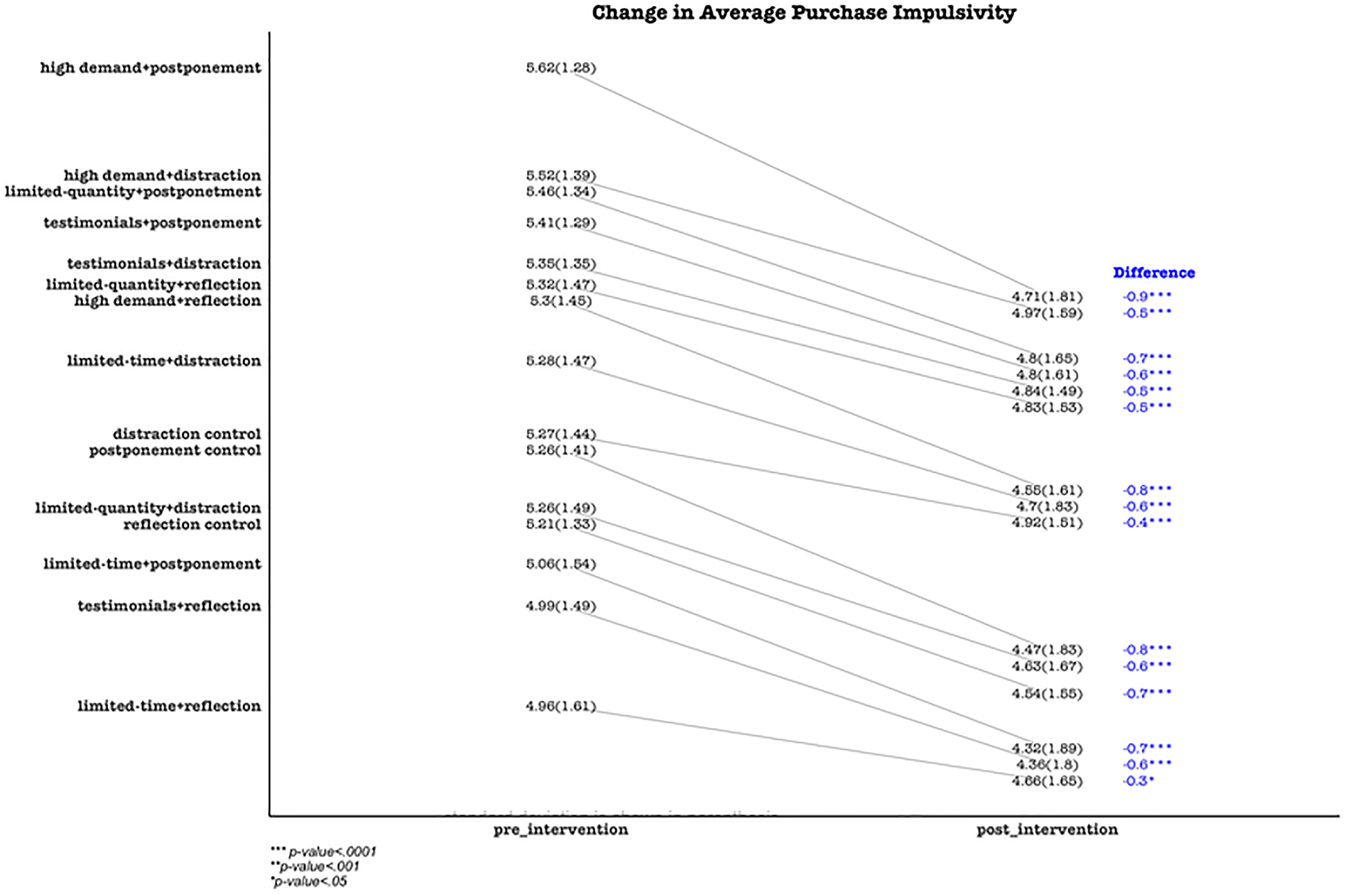

Next, we move from between-subject to within-subject analysis. We ran a repeated-measures ANOVA and, consistent with previous analyses, post hoc pairwise comparisons (with FDR-adjusted p-values using the BH procedure) for all experimental treatment conditions to evaluate the impact of the interventions on the change in average purchase impulsivity. Table 4 summarizes the results of the repeated-measures ANOVA and the corresponding effect sizes, grouped by dark pattern. Overall, the results indicate that average purchase impulsivity declined significantly across all interventions. Figure 11 presents average purchase impulsivity before and after intervention, the corresponding change, and the adjusted p-value, separated by treatment condition. Although the size of the post-intervention decline varies somewhat between arms, average purchase impulsivity declined significantly for all treatment groups. Hypotheses 1b, 2b, and 3c are therefore supported.

Figure 11. Comparison of Average Purchase Impulsivity Before and After Interventions Across Experimental Conditions.

Table 4. Repeated-measures ANOVA of interventions on average purchase impulsivity, by experimental group.

*p < 0.05, **p < 0.01, ***p < 0.001.

Limitations

One unexpected result from Study 2 is the lack of a statistically significant difference between the control group (dark pattern but no intervention) and the baseline group (no dark pattern and no intervention), a finding that differs from Study 1 and previous studies (e.g., Moser, 2020; Luguri & Strahilevitz, 2021). One possible explanation, as elaborated earlier in the methodology, is our decision to increase the realism of Study 2. In Study 1, we offered participants only one product, Red Yeast Rice, for which participants were relatively unlikely to have prior preferences or even prior knowledge. In Study 2, however, we introduced a variety of highly differentiated products that are common and well-rated. As a result, confounds such as prior preferences or current ownership (the possibility that many participants already owned those products and thus were not in the market for additional purchases of the same item) may have attenuated the impact of dark patterns in the control groups, relative to the baseline. In other words, a limitation of our experimental design in Study 2 is that it is not set up to sufficiently test the effectiveness of dark patterns, that is, to detect a significant difference between controls (dark pattern but no intervention) and baseline (no dark pattern and no intervention). This is a trade-off we made for greater realism in Study 2. However, we believe that it does not significantly affect the results presented above on the effectiveness of each intervention against different dark patterns.

The second limitation is the use of MTurk for our sample, whose demographics tend to be more diverse than those of the general U.S. population (Berinsky et al., 2012; Shank, 2016). Nevertheless, studies have found that, in terms of data quality, MTurk is comparable to population-based samples, indicating an acceptable level of validity and reliability for experimental studies (Buhrmester et al., 2011; Weinberg et al., 2014; Litman et al., 2015).

A third limitation is that this study is based on a hypothetical online shopping scenario. It remains to be seen whether these interventions would work in a real-life situation where consumers are enacting their preferences and spending their own money. Future studies should field test these just-in-time interventions not only on real online shopping websites, but also in other e-commerce contexts such as travel booking websites.

Discussion and Policy Implications

Our study has policy implications in three categories: (1) the nature of dark patterns, (2) evidence-based interventions to combat dark patterns, and (3) broad legislative and commercial considerations.

Nature of Dark Patterns: Persuasive Marketing or Manipulative Motifs

The first addresses the debate on whether dark patterns are manipulative motifs or just persuasive marketing. Some may argue that dark patterns are nothing more than behaviorally driven marketing strategies, sometimes known as ‘behavioral marketing,’ that are geared toward persuasion, not deception (Funkhouser & Parker, 1999; Kivetz & Netzer, 2008). Others may view these seemingly innocuous marketing gimmicks as manipulative motifs that exploit human psychology to engender suboptimal decision-making (Crisp, 1987; Sher, 2011; Bösch et al., 2016; Mathur et al., 2019; Bongard-Blanchy et al., 2021). This concern is especially acute when few of the claims made by marketers can be independently or easily verified by consumers. Is the product really low in stock? Does the time-limited offer really expire after the stated date? Put differently, from a market liberalism perspective, dark patterns are low-cost nudges that can help deliver value to shareholders. From a consumer advocate perspective, dark patterns are manipulative motifs that prey on psychology to prod consumers into making decisions that may or may not be in their best interests. Research addressing deceptive persuasion in marketing is not new (Held & Germelmann, 2018); some have developed frameworks (Sher, 2011), while others have tested interventions (Sagarin et al., 2002).

Admittedly, not all persuasive marketing is necessarily a dark pattern. We consider practices that are deceptive and do not have consumers' best interests in mind to be dark patterns. However, that line is increasingly blurred today. Here is an example. Ferreira and Goh (2021) found that intentionally concealing the full array of product choices by rotating the product assortment throughout the season introduces uncertainty into purchasing decisions. As a result, consumers, especially myopic ones, end up purchasing more than they intended to. In the parlance of sludge, concealment increases the evaluation and psychological costs, which results in consumers' overspending. Concealment is different from curation. The former is intended to introduce uncertainty while the latter reduces decision paralysis. Nonetheless, whether concealment is a clever nudge that helps improve business outcomes or a deceptive practice that exploits human psychology is equivocal. We suggest two ways forward for policy practitioners to address such ambiguity.

The first is investigating dark patterns through the lens of standpoint epistemology (Harding, 1992; Sprague, 2005; Collins, 2010). At its core, standpoint epistemology interrogates the relationship between social identities and knowledge, also known as perspectival differences. Perspectival differences posit that knowledge is shaped by one's social location (e.g., gender, socio-economic status, race/ethnicity) and the unique lived experiences that come with it. As a result, situated knowledge is shared only among those who are in the same social location, and outgroup members, in principle, do not have access to knowledge outside of their own social location. For example, understanding parenting from the social location of men versus women will yield qualitatively distinct sets of knowledge because their lived experiences differ with their social location, in this case gender. In other words, standpoint epistemology argues that knowledge is always partial, situated, and emergent from one's social location (Haraway, 1988). Similarly, whether dark patterns are persuasive marketing strategies or manipulative motifs is largely a matter of standpoint. In a situation where both views may be true, policy practitioners should err on the side of caution and always put the interests of consumers first. We should continue to identify and call out dark patterns that improve business outcomes at the expense of consumers, as in the cases of Turbotax and Uber that we recounted in the introduction.

The second way is taking a Pareto approach. Mills (2020) argues against dichotomized thinking about ‘good nudges’ versus ‘bad sludges.’ Instead, he offers a framework that contrasts Pareto outcomes (everyone benefits) with rent-seeking outcomes (only the choice architect, such as the company, benefits). Under this framework, mitigating the impact of dark patterns can potentially reduce impulsive shopping among consumers (which is good for the user) and also reduce common business consequences of impulsive shopping such as chargebacks, returns, and friendly fraud, which can be costly to companies (which is good for the firm). In short, reducing the prevalence of dark patterns and attenuating their negative consequences can result in a Pareto situation where both parties (consumers and businesses) stand to gain.

Evidence-Based Interventions Against Dark Patterns

For practitioners looking to implement interventions to combat dark patterns, which intervention works best? Because results from the within-subject analyses show that all interventions led to a significant decline post-intervention, we rely on the effect sizes in Table 4 to make our assessment. Based on the relative effect sizes for each dark pattern, the results suggest that when confronting limited-quantity scarcity messaging, postponement works best (η² = 0.05), followed by distraction (η² = 0.04). Postponement (η² = 0.08) is the most effective against high demand, followed by reflection (η² = 0.06). When it comes to attenuating the effects of positive testimonials, postponement and reflection are equally effective (η² = 0.04). Lastly, postponement (η² = 0.05), followed by distraction (η² = 0.03), is the most effective against limited-time scarcity. In sum, similar to previous studies (e.g., Nouwens et al., 2020; Luguri & Strahilevitz, 2021), our results again indicate that no one size fits all when it comes to combating dark patterns. Even so, postponement appears to have a nontrivial effect size (ranging from small to medium) across all dark patterns when it comes to reducing purchase impulsivity. In other words, when in doubt, use postponement.
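The recommendation logic in this paragraph can be expressed as a small lookup, sketched below in Python. The dictionary holds only the η² values quoted in the text (the two strongest interventions per dark pattern, not the full Table 4). The key names, the function `recommend`, and the example input `"confirmshaming"` are hypothetical labels introduced for illustration.

```python
# Within-subject effect sizes (eta squared) as quoted in the text.
# Only the top two interventions per dark pattern are quoted, so this
# table is deliberately partial and does not reproduce Table 4.
REPORTED_ETA_SQ = {
    "limited_quantity": {"postponement": 0.05, "distraction": 0.04},
    "high_demand": {"postponement": 0.08, "reflection": 0.06},
    "positive_testimonials": {"postponement": 0.04, "reflection": 0.04},
    "limited_time": {"postponement": 0.05, "distraction": 0.03},
}

# "When in doubt, use postponement."
DEFAULT_INTERVENTION = "postponement"


def recommend(dark_pattern):
    """Return the intervention(s) with the largest quoted effect size.

    Ties return every tied intervention, sorted by name. Dark patterns
    that were not studied fall back to the default.
    """
    scores = REPORTED_ETA_SQ.get(dark_pattern)
    if not scores:
        return [DEFAULT_INTERVENTION]
    best = max(scores.values())
    return sorted(name for name, eta in scores.items() if eta == best)


for pattern in list(REPORTED_ETA_SQ) + ["confirmshaming"]:
    print(pattern, "->", recommend(pattern))
```

Because the effect sizes are small to medium and come from a hypothetical shopping scenario, a lookup like this is best read as a starting point for field testing rather than a fixed rule.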

Broad Legislative and Commercial Considerations

So what concrete steps can be taken from a legislative and commercial position to combat dark patterns? We identify three. First, the proliferation and impact of dark patterns on consumers have not gone unnoticed on Capitol Hill. A bill called the ‘Deceptive Experiences to Online Users Reduction Act (DETOUR Act)’ was introduced in Congress in 2019 and is currently pending in the Senate. In 2021, California passed a regulation updating the 2018 California Consumer Privacy Act to ban the use of dark patterns, but only in the context of data privacy. It is unclear whether there would be enough political capital to enact an outright ban preventing companies from using dark patterns. Even if there is, it is equally unclear whether the ban would be limited to a specific context like data privacy or apply across the board. Additionally, enforcement of the ban may be tricky. In sum, the legislative approach, while probably the most impactful, may take much longer for the details to be fleshed out.

Second, the fight against dark patterns could take a public awareness route, similar to financial literacy, where authorities take the educate-the-problem-away approach. On some level, this makes sense because it is hard to convince consumers that they are being manipulated if they do not know that simple design features they now take for granted are subtly influencing their decision-making process. However, to use financial literacy as a parallel again, a meta-analysis found that financial literacy has minimal impact on people's financial behaviors (Fernandes et al., 2014). In fact, financial literacy explains only 0.1% of the variance in their behaviors. The same probably goes for dark patterns. Bongard-Blanchy and colleagues (2021) found that people are generally aware of the presence of dark patterns but remain unsure of the impact dark patterns have on them. As such, awareness and education may be necessary but ultimately insufficient to effectively combat dark patterns.

The last is technology, specifically the integration of nudges with technology, which we find most promising. As mentioned earlier, not all interventions are equally effective, with uneven impact across dark patterns. To fully protect consumers, there may be a need to develop tailored solutions that not only combat the specific dark pattern at hand, but also take into account consumers’ personality, needs, wants, and social location, such as economic status. Minimizing the impact of dark patterns that induce impulse shopping may have a larger impact on the most economically vulnerable than on the wealthy. Similarly, attenuating the influence of dark patterns on data privacy may be more crucial among young kids, who tend to engage with social media the most. To achieve this, a promising solution is to leverage artificial intelligence and machine learning to develop a tool that takes data from web crawlers that scrape websites to identify dark patterns, as Mathur and colleagues (2019) did, and uses it to deliver the specific just-in-time behavioral interventions that can most effectively combat the dark pattern at hand.

Conclusion

To address the problem of dark patterns embedded in e-commerce to induce impulsive shopping, we conducted two experiments. The first (Study 1) examines the impact of three different dark patterns (limited-quantity scarcity, high-demand, and positive testimonials) alongside a single product on impulse buying behavior. The results show that all three dark patterns significantly increase purchase impulsivity, relative to the control group. Interestingly, unlike previous studies, we did not find any significant differences between dark patterns. The second experiment (Study 2) evaluates the effectiveness of three types of behaviorally informed interventions (reflection, distraction, and postponement) against four different types of dark patterns (limited-quantity scarcity, limited-time scarcity, high-demand social proof, and positive testimonials), this time presented alongside multiple products. Within-subject analysis found that any exposure to interventions, regardless of dark pattern, significantly reduces purchase impulsivity. This suggests that most consumers will benefit from having any intervention, relative to having none, when it comes to reducing the impact of dark patterns. Yet between-subject analysis found that, contingent on which dark pattern is at play, some interventions were effective while others were not, indicating that not all interventions are equally effective. In sum, when it comes to combating dark patterns, a tailored approach may be most effective, if implemented at scale.

Acknowledgments

We are grateful to Kate Thorne, Ben Ball, and Christian Pedersen for their invaluable feedback and comments on earlier drafts of this manuscript.