Introduction

The use of high-fidelity simulation in emergency medicine (EM) training programs is increasing rapidly.Reference Okuda, Bond and Bonfante 1 There is abundant evidence supporting simulation-based training in medical educationReference McGaghie, Issenberg and Cohen 2 and a growing body of literature showing that simulation is a valid tool for competency assessment.Reference Cook, Brydges and Zendejas 3 Simulation has the potential to meet the need for valid competency-based assessment toolsReference McGaghie, Issenberg and Petrusa 4 as the assessment of clinical expertise shifts away from traditional knowledge-based examinations toward competency-based methods. 5 We describe the development of, and our experience with, a simulation-based Objective Structured Clinical Examination (OSCE) for assessing resuscitation and procedural skill competency in the Queen’s University EM postgraduate training program.

Description of Innovation

Rationale

There has been a broad call for tools to assess the competency of postgraduate medical trainees in the United States,Reference Steadman and Huang 6 Canada, 5 and internationally.Reference Amin, Boulet and Cook 7 , Reference Hamstra 8 As the Royal College of Physicians and Surgeons (RCPS) moves toward implementing competency-based medical education curricula for postgraduate training,Reference Frank, Snell and Sherbino 9 the need for tools that accurately assess clinical competencies has become paramount. Traditional knowledge-based examinations (written and oral exams) test trainees at the “knows how” level of Miller’s pyramid.Reference Miller 10 Direct observation of performance in simulation, by contrast, allows knowledge, clinical reasoning, communication, and teamwork to be evaluated simultaneously.Reference Epstein 11 In 2008, we launched a simulation-based OSCE in response to this call, to assess competency at the “shows how” level.Reference Boursicot, Etheridge and Setna 12

Trainees and faculty

The Queen’s University postgraduate EM program oversees 30 resident trainees per year: 20 Royal College of Physicians and Surgeons of Canada EM candidates (RCPS-EM) and 10 College of Family Physicians of Canada EM candidates (CFPC-EM). All RCPS-EM and CFPC-EM residents are required to participate twice yearly in a standardized OSCE held in our clinical simulation centre (CSC). Two faculty members with training in medical education are primarily responsible for delivering the simulation-based OSCE assessments, while the RCPS-EM and CFPC-EM program directors assist with debriefing trainees after their performances.

Assessment framework

Each resident completes two or three resuscitation scenarios in sequence and is then debriefed by a faculty member for up to 30 minutes. The debriefing focuses on each trainee’s strengths, weaknesses, and areas for improvement.

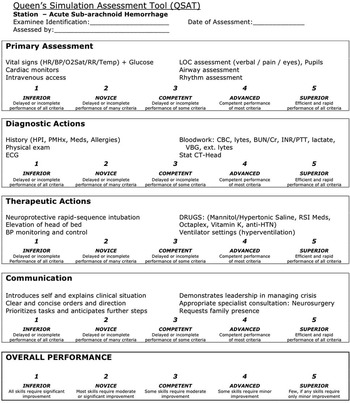

Faculty debriefers use a standardized Queen’s Simulation Assessment Tool (QSAT) for the resuscitation scenarios (Figure 1). The tool has evolved since the inception of the assessment program and has been validated both locally and in a multicentre setting.Reference Dagnone, Hall and Woolfrey 13 , Reference Hall, Dagnone and Lacroix 14 The QSAT was designed as a hybrid scoring tool with four anchored domain scores (primary assessment, diagnostic actions, therapeutic actions, and communication) and an overall performance score (global assessment). Each domain includes key anchors, which can be modified to help expert evaluators determine scores. EM and critical care physicians used a modified Delphi technique to adapt the generic QSAT to each standardized scenario. The QSAT is readily adapted to multiple resuscitation scenarios and remains an ongoing research focus within our program.Reference Hall, Dagnone and Lacroix 14 , Reference Hall, Pickett and Dagnone 15

Figure 1 Sample of the Queen’s Simulation Assessment Tool (QSAT) used to assess resident performance in an OSCE station and guide feedback during debriefing.

Scenarios

OSCE scenarios are run in a single room configured as a simulated resuscitation bay, using a high-fidelity mannequin. Each scenario is run by a simulation-trained faculty member and an experienced simulation laboratory technician who operates the mannequin, with standardized actors portraying a nurse and a respiratory therapist. The scenarios are pre-scripted and delivered in a standardized fashion to all residents. All resident performances are recorded with the Kb Port ETC Pro system (Kb Port, Allison Park, PA), which captures three camera views and the cardiac monitor together with audio.

The scenarios were designed, implemented, and debriefed by EM faculty with training in simulation-based instruction. The two faculty members primarily responsible for this program have a combined 15 years of experience in simulation-based instruction and assessment, and both hold Master’s degrees in medical education. One has also completed additional simulation training at Harvard University (Boston, USA). The scenarios include cardinal presentations such as unstable arrhythmia, cardiac arrest, bradycardia, decreased level of consciousness, and hypotension. A blueprinting exercise mapped the scenarios against the 7th edition of Rosen’s Emergency Medicine textbookReference Marx, Hockberger and Walls 16 to ensure a proper distribution of resuscitation cases and the assessment of multiple core EM competencies. The standardized scenarios were designed to discriminate between residents at different levels of training by eliciting observable behaviours that have traditionally been best assessed in the actual clinical environment. A validation study of the QSAT, which compiled data from sequential OSCEs, found statistically significant differences in assessment scores between junior and senior RCPS-EM trainees in nine of 10 scenarios, indicating that these scenarios discriminate by training level.Reference Hall, Dagnone and Lacroix 14

Debriefing and self-assessment

Faculty debriefing takes place in an adjacent private conference room after each resident has completed all scenarios in sequence. Performance expectations are based on the resident’s postgraduate year. For each scenario performance, trainees receive a score for each QSAT domain, a total score for all domains combined, and a global assessment score. QSAT scoring sheets are placed in each trainee’s portfolio as a formative, objective measure of their progression and are discussed during quarterly reviews with the program director.
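To make the scoring structure concrete, the following is a minimal Python sketch of a single QSAT score sheet for one scenario. It assumes that each of the four domains and the global assessment is rated on an integer scale from 1 to 5; the class name, field names, and scale are hypothetical illustrations, not the program’s actual record format.

```python
from __future__ import annotations

from dataclasses import dataclass

# The four QSAT domains named in the text; the identifiers are hypothetical.
DOMAINS = (
    "primary_assessment",
    "diagnostic_actions",
    "therapeutic_actions",
    "communication",
)
# Assumed anchored rating range; the real QSAT scale may differ.
SCALE_MIN, SCALE_MAX = 1, 5


@dataclass
class QsatScoreSheet:
    """One trainee's scores for one OSCE scenario (hypothetical record)."""

    scenario: str
    domain_scores: dict[str, int]
    global_assessment: int

    def __post_init__(self) -> None:
        # Require exactly the four QSAT domains, no more and no fewer.
        if set(self.domain_scores) != set(DOMAINS):
            raise ValueError(f"expected domains {DOMAINS}, got {sorted(self.domain_scores)}")
        # Check every rating, including the global score, against the assumed scale.
        ratings = {**self.domain_scores, "global_assessment": self.global_assessment}
        for name, score in ratings.items():
            if not SCALE_MIN <= score <= SCALE_MAX:
                raise ValueError(f"{name}={score} is outside {SCALE_MIN}-{SCALE_MAX}")

    def total_domain_score(self) -> int:
        # The combined score sums the four domains; the global score is kept separate.
        return sum(self.domain_scores[d] for d in DOMAINS)


sheet = QsatScoreSheet(
    scenario="cardiac arrest",
    domain_scores={
        "primary_assessment": 4,
        "diagnostic_actions": 3,
        "therapeutic_actions": 4,
        "communication": 5,
    },
    global_assessment=4,
)
print(sheet.total_domain_score(), sheet.global_assessment)  # prints "16 4"
```

Keeping the global assessment out of the domain total mirrors the hybrid design of the QSAT, in which the overall rating is a distinct expert judgement rather than the sum of the domain scores.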

All video-recorded performances are uploaded to a secure server and linked to each trainee on a Queen’s University online Moodle™ platform (Figure 2). Each resident can access only their own recordings, using a login and password. Residents are also given an online self-assessment scoring tool as a PDF that can be downloaded and completed electronically. Access to their own recordings, together with the opportunity to meet with faculty at a later date, gives residents a means of obtaining further formative feedback after delayed reflection and self-assessment.

Figure 2 A screen capture of an OSCE video recording, as accessed by residents after the examination.

Resident perspective

By the end of their residency, Queen’s University RCPS-EM residents will have participated in 10 simulation-based OSCEs and will have logged over 150 hours in the simulation laboratory through the simulation-based resuscitation curriculum at Queen’s University.Reference Dagnone, Mcgraw and Howes 17 Similarly, Queen’s University CFPC-EM residents will have participated in two simulation-based OSCEs and will have logged over 30 hours in the simulation laboratory. Simulation-based examinations help faculty at our centre assess individual clinical skill acquisition, but they are equally important to trainees, who use them to gauge improvement and reflect on their progress and competency development.

Summary

The Queen’s University EM postgraduate simulation-based OSCE is an innovation at the forefront of competency-based medical education. Since its implementation, using high-fidelity simulation and clinically diverse scenarios to assess the skills and competence of all RCPS-EM and CFPC-EM residents at our centre has allowed faculty and residents alike to gauge clinical performance and improvement over time. The development of a modifiable assessment tool has been essential to the success of our simulation-based OSCE. We expect simulation-based examinations to remain an asset as we train future generations of competent physicians.

Competing Interests: None to declare.