1. Introduction

A random uniform attachment graph, denoted $G^m_n$ and also known as a uniform random recursive dag (directed acyclic graph), can be constructed recursively as follows. Fix $m\geqslant 1$. The initial graph $G^m_1$ is a single isolated vertex. To construct $G^m_{n}$ from $G^m_{n-1}$, we add vertex $n$ to $G^m_{n-1}$, with vertex $n$ born with $m$ edges, which are labelled by $1,\dots, m$. For $i\in [m]\,:\!=\,{\{1,\dots, m\}}$, the other endpoint of $n^{(i)}$, the $i$th edge of vertex $n$, is then uniformly chosen among the existing vertices of $G^m_{n-1}$, i.e., in $[n-1]$. Thus, $G^m_n$ has $n$ vertices and $(n-1)m$ edges, and each edge has a label in $[m]$. Observe that we allow multiple edges when $m\geqslant 2$. An edge in $G^m_n$ can be thought of as always pointing towards the vertex with the smaller label, and so there is no real distinction between the undirected and directed versions of the uniform attachment graph.
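The recursive construction can be illustrated with a short simulation. The following is only a minimal sketch: the function name `sample_uniform_attachment` and the representation of the edge $ji^{(a)}$ as a tuple `(j, i, a)` are choices made here for illustration, and the $m$ edges of each vertex are assumed to be drawn independently.

```python
import random


def sample_uniform_attachment(n, m, seed=None):
    """Sample the edges of a uniform attachment graph on vertices 1..n.

    Each edge is a tuple (j, i, a): the a-th edge of vertex j, pointing to
    the older vertex i < j. The tuple format is an illustrative choice.
    """
    rng = random.Random(seed)
    edges = []
    for j in range(2, n + 1):          # vertex 1 is the isolated initial vertex
        for a in range(1, m + 1):      # edge labels 1, ..., m
            i = rng.randint(1, j - 1)  # uniform over the existing vertices [j-1]
            edges.append((j, i, a))
    return edges


edges = sample_uniform_attachment(n=5, m=2, seed=0)
print(len(edges))  # (n - 1) * m edges in total
```

Storing the label `a` with each edge keeps parallel edges distinguishable, which matters when $m\geqslant 2$.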

When $m=1$, the model is the random recursive tree first studied in [Reference Na and Rapoport16]; and when $m\geqslant 2$, the model was first introduced in [Reference Devroye and Lu6]. There is an abundance of literature on random recursive trees (see e.g. [Reference Drmota7] for an overview), but here we mention [Reference Feng and Mahmoud9–Reference Fuchs11, Reference Holmgren and Janson13], which provide Poisson and normal approximations to the counts of subtree copies. For $m\geqslant 2$, results on vertex degrees can be found in [Reference Devroye and Lu6, Reference Tsukiji and Mahmoud18], and results on depths and path lengths are available in [Reference Arya, Golin and Mehlhorn1, Reference Broutin and Fawzi3, Reference Devroye and Janson5, Reference Tsukiji and Xhafa19]. The recent paper [Reference Janson14] studies the number of vertices that can be reached from vertex $n$ via a directed path, where each edge is thought of as pointing from the larger vertex label towards the smaller one.

In this article, we consider the counts of subgraphs of

![]() $G^m_n$

isomorphic to a given fixed graph as

$G^m_n$

isomorphic to a given fixed graph as

![]() $n\to \infty$

, where the parameter

$n\to \infty$

, where the parameter

![]() $m$

is fixed with

$m$

is fixed with

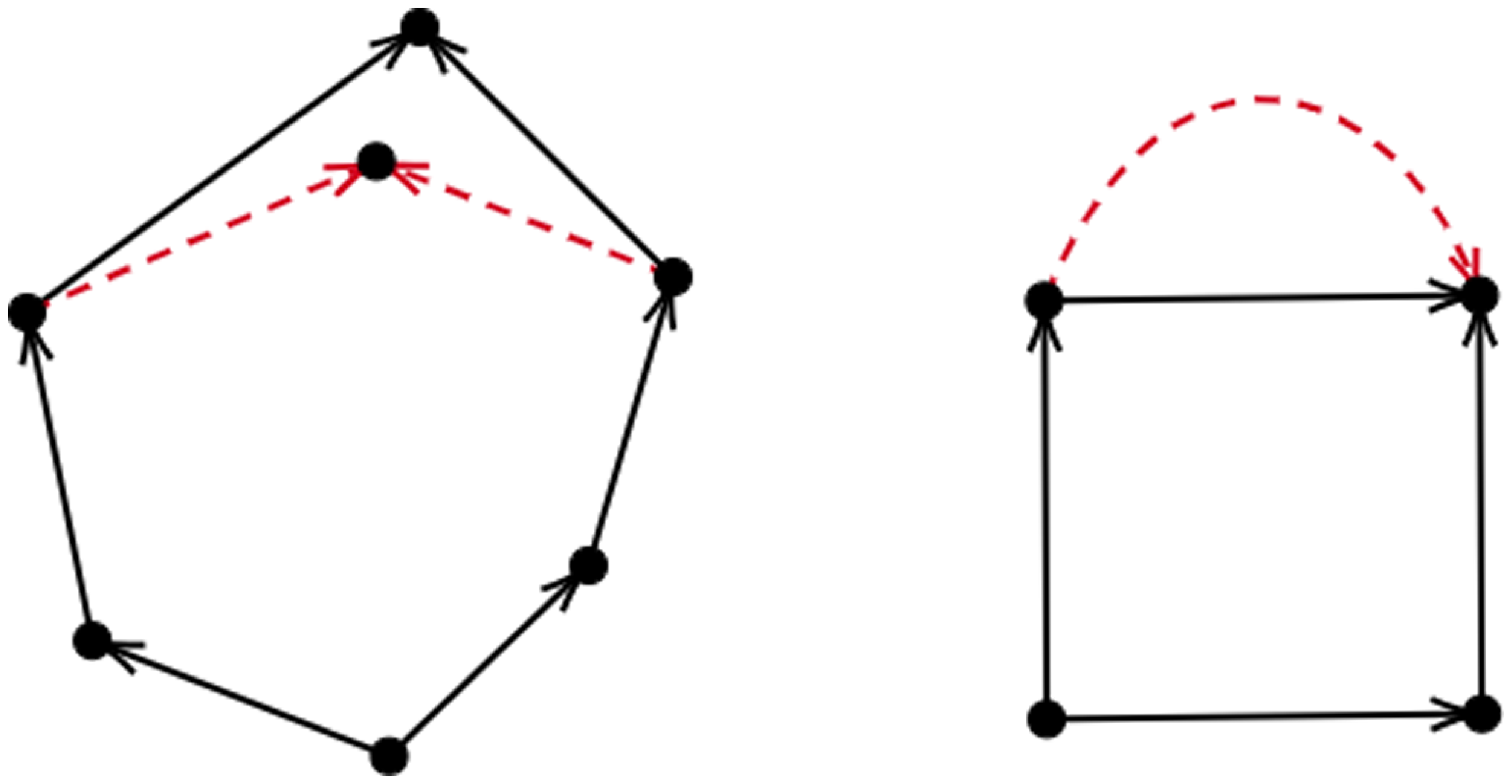

![]() $m\ge 2$

(Figure 1). We provide uni- and multivariate Poisson approximations to the counts of cycles. We also prove that the counts of unicyclic subgraphs are asymptotically normal (after suitable renormalisation). We conjecture that the counts of more general trees are approximately normal and show that this conjecture holds for stars. All these approximation results are accompanied with convergence rates. We also consider multicyclic subgraphs. In particular, we show that if in addition to having more than two cycles, the subgraph is ‘leaf-free’ (i.e. has no vertices of degree one), then the number of copies in the uniform attachment graph is bounded a.s. For multicyclic subgraphs that are not leaf-free, we identify the exact rate of growth of the expectation and an upper bound on the variance. We conjecture that the count of a subgraph of this type converges a.s. after suitable rescaling, and prove a special case.

$m\ge 2$

(Figure 1). We provide uni- and multivariate Poisson approximations to the counts of cycles. We also prove that the counts of unicyclic subgraphs are asymptotically normal (after suitable renormalisation). We conjecture that the counts of more general trees are approximately normal and show that this conjecture holds for stars. All these approximation results are accompanied with convergence rates. We also consider multicyclic subgraphs. In particular, we show that if in addition to having more than two cycles, the subgraph is ‘leaf-free’ (i.e. has no vertices of degree one), then the number of copies in the uniform attachment graph is bounded a.s. For multicyclic subgraphs that are not leaf-free, we identify the exact rate of growth of the expectation and an upper bound on the variance. We conjecture that the count of a subgraph of this type converges a.s. after suitable rescaling, and prove a special case.

Figure 1. A 4-cycle (with blue and dotted edges) as a subgraph of

![]() $G_5^2$

.

$G_5^2$

.

The distributional approximation tool that we use here is Stein’s method, using in particular the size-bias coupling for both types of approximations; see [Reference Barbour, Holst and Janson2, Reference Chen, Goldstein and Shao4, Reference Ross17] for an overview. This is in contrast to the analytic and contraction methods employed in [Reference Feng and Mahmoud9–Reference Fuchs11]. In [Reference Holmgren and Janson13], Stein’s method was applied in proving the Poisson and normal approximation results for the counts of subgraphs and their functionals in random recursive trees. However, we note that, for normal approximation, the approach that we use here is different from that in [Reference Holmgren and Janson13], which does not provide a convergence rate.

A major challenge in the distributional approximation problem of subgraph counts in the uniform attachment graph is the computation of the variance of the counts. In particular, our methods require a lower bound on the variance. In the case $m=1$, the variance can be computed explicitly by using an elegant bijection between the random binary tree and the random recursive tree [[Reference Knuth15], Section 2.3.2], as done in [Reference Holmgren and Janson13]. When $m\geqslant 2$, the order of the variance of the count of certain non-tree subgraphs can be obtained by analysing the covariances of the indicators that a certain subgraph exists in $G^m_n$, and finding the pairs of subgraphs that contribute the dominant term in the variance, but this task becomes difficult in the case of trees. In contrast to the case of $m=1$, it is also much harder to obtain an explicit expression for the mean and variance in the case of $m\geqslant 2$. This is due to the fact that, for most subgraphs, the same set of vertices in the uniform attachment graph can form a copy in several ways. Consequently, we are only able to give the order of the mean and variance (i.e., within constant factors), and in some cases only an upper bound, and we only consider fixed subgraphs and a fixed $m$.

Remark 1.1. It would be interesting to extend the results of this paper to subgraphs $H_n$ depending on $n$, or to $m=m(n)$ growing with $n$. Our methods still work in principle, but it becomes more complicated to estimate the various expressions, and we have not pursued this. We leave these as open problems.

2. Notation

The objective of this paper is thus to study the number of copies of a given graph $H$ in $G^m_n$. We will only consider connected $H$. We emphasise that we generally see both $G^m_n$ and $H$ as undirected. However, each edge in $G^m_n$ has a canonical direction where an edge between the vertices $i$ and $j$ is directed towards the smaller of $i$ and $j$. Thus every copy of $H$ in $G^m_n$ also has an induced direction on its edges. Note, however, that different copies may induce different directions in $H$.

Let $K^m_n$ be the $m$-fold complete multigraph on $n$ vertices, defined to be the multigraph on $[n]$ such that for every pair $(i,j)$ with $1\leqslant i\lt j\leqslant n$, there are $m$ edges $j\to i$, and these edges are labelled $1,\dots, m$. We denote the edge $j\to i$ with label $a$ by $ji^{(a)}$. Note that we can regard $G^m_n$ as a subgraph of $K^m_n$ in the obvious way (preserving edge labels); in fact, we may define $G^m_n$ as the subgraph of $K^m_n$ obtained by choosing, for every $j\in \{2,\dots, n\}$ and every $a\in [m]$, exactly one of the edges $ji^{(a)}$ with label $a$ and $i\in [j-1]$, all choices uniformly at random and independent.

Let $\Gamma$ be the set of all copies of $H$ in $K^m_n$ that do not contain two different edges $ji_1^{(a)}$ and $ji_2^{(a)}$ with $i_1,i_2\lt j$ and the same label $a$. (If there are two such edges $ji_1^{(a)}$ and $ji_2^{(a)}$, then the copy can never be a subgraph of $G^m_n$.) We say that $\Gamma$ is the set of potential copies of $H$ in $G^m_n$. For $\alpha \in \Gamma$, let ${\mathbf{1}}_\alpha$ be the indicator variable that takes value 1 if $\alpha \subseteq G^m_n$, and 0 otherwise. The number $W_n$ of copies of $H$ in $G^m_n$ is thus

\begin{equation} W_n=\sum _{\alpha \in \Gamma }{\mathbf{1}}_\alpha, \end{equation}

and we are therefore interested in approximating the distribution of this sum.

Example 2.1.

For a simple example, let $H$ be a triangle. Then, a potential copy of $H$ in $G^m_n$ is described by its vertex set ${\{i,j,k\}}\subseteq [n]$, where we may assume $i\lt j\lt k$, together with its edges $ji^{(a)}$, $ki^{(b)}$, and $kj^{(c)}$, where $a,b,c\in [m]$ and $b\neq c$.
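To make the triangle example concrete, the sketch below enumerates the potential copies described above and counts how many of them are present in a given edge set. It is illustrative only: the names `potential_triangles` and `count_triangles`, and the encoding of the edge $ji^{(a)}$ as a tuple `(j, i, a)`, are assumptions made here. The brute-force enumeration is only meant for small $n$ and $m$.

```python
from itertools import combinations, product


def potential_triangles(n, m):
    """Yield every potential triangle copy as a triple of labelled edges.

    A copy on vertices i < j < k uses edges ji^(a), ki^(b), kj^(c) with
    b != c, since vertex k cannot use the same label for two edges.
    """
    for i, j, k in combinations(range(1, n + 1), 3):
        for a, b, c in product(range(1, m + 1), repeat=3):
            if b != c:
                yield ((j, i, a), (k, i, b), (k, j, c))


def count_triangles(edges, n, m):
    """Count the potential copies whose three edges all occur in `edges`."""
    present = set(edges)
    return sum(
        all(e in present for e in alpha) for alpha in potential_triangles(n, m)
    )


# Toy graph with n = 3, m = 2: both edges of vertex 2 go to vertex 1,
# and vertex 3 sends edge 1 to vertex 1 and edge 2 to vertex 2.
toy = [(2, 1, 1), (2, 1, 2), (3, 1, 1), (3, 2, 2)]
print(count_triangles(toy, n=3, m=2))
```

The number of potential copies is $\binom n3 m^2(m-1)$, since $a$ is free while $(b,c)$ ranges over ordered pairs of distinct labels.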

Remark 2.2. Let $m_H$ be the largest integer $k$ such that the $k$-core of $H$ is non-empty, i.e., there exists a non-empty subgraph of $H$ where each node has degree at least $k$ (in the subgraph). For example, $m_H=1$ if $H$ is a tree, $m_H=2$ if $H$ is a cycle, and $m_H=k-1$ if $H$ is the complete graph on $k$ vertices. Then it is easy to see that if $m\lt m_H$, then there are no potential copies of $H$ in $G^m_n$ for any $n$ (since in a copy of $H$, the vertex with largest label in the $m_H$-core has at least $m_H$ edges to vertices with smaller labels); hence $\Gamma =\emptyset$ and thus $W_n=0$ deterministically. On the other hand, if $m\geqslant m_H$ and $n$ is large enough, then there are potential copies (since we may create a copy by assigning labels in decreasing order to the vertices of $H$, each time choosing a vertex that has at most $m_H$ edges to the remaining vertices).
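The quantity $m_H$ can be computed by the same peeling idea used in the remark: repeatedly remove a vertex of minimum degree and record the largest degree seen at the moment of removal. The following is a minimal sketch under the assumption that $H$ is given as a list of vertex pairs (parallel edges allowed, no loops); the function name `core_number` is hypothetical.

```python
from collections import Counter


def core_number(edges):
    """Return the largest k such that the k-core of the multigraph is non-empty.

    `edges` is a list of (u, v) pairs with u != v; repeated pairs are
    treated as parallel edges.
    """
    adj = {}
    for u, v in edges:
        adj.setdefault(u, Counter())[v] += 1
        adj.setdefault(v, Counter())[u] += 1
    best = 0
    while adj:
        # Pick a vertex of minimum degree (counting edge multiplicities).
        v = min(adj, key=lambda x: sum(adj[x].values()))
        best = max(best, sum(adj[v].values()))
        # Remove v and all its edges from the remaining graph.
        for u in adj.pop(v):
            del adj[u][v]
    return best


path = [(1, 2), (2, 3)]                      # a tree
triangle = [(1, 2), (2, 3), (1, 3)]          # a cycle
k4 = [(a, b) for a in range(1, 5) for b in range(a + 1, 5)]
print(core_number(path), core_number(triangle), core_number(k4))
```

Reading the removal order backwards gives a labelling of $H$ in which every vertex has at most $m_H$ edges to vertices with smaller labels.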

Remark 2.3. Recall that $G^m_n$ is a loop-less multigraph. Similarly, $H$ can be a loop-less multigraph in the results below, although for simplicity we write “graph” and “subgraph”.

2.1. Probability distances

To state our results precisely, we also need to define the metrics under consideration. The total variation distance $\mathop{d_{\mathrm{TV}}}$ between two probability measures $\nu _1$ and $\nu _2$ supported on $\mathbb{Z}^+$ is defined as

\begin{equation} \mathop{d_{\mathrm{TV}}}(\nu _1,\nu _2)=\sup _{A\subseteq \mathbb{Z}^+}|\nu _1(A)-\nu _2(A)|, \end{equation}

and the Wasserstein distance $\mathop{d_{\mathrm{W}}}$ between two probability measures $\nu _1,\nu _2$ supported on $\mathbb{R}$ is defined as

\begin{equation} \mathop{d_{\mathrm{W}}}(\nu _1,\nu _2)=\sup _f\left |\int f\,\mathrm{d}\nu _1-\int f\,\mathrm{d}\nu _2\right |, \end{equation}

where the supremum is over all $f\,:\,\mathbb{R}\to \mathbb{R}$ such that $| f(x)-f(y)|\leqslant | x-y|$ for any $x,y\in \mathbb{R}$. Note that if, for example, $Y$ is a random variable with the standard normal distribution $\mathcal{N}(0,1)$, then for any random variable $X$, the usual Kolmogorov distance can be bounded by

\begin{equation} \mathop{d_{\mathrm{K}}}(\mathcal{L}(X),\mathcal{L}(Y))\,:\!=\,\sup _{a\in \mathbb{R}}|\mathbb{P}(X\leqslant a)-\mathbb{P}(Y\leqslant a)|\leqslant \left (\frac{2}{\pi }\right )^{1/4}\sqrt{\mathop{d_{\mathrm{W}}}(\mathcal{L}(X),\mathcal{L}(Y))} \end{equation}

(see e.g. [[Reference Ross17], Proposition 2.1]).
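For distributions with finite integer support, both distances can be evaluated directly. The sketch below relies on two standard identities that are not stated in the text above and are used here as assumptions: the total variation distance equals half the $\ell^1$ distance between the probability mass functions, and on the real line the Wasserstein distance equals the integral of the absolute difference of the distribution functions. The function names and the dictionary representation of a mass function are illustrative choices.

```python
def tv_distance(p, q):
    """Total variation distance between two pmfs given as {value: prob} dicts."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in support)


def wasserstein_distance(p, q):
    """Wasserstein distance between two pmfs on the integers.

    Uses d_W = sum over k of |F_p(k) - F_q(k)|, the discrete form of the
    integral of the absolute difference of the distribution functions.
    """
    lo = min(min(p), min(q))
    hi = max(max(p), max(q))
    cdf_p = cdf_q = 0.0
    total = 0.0
    for k in range(lo, hi):  # both distribution functions equal 1 from hi on
        cdf_p += p.get(k, 0.0)
        cdf_q += q.get(k, 0.0)
        total += abs(cdf_p - cdf_q)
    return total


bern_03 = {0: 0.7, 1: 0.3}
bern_05 = {0: 0.5, 1: 0.5}
print(tv_distance(bern_03, bern_05), wasserstein_distance(bern_03, bern_05))
```

For two point masses at distinct integers the total variation distance is 1 regardless of how far apart they are, while the Wasserstein distance equals the gap between them, which illustrates how differently the two metrics behave.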

2.2. Further notation

We sometimes tacitly assume that $n$ is not too small.

$\overset{\mathrm{a.s.}}{\longrightarrow }$ and $\overset{\mathrm{p}}{\longrightarrow }$ denote convergence almost surely (a.s.) and in probability, respectively.

$\mathrm{Po}(\mu )$ denotes the Poisson distribution with mean $\mu$, and $\mathcal{N}(0,1)$ is the standard normal distribution.

$C$ denotes constants that may vary from one occurrence to the next. They do not depend on $n$, but they may depend on $m$, $H$, and other parameters.

3. Main results

Consider first subgraphs that are cycles. The first two theorems show that when $n$ is large, the count of any cycle with a fixed number of edges is approximately Poisson, and that the joint distribution of the counts of cycles of different numbers of edges can be approximated by independent Poisson variables. For convenience, we refer to a cycle with $\ell$ edges and vertices simply as an $\ell$-cycle. Note also that we view a pair of parallel edges as a 2-cycle.

Theorem 3.1.

Fixing the positive integers $m\ge 2$ and $\ell \geqslant 2$, let $W_n$ be the number of $\ell$-cycles in $G^m_n$, and let $\mu _n\,:\!=\,{\mathbb{E}} W_n$. Then,

and there is a positive constant $C=C(m,\ell )$ such that

Remark 3.2. Let $W_n$ be as above and $Y^{\prime}_n\sim \mathrm{Po}(\mu _n)$ and define

It follows from Theorem 3.1 that

On the other hand, the classical Berry–Esseen theorem implies that

Combining (3.4) and (3.5) with the triangle inequality, and using (3.1), we obtain

In particular, the cycle count $W_n$ is asymptotically normal. In fact, the estimate (3.6) is sharp. Since $\mathcal N(0,1)$ has a continuous distribution function, while the distribution function of $Z_n$ has a jump $\mathbb{P}(W_n=k)$ (if this is non-zero) at $(k-\mu _n)/\sqrt{\mu _n}$, it follows by choosing $k=\lfloor{\mu _n}\rfloor$ and using (3.2) and (3.1) that

Consequently, combining (3.5) and (3.7),

In the following corresponding theorem on multivariate Poisson approximation, the error bound that we obtain is slightly inferior to the one in Theorem 3.1. As in Remark 3.2, this theorem also implies a multivariate normal approximation (we omit the details).

Theorem 3.3.

Fix the positive integers $m,r\ge 2$ and $\ell (i)\in [2,\infty )$, $i\in [r]$, with $\ell (i)\ne \ell (j)$ for $i\ne j$. Let $W^{(i)}_n$ be the number of cycles of length $\ell (i)$ in $G^m_n$, and let $\mu _{n,i}\,:\!=\,{\mathbb{E}} W_n^{(i)}$. Then, there is a positive constant $C=C(m,(\ell (i))_{i\in [r]})$ such that

\begin{equation} \mathop{d_{\mathrm{TV}}}\left (\mathcal{L}(\left \{{W^{(i)}_n}\right \}^r_{i=1}), \prod ^r_{i=1}\mathrm{Po}(\mu _{n,i})\right )\leqslant \frac{C\log \log n}{\log n}. \end{equation}

Example 3.4.

The case $\ell =2$ is simple in Theorems 3.1 and 3.3, since the numbers of pairs of parallel edges with larger endpoint $j$ are independent for different $j$; moreover, we have the exact formula $\mu _n=\binom m2\sum _{j=2}^n\frac{1}{j-1}$. For $\ell =3$, Example 2.1 yields

Next, we state the normal approximation for the count of any unicyclic graph, i.e., a graph that contains exactly one cycle. For completeness, we include cycles in the theorem below. Let $\mathcal{C}_\ell$ be an $\ell$-cycle, let $s\ge 0$, and let, for $i=1,\dots, s$, $\mathcal{T}_i$ be a tree with $t_i$ edges and a distinguished root, so that $\mathcal{T}_i$ has $t_i+1$ vertices. We consider a graph $\Lambda \,:\!=\,\Lambda _{\ell, t_1,\dots, t_s}$ which can be constructed by attaching each $\mathcal{T}_i$ to $\mathcal{C}_\ell$, using a vertex of $\mathcal{C}_\ell$ as the distinguished root of $\mathcal{T}_i$. (This does not specify $\Lambda$ uniquely, but we choose one possibility.) By combining the trees $\mathcal{T}_i$ that are attached at the same vertex in the cycle, we may assume that $s\leqslant \ell$ and that each $\mathcal{T}_i$ is attached to $\mathcal{C}_\ell$ at a distinct vertex. Denote by $\Gamma =\Gamma ^{(n,m)}_{\Lambda }$ the set of all potential copies of $\Lambda$; and saving notation, let

Theorem 3.5.

Fixing the integers $m\ge 2$, $\ell \ge 2$, $s\ge 0$, $t_1,\dots, t_s\ge 1$, and a unicyclic subgraph $\Lambda$ as above, let $W_n$, $\mu _n$ and $\sigma _n$ be as in (3.11), and $Y_n\,:\!=\,(W_n-\mu _n)/\sigma _n$. Let $t=\sum ^s_{i=1}t_i\ge 0$; then

and there is a positive constant $C=C(m,\ell, t)$ such that

Remark 3.6. In view of (2.4) and (3.14), Theorem 3.5 implies an error bound

for the Kolmogorov distance, which in the case of a cycle is clearly not as sharp as the error bound in (3.6).

The next theorem concerns trees, and the normal approximation result relies on the assumption that the variance of the counts of the tree of choice is of the exact order $\Theta (n)$. The precise order of the variance in this case is much harder to establish, essentially due to the fact that the total covariance of the positively correlated pairs of copies and that of the negatively correlated pairs of copies are both of order $O(n)$. However, we are able to prove that the variance of the count of a star is precisely $\Theta (n)$. Below, let $\Lambda$ be a fixed tree on $t$ vertices, let $\Gamma$ be the set of potential copies of $\Lambda$, and define again $W_n,\mu _n$, and $\sigma ^2_n$ by (3.11).

Theorem 3.7.

Fix the positive integers $m,t$ and the tree $\Lambda$ with $t$ vertices. Let $W_n,\mu _n$ and $\sigma _n$ be as above, and $Y_n\,:\!=\,(W_n-\mu _n)/\sigma _n$. Then

If $\sigma _n^2=\Theta (n)$, then there is a constant $C=C(m,t)$ such that

In the trivial cases $t=1$ and $t=2$ (a single vertex and a single edge, respectively), $W_n$ is deterministic and $\sigma ^2_n=0$; thus $Y_n$ is not even defined. We conjecture that these are the only cases where (3.18) does not hold.

Conjecture 3.8.

If $\Lambda$ is a tree with at least 2 edges, then $\sigma ^2_n=\Theta (n)$, and thus the normal approximation (3.18) holds.

We show in Section 9.2 that this holds at least for stars.

Theorem 3.9.

Conjecture 3.8 holds when $\Lambda$ is a star $S_\ell$ with $\ell \ge 2$ edges.

The remaining subgraphs are the multicyclic ones. We consider in particular the ones that have the following properties.

Definition 3.10. We say that a graph is

- multicyclic if it has at least two (not necessarily edge- or vertex-disjoint) cycles;
- leaf-free if it has no node of degree 1.

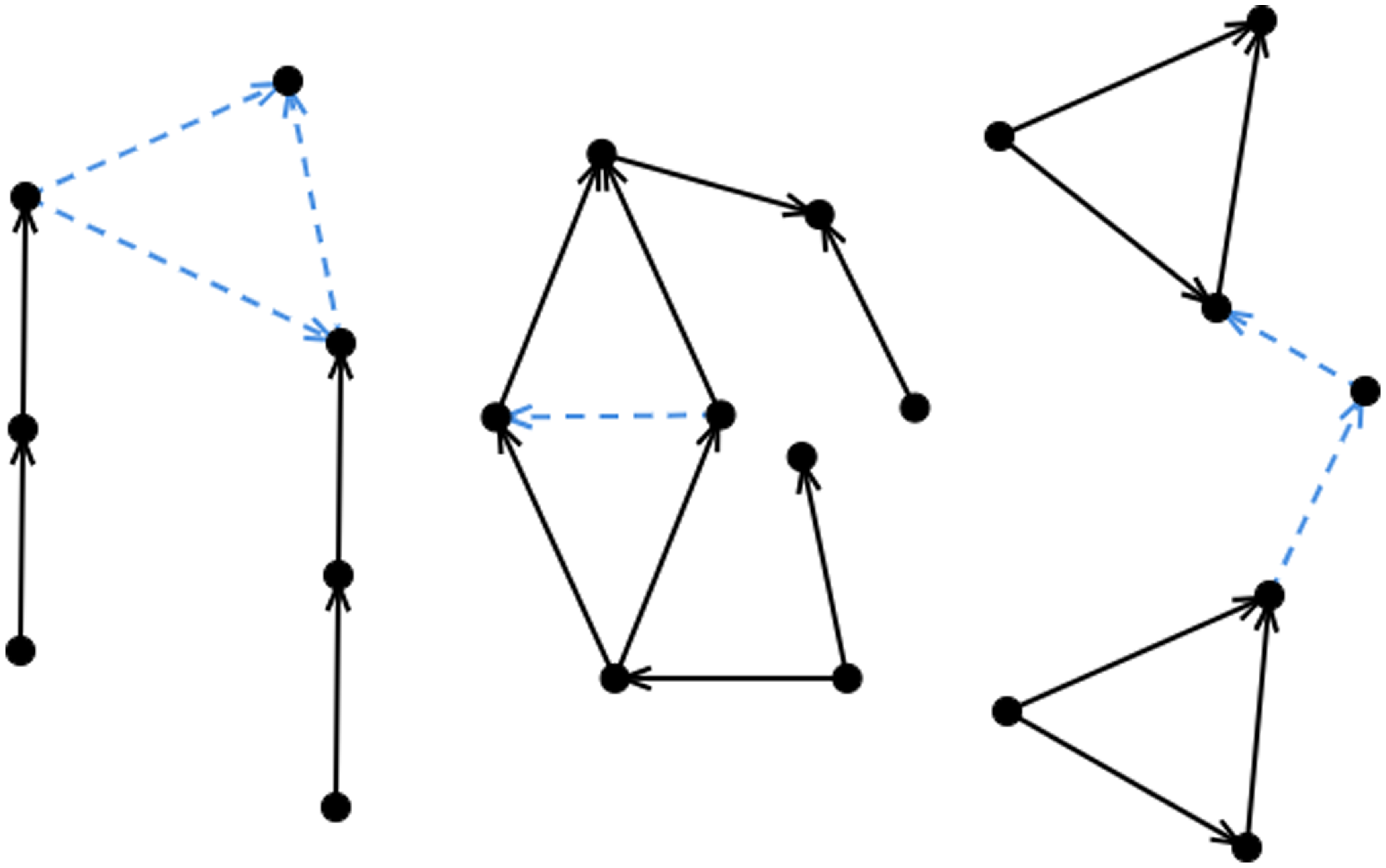

As examples of connected graphs that are both multicyclic and leaf-free, we have two edge-disjoint cycles joined by an edge, or two cycles that share precisely an edge; see Figure 2 for another example.

Theorem 3.11.

For any connected graph $H$ that is both multicyclic and leaf-free, and any $m\geqslant 2$, the expected number of copies of $H$ in $G^m_n$ is bounded as $n\to \infty$.

Remark 3.12. We may define the infinite random graph $G^m_\infty$ with vertex set $\{1,2,\dots \}$ as the union of $G^m_n$ over all $n\ge 1$. Let $H$ be any fixed graph and let as above $W_n$ be the number of copies of $H$ in $G^m_n$; also, let $W_\infty \le \infty$ be the number of copies of $H$ in $G^m_\infty$. Then, as ${n\to \infty }$, $W_n\nearrow W_\infty$. In particular, $W_n\overset{\mathrm{p}}{\longrightarrow } W_\infty$, and since both (3.12)–(3.13) and (3.16)–(3.17) imply $W_n\overset{\mathrm{p}}{\longrightarrow }+\infty$, we see that if $H$ is unicyclic or a tree, then $W_\infty =\infty$ a.s. On the other hand, Theorem 3.11 implies by monotone convergence that if $H$ is multicyclic and leaf-free, then ${\mathbb{E}} W_\infty \lt \infty$ and thus $W_\infty \lt \infty$ a.s.

Note further that since $W_n\overset{\mathrm{a.s.}}{\longrightarrow } W_\infty$ as ${n\to \infty }$, in particular, $W_n$ converges in distribution to $W_\infty$ (without any normalisation). However, we do not expect that this limiting distribution has any nice form such as Poisson, since $W_\infty$ is mainly determined by the random wirings of the first few edges in $G^m_n$.
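The monotonicity $W_n\nearrow W_\infty$ can be checked numerically. The following is a minimal sketch, assuming that copies are counted with edge multiplicity (parallel edges give distinct copies) and taking the triangle as the subgraph; the function names `uniform_attachment_edges` and `count_triangles` are our own and do not come from the paper.

```python
import random
from collections import Counter
from itertools import combinations


def uniform_attachment_edges(n, m, rng):
    """Sample G^m_n as a map (vertex, edge label) -> endpoint in [vertex - 1]."""
    return {
        (v, i): rng.randint(1, v - 1)  # randint is inclusive: uniform on {1, ..., v-1}
        for v in range(2, n + 1)
        for i in range(1, m + 1)
    }


def count_triangles(edges, n):
    """Count triangle copies on vertices 1..n (assumption: counted with multiplicity)."""
    mult = Counter()
    for (v, _label), u in edges.items():
        if v <= n:
            mult[(u, v)] += 1  # u < v by construction
    return sum(
        mult[(a, b)] * mult[(a, c)] * mult[(b, c)]
        for a, b, c in combinations(range(1, n + 1), 3)
    )


if __name__ == "__main__":
    rng = random.Random(2024)
    edges = uniform_attachment_edges(60, 2, rng)
    # Restricting to vertices 1..n recovers G^m_n inside G^m_60,
    # so the printed counts are non-decreasing in n.
    print([count_triangles(edges, n) for n in (10, 20, 40, 60)])
```

Restricting one sampled graph to its first $n$ vertices mirrors the definition of $G^m_\infty$ as a union, which is why the counts along a single sample path can only grow.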

We also consider the expected counts of graphs that are multicyclic but not leaf-free. Note that every such graph $H$ can be constructed in the following way. Start with a graph $H^{\prime}$ that is both multicyclic and leaf-free, and let $\mathcal{T}_i$, $i=1,\ldots, s$, be trees with $t_i$ edges and a distinguished root. The graph $H$ is obtained by attaching every $\mathcal{T}_i$ to $H^{\prime}$, with one of the vertices of $H^{\prime}$ as the distinguished root of $\mathcal{T}_i$. As before, we may assume that no two of the trees $\mathcal{T}_i$ share the same vertex of $H^{\prime}$. The graph $H^{\prime}$ is known as the $2$-core of $H$. See Figure 2 for an example.

Figure 2. Left: a graph that is both multicyclic and leaf-free. Right: a graph that is multicyclic but not leaf-free.

In the following theorem, we include for convenience the case $t=0$, which is just Theorem 3.11. (Then $s=0$ and $H^{\prime}=H$.) Recall the definition of $m_H$ in Remark 2.2, and that $W_n=0$ if $m\lt m_H$.

Theorem 3.13.

Fixing a connected multicyclic graph $H$ and a positive integer $m\geqslant m_H$, let $W_n$ be the number of copies of $H$ in $G^m_n$, and let $t\,:\!=\,\sum _i t_i\ge 0$ be the number of vertices in $H\setminus H^{\prime}$, where $H^{\prime}$ as above is the $2$-core of $H$. Then,

The precise order of ${\mathrm{Var}}(W_n)$ is more difficult to establish for the same reason as for trees. For this family of graphs, we do not expect the count to be approximately Poisson or normal (after renormalisation). Instead, we make the following conjecture, where we, as commented above, do not expect the distribution of the limit to have a nice form.

Conjecture 3.14.

Let $H$ and $H^{\prime}$ be as in Theorem 3.13, and let $W_n$ and $W^{\prime}_n$ be the numbers of copies of $H$ and $H^{\prime}$ in $G^m_n$, respectively. Then there exists a constant $c\gt 0$ such that $(\log n)^{-t}W_n-c W^{\prime}_n \overset{\mathrm{a.s.}}{\longrightarrow } 0$ as ${n\to \infty }$, and thus

We can prove a special case. It appears likely that the general case can be shown by similar arguments, but the details seem complicated and we leave this as an open problem.

Theorem 3.15.

Conjecture 3.14 holds when $t=1$.

We note also that at least for some multicyclic graphs $H^{\prime}$, $W^{\prime}_\infty =0$ with positive probability, and thus $\mathbb{P}(W_\infty =0)\geqslant \mathbb{P}(W^{\prime}_\infty =0)\gt 0$; hence although ${\mathbb{E}} W_n\to \infty$ by (3.19), $\mathbb{P}(W_n=0)\geqslant c\gt 0$, which in particular shows that we cannot have Poisson or normal convergence. We do not know whether this holds for every simple multigraph $H^{\prime}$, and we give just one example.

Example 3.16.

Let $H^{\prime}$ be the complete graph on 4 vertices, $K_4$, and construct $H$ by adding a single edge to $H^{\prime}$; thus we take $s=1$ and $\mathcal{T}_1$ as an edge in the construction above. Let $W_n$ and $W^{\prime}_n$ be as above. By Theorem 3.11 (or a simple calculation), we see that a.s. $W^{\prime}_n\to W^{\prime}_\infty \lt \infty$ as $n\to \infty$. Moreover, we claim that $\mathbb{P}(W^{\prime}_\infty =0)\gt 0$.

To see this, let $H^{\prime\prime}=K_4^-$ be $K_4$ minus one edge, and let $W_n^{\prime\prime}$ be the number of copies of $K_4^-$ in $G^m_n$. Fix a large $N$, and consider only $n\gt N$. Let ${\mathcal{E}}_N^{\prime}$ be the event that there is a triangle in $G^m_N$. Let ${\mathcal{E}}_{N,n}^{\prime\prime}$ be the event that there is a copy of $K_4$ in $G^m_n\cup K^m_N$ with at most 2 vertices in $[N]$. In other words, ${\mathcal{E}}^{\prime\prime}_{N,n}$ means that there is either a copy of $K_4$ in $G^m_n$ (with at most one vertex in $[N]$), or a copy of $K_4^-$ in $G^m_n$ with the two non-adjacent vertices in $[N]$ and the two others in $[n]\setminus [N]$. Note that ${\mathcal{E}}_{N,n}^{\prime\prime}$ does not depend on the edges of $G^m_N$, and thus ${\mathcal{E}}_N^{\prime}$ and ${\mathcal{E}}_{N,n}^{\prime\prime}$ are independent. If there is a copy of $K_4$ in $G^m_n$, then either ${\mathcal{E}}_N^{\prime}$ or ${\mathcal{E}}_{N,n}^{\prime\prime}$ holds, and thus

\begin{equation} \mathbb{P}(W^{\prime}_n=0)\geqslant \mathbb{P}(\text{not }{\mathcal{E}}_N^{\prime}\text{ and not }{\mathcal{E}}_{N,n}^{\prime\prime}) =\mathbb{P}(\text{not }{\mathcal{E}}_N^{\prime})\,\mathbb{P}(\text{not }{\mathcal{E}}_{N,n}^{\prime\prime}). \tag{3.22} \end{equation}

If ${\mathcal{E}}_{N,n}^{\prime\prime}$ holds, then there is a copy of $H^{\prime\prime}=K_4^-$ in $G^m_n$ with at least two vertices in $[n]\setminus [N]$, and thus $W^{\prime\prime}_\infty \geqslant W^{\prime\prime}_n \geqslant W^{\prime\prime}_N+1$. Hence, by Markov’s inequality,

\begin{equation} \mathbb{P}({\mathcal{E}}_{N,n}^{\prime\prime})\leqslant \mathbb{P}(W^{\prime\prime}_\infty -W^{\prime\prime}_N\geqslant 1)\leqslant {\mathbb{E}}(W^{\prime\prime}_\infty -W^{\prime\prime}_N) ={\mathbb{E}} W_\infty ^{\prime\prime}-{\mathbb{E}} W_N^{\prime\prime}. \tag{3.23} \end{equation}

Since ${\mathbb{E}} W_N^{\prime\prime}\to{\mathbb{E}} W_\infty ^{\prime\prime}$ as ${N\to \infty }$, we can choose $N$ such that ${\mathbb{E}} W_\infty ^{\prime\prime}-{\mathbb{E}} W_N^{\prime\prime}\le \frac 12$; then (3.23) implies that $\mathbb{P}(\text{not }{\mathcal{E}}_{N,n}^{\prime\prime})\geqslant \frac 12$ for all $n\gt N$. Moreover, $\mathbb{P}(\text{not }{\mathcal{E}}_N^{\prime})\gt 0$, since there is a positive probability that all edges in $G^m_N$ lead to 1, and then there is no triangle. Consequently, (3.22) yields $\mathbb{P}(W_n^{\prime}=0)\geqslant c$, for some $c\gt 0$ that does not depend on $n\gt N$; thus also $\mathbb{P}(W^{\prime}_\infty =0)\geqslant c\gt 0$.

If there are no copies of $H^{\prime}$, then there can be no copies of $H$, and thus we conclude $\mathbb{P}(W_n=0)\geqslant c\gt 0$ for all $n$. Hence, although ${\mathbb{E}} W_n\to \infty$ by (3.19), $W_n$ does not converge in probability to $+\infty$, and in particular $W_n$ cannot be asymptotically normal.
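The claim $\mathbb{P}(W^{\prime}_n=0)\geqslant c\gt 0$ for $H^{\prime}=K_4$ can be probed by simulation. The sketch below is only illustrative: it uses the fact that $W^{\prime}_n=0$ exactly when the underlying simple graph of $G^m_n$ contains no $K_4$, and the helper names `sample_adjacency`, `has_k4` and `estimate_no_k4` are our own.

```python
import random
from itertools import combinations


def sample_adjacency(n, m, rng):
    """Sample G^m_n and return the adjacency sets of its underlying simple graph."""
    adj = {v: set() for v in range(1, n + 1)}
    for v in range(2, n + 1):
        for _ in range(m):
            u = rng.randint(1, v - 1)  # uniform endpoint among existing vertices
            adj[v].add(u)
            adj[u].add(v)
    return adj


def has_k4(adj):
    """Return True if some four vertices are pairwise adjacent."""
    for a in adj:
        for b in adj[a]:
            if a < b:
                common = adj[a] & adj[b]  # common neighbours of the edge ab
                if any(d in adj[c] for c, d in combinations(common, 2)):
                    return True
    return False


def estimate_no_k4(n, m, trials, seed=0):
    """Monte Carlo estimate of P(no copy of K_4 in G^m_n)."""
    rng = random.Random(seed)
    hits = sum(not has_k4(sample_adjacency(n, m, rng)) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (50, 100, 200):
        print(n, estimate_no_k4(n, 3, trials=200))
```

For $m\leqslant 2$ the estimate is identically 1, since the largest vertex of a $K_4$ needs three out-edges; this matches the convention that $W_n=0$ when $m\lt m_H$.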

3.1. Discussion on possible future avenues

An important direction for future work is to verify whether Conjecture 3.8 holds, or in other words, to prove that the variance of the count of any tree with at least 2 edges is of the precise order $n$. Another direction for future work is to compute the leading coefficients of the means and variances of the subgraph counts. More precise expressions will possibly also enable us to verify whether the approximation results still hold if $m$ and (or) the number of vertices of the subgraph are allowed to increase with $n$. It is also possible to prove a multivariate analogue for the normal approximation results. This can be done using, for instance, [4, Theorem 12.1], which also uses Stein’s method with the size-bias coupling.

3.2. Article outline

In the next section, we state the results from Stein’s method that we apply in the approximation proofs. These results use a coupling that we construct in Section 5. In Section 6, we prove Theorem 3.11 and some additional lemmas that will be useful in the approximation proofs later. We prove the Poisson approximation results (Theorems 3.1 and 3.3) in Section 7 and the normal approximation for unicyclic graphs (Theorem 3.5) in Section 8, where we also prove Theorem 3.13. Section 9 contains the proofs of the normal approximation for trees (Theorems 3.7 and 3.9), and in the last section we give a proof of Theorem 3.15.

4. Preliminary: Stein’s method

The error bounds in the Poisson and normal approximation results that we use are obtained from general results on Stein’s method in terms of a coupling that we now describe. Let $(I_\alpha )_{\alpha \in \Gamma }$ be a collection of 0-1 valued random variables. For each $\alpha \in \Gamma$, let the random variables $(J_{\beta \alpha })_{\beta \in \Gamma }$ be defined on the same space as $(I_\alpha )_{\alpha \in \Gamma }$, satisfying

\begin{equation} \mathcal{L}(J_{\beta \alpha };\,\beta \in \Gamma )=\mathcal{L}(I_\beta ;\,\beta \in \Gamma \mid I_\alpha =1). \tag{4.1} \end{equation}

Note that this is a special case of the size-bias coupling appearing in the literature of Stein’s method; see [4, 17], and also [12]. The theorem below is a direct consequence of [2, Chapter 2, equation (1.2)].

Theorem 4.1.

Let $(I_\alpha )_{\alpha \in \Gamma }$ be as above, where ${\mathbb{E}} I_\alpha =\pi _\alpha$. Suppose that for each $\alpha \in \Gamma$, there is a coupling of $(J_{\beta \alpha })_{\beta \in \Gamma }$ and $(I_\alpha )_{\alpha \in \Gamma }$ such that (4.1) holds. Let $W\,:\!=\,\sum _{\alpha \in \Gamma }I_\alpha$, $\lambda \,:\!=\,\sum _{\alpha \in \Gamma }\pi _\alpha$. Then,

\begin{equation} \mathop{d_{\mathrm{TV}}}(\mathcal{L}(W),\mathrm{Po}(\lambda ))\leqslant \min \left \{{1,\lambda ^{-1}}\right \}\left (\sum _{\alpha \in \Gamma }\pi ^2_\alpha +\sum _{\alpha \in \Gamma } \sum _{\beta \not =\alpha }\pi _\alpha{\mathbb{E}} | J_{\beta \alpha }-I_\beta |\right ). \end{equation}


Now, assume that there is a partition $\Gamma =\bigcup ^r_{j=1}\Gamma _j$. The next theorem quantifies how well the random vector $(\sum _{\alpha \in \Gamma _j}I_\alpha )_{1\leqslant j\leqslant r}$ can be approximated by a vector of independent Poisson variables. In our application, $\Gamma _j$ is to be taken as the set of potential cycles of length $\ell (j)$.

Theorem 4.2 ([2, Theorem 10.K]). Let $W_j=\sum _{\alpha \in \Gamma _j}I_\alpha$, ${\mathbb{E}} I_\alpha =\pi _\alpha$ and $\lambda _j={\mathbb{E}} W_j$. Suppose that for each $\alpha \in \Gamma$, there is a coupling of $(J_{\beta \alpha })_{\beta \in \Gamma }$ and $(I_\alpha )_{\alpha \in \Gamma }$ such that (4.1) holds. Then,

\begin{equation} \mathop{d_{\mathrm{TV}}}\left ({\mathcal{L}(\left \{{W_j}\right \}^r_{j=1}), \prod ^r_{j=1}\mathrm{Po}(\lambda _j)}\right )\leqslant \frac{1+2\log ^+(e\min _j \lambda _j)}{e\min _j\lambda _j}\sum _{\alpha \in \Gamma } \left ({\pi ^2_\alpha + \sum _{\beta \not =\alpha }\pi _\alpha{\mathbb{E}} | J_{\beta \alpha }-I_\beta |}\right ). \end{equation}


We now state a normal approximation result for a collection of 0-1 variables, which follows from [17, Theorem 3.20 and Corollary 3.24].

Theorem 4.3.

Let $(I_\alpha )_{\alpha \in \Gamma }$ be a collection of $0$-$1$ variables and let $(J_{\beta \alpha })_{\beta \in \Gamma }$ be defined on the same space as $(I_\alpha )_{\alpha \in \Gamma }$, satisfying (4.1). Define $W\,:\!=\,\sum _{\alpha \in \Gamma }I_\alpha$, $\mu \,:\!=\,{\mathbb{E}} W$, $\sigma ^2\,:\!=\,{\mathrm{Var}}(W)$ and $W^s\,:\!=\, \sum _{\beta \in \Gamma \setminus \{K\}}J_{\beta K}+1$, where the index $K\in \Gamma$ is chosen randomly with probabilities $\mathbb{P}(K=\alpha )={\mathbb{E}} I_\alpha /\mu$. If $Z=(W-\mu )/\sigma$, then

where $\mathcal{N}(0,1)$ is the standard normal distribution.

5. Construction of the size-bias couplings

As the edges of a uniform attachment graph are independent, we can construct the coupling appearing in Theorems 4.1–4.3 as follows. Fix the subgraph $H$, let $G\,:\!=\,G^m_n$ be the uniform attachment graph and let $\Gamma$ be the set of potential copies of $H$. For every $\alpha \in \Gamma$, we couple two graphs $G$ and $G^\alpha$ by matching their attachment steps, except for the edges of $\alpha$. In $G^\alpha$, the edges of $\alpha$ are wired in a deterministic fashion to obtain the copy $\alpha$ of $H$; whereas in $G$, they are generated independently from the construction of $G^\alpha$. For $\beta \in \Gamma$, let as above ${\mathbf{1}}_\beta$ be the indicator that the subgraph $\beta$ is present in $G$, and let ${\mathbf{1}}^\alpha _\beta$ be the indicator that $\beta$ is present in $G^\alpha$. It follows that for every chosen $\alpha$,

\begin{equation} \mathcal{L}({\mathbf{1}}^\alpha _\beta ;\,\beta \in \Gamma )=\mathcal{L}({\mathbf{1}}_\beta ;\,\beta \in \Gamma \mid {\mathbf{1}}_\alpha =1). \tag{5.1} \end{equation}

Let $\Gamma ^-_\alpha \subset \Gamma$ be the set of copies $\beta$ of $H$ such that at least one edge of $\alpha$ has a different endpoint in $\beta$, and let $\Gamma ^+_\alpha \subset \Gamma \setminus \Gamma ^-_\alpha$ be the set of copies of $H$ that share at least one edge with $\alpha$, excluding $\alpha$ itself. Observe that under the coupling, ${\mathbf{1}}^\alpha _{\beta }\leqslant{\mathbf{1}}_\beta$ for $\beta \in \Gamma ^-_\alpha$ and ${\mathbf{1}}^\alpha _{\beta }\geqslant{\mathbf{1}}_\beta$ for $\beta \in \Gamma ^+_\alpha$, while ${\mathbf{1}}^\alpha _{\beta }={\mathbf{1}}_\beta$ for every $\beta \in \Gamma \setminus (\Gamma ^+_\alpha \cup \Gamma ^-_\alpha \cup \left \{{\alpha }\right \})$. The error bound (4.2) in Theorem 4.1 therefore simplifies to

\begin{align} \text{min}\! \left \{{1,\lambda ^{-1}}\right \}\left (\sum _{\alpha \in \Gamma }\pi _\alpha ^2 + \sum _{\alpha \in \Gamma }\sum _{\beta \in \Gamma ^+_\alpha } \pi _\alpha{\mathbb{E}}[{\mathbf{1}}^\alpha _{\beta }-{\mathbf{1}}_\beta ] + \sum _{\alpha \in \Gamma }\sum _{\beta \in \Gamma ^-_\alpha } \pi _\alpha{\mathbb{E}}[{\mathbf{1}}_\beta -{\mathbf{1}}^\alpha _{\beta }]\right ); \end{align}


noting that $\sum _{\beta \in \Gamma ^+_\alpha }{\mathbb{E}}[{\mathbf{1}}^\alpha _\beta -{\mathbf{1}}_\beta ]$ and $\sum _{\beta \in \Gamma ^-_\alpha }{\mathbb{E}}[{\mathbf{1}}_\beta -{\mathbf{1}}^\alpha _{\beta }]$ are, respectively, the expected gain and loss in the copies of $H$ after forcing $\alpha$ to be present in the graph $G$. We may simplify this further by omitting the negative terms in (5.2) and noting that, by (5.1),

\begin{equation} \pi _\alpha{\mathbb{E}}{\mathbf{1}}^\alpha _\beta =\pi _\alpha{\mathbb{E}}[{\mathbf{1}}_\beta \mid{\mathbf{1}}_\alpha =1] ={\mathbb{E}}\left [{\mathbf{1}}_\alpha{\mathbf{1}}_\beta \right ], \tag{5.3} \end{equation}

which yields the simpler (but somewhat larger) error bound

\begin{align} \text{min}\! \left \{{1,\lambda ^{-1}}\right \}\left (\sum _{\alpha \in \Gamma }\pi _\alpha ^2 + \sum _{\alpha \in \Gamma }\sum _{\beta \in \Gamma ^+_\alpha }{\mathbb{E}}\left [{\mathbf{1}}_\alpha{\mathbf{1}}_\beta \right ] + \sum _{\alpha \in \Gamma }\sum _{\beta \in \Gamma ^-_\alpha } \pi _\alpha \pi _\beta \right ). \end{align}


As for Theorem 4.2, the simplified error bound is the same as (5.2) or (5.4), but with the factor $\min \{1,\lambda ^{-1}\}$ replaced with

\begin{equation*} \frac{1+2\log ^+(e\min _j \lambda _j)}{e\min _j\lambda _j}. \end{equation*}

When applying Theorem 4.3, we first sample a copy $K\in \Gamma$ of subgraph $H$ with probabilities $\mathbb{P}(K=\alpha )$ proportional to ${\mathbb{E}}{\mathbf{1}}_\alpha$, and construct the graphs $G^K$ and $G$ as above. The subgraph count $W$ and its size-bias version $W^s$ can then be found in $G$ and $G^K$, respectively.

6. Proof of Theorem 3.11 and some useful lemmas

Here we prove some lemmas that are useful for proving the main results later. We also prove Theorem 3.11 here, as some special cases of the result will be applied in the other proofs. To study the expected number of copies of a graph $H$, we use the following definition. Let $h$ be the number of vertices in $H$.

Definition 6.1 (Vertex marks and mark sequence). Let $\alpha$ be a potential copy of $H$ and suppose that its vertices are $k_1\lt \dots \lt k_h$. We say that the mark of a vertex $k_i\in \alpha$ is the out-degree of $k_i$ in $\alpha$ regarded as a directed graph (as always, with edges directed towards the smaller endpoint). The mark sequence is $(b_i)_{1\leqslant i\leqslant h}$, with $b_i$ being the mark of vertex $k_i$; for convenience, we occasionally refer to $b_i=k$ as a $k$-mark.

In other words, for $\alpha$ to actually be a copy of $H$ in $G^m_n$, for each $i\in [h]$ there are exactly $b_i$ edges in $G^m_n$ from $k_i$ whose endpoints must be the ones determined by $\alpha$. Note that the mark sequence does not entirely encode the configuration of the copy of $H$ in $G^m_n$, but, together with the sequence of vertices $k_i$, the mark sequence gives the probability that the copy $\alpha$ is present in $G^m_n$. In fact, since the edges in $G^m_n$ choose their endpoints independently,

\begin{align} \pi _\alpha \,:\!=\, \mathbb{P}({\mathbf{1}}_\alpha =1) = \prod _{i=1}^h \frac{1}{(k_i-1)^{b_i}} = \prod _{i=2}^h \frac{1}{(k_i-1)^{b_i}}, \end{align}


where the final equality holds because always $b_1=0$, since edges are directed towards lower numbers, and thus $\alpha$ has no out-edge from $k_1$. Similarly, $b_h$ equals the degree of $k_h$ in $\alpha$, since $k_h$ has no in-edge.
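The mark sequence and the product formula for $\pi _\alpha$ are easy to compute. The following is a small sketch under our own encoding: a potential copy is given as a list of directed edges `(v, u)` with `u < v`, and the function names `mark_sequence` and `copy_probability` are hypothetical.

```python
from collections import Counter
from fractions import Fraction


def mark_sequence(alpha_edges):
    """Return (k_1 < ... < k_h, b_1, ..., b_h) for edges (v, u) directed from v to u < v."""
    vertices = sorted({x for edge in alpha_edges for x in edge})
    out_degree = Counter(v for v, _u in alpha_edges)
    return vertices, [out_degree[k] for k in vertices]


def copy_probability(alpha_edges):
    """pi_alpha: product over vertices of (k_i - 1)^(-b_i), as an exact fraction."""
    vertices, marks = mark_sequence(alpha_edges)
    prob = Fraction(1)
    for k, b in zip(vertices, marks):
        if b:  # vertices with mark 0 (in particular k_1) contribute a factor 1
            prob /= (k - 1) ** b
    return prob


if __name__ == "__main__":
    triangle = [(5, 2), (9, 2), (9, 5)]  # potential triangle on vertices 2 < 5 < 9
    print(mark_sequence(triangle))       # marks (0, 1, 2)
    print(copy_probability(triangle))    # 1 / (4 * 8**2)
```

Exact rational arithmetic is used so that the product can be compared directly with the closed form, here $1/\bigl ((5-1)(9-1)^2\bigr )=1/256$.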

Note that for a given unlabelled graph $H$, there is a finite number (at most $h!$) of non-isomorphic labelled versions of $H$. Hence, in order to obtain estimates of the expected number of copies, it suffices to consider each possible labelled version separately, and then consider only potential copies with vertex sequences $(k_i)_1^h$ where $k_1\lt \dots \lt k_h$ and $k_i$ corresponds to vertex $i\in H$. The mark sequence of the potential copy depends only on the labelled version of $H$. Different labelled versions of $H$ may yield different mark sequences $(b_i)_1^h$, but they all have the same length $h=v(H)$, the number of vertices in $H$, and the same sum

\begin{align} \sum _{i=1}^h b_i=e(H), \end{align}

the number of edges in $H$.
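To make the dependence on the labelling concrete, the following Python sketch computes the mark sequence of a labelled version of $H$ and enumerates the distinct mark sequences over all relabellings. It assumes that $H$ is given as a loop-free edge list on vertices $1,\dots,h$; the helper names `mark_sequence` and `mark_sequences` are hypothetical and chosen only for this illustration.

```python
from itertools import permutations


def mark_sequence(h, edges):
    """Mark sequence of a labelled graph on vertices 1..h.

    b_i counts the edges whose larger endpoint is i, i.e. the edges
    that point from i towards a lower-numbered vertex.
    """
    b = [0] * h
    for u, v in edges:
        b[max(u, v) - 1] += 1
    return b


def mark_sequences(h, edges):
    """Distinct mark sequences over all h! relabellings (small h only)."""
    seqs = set()
    for perm in permutations(range(1, h + 1)):
        relabelled = [(perm[u - 1], perm[v - 1]) for u, v in edges]
        seqs.add(tuple(mark_sequence(h, relabelled)))
    return seqs


# A 4-cycle with one chord: h = 4 vertices and e(H) = 5 edges.
diamond = [(1, 2), (2, 3), (3, 4), (4, 1), (1, 3)]
print(mark_sequence(4, diamond))
for seq in sorted(mark_sequences(4, diamond)):
    print(seq, sum(seq))
```

In this example every mark sequence has length $4$, starts with $b_1=0$, and sums to $e(H)=5$, in line with the remarks above.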

For the proof of Theorem 3.11, we also use the following definition.

Definition 6.2 ($F$-number). We define the $F$-number $F(a_i)$ of a finite sequence $(a_i)$ of natural numbers as $\sum _{a_i\gt 1}a_i+\sum _{a_i=0}({-}2)$; that is, we ignore all $a_i$ with $a_i=1$, count each $a_i$ with $a_i=0$ with weight $-2$, and count every other $a_i$ with $a_i$ as its weight.
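The definition of the $F$-number translates directly into code. The following Python sketch is only an illustration of Definition 6.2; the function name `f_number` is an assumption made for this example.

```python
def f_number(a):
    """F-number of a finite sequence of natural numbers (Definition 6.2).

    Entries equal to 1 are ignored, each entry equal to 0 contributes -2,
    and every entry greater than 1 contributes its own value.
    """
    total = 0
    for x in a:
        if x < 0:
            raise ValueError("entries must be natural numbers")
        if x == 0:
            total -= 2
        elif x > 1:
            total += x
    return total


# For (0, 1, 2, 2): the 0 contributes -2, the 1 is ignored, each 2 counts 2.
print(f_number([0, 1, 2, 2]))
```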

Proof of Theorem 3.11. Consider a connected graph $H$ that is multicyclic and leaf-free, see Definition 3.10. Let $(b_i)_{1\leqslant i\leqslant h}$ be the mark sequence of a potential copy of $H$. By (6.1), the expected number of copies of $H$ with this mark sequence can be bounded by a constant times

\begin{equation} S(b_1\cdots b_h)\,:\!=\, \sum _{1\leqslant k_1\lt \dots \lt k_h\lt \infty }\prod ^h_{i=2}\frac{1}{(k_i-1)^{b_i}}. \end{equation}

Hence it suffices to show that this infinite sum is finite for every mark sequence $(b_i)_1^h$.

We will show this by modifying the mark sequence

$(b_i)$