1. Introduction

For decades, understanding and controlling fluid flows have proven to be a considerable challenge due to their inherent complexity and potential impact, attracting attention from technological and academic research (Brunton & Noack Reference Brunton and Noack2015). In engineering, applications span several domains, from drag reduction in transport, to lift increase or acoustic noise reduction for aircraft, or mixing enhancement in chemical processes. Earlier approaches mainly used passive control (i.e. without exogenous input), while active control of flows is today an ongoing and fruitful research topic (Schmid & Sipp Reference Schmid and Sipp2016; Garnier et al. Reference Garnier, Viquerat, Rabault, Larcher, Kuhnle and Hachem2021). Active flow control can be itself separated into two categories: open-loop control and closed-loop control. While open-loop control proves to be effective (e.g. with harmonic signals in Bergmann, Cordier & Brancher (Reference Bergmann, Cordier and Brancher2005)), it usually requires large and sustained control inputs to drive the system to a more beneficial regime. On the other hand, closed-loop control aims at modifying the intrinsic dynamics of the system, therefore usually requiring less energy, at the cost of an increased complexity in the design and implementation phases.

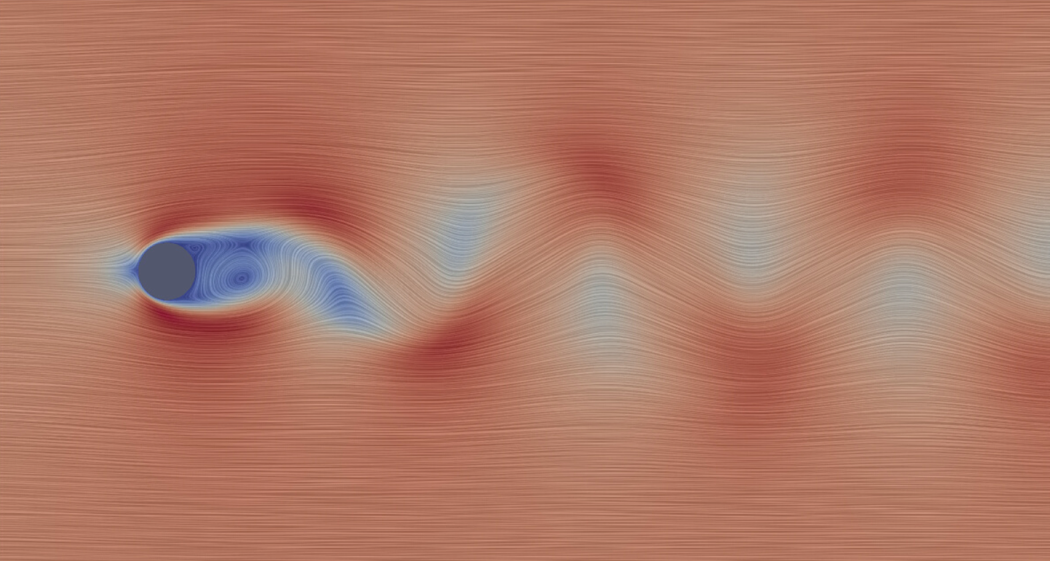

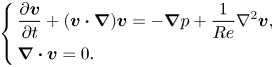

In this article, we are interested in the feedback control of laminar oscillator flows (Schmid & Sipp Reference Schmid and Sipp2016). They form a particular class of flows that are linearly unstable, dominated by a nonlinear regime of self-sustained oscillations and mostly insensitive to upstream perturbations. The most well-known example of oscillator flow is probably the flow past a cylinder in two dimensions (Barkley Reference Barkley2006), displaying a wake of alternating vortices known as the von Kármán vortex street (see figure 1). This category of flows generally exhibits two equilibria or more: an unstable fixed point (referred to as the base flow) and an unsteady attractor. In this application, the objective is to drive the flow from an initial state lying on the attractor, to the base flow stabilized in closed loop. It is notable that open-loop control strategies cannot stabilize the equilibrium, while closed-loop strategies can be designed as such.

Figure 1. Streamlines of a snapshot of the incompressible flow past a two-dimensional cylinder at Reynolds number ![]() $Re=100$. Coloured by velocity magnitude.

$Re=100$. Coloured by velocity magnitude.

From a control perspective, closed-loop strategies developed in the literature may be categorized based on the flow regime they aim to address. The first category of approaches focuses on preventing the growth of linear instabilities from a neighbourhood of the stationary equilibrium, while the second category of approaches tackles the reduction of oscillations in the fully developed nonlinear regime of self-sustained oscillations. For the control law design, the main difficulty is due to the Navier–Stokes equations that are both nonlinear and infinite-dimensional. Often, the choice of the regime of interest naturally induces a structure for the control policy (e.g. linear or nonlinear, static or dynamic, etc.) and/or a controller design method. In the following, we propose to sort control approaches depending on the flow regime addressed, and we showcase some of the methods used to circumvent the difficulty posed by the high dimensionality and nonlinearity of the controlled system.

1.1. Control in a neighbourhood of the equilibrium

The historical approaches to oscillator flow control aim at efficiently counteracting the linear instabilities, whose development from equilibrium is eventually responsible for self-sustained oscillations. To do so, one may linearize the equations about the unstable equilibrium and work in the linear regime, in a neighbourhood of the equilibrium. For applying linear synthesis techniques to the inherently high-dimensional system, a common workaround is the use of a linear reduced-order model (ROM) that captures the most essential features of the flow around a set point. Linear ROMs may be established with a wide range of techniques, such as Galerkin projection of the governing equations (Barbagallo, Sipp & Schmid Reference Barbagallo, Sipp and Schmid2009, Reference Barbagallo, Sipp and Schmid2011; Weller, Camarri & Iollo Reference Weller, Camarri and Iollo2009), system identification from time data (Illingworth, Morgans & Rowley Reference Illingworth, Morgans and Rowley2011; Flinois & Morgans Reference Flinois and Morgans2016) or frequency data (Jin, Illingworth & Sandberg Reference Jin, Illingworth and Sandberg2020), ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$ balanced truncation (Benner, Heiland & Werner Reference Benner, Heiland and Werner2022) or low-order conceptual physical modelling (Illingworth, Morgans & Rowley Reference Illingworth, Morgans and Rowley2012). Using linear ROMs, linear control techniques of various complexity may be applied: the control techniques range from proportional control (Weller et al. Reference Weller, Camarri and Iollo2009) and linear quadratic Gaussian (LQG) (Barbagallo et al. Reference Barbagallo, Sipp and Schmid2009, Reference Barbagallo, Sipp and Schmid2011; Illingworth et al. Reference Illingworth, Morgans and Rowley2011, Reference Illingworth, Morgans and Rowley2012) to

${{\mathcal {H}\scriptscriptstyle \infty }}$ balanced truncation (Benner, Heiland & Werner Reference Benner, Heiland and Werner2022) or low-order conceptual physical modelling (Illingworth, Morgans & Rowley Reference Illingworth, Morgans and Rowley2012). Using linear ROMs, linear control techniques of various complexity may be applied: the control techniques range from proportional control (Weller et al. Reference Weller, Camarri and Iollo2009) and linear quadratic Gaussian (LQG) (Barbagallo et al. Reference Barbagallo, Sipp and Schmid2009, Reference Barbagallo, Sipp and Schmid2011; Illingworth et al. Reference Illingworth, Morgans and Rowley2011, Reference Illingworth, Morgans and Rowley2012) to ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$ synthesis or loop-shaping (Flinois & Morgans Reference Flinois and Morgans2016; Jin et al. Reference Jin, Illingworth and Sandberg2020; Benner et al. Reference Benner, Heiland and Werner2022). In an attempt to address some shortcomings of the linearized approaches, a linear parameter varying approach was proposed in Heiland & Werner (Reference Heiland and Werner2023) for both low-order modelling and control; it shows an expanded basin of attraction of control strategies around the equilibrium for a weakly supercritical flow.

${{\mathcal {H}\scriptscriptstyle \infty }}$ synthesis or loop-shaping (Flinois & Morgans Reference Flinois and Morgans2016; Jin et al. Reference Jin, Illingworth and Sandberg2020; Benner et al. Reference Benner, Heiland and Werner2022). In an attempt to address some shortcomings of the linearized approaches, a linear parameter varying approach was proposed in Heiland & Werner (Reference Heiland and Werner2023) for both low-order modelling and control; it shows an expanded basin of attraction of control strategies around the equilibrium for a weakly supercritical flow.

These approaches are mainly restricted by the region of validity of the low-dimensional linearized model of the flow, and the assumed knowledge of the equations. In particular, these approaches are only satisfactory in the vicinity of the equilibrium (or for weakly supercritical flows), but rapidly fail for strong nonlinearity when linearization about the equilibrium becomes irrelevant (Schmid & Sipp Reference Schmid and Sipp2016).

1.2. Control of the fully developed regime

The second category of approaches tackles the reduction of oscillations in the fully developed nonlinear regime of self-sustained oscillations. To address this regime, both data-based and model-based approaches may be suitable.

1.2.1. Nonlinear reduced-order modelling and control

In order to address the shortcomings of approaches using linear models, approaches were developed to handle the nonlinearity in different ways, especially with low-dimension nonlinear approximations of the flow. Linear ROMs may be extended with nonlinear terms in Galerkin projection, in order to reproduce the oscillating behaviour of the flow (e.g. Rowley & Juttijudata Reference Rowley and Juttijudata2005; King et al. Reference King, Seibold, Lehmann, Noack, Morzyński and Tadmor2005; Lasagna et al. Reference Lasagna, Huang, Tutty and Chernyshenko2016). They may be consequently used for the design of various linear and nonlinear control methods such as linear parameter varying pole placement (Aleksić-Roeßner et al. Reference Aleksić-Roeßner, King, Lehmann, Tadmor and Morzyński2014), model predictive control (MPC) (Aleksić-Roeßner et al. Reference Aleksić-Roeßner, King, Lehmann, Tadmor and Morzyński2014), backstepping (King et al. Reference King, Seibold, Lehmann, Noack, Morzyński and Tadmor2005), sliding mode control (Aleksić et al. Reference Aleksić, Luchtenburg, King, Noack and Pfeifer2010), the sum-of-squares formulation (Lasagna et al. Reference Lasagna, Huang, Tutty and Chernyshenko2016; Huang et al. Reference Huang, Jin, Lasagna, Chernyshenko and Tutty2017)) or more physically based solutions (Gerhard et al. Reference Gerhard, Pastoor, King, Noack, Dillmann, Morzynski and Tadmor2003; Rowley & Juttijudata Reference Rowley and Juttijudata2005). These studies show that nonlinear ROMs may be used for the design of control methods and provide satisfactory performance in a high-dimensional nonlinear system. They, however, might require strong model assumptions for building a nonlinear ROM (e.g. mode computation, full-field information, etc.), which may hinder their applicability in experiments with localized measurements, noise or model mismatch.

1.2.2. Approaches using the Koopman operator

More recent approaches use input–output data to directly build a surrogate nonlinear model of the flow, and use it for control with the MPC framework, leveraging the prediction power of cheap surrogate models. Rooted in Koopman operator theory, Korda & Mezić (Reference Korda and Mezić2018a) and Arbabi, Korda & Mezić (Reference Arbabi, Korda and Mezić2018) developed a framework for computing a linear representation of a dynamical system, in a user-chosen lifted coordinate space. Using a moderate-dimension flow, they show the possibility of replacing full-state measurement by sparse measurements with delay embedding. A similar approach is used in Morton et al. (Reference Morton, Jameson, Kochenderfer and Witherden2018) with full-state measurement but the space of observables is learned with an encoder neural network, which is illustrated for a weakly supercritical cylinder wake flow. With the same idea, using Koopman operator theory, Peitz & Klus (Reference Peitz and Klus2020) summarize two findings introduced in two previous papers: in Peitz & Klus (Reference Peitz and Klus2019), the flow under any actuation signal is modelled using autonomous systems with constant input (derived from data), and the control problem is turned into a switching problem; in Peitz, Otto & Rowley (Reference Peitz, Otto and Rowley2020), a bilinear model is interpolated from said autonomous, constant-input flow models and the control problem is solved on the bilinear model. In a related work, Otto, Peitz & Rowley (Reference Otto, Peitz and Rowley2022) directly built a bilinear model using delayed sparse observables and applied said model to the fluidic pinball, even providing decent performance at off-design Reynolds number. In Page & Kerswell (Reference Page and Kerswell2019), it is shown that a single Koopman expansion might not be able to reproduce the behaviour of the flow around two distinct invariant solutions (namely the stationary equilibrium and the attractor), which may underline the necessity of building several such models for efficient model-based control. Not explicitly linked to the Koopman operator, Bieker et al. (Reference Bieker, Peitz, Brunton, Kutz and Dellnitz2019) modelled the actuated flow as a black box, in a latent space with a recurrent neural network that may be updated online, and demonstrated the possibility of efficiently addressing complex flows such as the chaotic fluidic pinball, by only using a small number of localized measurements, provided large amounts of training data are available.

1.2.3. Interacting with high-dimensional nonlinear systems

On the other side of the spectrum, some techniques try to address the control of high-dimensional nonlinear systems with direct interaction with the system itself. Some of these approaches use tools and controller structures from linear control theory, such as with proportional integral derivative control (Park, Ladd & Hendricks Reference Park, Ladd and Hendricks1994; Son & Choi Reference Son and Choi2018; Yun & Lee Reference Yun and Lee2022), or structured ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$ control (Jussiau et al. Reference Jussiau, Leclercq, Demourant and Apkarian2022), in order to design controllers by direct interaction with full-size nonlinearity, either heuristically (Park et al. Reference Park, Ladd and Hendricks1994; Yun & Lee Reference Yun and Lee2022) or with the help of optimization (Son & Choi Reference Son and Choi2018; Jussiau et al. Reference Jussiau, Leclercq, Demourant and Apkarian2022). Furthermore, a significant body of literature uses nonlinear model-free control laws, using information conveyed by the full-order nonlinear system. Examples of such approaches are found in Cornejo Maceda et al. (Reference Cornejo Maceda, Li, Lusseyran, Morzyński and Noack2021) and Castellanos et al. (Reference Castellanos, Cornejo Maceda, De La Fuente, Noack, Ianiro and Discetti2022), where nonlinear control laws consisting of nested operations (

${{\mathcal {H}\scriptscriptstyle \infty }}$ control (Jussiau et al. Reference Jussiau, Leclercq, Demourant and Apkarian2022), in order to design controllers by direct interaction with full-size nonlinearity, either heuristically (Park et al. Reference Park, Ladd and Hendricks1994; Yun & Lee Reference Yun and Lee2022) or with the help of optimization (Son & Choi Reference Son and Choi2018; Jussiau et al. Reference Jussiau, Leclercq, Demourant and Apkarian2022). Furthermore, a significant body of literature uses nonlinear model-free control laws, using information conveyed by the full-order nonlinear system. Examples of such approaches are found in Cornejo Maceda et al. (Reference Cornejo Maceda, Li, Lusseyran, Morzyński and Noack2021) and Castellanos et al. (Reference Castellanos, Cornejo Maceda, De La Fuente, Noack, Ianiro and Discetti2022), where nonlinear control laws consisting of nested operations (![]() $+,\times,\cos$, etc.) are built with optimization, for applications to the fluidic pinball. Naturally, the reinforcement learning framework has also proved its efficiency at discovering control policies (see Garnier et al. (Reference Garnier, Viquerat, Rabault, Larcher, Kuhnle and Hachem2021) and Viquerat et al. (Reference Viquerat, Meliga, Larcher and Hachem2022) for reviews of approaches, or Paris, Beneddine & Dandois (Reference Paris, Beneddine and Dandois2021), Rabault et al. (Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019) and Ghraieb et al. (Reference Ghraieb, Viquerat, Larcher, Meliga and Hachem2021) for illustrations). A recent study in reinforcement learning (Xia et al. Reference Xia, Zhang, Kerrigan and Rigas2023) demonstrates the effectiveness of including delayed measurements and past control inputs within a nonlinear control policy in discrete time. This approach essentially transforms the control law from static to dynamic, utilizing the concept of dynamic output feedback from control theory (Syrmos et al. Reference Syrmos, Abdallah, Dorato and Grigoriadis1997).

$+,\times,\cos$, etc.) are built with optimization, for applications to the fluidic pinball. Naturally, the reinforcement learning framework has also proved its efficiency at discovering control policies (see Garnier et al. (Reference Garnier, Viquerat, Rabault, Larcher, Kuhnle and Hachem2021) and Viquerat et al. (Reference Viquerat, Meliga, Larcher and Hachem2022) for reviews of approaches, or Paris, Beneddine & Dandois (Reference Paris, Beneddine and Dandois2021), Rabault et al. (Reference Rabault, Kuchta, Jensen, Réglade and Cerardi2019) and Ghraieb et al. (Reference Ghraieb, Viquerat, Larcher, Meliga and Hachem2021) for illustrations). A recent study in reinforcement learning (Xia et al. Reference Xia, Zhang, Kerrigan and Rigas2023) demonstrates the effectiveness of including delayed measurements and past control inputs within a nonlinear control policy in discrete time. This approach essentially transforms the control law from static to dynamic, utilizing the concept of dynamic output feedback from control theory (Syrmos et al. Reference Syrmos, Abdallah, Dorato and Grigoriadis1997).

While the use of data makes these approaches more easily applicable in experiments, they still require large amounts of data and their training can be made more challenging by various external factors, such as convective time delays stemming from convective phenomena or partial observability (i.e. localized sensing, often requiring numerous sensors to reconstruct worthwhile information).

1.3. Proposed approach

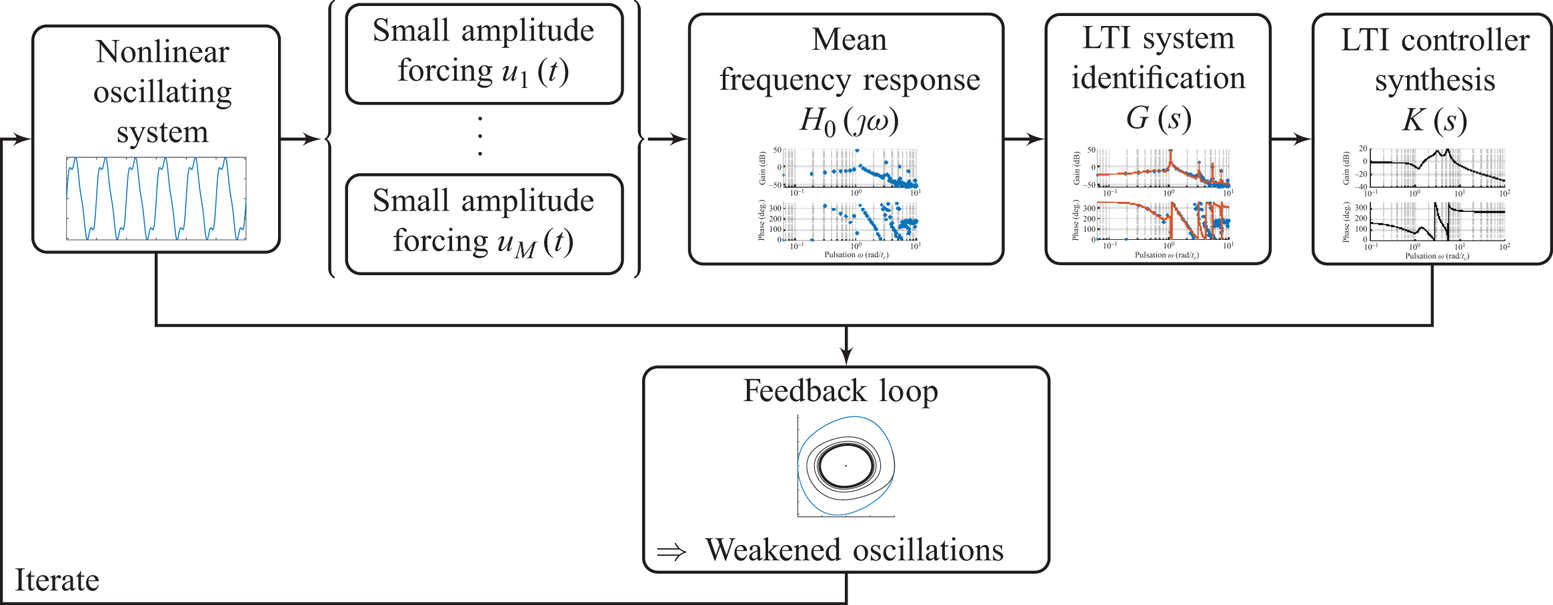

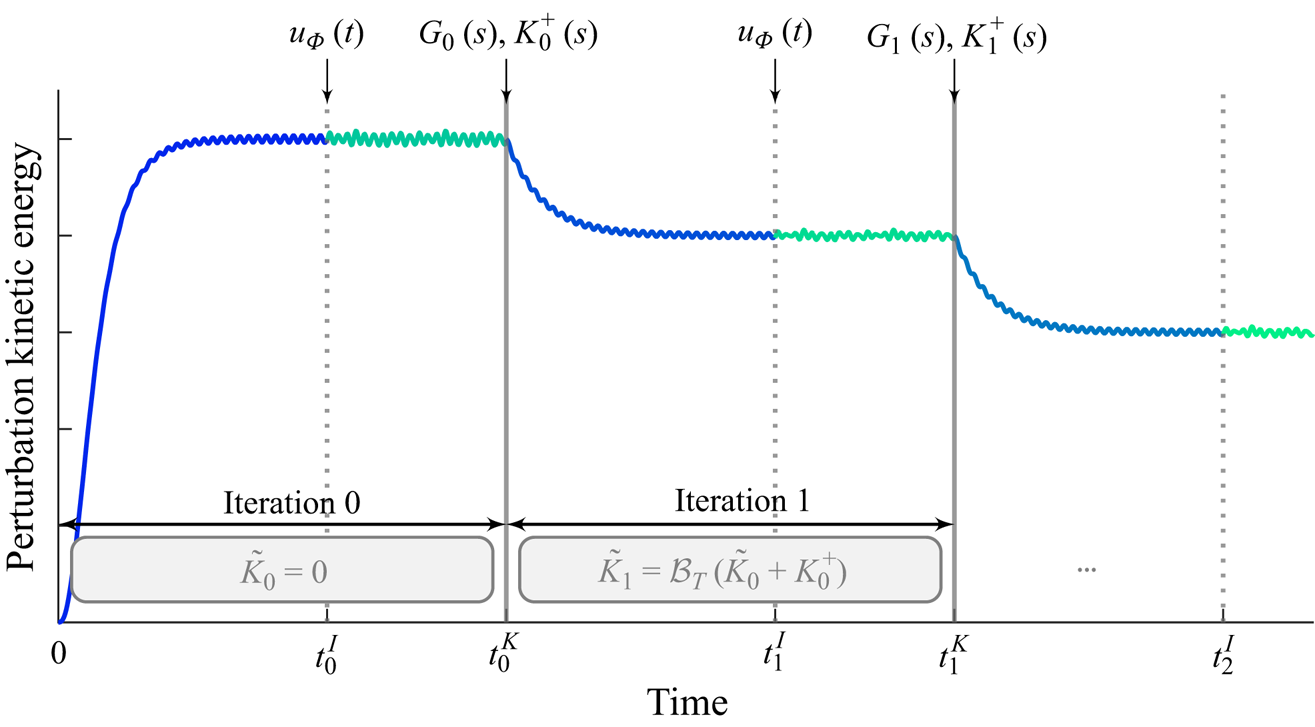

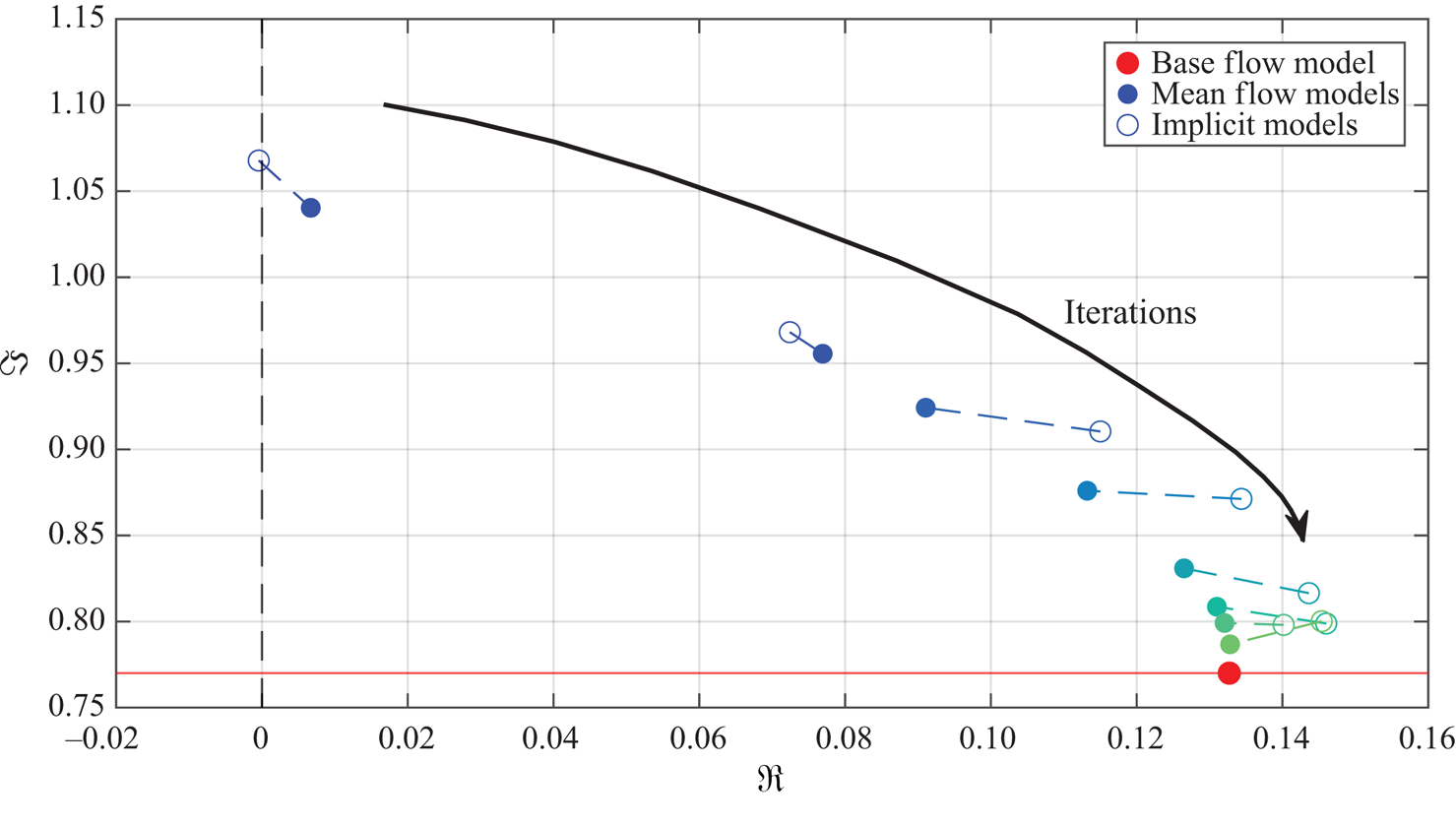

In this paper, we aim at driving the system from its natural limit cycle to its equilibrium, stabilized in closed loop, by handling the nonlinearity iteratively, with the same idea as Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019) that completely suppresses oscillations on top of a cavity, by solving the nonlinear control problem using a sequence of low-order linear approximations. Contrary to Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019), where the model is obtained from linearizing the Navier–Stokes equations about the mean flow, our proposed method is fully data-based in the sense that no knowledge about the governing equations is necessary. In addition, it aims at being easy to design and implement, and does not require multiple sensors, extensive training or tricky parameter tuning. It aims at handling the nonlinearity iteratively, and tackles the large dimension of the system with system identification solely from input–output data. Using the mean resolvent framework from Leclercq & Sipp (Reference Leclercq and Sipp2023), we can establish a linear time-invariant (LTI) model of the oscillating flow, uniquely from input–output data, which is used to design a dynamic output feedback LTI controller. While the constructed controller successfully reduces oscillations in the flow, it cannot stabilize the flow completely due to the local validity (in phase space) of the LTI model. Consequently, the flow reaches a new dynamical equilibrium characterized by a lower perturbation kinetic energy. The procedure is then iterated from this new dynamical equilibrium, until the flow is fully stabilized – the procedure is illustrated in figure 2.

Figure 2. Graphical summary of the method: data-based stabilization of an oscillating flow with LTI controllers, using the mean resolvent framework (Leclercq & Sipp Reference Leclercq and Sipp2023).

The paper is structured as follows. In § 2, we present and justify the method and its associated tools in detail. In § 3, we demonstrate the applicability of the method to the canonical two-dimensional flow past a cylinder at ![]() $Re=100$, essentially reaching equilibrium with data-driven LTI controllers, and analyse the solution found. We discuss several points of the method in § 4. Finally, the main results are recalled and perspectives are drawn in § 5.

$Re=100$, essentially reaching equilibrium with data-driven LTI controllers, and analyse the solution found. We discuss several points of the method in § 4. Finally, the main results are recalled and perspectives are drawn in § 5.

2. Method and tools

2.1. Objective and overview of the method

We consider the flow past a cylinder in two dimensions, at Reynolds number ![]() $Re=100$ (presented in more detail in § 2.3). This flow is an oscillator flow with an unstable equilibrium, referred to as the base flow, and a periodic attractor, which is the regime naturally observed: the von Kármán vortex street (figure 1). Our objective is to drive the system from its attractor back to its equilibrium stabilized in closed loop, using a single local sensor and a single actuator, in a fully data driven way (i.e. without the need for knowledge of the equations, the base flow or sensor/actuator models).

$Re=100$ (presented in more detail in § 2.3). This flow is an oscillator flow with an unstable equilibrium, referred to as the base flow, and a periodic attractor, which is the regime naturally observed: the von Kármán vortex street (figure 1). Our objective is to drive the system from its attractor back to its equilibrium stabilized in closed loop, using a single local sensor and a single actuator, in a fully data driven way (i.e. without the need for knowledge of the equations, the base flow or sensor/actuator models).

The procedure is based on the same idea as that described in Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019). In that previous study, the oscillating flow is modelled, from an input–output viewpoint, as an LTI system enabling LTI controller design. However, as the controller is unable to completely stabilize the flow, the feedback system converges to a new attractor with lower perturbation energy, and the procedure is reiterated until the base flow is reached. More specifically, in Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019), the authors used a linearized model around the mean flow (i.e. equations linearized around the temporal average ![]() $\bar {{\boldsymbol {q}}} = \langle {\boldsymbol {q}}(t) \rangle _t$ of a statistically steady flow), which is shown to represent features of the flow important for control (Liu et al. Reference Liu, Sun, Cattafesta, Ukeiley and Taira2018). Although the mean flow may be estimated in experiments, it remains quite impractical for applications, and the linearization performed requires significant assumptions (e.g. models for both the sensor and actuator, neglecting three-dimensional effects, working with expensive three-dimensional equations, etc.). Also, they used multi-objective structured

$\bar {{\boldsymbol {q}}} = \langle {\boldsymbol {q}}(t) \rangle _t$ of a statistically steady flow), which is shown to represent features of the flow important for control (Liu et al. Reference Liu, Sun, Cattafesta, Ukeiley and Taira2018). Although the mean flow may be estimated in experiments, it remains quite impractical for applications, and the linearization performed requires significant assumptions (e.g. models for both the sensor and actuator, neglecting three-dimensional effects, working with expensive three-dimensional equations, etc.). Also, they used multi-objective structured ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$ synthesis (Apkarian & Noll Reference Apkarian and Noll2006; Apkarian, Gahinet & Buhr Reference Apkarian, Gahinet and Buhr2014) for the design of low-order controllers. While this technique is very powerful and well suited to this problem (as it can enforce, for example, controller structure, roll-off or location of poles in the closed loop), it is not easy to automate and often requires the control engineer perspective to be used at its maximum potential. Finally, as the controllers are being stacked onto each other during the iterations, the controller effectively operating in closed loop has its order increasing linearly with the number of iterations. In an experiment where the procedure would likely never really converge to a steady equilibrium (due, at least, to residual incoming turbulence), this ever-increasing order could be a problem for runtime and numerical conditioning.

${{\mathcal {H}\scriptscriptstyle \infty }}$ synthesis (Apkarian & Noll Reference Apkarian and Noll2006; Apkarian, Gahinet & Buhr Reference Apkarian, Gahinet and Buhr2014) for the design of low-order controllers. While this technique is very powerful and well suited to this problem (as it can enforce, for example, controller structure, roll-off or location of poles in the closed loop), it is not easy to automate and often requires the control engineer perspective to be used at its maximum potential. Finally, as the controllers are being stacked onto each other during the iterations, the controller effectively operating in closed loop has its order increasing linearly with the number of iterations. In an experiment where the procedure would likely never really converge to a steady equilibrium (due, at least, to residual incoming turbulence), this ever-increasing order could be a problem for runtime and numerical conditioning.

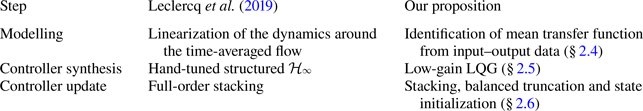

In this study, we tackle these three shortcomings preventing the use of the method in an automated data-based manner, summarized in table 1. First, the modelling part is done solely with input–output data, using the mean resolvent framework introduced in Leclercq & Sipp (Reference Leclercq and Sipp2023), identified with multisine excitations (Schoukens, Guillaume & Pintelon Reference Schoukens, Guillaume and Pintelon1991). Not only is the mean transfer function easier to derive and implement, but it is also better founded than the resolvent around the mean flow (Leclercq & Sipp Reference Leclercq and Sipp2023). Second, the controller is designed with LQG synthesis, which is easy to automate. While this method is arguably less powerful and flexible than structured ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$ synthesis, it permits easy automation while maintaining some desirable properties for the controller. Third, the increasing order of stacked controllers is managed with online controller reduction with balanced truncation (denoted

${{\mathcal {H}\scriptscriptstyle \infty }}$ synthesis, it permits easy automation while maintaining some desirable properties for the controller. Third, the increasing order of stacked controllers is managed with online controller reduction with balanced truncation (denoted ![]() $\mathcal {B}_T$; see Moore Reference Moore1981; Zhou, Salomon & Wu Reference Zhou, Salomon and Wu1999) and transient regimes are handled with a two-step initialization of the new controller. Overall, these advantages aim at making the method applicable in a real-life set-up. The overview of the method is as follows, with

$\mathcal {B}_T$; see Moore Reference Moore1981; Zhou, Salomon & Wu Reference Zhou, Salomon and Wu1999) and transient regimes are handled with a two-step initialization of the new controller. Overall, these advantages aim at making the method applicable in a real-life set-up. The overview of the method is as follows, with ![]() $i$ the iteration index:

$i$ the iteration index:

$\mathcal {S}.1$ Simulate flow with feedback controller

$\mathcal {S}.1$ Simulate flow with feedback controller  $\tilde K_i(s)$. After transient regime, reach new statistically steady regime (dynamical equilibrium). (§ 2.3)

$\tilde K_i(s)$. After transient regime, reach new statistically steady regime (dynamical equilibrium). (§ 2.3) $\hookrightarrow$ At iteration 0, controller is null:

$\hookrightarrow$ At iteration 0, controller is null:  $\tilde K_0 (s)=0$.

$\tilde K_0 (s)=0$. $\mathcal {S}.2$ Compute LTI ROM of the oscillating closed loop:

$\mathcal {S}.2$ Compute LTI ROM of the oscillating closed loop:  $G_i(s)$. (§ 2.4)

$G_i(s)$. (§ 2.4) $\hookrightarrow$ With input–output data.

$\hookrightarrow$ With input–output data. $\mathcal {S}.3$ Synthesize new controller

$\mathcal {S}.3$ Synthesize new controller  $K^+_i(s)$ for the identified ROM. (§ 2.5)

$K^+_i(s)$ for the identified ROM. (§ 2.5) $\hookrightarrow$ Automated synthesis.

$\hookrightarrow$ Automated synthesis. $\mathcal {S}.4$ Stack controllers and reduce the order with the balanced truncation

$\mathcal {S}.4$ Stack controllers and reduce the order with the balanced truncation  $\mathcal {B}_T$: define

$\mathcal {B}_T$: define  $\tilde K_{i+1}(s) = \mathcal {B}_T(\tilde K_{i}(s) + K_i^+(s))$ to use in closed loop. (§ 2.6)

$\tilde K_{i+1}(s) = \mathcal {B}_T(\tilde K_{i}(s) + K_i^+(s))$ to use in closed loop. (§ 2.6) $\hookrightarrow$ Reduce controller order and control input transient.

$\hookrightarrow$ Reduce controller order and control input transient. $\mathcal {S}.5$ Back to

$\mathcal {S}.5$ Back to  $\mathcal {S}.5$ and iterate until condition is met.

$\mathcal {S}.5$ and iterate until condition is met. $\hookrightarrow$ For example, low norm of sensing signal.

$\hookrightarrow$ For example, low norm of sensing signal.

Table 1. Converting the method of Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019) into a data-based and automated method.

2.2. Notations

2.2.1. Notations for the iterative procedure

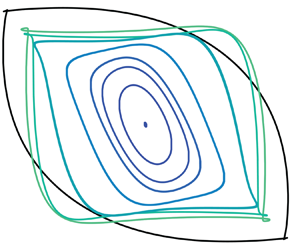

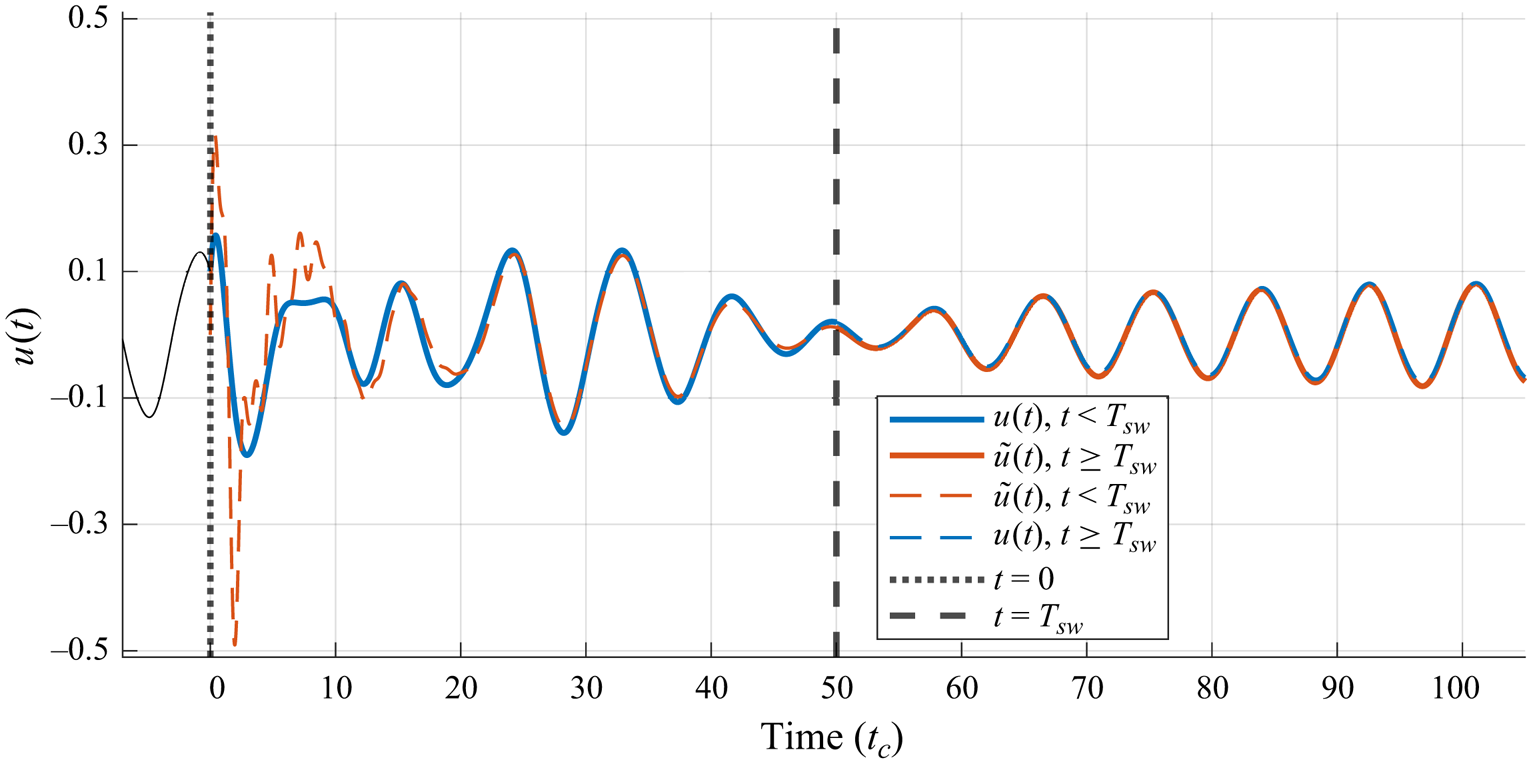

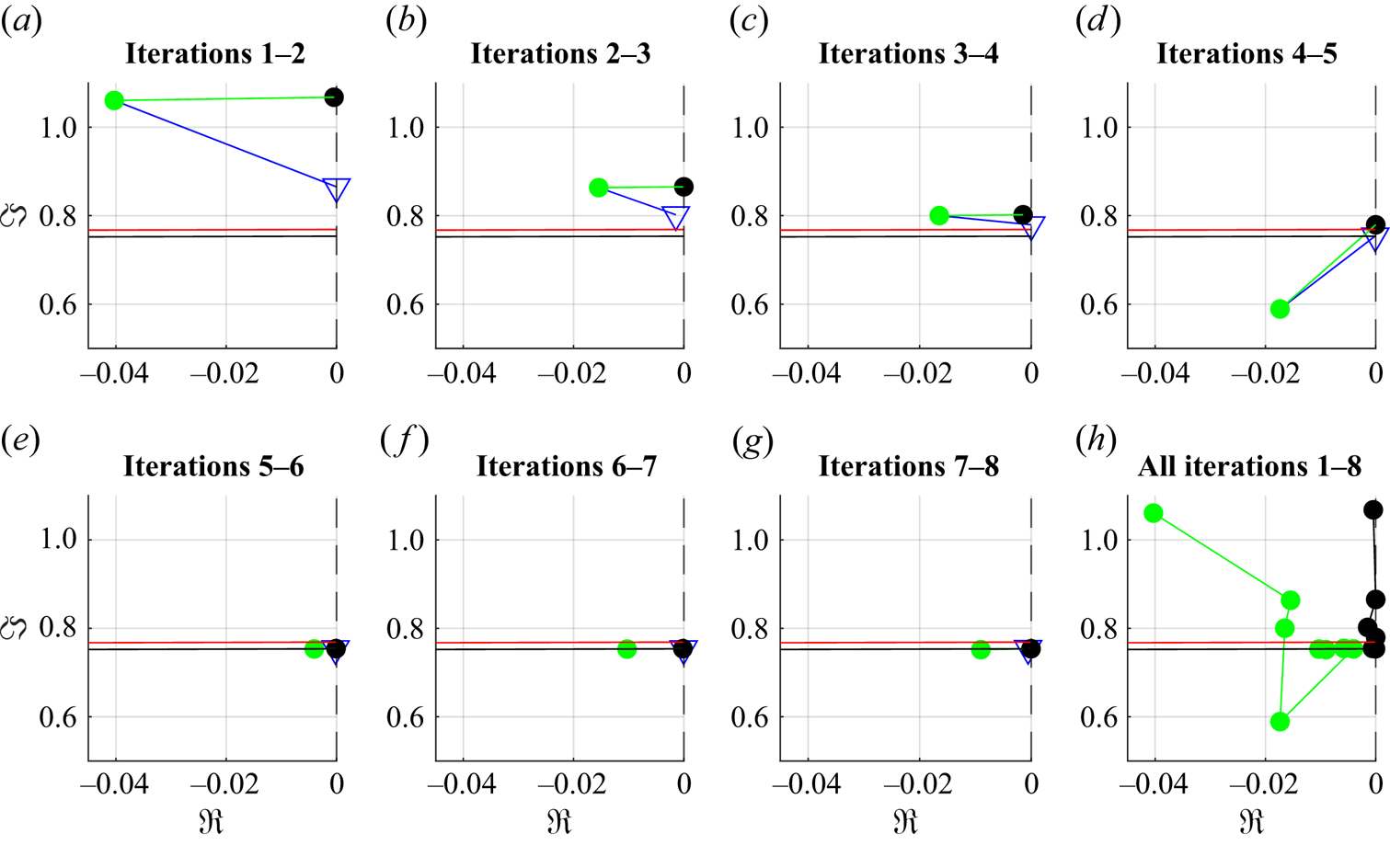

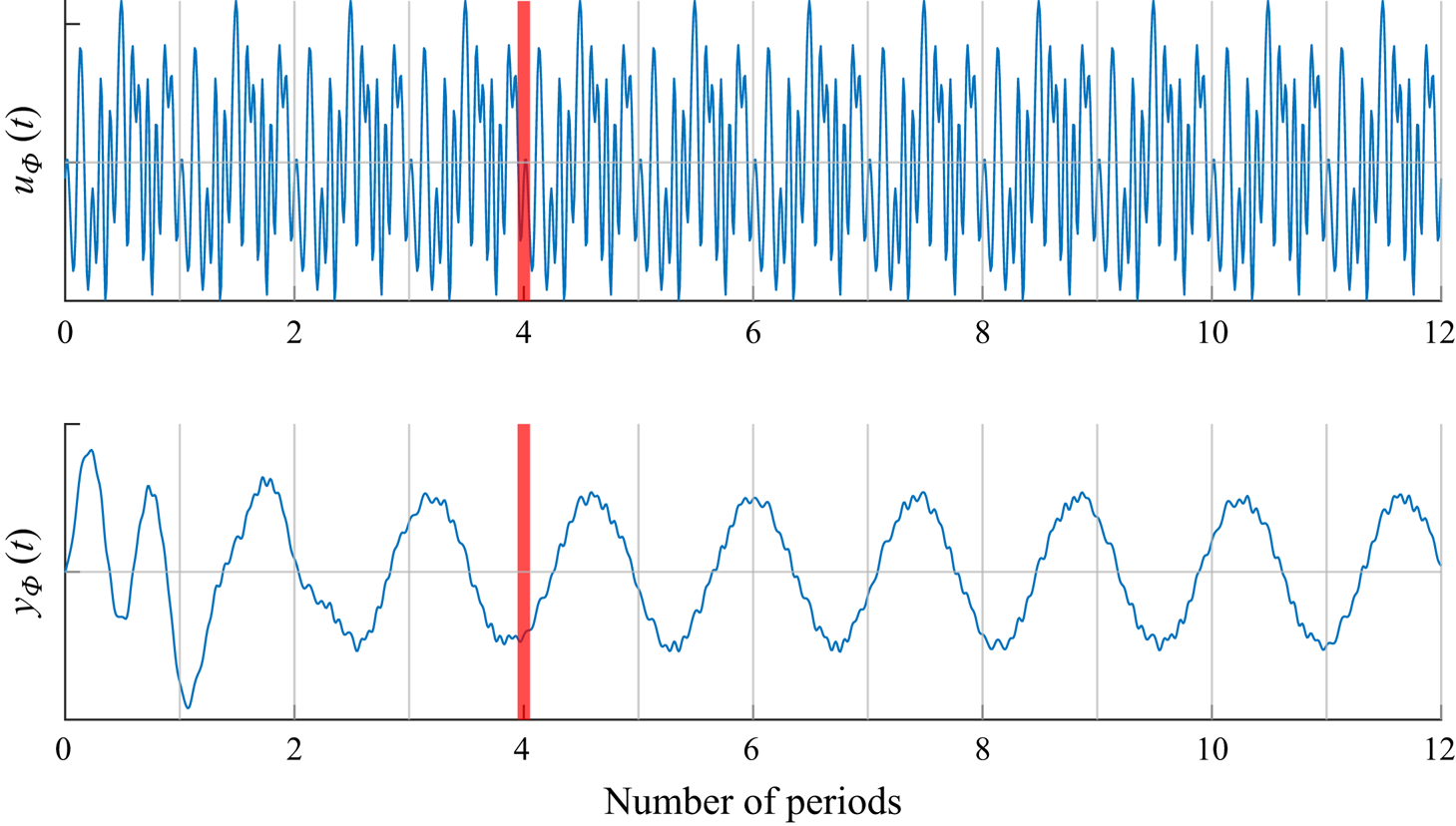

One repetition of the identification-control process is referred to as an iteration, and they are repeated until the equilibrium is reached. The following paragraph is described graphically in figure 3. At the start of the process, numbered iteration ![]() $i=0$, the flow is simulated from its perturbed unstable equilibrium, with the feedback controller

$i=0$, the flow is simulated from its perturbed unstable equilibrium, with the feedback controller ![]() $\tilde {K}_{0}=0$. Therefore, the flow evolves towards its natural limit cycle (

$\tilde {K}_{0}=0$. Therefore, the flow evolves towards its natural limit cycle (![]() $\mathcal {S}.5$). When the limit cycle is attained, at time

$\mathcal {S}.5$). When the limit cycle is attained, at time ![]() $t_0^I$, an exogenous signal

$t_0^I$, an exogenous signal ![]() $u_{ {\varPhi }}(t)$ is injected for the identification of a model

$u_{ {\varPhi }}(t)$ is injected for the identification of a model ![]() $G_0(s)$ of the oscillating flow with data from

$G_0(s)$ of the oscillating flow with data from ![]() $t \in [t_0^I, t_0^K]$ (

$t \in [t_0^I, t_0^K]$ (![]() $\mathcal {S}.2$). Then, a controller

$\mathcal {S}.2$). Then, a controller ![]() $K_0^+(s)$ is synthesized (

$K_0^+(s)$ is synthesized (![]() $\mathcal {S}.3$) to control

$\mathcal {S}.3$) to control ![]() $G_0(s)$. At time

$G_0(s)$. At time ![]() $t < t_0^K$, only the controller

$t < t_0^K$, only the controller ![]() $K_0=0$ is in the loop. At time

$K_0=0$ is in the loop. At time ![]() $t \geq t_0^K$, the new full-order controller is

$t \geq t_0^K$, the new full-order controller is ![]() $K_1 = \tilde {K}_0+K_0^+$. After a short duration

$K_1 = \tilde {K}_0+K_0^+$. After a short duration ![]() $T_{sw}$ (explained in § 2.6.3),

$T_{sw}$ (explained in § 2.6.3), ![]() $K_1$ is reduced with balanced truncation

$K_1$ is reduced with balanced truncation ![]() $\mathcal {B}_T$ and its low-order counterpart

$\mathcal {B}_T$ and its low-order counterpart ![]() $\tilde {K}_1=\mathcal {B}_T(K_0+K_0^+)$ is used in its place (

$\tilde {K}_1=\mathcal {B}_T(K_0+K_0^+)$ is used in its place (![]() $\mathcal {S}.4$). The flow reaches a new dynamical equilibrium with lower perturbation kinetic energy. Iteration

$\mathcal {S}.4$). The flow reaches a new dynamical equilibrium with lower perturbation kinetic energy. Iteration ![]() $i=1$ starts at

$i=1$ starts at ![]() $t=t_0^K$, and the notations are alike for the rest of the procedure.

$t=t_0^K$, and the notations are alike for the rest of the procedure.

Figure 3. At each iteration ![]() $i$, a time simulation is performed in closed loop with the controller

$i$, a time simulation is performed in closed loop with the controller ![]() $\tilde {K}_i(s)$; then, an exogenous signal

$\tilde {K}_i(s)$; then, an exogenous signal ![]() $u_{ {\varPhi }}(t)$ is injected for the identification of an LTI model

$u_{ {\varPhi }}(t)$ is injected for the identification of an LTI model ![]() $G_i(s)$, for which an LTI controller

$G_i(s)$, for which an LTI controller ![]() $K_i^+(s)$ is synthesized. This corresponds to the start of iteration

$K_i^+(s)$ is synthesized. This corresponds to the start of iteration ![]() $i+1$, where the controller in the loop is

$i+1$, where the controller in the loop is ![]() $\tilde {K}_{i+1} = \mathcal {B}_T(\tilde {K}_i + K_i^+)$ that should drive the flow to a new dynamical equilibrium with lower perturbation kinetic energy. The process is then repeated.

$\tilde {K}_{i+1} = \mathcal {B}_T(\tilde {K}_i + K_i^+)$ that should drive the flow to a new dynamical equilibrium with lower perturbation kinetic energy. The process is then repeated.

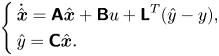

2.2.2. Control theory notations

The order of an LTI plant ![]() $G$ is denoted

$G$ is denoted ![]() $\partial ^\circ {G} \in \mathbb {N}$. The state-space representation of a transfer

$\partial ^\circ {G} \in \mathbb {N}$. The state-space representation of a transfer ![]() $G(s)$ with associated matrices

$G(s)$ with associated matrices ![]() $({{\boldsymbol{\mathsf{A}}}}, {{\boldsymbol{\mathsf{B}}}}, {{\boldsymbol{\mathsf{C}}}}, {{\boldsymbol{\mathsf{D}}}})$ is denoted as

$({{\boldsymbol{\mathsf{A}}}}, {{\boldsymbol{\mathsf{B}}}}, {{\boldsymbol{\mathsf{C}}}}, {{\boldsymbol{\mathsf{D}}}})$ is denoted as

\begin{equation} G= \left[ \begin{array}{c|c} \boldsymbol{\mathsf{A}} & \boldsymbol{\mathsf{B}} \\ \hline \boldsymbol{\mathsf{C}} & \boldsymbol{\mathsf{D}} \end{array}\right] , \end{equation}

\begin{equation} G= \left[ \begin{array}{c|c} \boldsymbol{\mathsf{A}} & \boldsymbol{\mathsf{B}} \\ \hline \boldsymbol{\mathsf{C}} & \boldsymbol{\mathsf{D}} \end{array}\right] , \end{equation}

such that the state, input and output ![]() $\boldsymbol {x}, \boldsymbol {u}, \boldsymbol {y}$ associated with this state-space realization of

$\boldsymbol {x}, \boldsymbol {u}, \boldsymbol {y}$ associated with this state-space realization of ![]() $G$ are as follows:

$G$ are as follows:

\begin{equation} \left\{ \begin{aligned} & \dot{\boldsymbol{x}} = {{\boldsymbol{\mathsf{A}}}}\boldsymbol{x} + {{\boldsymbol{\mathsf{B}}}}\boldsymbol{u}, \\ & \boldsymbol{y} = {{\boldsymbol{\mathsf{C}}}}\boldsymbol{x} + {{\boldsymbol{\mathsf{D}}}}\boldsymbol{u}. \end{aligned} \right. \end{equation}

\begin{equation} \left\{ \begin{aligned} & \dot{\boldsymbol{x}} = {{\boldsymbol{\mathsf{A}}}}\boldsymbol{x} + {{\boldsymbol{\mathsf{B}}}}\boldsymbol{u}, \\ & \boldsymbol{y} = {{\boldsymbol{\mathsf{C}}}}\boldsymbol{x} + {{\boldsymbol{\mathsf{D}}}}\boldsymbol{u}. \end{aligned} \right. \end{equation}

Note that most of the plants used in this study are single-input, single-output (SISO), i.e. with scalar ![]() $u, y$. Also, for two plants

$u, y$. Also, for two plants ![]() $G_1, G_2$ with common input

$G_1, G_2$ with common input ![]() $u$ and respective outputs

$u$ and respective outputs ![]() $y_1, y_2$, the plant sum

$y_1, y_2$, the plant sum ![]() $\varSigma =G_1+G_2$ is defined as the plant with input

$\varSigma =G_1+G_2$ is defined as the plant with input ![]() $u$ and output

$u$ and output ![]() $y_\varSigma =y_1+y_2$. Its state-space representation is derived easily.

$y_\varSigma =y_1+y_2$. Its state-space representation is derived easily.

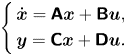

2.3. Use case: flow past a cylinder at low Reynolds number

2.3.1. Configuration

In this paper, the use case is an incompressible bidimensional flow past a cylinder, used in numerous past studies with slightly different set-ups and various control methods (e.g. Illingworth Reference Illingworth2016; Paris et al. Reference Paris, Beneddine and Dandois2021). Here, the configuration is taken from Jussiau et al. (Reference Jussiau, Leclercq, Demourant and Apkarian2022) and some details are recalled below. A cylinder of diameter ![]() $D$ is placed at the origin of a rectangular domain

$D$ is placed at the origin of a rectangular domain ![]() $\varOmega$, equipped with the Cartesian coordinate system

$\varOmega$, equipped with the Cartesian coordinate system ![]() $(x_1, x_2)$. All quantities are rendered non-dimensional with respect to the cylinder diameter

$(x_1, x_2)$. All quantities are rendered non-dimensional with respect to the cylinder diameter ![]() $D$ and the uniform upstream velocity magnitude

$D$ and the uniform upstream velocity magnitude ![]() ${v_1}_\infty$. The convective time unit is defined as

${v_1}_\infty$. The convective time unit is defined as ![]() $t_c=D/{v_1}_\infty$ and the Reynolds number is defined as

$t_c=D/{v_1}_\infty$ and the Reynolds number is defined as ![]() $Re={v_1}_\infty D / \nu$, balancing convective and viscous terms. The domain extends as

$Re={v_1}_\infty D / \nu$, balancing convective and viscous terms. The domain extends as ![]() $-15 \leq {x_1} \leq 20, |x_2| \leq 10$. The geometry is depicted in figure 4.

$-15 \leq {x_1} \leq 20, |x_2| \leq 10$. The geometry is depicted in figure 4.

Figure 4. Domain geometry for the flow past a cylinder. Dimensions are in black, while boundary conditions are in light grey. Drawing is not to scale.

The flow is described by its velocity ![]() ${\boldsymbol {v}} = [v_1, v_2]^T$ and pressure

${\boldsymbol {v}} = [v_1, v_2]^T$ and pressure ![]() $p$ in the domain

$p$ in the domain ![]() $\varOmega$, and satisfies the incompressible Navier–Stokes equations:

$\varOmega$, and satisfies the incompressible Navier–Stokes equations:

\begin{equation} \left\{ \begin{aligned} & \frac{\partial {\boldsymbol{v}}}{\partial t} + ({\boldsymbol{v}} \boldsymbol{\cdot} \boldsymbol{\nabla}){\boldsymbol{v}} ={-}\boldsymbol{\nabla} p + \frac{1}{Re}\nabla^2 {\boldsymbol{v}}, \\ & \boldsymbol{\nabla} \boldsymbol{\cdot} {\boldsymbol{v}} = 0. \end{aligned} \right. \end{equation}

\begin{equation} \left\{ \begin{aligned} & \frac{\partial {\boldsymbol{v}}}{\partial t} + ({\boldsymbol{v}} \boldsymbol{\cdot} \boldsymbol{\nabla}){\boldsymbol{v}} ={-}\boldsymbol{\nabla} p + \frac{1}{Re}\nabla^2 {\boldsymbol{v}}, \\ & \boldsymbol{\nabla} \boldsymbol{\cdot} {\boldsymbol{v}} = 0. \end{aligned} \right. \end{equation}

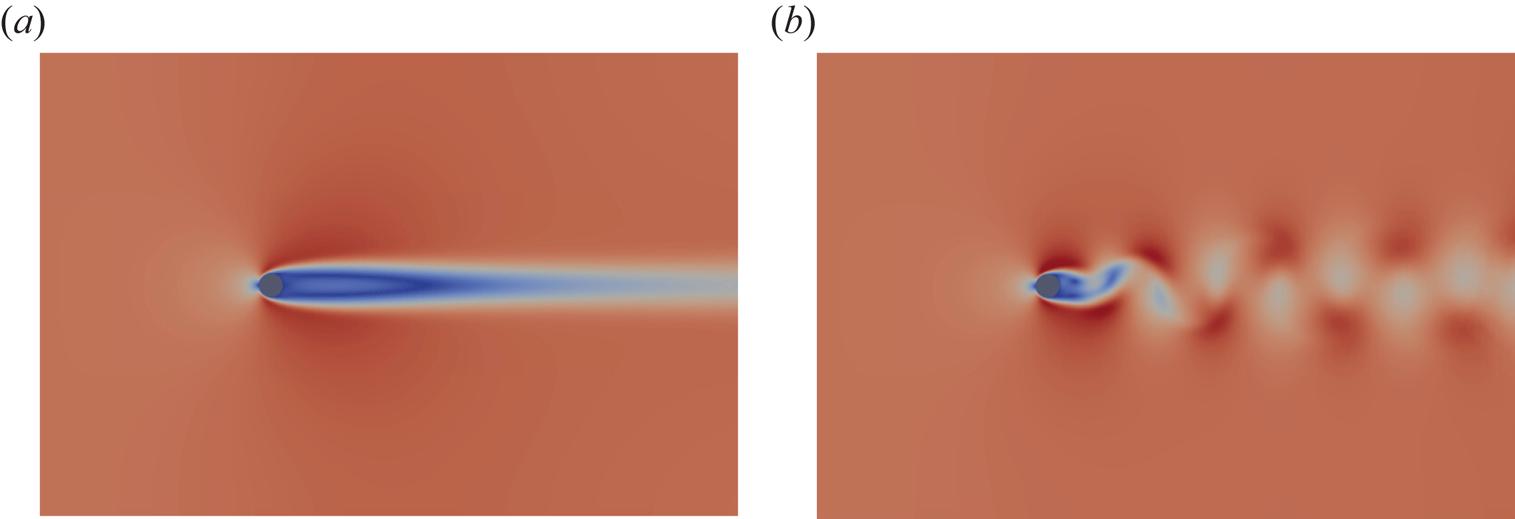

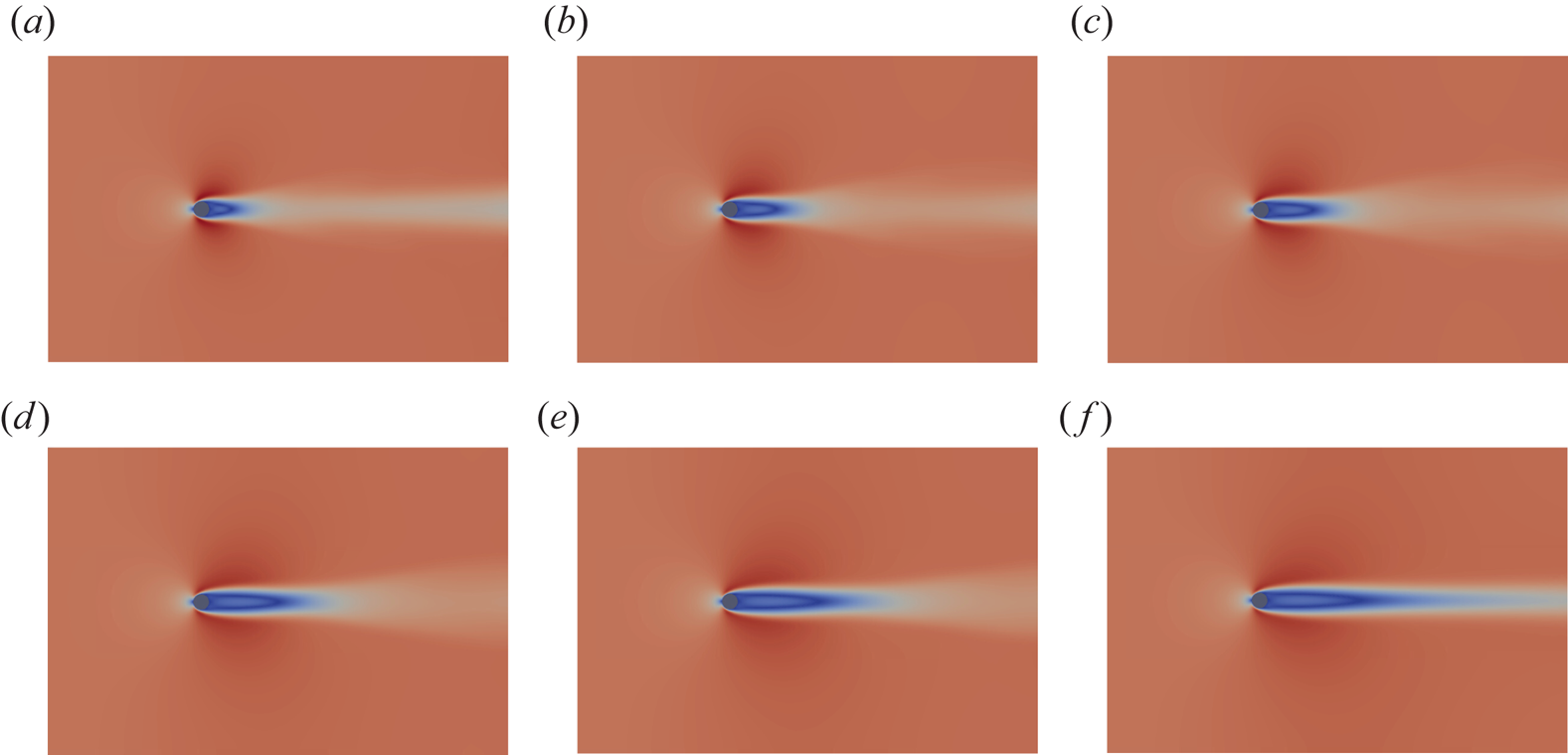

This dynamical system is known to undergo a supercritical Hopf bifurcation at the critical Reynolds number ![]() $Re_c \approx 47$ (Barkley Reference Barkley2006). Above the threshold, it displays an unstable equilibrium (here referred to as the base flow; see figure 5a) and a periodic attractor (i.e. a stable limit cycle; see figure 5b). In this study, we set

$Re_c \approx 47$ (Barkley Reference Barkley2006). Above the threshold, it displays an unstable equilibrium (here referred to as the base flow; see figure 5a) and a periodic attractor (i.e. a stable limit cycle; see figure 5b). In this study, we set ![]() $Re=100$.

$Re=100$.

Figure 5. Cylinder flow regimes (velocity magnitude). Unstable base flow (a) and snapshot of the attractor (b). Domain is cut for clarity.

2.3.2. Boundary conditions, control and simulation set-ups

Unactuated flow. A parallel flow enters from the left of the domain, directed to the right of the domain. The boundary conditions for the unforced flow, represented in figure 4, are detailed below.

(i) On the inlet

$\varGamma _i$, the fluid has parallel horizontal velocity

$\varGamma _i$, the fluid has parallel horizontal velocity  ${\boldsymbol {v}}^{i}=({v_1}_\infty,0)$, uniform along the vertical axis

${\boldsymbol {v}}^{i}=({v_1}_\infty,0)$, uniform along the vertical axis  $x_2$.

$x_2$.(ii) On the outlet

$\varGamma _o$, we impose standard outflow conditions with

$\varGamma _o$, we impose standard outflow conditions with  $p^{o}{\boldsymbol {n}} - Re^{-1}\boldsymbol {\nabla } {\boldsymbol {v}}^{o} \boldsymbol{\cdot} {\boldsymbol {n}}=0$ where

$p^{o}{\boldsymbol {n}} - Re^{-1}\boldsymbol {\nabla } {\boldsymbol {v}}^{o} \boldsymbol{\cdot} {\boldsymbol {n}}=0$ where  ${\boldsymbol {n}}$ is the outward-pointing vector.

${\boldsymbol {n}}$ is the outward-pointing vector.(iii) On the upper and lower boundaries

$\varGamma _{ul}$, that were set far from the cylinder to mitigate end effects, we impose an impermeability condition

$\varGamma _{ul}$, that were set far from the cylinder to mitigate end effects, we impose an impermeability condition  $v_2=0$.

$v_2=0$.(iv) On the surface of the cylinder

$\varGamma _c$ where actuation is not active, we impose a no-slip condition with

$\varGamma _c$ where actuation is not active, we impose a no-slip condition with  ${\boldsymbol {v}}^{c}=(0,0)$.

${\boldsymbol {v}}^{c}=(0,0)$.

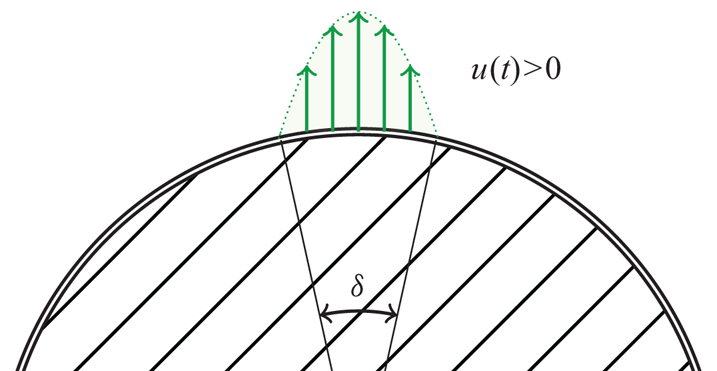

Actuation. In this configuration, the actuation is injection/suction of fluid at the upper and lower poles of the cylinder, in the vertical direction. Both actuators are 10![]() $^\circ$ wide and impose a parabolic profile

$^\circ$ wide and impose a parabolic profile ![]() ${v_2}_p(x_1)$ to the normal velocity of the fluid, modulated by the control amplitude

${v_2}_p(x_1)$ to the normal velocity of the fluid, modulated by the control amplitude ![]() $u(t)$ (negative or positive). On the controlled boundaries, the boundary condition is

$u(t)$ (negative or positive). On the controlled boundaries, the boundary condition is ![]() ${\boldsymbol {v}}^{act}(x_1, t) = ( 0, {v_2}_p(x_1) u(t) )$ (see figure 6). Both actuators are functioning antisymmetrically, in order to maintain an instantaneous zero net mass flux. In other words, a positive actuation amplitude

${\boldsymbol {v}}^{act}(x_1, t) = ( 0, {v_2}_p(x_1) u(t) )$ (see figure 6). Both actuators are functioning antisymmetrically, in order to maintain an instantaneous zero net mass flux. In other words, a positive actuation amplitude ![]() $u(t)>0$ corresponds to blowing from the top and suction from the bottom, and conversely with

$u(t)>0$ corresponds to blowing from the top and suction from the bottom, and conversely with ![]() $u(t)<0$.

$u(t)<0$.

Figure 6. Zoom on actuation set-up.

Sensing. It was shown in several past studies that a SISO (i.e. one actuator and one sensor) set-up can be adequate for controlling the cylinder configuration (Flinois & Morgans Reference Flinois and Morgans2016; Jin et al. Reference Jin, Illingworth and Sandberg2020; Jussiau et al. Reference Jussiau, Leclercq, Demourant and Apkarian2022) if the sensor is positioned in order to reconstruct sufficient information, and to not suffer too much from convective time delays. Following this trade-off, the sensor is chosen to provide the cross-stream velocity in the wake: ![]() $y(t) = v_2({x_1}=3, {x_2}=0, t)$. The sensor is assumed perfect and not corrupted by noise. Note that including a linear sensor model (e.g. limited bandwidth with a low-pass transfer function) would be seamless, as the approach is entirely based on data and only assumes linearity.

$y(t) = v_2({x_1}=3, {x_2}=0, t)$. The sensor is assumed perfect and not corrupted by noise. Note that including a linear sensor model (e.g. limited bandwidth with a low-pass transfer function) would be seamless, as the approach is entirely based on data and only assumes linearity.

Selecting the sensor location on the horizontal symmetry axis ![]() $x_2=0$ of the base flow yields an immediate benefit: the measurement value on the base flow can be deduced by symmetry arguments as

$x_2=0$ of the base flow yields an immediate benefit: the measurement value on the base flow can be deduced by symmetry arguments as ![]() $y_b=0$. This has a direct advantage, allowing the controller to operate directly on the measurement value

$y_b=0$. This has a direct advantage, allowing the controller to operate directly on the measurement value ![]() $y(t)$ while maintaining the natural base flow as an equilibrium point of the closed-loop system. In the case where the sensor were placed at a location with

$y(t)$ while maintaining the natural base flow as an equilibrium point of the closed-loop system. In the case where the sensor were placed at a location with ![]() $y_b\neq 0$, two alternatives are suggested. The first option is the controller operating on the error signal

$y_b\neq 0$, two alternatives are suggested. The first option is the controller operating on the error signal ![]() $e(t)=y(t)-y_b$, requiring the computation of

$e(t)=y(t)-y_b$, requiring the computation of ![]() $y_b$ and

$y_b$ and ![]() ${\boldsymbol {q}}_b$, which is excluded in this data-driven approach. The second option is using a controller with zero static gain (i.e.

${\boldsymbol {q}}_b$, which is excluded in this data-driven approach. The second option is using a controller with zero static gain (i.e. ![]() $K(0)=0$). In this case, it could operate directly with

$K(0)=0$). In this case, it could operate directly with ![]() $y(t)$, while ensuring the base flow remains an equilibrium point, and no other equilibrium with zero input may exist, as proven in Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019).

$y(t)$, while ensuring the base flow remains an equilibrium point, and no other equilibrium with zero input may exist, as proven in Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019).

2.3.3. Numerical methods

The incompressible Navier–Stokes equations in the two-dimensional domain (2.3) are solved in finite dimension with the finite element method using the toolbox FEniCS (Logg, Mardal & Wells Reference Logg, Mardal and Wells2012) in Python. The FEniCS toolbox is widely used for solving partial differential equations because of its very general framework and its native parallel computing capacity.

The unstructured mesh consists of approximately 25 000 triangles, more densely populated in the vicinity and in the wake of the cylinder. Finite elements are chosen as Taylor–Hood ![]() $(P_2, P_2, P_1)$ elements, leading to a discretized descriptor system of 113 000 states. For time stepping, a linear multistep method of second order is used. The nonlinear term is extrapolated with a second-order Adams–Bashforth scheme, while the viscous term is treated implicitly, making the temporal scheme semi-implicit. The time step is chosen as

$(P_2, P_2, P_1)$ elements, leading to a discretized descriptor system of 113 000 states. For time stepping, a linear multistep method of second order is used. The nonlinear term is extrapolated with a second-order Adams–Bashforth scheme, while the viscous term is treated implicitly, making the temporal scheme semi-implicit. The time step is chosen as ![]() $\Delta t = 5 \times 10^{-3}$ for stability and precision. Each simulation is run in parallel on 24 CPU cores with distributed memory (MPI). All large-dimensional linear systems are solved with the software package MUMPS (Amestoy et al. Reference Amestoy, Duff, L'Excellent and Koster2000), a sparse direct solver well suited to distributed-memory architectures and natively accessible from within FEniCS.

$\Delta t = 5 \times 10^{-3}$ for stability and precision. Each simulation is run in parallel on 24 CPU cores with distributed memory (MPI). All large-dimensional linear systems are solved with the software package MUMPS (Amestoy et al. Reference Amestoy, Duff, L'Excellent and Koster2000), a sparse direct solver well suited to distributed-memory architectures and natively accessible from within FEniCS.

2.3.4. Additional notations for characterizing the flow

For characterizing the flow globally, we define several operations and quantities that are reused in the following. First, the system (2.3) can be written as

where the state variable is defined as ![]() ${\boldsymbol {q}}=[\begin{smallmatrix}{\boldsymbol {v}} \\ p \end{smallmatrix}]$,

${\boldsymbol {q}}=[\begin{smallmatrix}{\boldsymbol {v}} \\ p \end{smallmatrix}]$, ![]() ${{\boldsymbol{\mathsf{E}}}} = [\begin{smallmatrix} {{\boldsymbol{\mathsf{I}}}} & 0 \\ 0 & 0 \end{smallmatrix}]$ and the nonlinear operator

${{\boldsymbol{\mathsf{E}}}} = [\begin{smallmatrix} {{\boldsymbol{\mathsf{I}}}} & 0 \\ 0 & 0 \end{smallmatrix}]$ and the nonlinear operator ![]() ${{\boldsymbol{\mathsf{F}}}}$ is expressed easily. The base flow denoted

${{\boldsymbol{\mathsf{F}}}}$ is expressed easily. The base flow denoted ![]() ${\boldsymbol {q}}_b$ refers to the unique steady equilibrium of (2.4) (Barkley Reference Barkley2006); we recall that it is linearly unstable. The model of the flow linearized around the base flow

${\boldsymbol {q}}_b$ refers to the unique steady equilibrium of (2.4) (Barkley Reference Barkley2006); we recall that it is linearly unstable. The model of the flow linearized around the base flow ![]() ${\boldsymbol {q}}_b$ (or indifferently a reduced-order approximation of said model) is denoted

${\boldsymbol {q}}_b$ (or indifferently a reduced-order approximation of said model) is denoted ![]() ${G_b}(s)$, and is used in the analysis of results in § 3. We also define the semi-inner product between two velocity–pressure fields

${G_b}(s)$, and is used in the analysis of results in § 3. We also define the semi-inner product between two velocity–pressure fields ![]() ${\boldsymbol {q}}=[\begin{smallmatrix}{\boldsymbol {v}} \\ p \end{smallmatrix}]$ and

${\boldsymbol {q}}=[\begin{smallmatrix}{\boldsymbol {v}} \\ p \end{smallmatrix}]$ and ![]() $\widetilde {{\boldsymbol {q}}}=[\begin{smallmatrix} \widetilde {{\boldsymbol {v}}} \\ \tilde {p} \end{smallmatrix}]$ as

$\widetilde {{\boldsymbol {q}}}=[\begin{smallmatrix} \widetilde {{\boldsymbol {v}}} \\ \tilde {p} \end{smallmatrix}]$ as

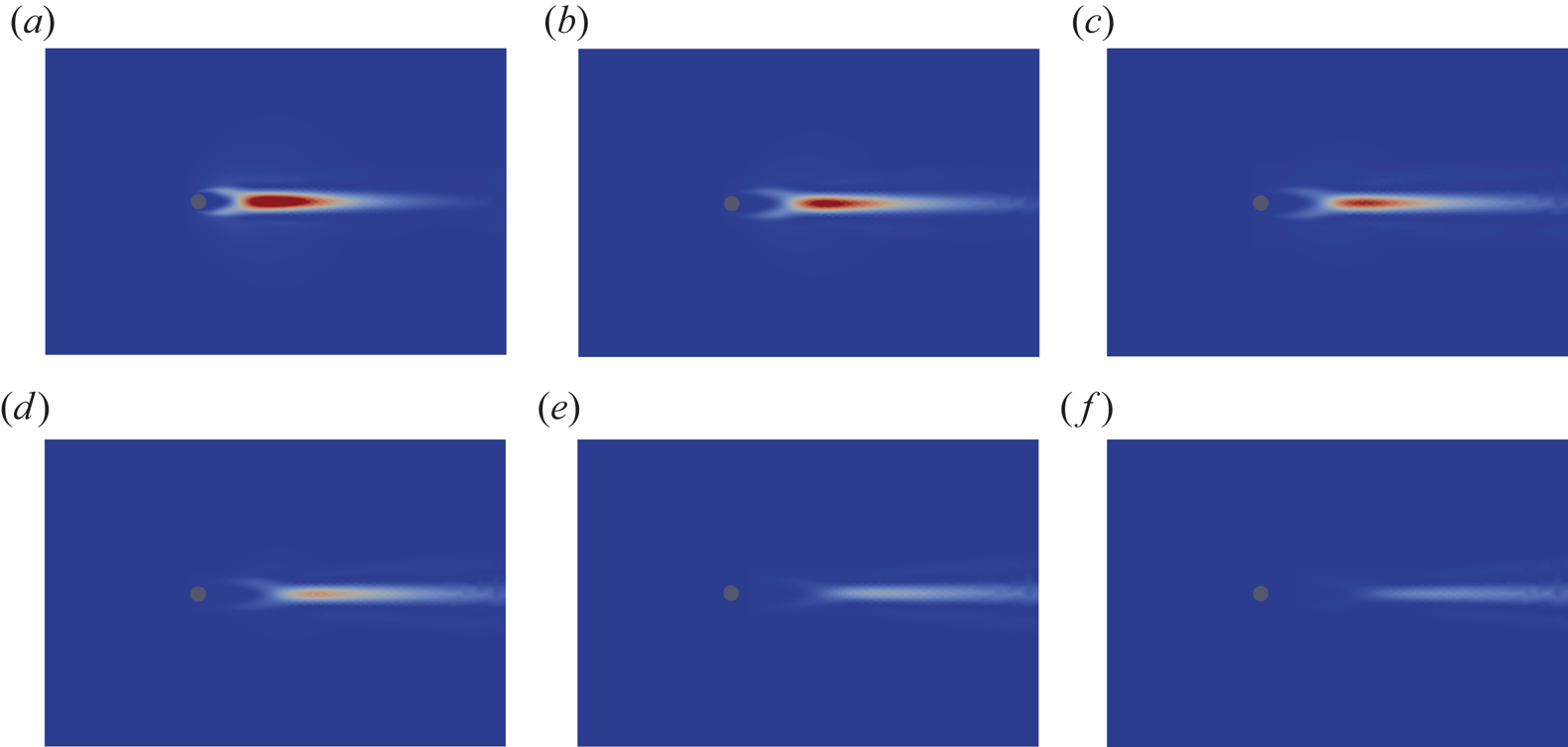

In order to quantify the distance from a field ![]() ${\boldsymbol {q}}$ to the base flow

${\boldsymbol {q}}$ to the base flow ![]() ${\boldsymbol {q}}_b$, we define the field

${\boldsymbol {q}}_b$, we define the field ![]() $\boldsymbol {\epsilon }({\boldsymbol {q}}) = ({\boldsymbol {q}}-{\boldsymbol {q}}_b)^T \boldsymbol {\cdot } {{\boldsymbol{\mathsf{E}}}} ({\boldsymbol {q}} - {\boldsymbol {q}}_b)$, providing information on a local level. In turn, the scalar perturbation kinetic energy (PKE) associated with a velocity–pressure field

$\boldsymbol {\epsilon }({\boldsymbol {q}}) = ({\boldsymbol {q}}-{\boldsymbol {q}}_b)^T \boldsymbol {\cdot } {{\boldsymbol{\mathsf{E}}}} ({\boldsymbol {q}} - {\boldsymbol {q}}_b)$, providing information on a local level. In turn, the scalar perturbation kinetic energy (PKE) associated with a velocity–pressure field ![]() ${\boldsymbol {q}}$ relative to the base flow

${\boldsymbol {q}}$ relative to the base flow ![]() ${\boldsymbol {q}}_b$ is defined as follows:

${\boldsymbol {q}}_b$ is defined as follows:

While these quantities are only available in simulation, they are used a posteriori to quantify the distance to the base flow, i.e. the distance to convergence.

Finally, when the flow is in a periodic regime (e.g. in feedback with a given controller), we define the mean flow as the temporal average of the flow variables ![]() $\bar {{\boldsymbol {q}}} = \langle {\boldsymbol {q}}(t) \rangle _T$ over a period. It is the same quantity used in Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019) for iterative identification, control and analysis of the flow, and is used in §§ 3 and 4.

$\bar {{\boldsymbol {q}}} = \langle {\boldsymbol {q}}(t) \rangle _T$ over a period. It is the same quantity used in Leclercq et al. (Reference Leclercq, Demourant, Poussot-Vassal and Sipp2019) for iterative identification, control and analysis of the flow, and is used in §§ 3 and 4.

2.4. Identification of an input–output model from data leveraging the mean resolvent

2.4.1. Introduction to the mean transfer function

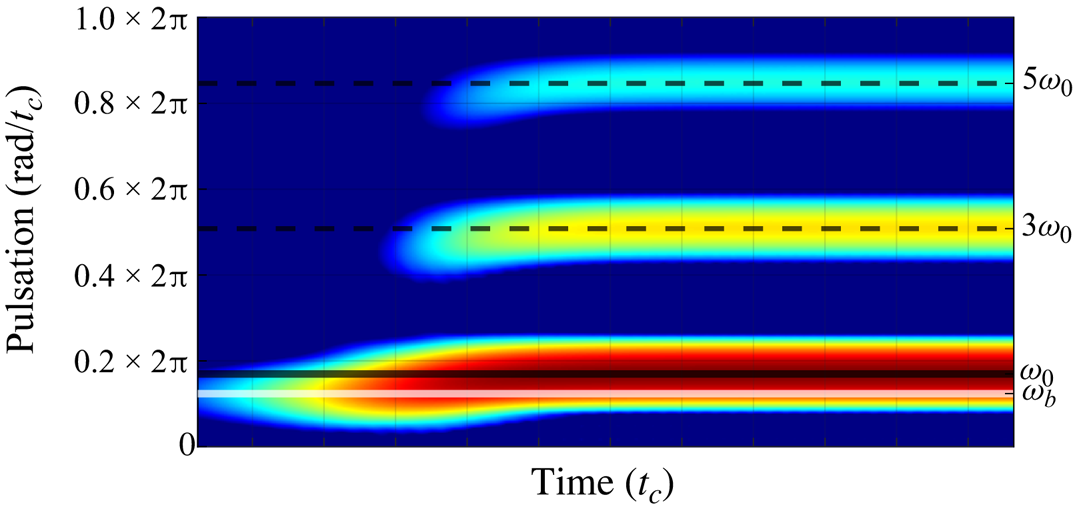

It is at first not obvious that the oscillating flow may be well approximated with an LTI model, moreover suitable for control purposes. Earlier justifications were given with variations of dynamic mode decomposition (Williams, Kevrekidis & Rowley Reference Williams, Kevrekidis and Rowley2015; Proctor, Brunton & Kutz Reference Proctor, Brunton and Kutz2016) whose theoretical link to the (linear) Koopman operator was established in Korda & Mezić (Reference Korda and Mezić2018b), and the models were used for control in, for example, Korda & Mezić (Reference Korda and Mezić2018a), Korda & Mezić (Reference Korda and Mezić2018b) and Arbabi et al. (Reference Arbabi, Korda and Mezić2018). In Leclercq & Sipp (Reference Leclercq and Sipp2023), a new relevant model around the limit cycle is introduced, based upon observations from Dahan, Morgans & Lardeau (Reference Dahan, Morgans and Lardeau2012), Dalla Longa, Morgans & Dahan (Reference Dalla Longa, Morgans and Dahan2017) and Evstafyeva, Morgans & Dalla Longa (Reference Evstafyeva, Morgans and Dalla Longa2017). This model, the so-called mean resolvent, is rooted in Floquet analysis and aims at providing the average linear response of the flow to a control input. It is also shown to be linked to the Koopman operator (Leclercq & Sipp Reference Leclercq and Sipp2023), and is extended to more complex attractors. This framework is briefly described in the rest of this section.

First, the linear response of the flow refers to the response of the flow to a given control input ![]() $\boldsymbol {f}(t)$ of small amplitude, i.e. small enough to allow linearization around the periodic deterministic unforced trajectory. Using a perturbation formulation, if the periodic unforced trajectory is denoted

$\boldsymbol {f}(t)$ of small amplitude, i.e. small enough to allow linearization around the periodic deterministic unforced trajectory. Using a perturbation formulation, if the periodic unforced trajectory is denoted ![]() $\boldsymbol {Q}(t)$, the linear response to the control

$\boldsymbol {Q}(t)$, the linear response to the control ![]() $\boldsymbol {f}(t)$ is

$\boldsymbol {f}(t)$ is ![]() $\delta {\boldsymbol {q}}(t)$, such that

$\delta {\boldsymbol {q}}(t)$, such that ![]() ${\boldsymbol {q}}(t) = \boldsymbol {Q}(t) + \delta {\boldsymbol {q}}(t)$.

${\boldsymbol {q}}(t) = \boldsymbol {Q}(t) + \delta {\boldsymbol {q}}(t)$.

Second, the average response of the flow is considered with respect to the phase on the limit cycle when the input ![]() $\boldsymbol {f}(t)$ is injected. On a periodic attractor of pulsation

$\boldsymbol {f}(t)$ is injected. On a periodic attractor of pulsation ![]() $\omega$, any time instant

$\omega$, any time instant ![]() $t$ is parametrized by a phase

$t$ is parametrized by a phase ![]() $\phi = \omega t \mod 2{\rm \pi} \in [0, 2{\rm \pi} )$, so that every point is described by its associated

$\phi = \omega t \mod 2{\rm \pi} \in [0, 2{\rm \pi} )$, so that every point is described by its associated ![]() $\phi$. The mean resolvent

$\phi$. The mean resolvent ![]() ${{\boldsymbol{\mathsf{R}}}}_0(s)$ is the operator predicting, in the frequency domain, the average linear response (over

${{\boldsymbol{\mathsf{R}}}}_0(s)$ is the operator predicting, in the frequency domain, the average linear response (over ![]() $\phi$) from a given input:

$\phi$) from a given input: ![]() $\langle \delta {\boldsymbol {q}}(s; \phi ) \rangle _\phi = {{\boldsymbol{\mathsf{R}}}}_0(s) {\boldsymbol {f}}(s)$.

$\langle \delta {\boldsymbol {q}}(s; \phi ) \rangle _\phi = {{\boldsymbol{\mathsf{R}}}}_0(s) {\boldsymbol {f}}(s)$.

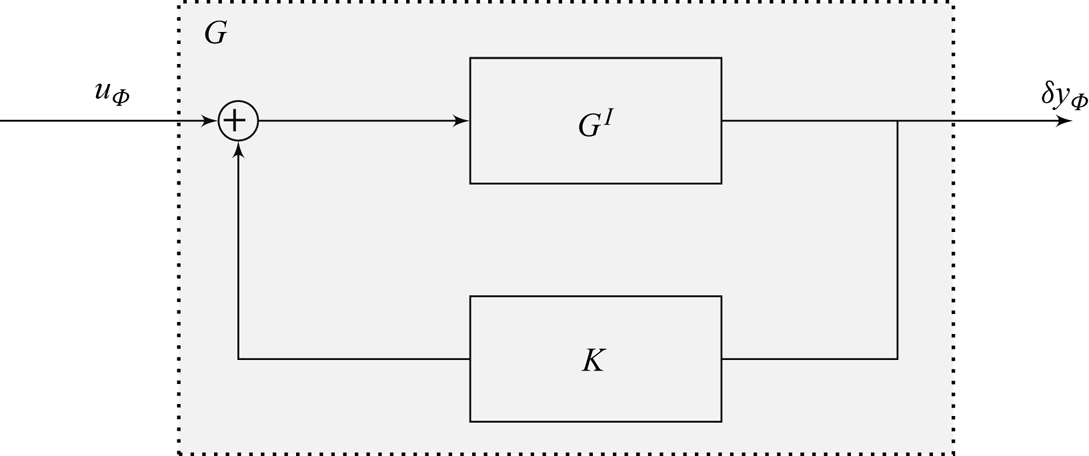

Here, we focus on a SISO transfer in the flow, i.e. the transfer between a single localized actuator and a single sensor signal. The actuation signal is such that ![]() $\boldsymbol {f}(t)={{\boldsymbol{\mathsf{B}}}}u(t)$ and we define the measurement deviation from the limit cycle as

$\boldsymbol {f}(t)={{\boldsymbol{\mathsf{B}}}}u(t)$ and we define the measurement deviation from the limit cycle as ![]() $\delta y(t) = {{\boldsymbol{\mathsf{C}}}} \delta {\boldsymbol {q}}(t)$, where

$\delta y(t) = {{\boldsymbol{\mathsf{C}}}} \delta {\boldsymbol {q}}(t)$, where ![]() ${{\boldsymbol{\mathsf{B}}}}, {{\boldsymbol{\mathsf{C}}}}$ are actuation and measurement fields, depending on the configuration. In this case, we study the SISO mean transfer function

${{\boldsymbol{\mathsf{B}}}}, {{\boldsymbol{\mathsf{C}}}}$ are actuation and measurement fields, depending on the configuration. In this case, we study the SISO mean transfer function ![]() $H_0(s): u(s) \mapsto \langle \delta y(s; \phi ) \rangle _\phi$, equal to

$H_0(s): u(s) \mapsto \langle \delta y(s; \phi ) \rangle _\phi$, equal to ![]() $H_0(s) = {{\boldsymbol{\mathsf{C}}}}{{\boldsymbol{\mathsf{R}}}}_0(s){{\boldsymbol{\mathsf{B}}}}$. It is shown in the following that it is possible to identify

$H_0(s) = {{\boldsymbol{\mathsf{C}}}}{{\boldsymbol{\mathsf{R}}}}_0(s){{\boldsymbol{\mathsf{B}}}}$. It is shown in the following that it is possible to identify ![]() $H_0(s)$ from data, with the full measurement

$H_0(s)$ from data, with the full measurement ![]() $y(t) = {{\boldsymbol{\mathsf{C}}}} {\boldsymbol {q}}(t)$ (since

$y(t) = {{\boldsymbol{\mathsf{C}}}} {\boldsymbol {q}}(t)$ (since ![]() $\delta y(t)$ is not measurable in practice).

$\delta y(t)$ is not measurable in practice).

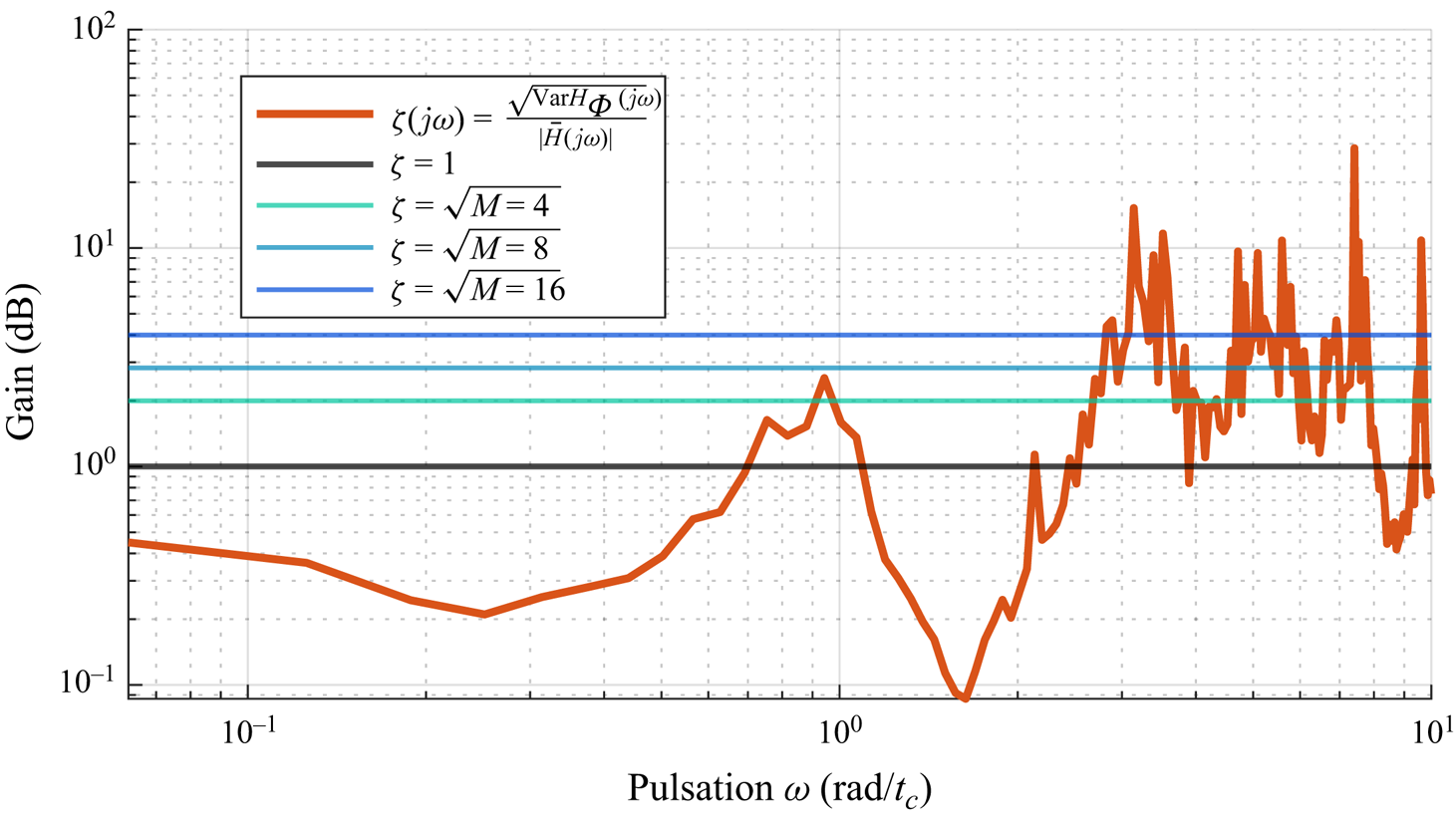

2.4.2. Mean frequency response

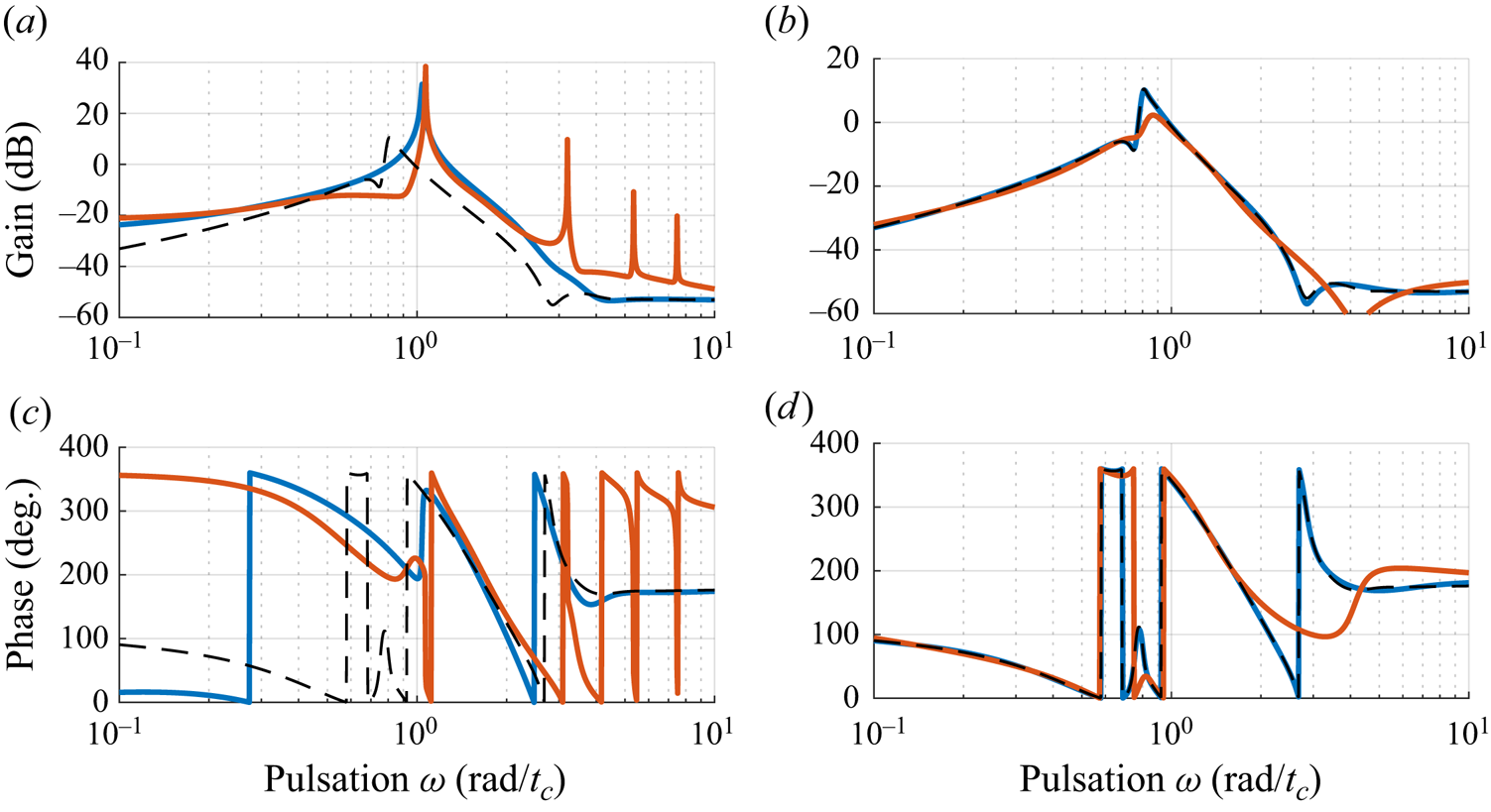

The identification of ![]() $H_0(s)$ is done in two steps. First, the frequency response

$H_0(s)$ is done in two steps. First, the frequency response ![]() $H_0(\jmath \omega )$ is extracted from input–output data on a discrete grid of frequencies. Second, a low-order state-space model is identified from these data. The transfer function of the low-order model is denoted

$H_0(\jmath \omega )$ is extracted from input–output data on a discrete grid of frequencies. Second, a low-order state-space model is identified from these data. The transfer function of the low-order model is denoted ![]() $G(s)$.

$G(s)$.

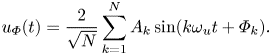

Multisine excitations. In order to extract the frequency response ![]() $H_0(\jmath \omega )$, we use here a particular class of input signals known as multisine excitations (Schoukens et al. Reference Schoukens, Guillaume and Pintelon1991). As shown in Leclercq & Sipp (Reference Leclercq and Sipp2023), any class of input signals could be used for this task (e.g. white noise, chirp, etc.), with various efficiency and a potential need for ensemble averaging. A multisine realization is a superposition of sines, depending on a random vector of independent identically distributed uniform variables

$H_0(\jmath \omega )$, we use here a particular class of input signals known as multisine excitations (Schoukens et al. Reference Schoukens, Guillaume and Pintelon1991). As shown in Leclercq & Sipp (Reference Leclercq and Sipp2023), any class of input signals could be used for this task (e.g. white noise, chirp, etc.), with various efficiency and a potential need for ensemble averaging. A multisine realization is a superposition of sines, depending on a random vector of independent identically distributed uniform variables ![]() $\boldsymbol {\varPhi }=[{\varPhi }_1, \dotsc, {\varPhi }_N] \sim \mathcal {U}([0, 2{\rm \pi} ]^N)$:

$\boldsymbol {\varPhi }=[{\varPhi }_1, \dotsc, {\varPhi }_N] \sim \mathcal {U}([0, 2{\rm \pi} ]^N)$:

\begin{equation} u_{{\varPhi}}(t) = \frac{2}{\sqrt N} \sum_{k=1}^{N} A_k \sin(k\omega_u t + {\varPhi}_k). \end{equation}

\begin{equation} u_{{\varPhi}}(t) = \frac{2}{\sqrt N} \sum_{k=1}^{N} A_k \sin(k\omega_u t + {\varPhi}_k). \end{equation}

The fundamental frequency of the multisine is ![]() $\omega _u$ and only harmonics

$\omega _u$ and only harmonics ![]() $k\omega _u, 1 \leq k \leq N$, are included, each with chosen amplitude

$k\omega _u, 1 \leq k \leq N$, are included, each with chosen amplitude ![]() $A_k$ (normalized by

$A_k$ (normalized by ![]() $\frac {1}{2}\sqrt N$) and random phase

$\frac {1}{2}\sqrt N$) and random phase ![]() ${\varPhi }_k$. The numerical values of the parameters

${\varPhi }_k$. The numerical values of the parameters ![]() $\omega _u, N, A_k$ are given in § 3.1.2. Multisines have been chosen for their deterministic amplitude spectrum and sparse representation in the frequency domain (Schoukens et al. Reference Schoukens, Lataire, Pintelon and Vandersteen2008; Schoukens, Vaes & Pintelon Reference Schoukens, Vaes and Pintelon2016), but any other input signal could be used for the identification in this context.

$\omega _u, N, A_k$ are given in § 3.1.2. Multisines have been chosen for their deterministic amplitude spectrum and sparse representation in the frequency domain (Schoukens et al. Reference Schoukens, Lataire, Pintelon and Vandersteen2008; Schoukens, Vaes & Pintelon Reference Schoukens, Vaes and Pintelon2016), but any other input signal could be used for the identification in this context.

Average of frequency responses and convergence to the mean frequency response. For a given input ![]() $u_{ {\varPhi }}(t)$, we denote

$u_{ {\varPhi }}(t)$, we denote ![]() $y_{ {\varPhi }}(t) = y(t) + \delta y_{ {\varPhi }}(t)$ the measured output, which is by definition the sum of the measurement signal of the unforced flow

$y_{ {\varPhi }}(t) = y(t) + \delta y_{ {\varPhi }}(t)$ the measured output, which is by definition the sum of the measurement signal of the unforced flow ![]() $y(t)$, and the forced contribution

$y(t)$, and the forced contribution ![]() $\delta y_{ {\varPhi }}(t)$ linear with respect to

$\delta y_{ {\varPhi }}(t)$ linear with respect to ![]() $u_{ {\varPhi }}(t)$. Following Leclercq & Sipp (Reference Leclercq and Sipp2023), the Fourier coefficients of the input and output at the forcing frequencies

$u_{ {\varPhi }}(t)$. Following Leclercq & Sipp (Reference Leclercq and Sipp2023), the Fourier coefficients of the input and output at the forcing frequencies ![]() $k\omega _u$ may be identified with harmonic averages (denoting the imaginary unit

$k\omega _u$ may be identified with harmonic averages (denoting the imaginary unit ![]() $\jmath$):

$\jmath$):

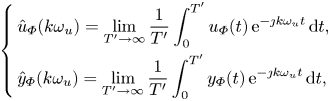

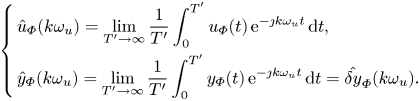

\begin{equation} \left\{ \begin{aligned} & \hat{u}_{{\varPhi}}(k\omega_u) = \lim_{T'\to \infty} \frac{1}{T'} \int_{0}^{T'} u_{{\varPhi}}(t) \, {\rm e}^{-\jmath k \omega_u t} \, {\rm d} t, \\ & \hat{y}_{{\varPhi}}(k\omega_u) = \lim_{T'\to \infty} \frac{1}{T'} \int_{0}^{T'} y_{{\varPhi}}(t) \, {\rm e}^{-\jmath k \omega_u t} \, {\rm d} t, \end{aligned} \right. \end{equation}

\begin{equation} \left\{ \begin{aligned} & \hat{u}_{{\varPhi}}(k\omega_u) = \lim_{T'\to \infty} \frac{1}{T'} \int_{0}^{T'} u_{{\varPhi}}(t) \, {\rm e}^{-\jmath k \omega_u t} \, {\rm d} t, \\ & \hat{y}_{{\varPhi}}(k\omega_u) = \lim_{T'\to \infty} \frac{1}{T'} \int_{0}^{T'} y_{{\varPhi}}(t) \, {\rm e}^{-\jmath k \omega_u t} \, {\rm d} t, \end{aligned} \right. \end{equation}

which are approximated in practice with discrete Fourier transforms (DFTs) (see § 3.1.2 and Appendix A). Also, as the forcing frequency ![]() $k\omega _u$ is chosen outside the frequency support of

$k\omega _u$ is chosen outside the frequency support of ![]() $y(t)$ we have

$y(t)$ we have

This is particularly important since we wish to identify the transfer between the input and the linear part of the output, which is not easily measurable in practice. Then, a frequency response depending on the phase ![]() $\boldsymbol {\varPhi }$ of the input may be computed as a ratio of Fourier coefficients at forced frequencies:

$\boldsymbol {\varPhi }$ of the input may be computed as a ratio of Fourier coefficients at forced frequencies:

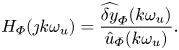

\begin{equation} H_{{\varPhi}}(\jmath k \omega_u) = \frac{\widehat{\delta y}_{{\varPhi}}(k\omega_u)}{\hat{u}_{{\varPhi}}(k\omega_u)}. \end{equation}

\begin{equation} H_{{\varPhi}}(\jmath k \omega_u) = \frac{\widehat{\delta y}_{{\varPhi}}(k\omega_u)}{\hat{u}_{{\varPhi}}(k\omega_u)}. \end{equation}

Now, using the expression of ![]() $y_{ {\varPhi }}(t)$ deduced from Leclercq & Sipp (Reference Leclercq and Sipp2023), it can be shown that

$y_{ {\varPhi }}(t)$ deduced from Leclercq & Sipp (Reference Leclercq and Sipp2023), it can be shown that

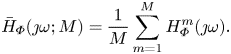

Therefore, if the experiment is repeated over ![]() $M$ realizations of

$M$ realizations of ![]() $u_{ {\varPhi }}(t)$ (i.e. by sampling

$u_{ {\varPhi }}(t)$ (i.e. by sampling ![]() $\boldsymbol {\varPhi }$), then the sample mean

$\boldsymbol {\varPhi }$), then the sample mean ![]() $\bar {H}_{ {\varPhi }}$ of

$\bar {H}_{ {\varPhi }}$ of ![]() $H_{ {\varPhi }}$ approximates

$H_{ {\varPhi }}$ approximates ![]() $H_0$ with variance

$H_0$ with variance ![]() ${{\rm Var}}(\bar {H}_{ {\varPhi }}(\jmath k \omega _u)) = ({1}/{M}){{\rm Var}}(H_{ {\varPhi }}(\jmath k \omega _u))$. It is notable that the ensemble average is done here on the multisine phase

${{\rm Var}}(\bar {H}_{ {\varPhi }}(\jmath k \omega _u)) = ({1}/{M}){{\rm Var}}(H_{ {\varPhi }}(\jmath k \omega _u))$. It is notable that the ensemble average is done here on the multisine phase ![]() $\boldsymbol {\varPhi }$, and not the phase

$\boldsymbol {\varPhi }$, and not the phase ![]() $\phi$ on the limit cycle where the signal

$\phi$ on the limit cycle where the signal ![]() $u_{ {\varPhi }}(t)$ is injected (which was done in Leclercq & Sipp (Reference Leclercq and Sipp2023)). Here, the phase on the limit cycle

$u_{ {\varPhi }}(t)$ is injected (which was done in Leclercq & Sipp (Reference Leclercq and Sipp2023)). Here, the phase on the limit cycle ![]() $\phi$ is assumed constant. In an experiment where

$\phi$ is assumed constant. In an experiment where ![]() $\phi$ cannot be chosen, the ensemble average would rather be performed on

$\phi$ cannot be chosen, the ensemble average would rather be performed on ![]() $\phi$ only, maintaining

$\phi$ only, maintaining ![]() $\boldsymbol {\varPhi }$ constant (i.e. injecting the same input signal at different instants on the limit cycle). As such, it would be possible to obtain a sample average of

$\boldsymbol {\varPhi }$ constant (i.e. injecting the same input signal at different instants on the limit cycle). As such, it would be possible to obtain a sample average of ![]() $\mathbb {E}(H_{\phi }(\jmath k \omega _u)) = H_0(\jmath k \omega _u)$.

$\mathbb {E}(H_{\phi }(\jmath k \omega _u)) = H_0(\jmath k \omega _u)$.

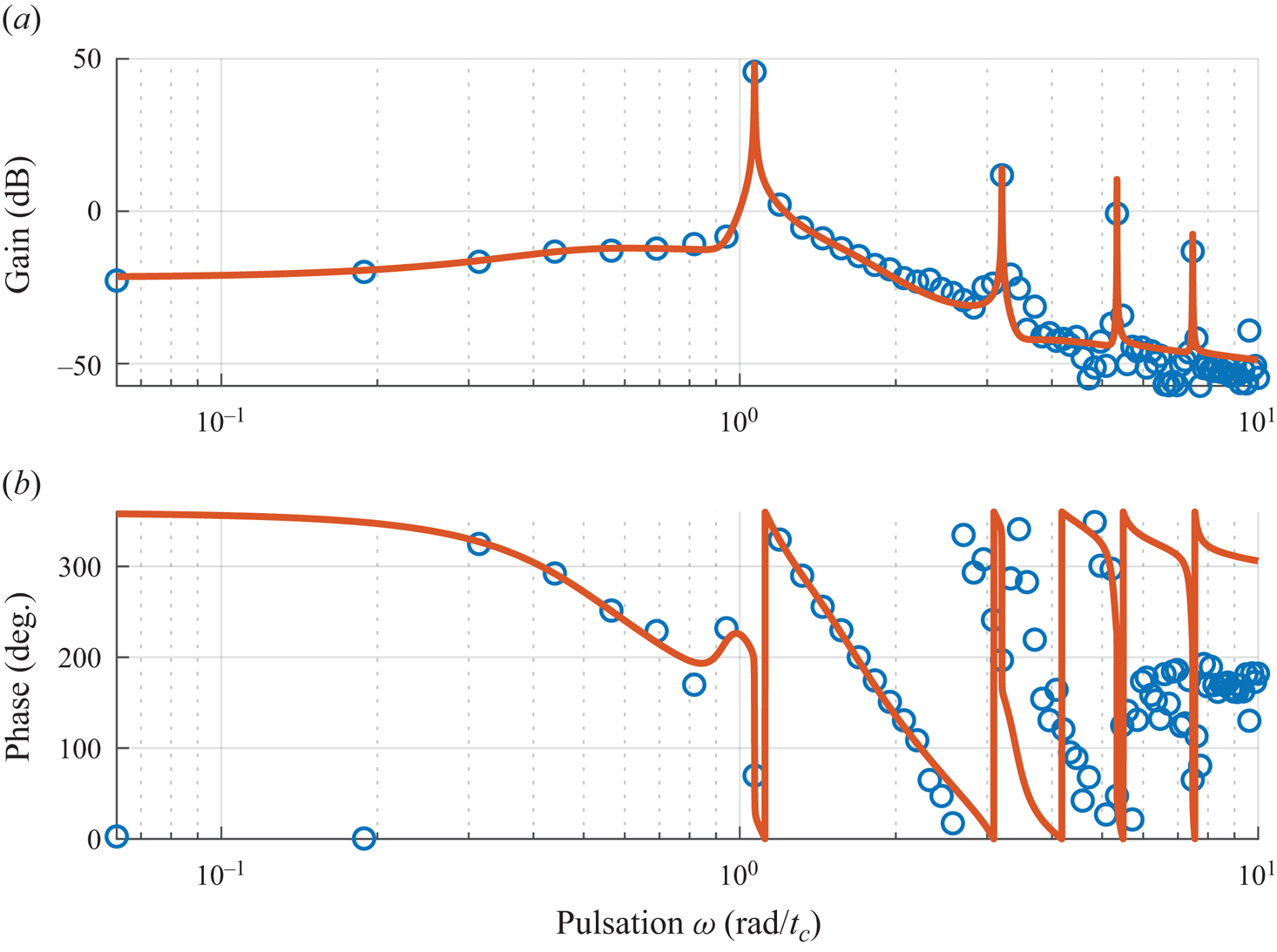

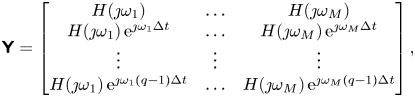

2.4.3. System identification

Now that ![]() $H_0(\jmath \omega )$ has been sampled on a grid of

$H_0(\jmath \omega )$ has been sampled on a grid of ![]() $\omega$, we wish to find a low-order state-space representation of the transfer function

$\omega$, we wish to find a low-order state-space representation of the transfer function ![]() $G(s)$ approximating the unknown

$G(s)$ approximating the unknown ![]() $H_0(s)$, accessible to control techniques. This task is performed with a subspace-based frequency identification method, but could be performed with any other frequency identification method, e.g. the eigensystem realization algorithm in frequency domain (Juang & Suzuki Reference Juang and Suzuki1988) or vector fitting (Gustavsen & Semlyen Reference Gustavsen and Semlyen1999; Ozdemir & Gumussoy Reference Ozdemir and Gumussoy2017). Subspace methods form a class of blackbox linear identification methods that do not rely on nonlinear optimization as most iterative model-fitting methods do. Here, the frequency observability range space extraction (FORSE) (Liu, Jacques & Miller Reference Liu, Jacques and Miller1994) estimates a discrete-time state-space model with order fixed a priori, in two distinct steps. Matrices

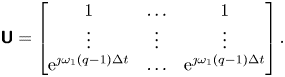

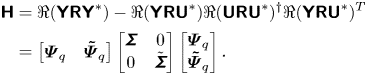

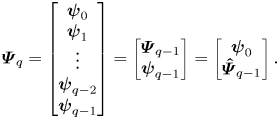

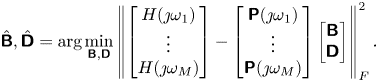

$H_0(s)$, accessible to control techniques. This task is performed with a subspace-based frequency identification method, but could be performed with any other frequency identification method, e.g. the eigensystem realization algorithm in frequency domain (Juang & Suzuki Reference Juang and Suzuki1988) or vector fitting (Gustavsen & Semlyen Reference Gustavsen and Semlyen1999; Ozdemir & Gumussoy Reference Ozdemir and Gumussoy2017). Subspace methods form a class of blackbox linear identification methods that do not rely on nonlinear optimization as most iterative model-fitting methods do. Here, the frequency observability range space extraction (FORSE) (Liu, Jacques & Miller Reference Liu, Jacques and Miller1994) estimates a discrete-time state-space model with order fixed a priori, in two distinct steps. Matrices ![]() ${{\boldsymbol{\mathsf{A}}}}$ and

${{\boldsymbol{\mathsf{A}}}}$ and ![]() ${{\boldsymbol{\mathsf{C}}}}$ are built directly from a singular value decomposition of a Hankel matrix built from the frequency response. Matrices

${{\boldsymbol{\mathsf{C}}}}$ are built directly from a singular value decomposition of a Hankel matrix built from the frequency response. Matrices ![]() ${{\boldsymbol{\mathsf{B}}}}$ and

${{\boldsymbol{\mathsf{B}}}}$ and ![]() ${{\boldsymbol{\mathsf{D}}}}$ are then found by solving a linear least squares problem. More details can be found in Liu et al. (Reference Liu, Jacques and Miller1994) or in Appendix B for a SISO version. Additional stability constraints may be enforced with linear matrix inequalities (Demourant & Poussot-Vassal Reference Demourant and Poussot-Vassal2017), as the transfers

${{\boldsymbol{\mathsf{D}}}}$ are then found by solving a linear least squares problem. More details can be found in Liu et al. (Reference Liu, Jacques and Miller1994) or in Appendix B for a SISO version. Additional stability constraints may be enforced with linear matrix inequalities (Demourant & Poussot-Vassal Reference Demourant and Poussot-Vassal2017), as the transfers ![]() $H_0(s), G(s)$ are expected to exhibit some marginally stable poles in this context.

$H_0(s), G(s)$ are expected to exhibit some marginally stable poles in this context.

For the sake of rendering the procedure as automatic as possible, the order of the model identified at each iteration, denoted ![]() $n_G=\partial ^\circ {G}$, is fixed. The choice of

$n_G=\partial ^\circ {G}$, is fixed. The choice of ![]() $n_G$ is discussed in § 3.1.2.

$n_G$ is discussed in § 3.1.2.

2.5. Control of the flow using the mean transfer function

2.5.1. Rationale

After we have determined an LTI model ![]() $G(s)$ of the fluid flow around its attractor, we wish to control it in order to reduce the self-sustained oscillations. Among the classic control methods such as pole placement, LQG,

$G(s)$ of the fluid flow around its attractor, we wish to control it in order to reduce the self-sustained oscillations. Among the classic control methods such as pole placement, LQG, ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$ techniques (e.g. mixed-sensitivity, loop-shaping, structured

${{\mathcal {H}\scriptscriptstyle \infty }}$ techniques (e.g. mixed-sensitivity, loop-shaping, structured ![]() ${{\mathcal {H}\scriptscriptstyle \infty }}$, etc.) and MPC, we choose the LQG framework for synthesis. It combines several advantages, such as being easy to automate, having predictable controller gain to some extent and producing a controller with relatively low complexity.

${{\mathcal {H}\scriptscriptstyle \infty }}$, etc.) and MPC, we choose the LQG framework for synthesis. It combines several advantages, such as being easy to automate, having predictable controller gain to some extent and producing a controller with relatively low complexity.

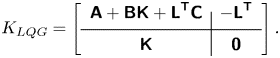

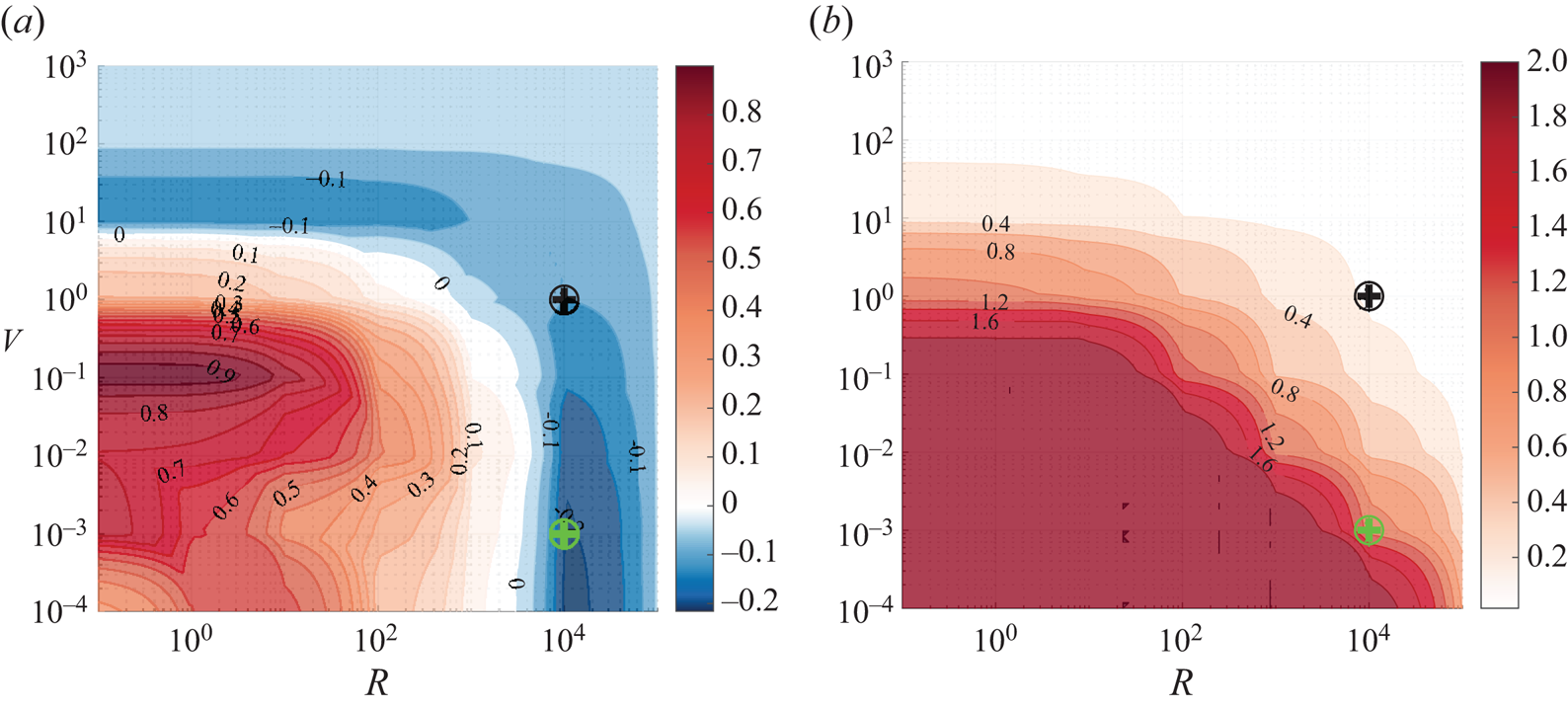

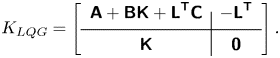

2.5.2. Principle of observed-state feedback

Linear quadratic Gaussian control is very popular in flow control (see e.g. Schmid & Sipp (Reference Schmid and Sipp2016), Barbagallo et al. (Reference Barbagallo, Sipp and Schmid2009), Kim & Bewley (Reference Kim and Bewley2007), Carini, Pralits & Luchini (Reference Carini, Pralits and Luchini2015) and Brunton & Noack (Reference Brunton and Noack2015) and references therein) due to its ease of use. The principle and main equations are recalled in Appendix C. Here, we simply recall that the dynamic LQG controller for a SISO plant  $G= \left [ \begin{array}{c|c} \boldsymbol{\mathsf{A}} & \boldsymbol{\mathsf{B}} \\ \hline \boldsymbol{\mathsf{C}} & \boldsymbol{\mathsf{0}} \end{array}\right ]$ can be calculated from the observer gain

$G= \left [ \begin{array}{c|c} \boldsymbol{\mathsf{A}} & \boldsymbol{\mathsf{B}} \\ \hline \boldsymbol{\mathsf{C}} & \boldsymbol{\mathsf{0}} \end{array}\right ]$ can be calculated from the observer gain ![]() ${{\boldsymbol{\mathsf{L}}}}$ (depending on state noise and output noise covariances

${{\boldsymbol{\mathsf{L}}}}$ (depending on state noise and output noise covariances ![]() ${{\boldsymbol{\mathsf{W}}}}, V$) and the state-feedback gain

${{\boldsymbol{\mathsf{W}}}}, V$) and the state-feedback gain ![]() ${{\boldsymbol{\mathsf{K}}}}$ (depending on state and input costs

${{\boldsymbol{\mathsf{K}}}}$ (depending on state and input costs ![]() ${{\boldsymbol{\mathsf{Q}}}}, R$) and expressed as follows:

${{\boldsymbol{\mathsf{Q}}}}, R$) and expressed as follows:

\begin{equation} K_{LQG} = \left[ \begin{array}{c|c} {{{\boldsymbol{\mathsf{A}}}}+{{\boldsymbol{\mathsf{BK}}}} + {{\boldsymbol{\mathsf{L}}}}^{{{\boldsymbol{\mathsf{T}}}}}{{\boldsymbol{\mathsf{C}}}}} & { -{{\boldsymbol{\mathsf{L}}}}^{{{\boldsymbol{\mathsf{T}}}}}} \\ \hline {{{\boldsymbol{\mathsf{K}}}}} & {{{\boldsymbol{\mathsf{0}}}}} \end{array}\right] .\end{equation}

\begin{equation} K_{LQG} = \left[ \begin{array}{c|c} {{{\boldsymbol{\mathsf{A}}}}+{{\boldsymbol{\mathsf{BK}}}} + {{\boldsymbol{\mathsf{L}}}}^{{{\boldsymbol{\mathsf{T}}}}}{{\boldsymbol{\mathsf{C}}}}} & { -{{\boldsymbol{\mathsf{L}}}}^{{{\boldsymbol{\mathsf{T}}}}}} \\ \hline {{{\boldsymbol{\mathsf{K}}}}} & {{{\boldsymbol{\mathsf{0}}}}} \end{array}\right] .\end{equation}We provide important remarks on the LQG controller below.

(i) The state-feedback gain

${{\boldsymbol{\mathsf{K}}}}$ and the observer gain

${{\boldsymbol{\mathsf{K}}}}$ and the observer gain  ${{\boldsymbol{\mathsf{L}}}}$ are computed independently. Additionally, each problem may be normalized. For the state feedback, the state weighting is chosen as

${{\boldsymbol{\mathsf{L}}}}$ are computed independently. Additionally, each problem may be normalized. For the state feedback, the state weighting is chosen as  ${{\boldsymbol{\mathsf{Q}}}}={{\boldsymbol{\mathsf{I}}}}_n$ and the problem is only parametrized by the value

${{\boldsymbol{\mathsf{Q}}}}={{\boldsymbol{\mathsf{I}}}}_n$ and the problem is only parametrized by the value  $R > 0$ penalizing the control input. Symmetrically, for the estimation problem, we choose

$R > 0$ penalizing the control input. Symmetrically, for the estimation problem, we choose  ${{\boldsymbol{\mathsf{W}}}} = {{\boldsymbol{\mathsf{I}}}}_n$ and the problem is parametrized by

${{\boldsymbol{\mathsf{W}}}} = {{\boldsymbol{\mathsf{I}}}}_n$ and the problem is parametrized by  $V > 0$. This approach is more conservative because states are weighted equally, but allows for parametrizing the LQG problem easily with only two positive scalars

$V > 0$. This approach is more conservative because states are weighted equally, but allows for parametrizing the LQG problem easily with only two positive scalars  $R, V > 0$. In the following, a controller stemming from a LQG synthesis with weightings

$R, V > 0$. In the following, a controller stemming from a LQG synthesis with weightings  $R, V$ is denoted

$R, V$ is denoted  $K_{LQG}(R, V)$.

$K_{LQG}(R, V)$.(ii) The matrix weights may be tuned to enforce specific behaviours for the solution controller (which was already underlined in Sipp & Schmid Reference Sipp and Schmid2016). For the state-feedback problem, one can prioritize small control inputs (

$R\to \infty$, low gain

$R\to \infty$, low gain  ${{\boldsymbol{\mathsf{K}}}}$) or reactive control (

${{\boldsymbol{\mathsf{K}}}}$) or reactive control ( $R\to 0$, large gain

$R\to 0$, large gain  ${{\boldsymbol{\mathsf{K}}}}$). Symmetrically, for the estimation problem, the choice is made between slow estimation (

${{\boldsymbol{\mathsf{K}}}}$). Symmetrically, for the estimation problem, the choice is made between slow estimation ( $V\to \infty$, low gain

$V\to \infty$, low gain  ${{\boldsymbol{\mathsf{L}}}}$) or fast estimation (

${{\boldsymbol{\mathsf{L}}}}$) or fast estimation ( $V \to 0$, large gain

$V \to 0$, large gain  ${{\boldsymbol{\mathsf{L}}}}$).

${{\boldsymbol{\mathsf{L}}}}$).(iii) While linear quadratic regulator controllers exhibit inherent robustness (in gain and phase margins) given diagonal

${{\boldsymbol{\mathsf{Q}}}}, {{\boldsymbol{\mathsf{R}}}}$ (Lehtomaki, Sandell & Athans Reference Lehtomaki, Sandell and Athans1981), these guarantees generally do not hold for LQG and stability margins may be arbitrarily small (Doyle Reference Doyle1978) but can be checked a posteriori.

${{\boldsymbol{\mathsf{Q}}}}, {{\boldsymbol{\mathsf{R}}}}$ (Lehtomaki, Sandell & Athans Reference Lehtomaki, Sandell and Athans1981), these guarantees generally do not hold for LQG and stability margins may be arbitrarily small (Doyle Reference Doyle1978) but can be checked a posteriori.

2.6. Controller stacking, balanced truncation and state initialization

2.6.1. Rationale

At the beginning of iteration ![]() $i+1$ of the procedure, a new controller

$i+1$ of the procedure, a new controller ![]() $K_{i}^+$ is synthesized and coupled to the flow, which is already in closed loop with the control law

$K_{i}^+$ is synthesized and coupled to the flow, which is already in closed loop with the control law ![]() $K_{i}$. The new controller operating in the loop would be

$K_{i}$. The new controller operating in the loop would be

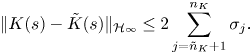

In the general case, while the newly designed controller ![]() $K_{i}^+$ has manageable order (

$K_{i}^+$ has manageable order (![]() $\partial ^\circ {K_i^+}=\partial ^\circ {G_i}=n_G$), the total controller

$\partial ^\circ {K_i^+}=\partial ^\circ {G_i}=n_G$), the total controller ![]() $K_{i+1}$ has order

$K_{i+1}$ has order ![]() $\partial ^\circ {K_{i+1}} = \partial ^\circ {K_i}+\partial ^\circ {K_i^+} =(i-1) n_G + n_G$ that is increasing linearly with iterations.

$\partial ^\circ {K_{i+1}} = \partial ^\circ {K_i}+\partial ^\circ {K_i^+} =(i-1) n_G + n_G$ that is increasing linearly with iterations.

In order to reduce the order of the controller, we resort, at each iteration, to balanced truncation of the controller operating in the loop. In other words, instead of using the full controller ![]() $K_{i+1}= K_{i} + K_{i}^{+}$ in the loop, we use a reduced-order version

$K_{i+1}= K_{i} + K_{i}^{+}$ in the loop, we use a reduced-order version ![]() $\tilde {K}_{i+1}$. Repeating the operation at each iteration leads to

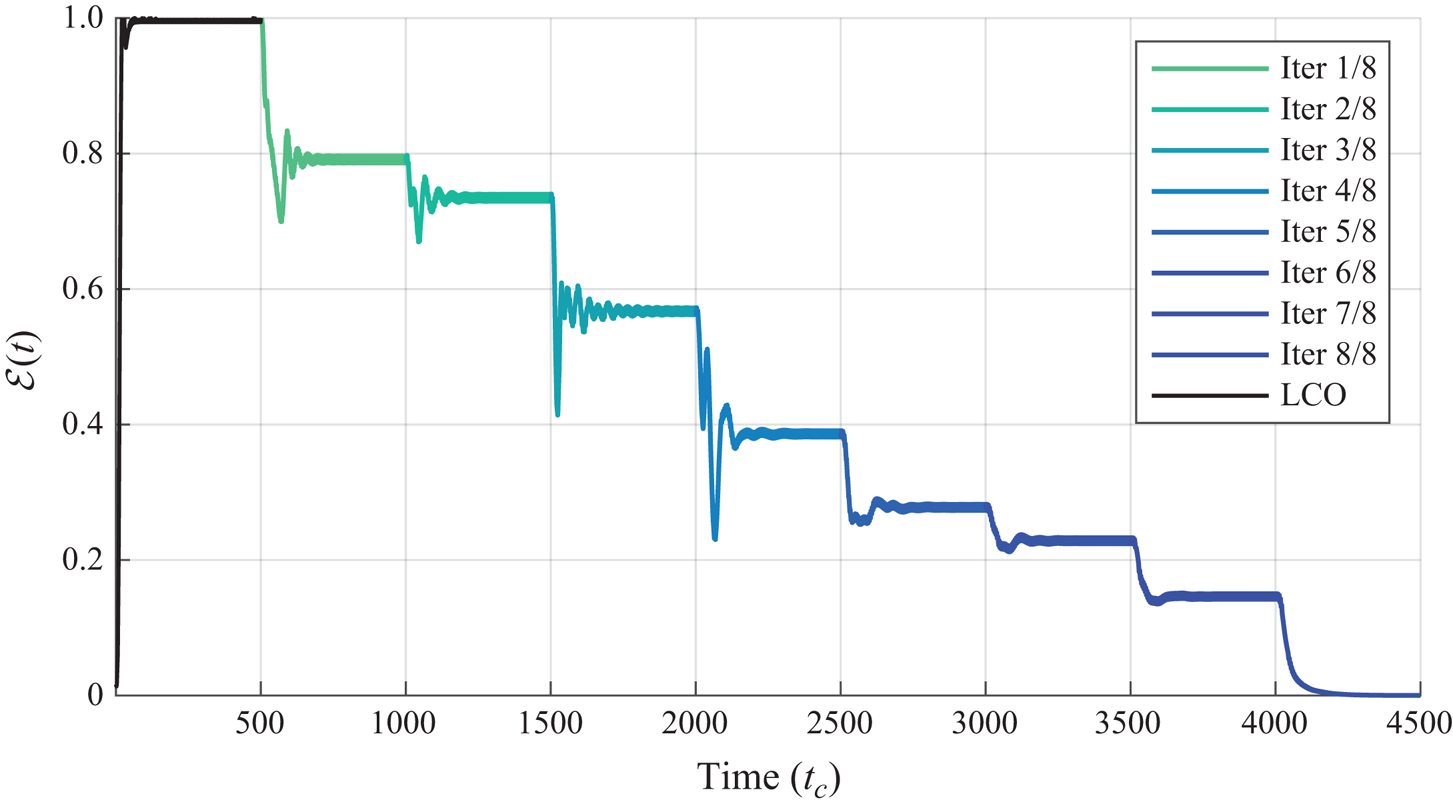

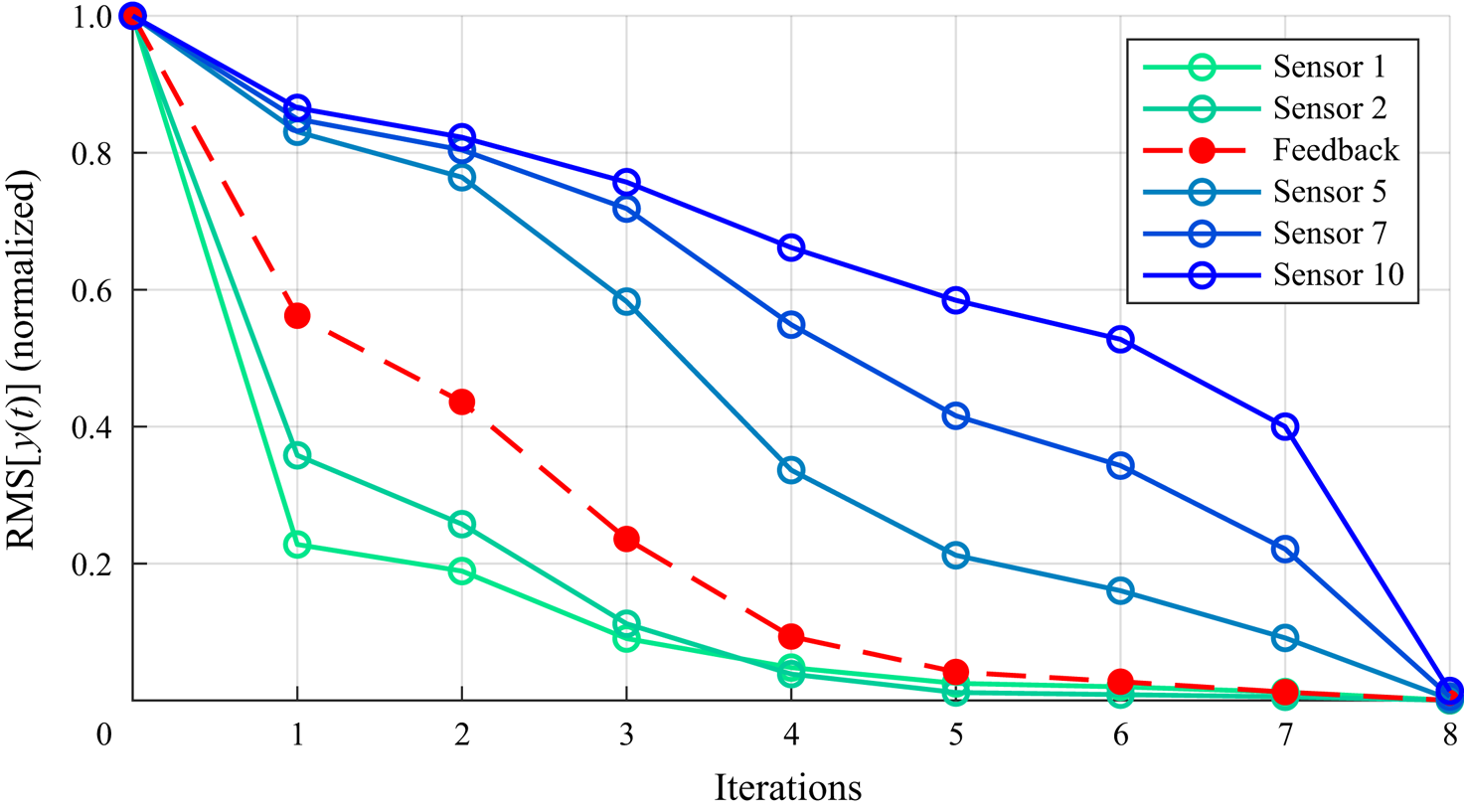

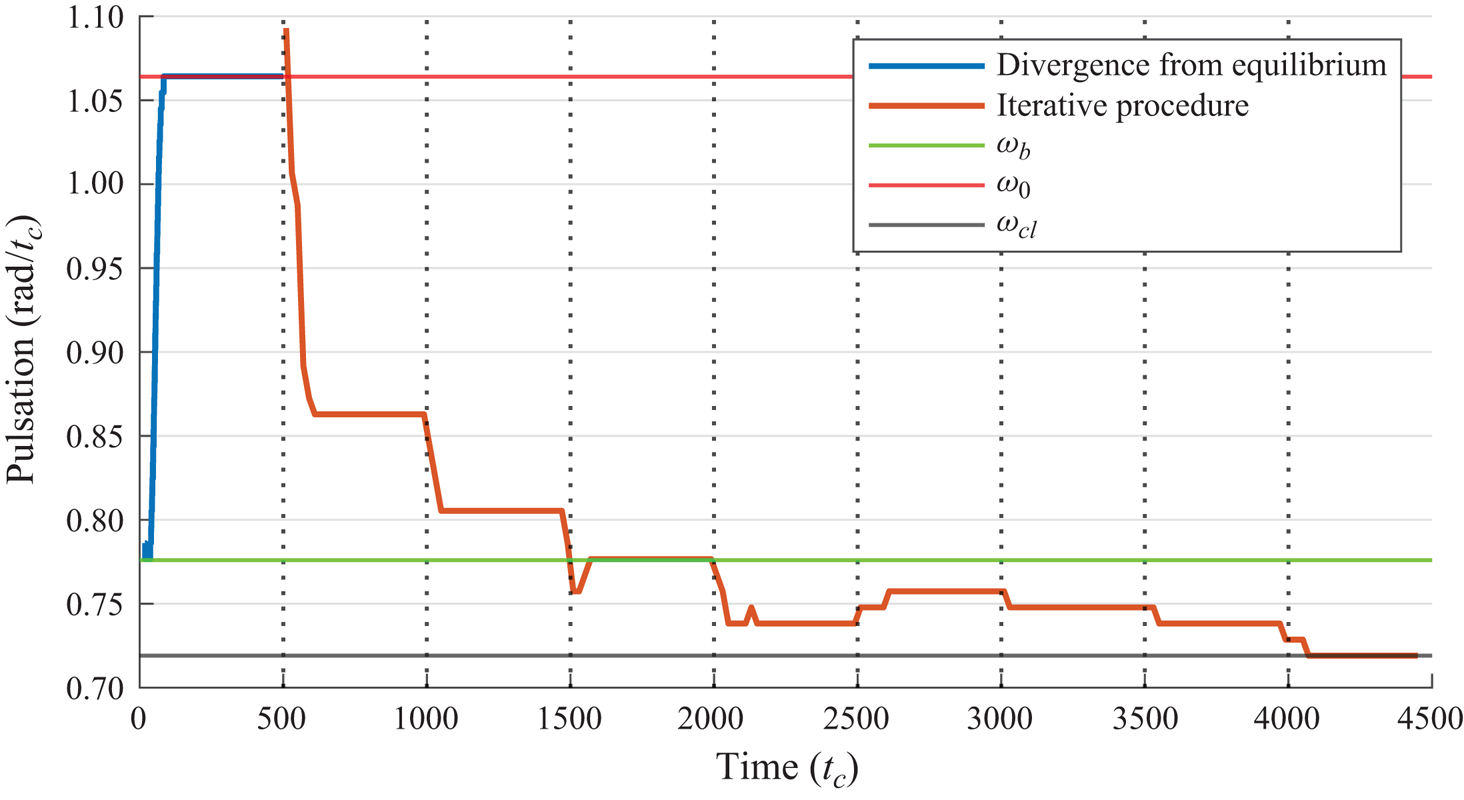

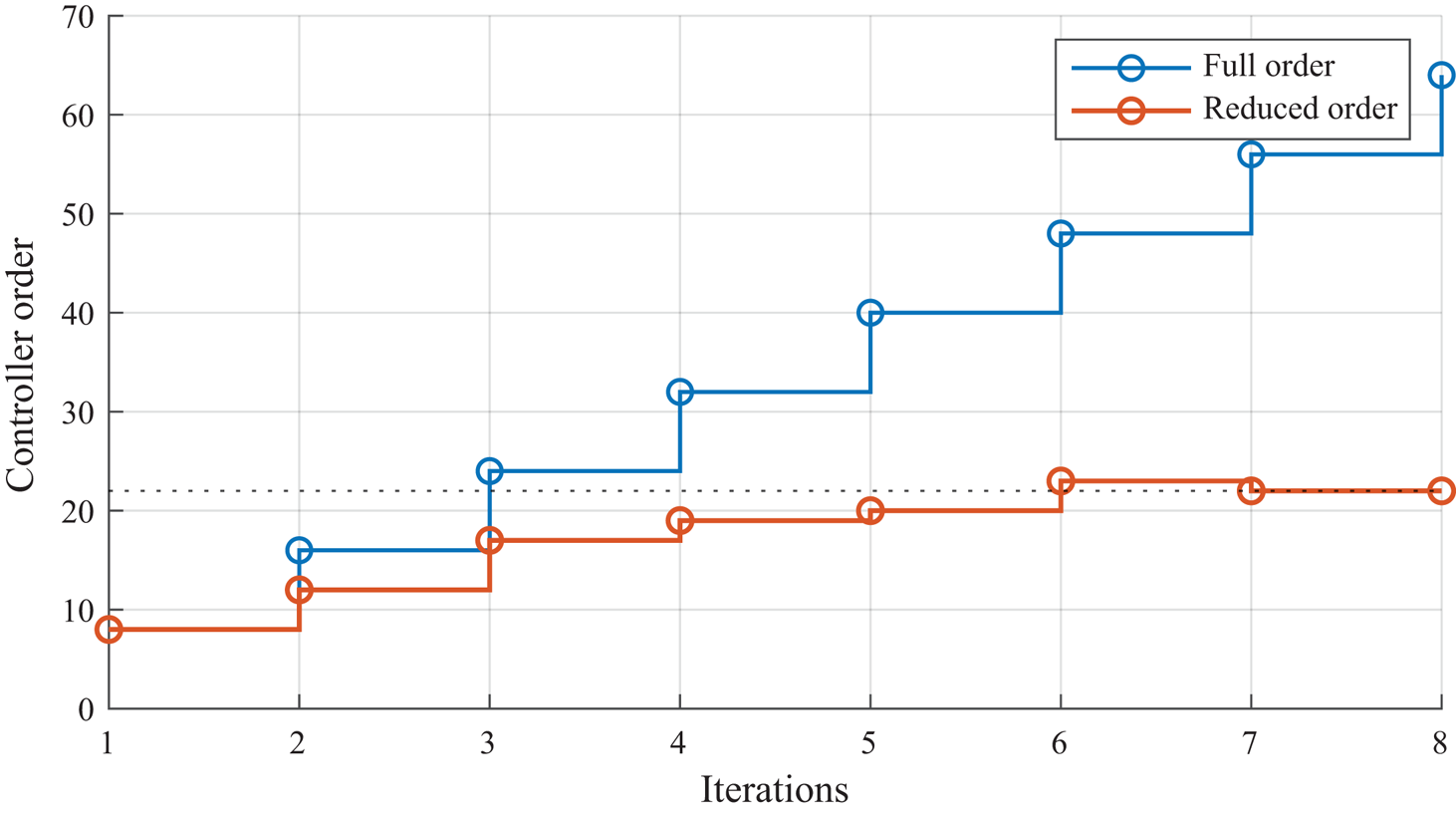

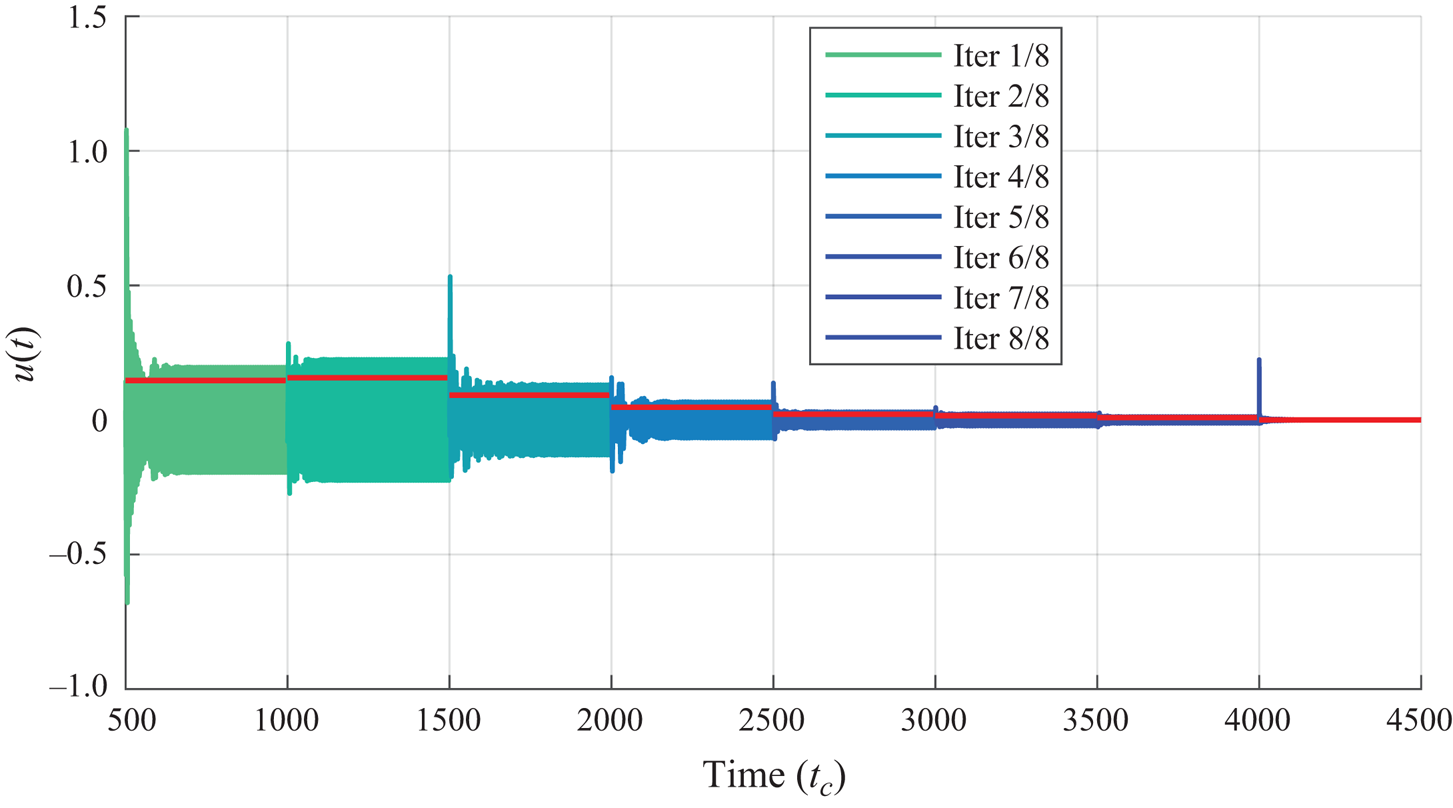

$\tilde {K}_{i+1}$. Repeating the operation at each iteration leads to