1. Introduction

A major challenge in dealing with chaotic fluid flows, whether it be performing experiments, running simulations or interpreting the results, is the high-dimensional nature of the state. Even for simulations in the smallest domains that sustain turbulence (a minimal flow unit (MFU)), the state dimension may be ${O}(10^5)$ (Jiménez & Moin 1991; Hamilton, Kim & Waleffe 1995). However, despite this nominally high dimensionality, the dissipative nature of turbulent flows leads to the expectation that long-time dynamics collapse onto an invariant manifold of much lower dimension than the ambient dimension (Hopf 1948). By modelling the dynamics in a manifold coordinate system, simulations could be performed with a drastically lower-dimensional state representation, significantly speeding up computations. In addition, such a low-dimensional state representation is highly useful for downstream tasks such as control or design. Finding a low-dimensional, or ideally a minimal-dimensional, parameterisation of the manifold and an evolution equation for this parameterisation are both challenges. In this work we aim to address these challenges with a data-driven model, specifically for the task of reconstructing turbulent plane Couette flow (PCF).

The classic way to perform dimension reduction from data is to use the proper orthogonal decomposition (POD), also known as principal component analysis (PCA) or Karhunen–Loève decomposition (Holmes et al. 2012). This is a linear dimension reduction technique in which the state is projected onto the set of orthogonal modes that capture the maximum variance in the data. The POD is widely used for flow phenomena, some examples of which include turbulent channel flow (Moin & Moser 1989; Ball, Sirovich & Keefe 1991), flat-plate boundary layers (Rempfer & Fasel 1994) and free shear jet flows (Arndt, Long & Glauser 1997). Smith, Moehlis & Holmes (2005) showed how to incorporate system symmetries into the POD modes, the details of which we elaborate on in § 3.
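
As a hedged illustration of the projection just described, the following Python sketch computes POD modes from a snapshot matrix with a singular value decomposition and measures the reconstruction error as modes are added. The synthetic data, the helper names `pod_modes` and `pod_reconstruct`, and the choice not to subtract the mean are assumptions made here for illustration; they are not taken from the works cited above.

```python
import numpy as np

def pod_modes(snapshots):
    """Return POD modes (as columns) and mode variances for snapshots of shape (M, d)."""
    # Thin SVD of the snapshot matrix; the right singular vectors are the POD modes,
    # already ordered by decreasing variance.
    _, s, vt = np.linalg.svd(snapshots, full_matrices=False)
    variances = s**2 / snapshots.shape[0]
    return vt.T, variances

def pod_reconstruct(snapshots, modes, k):
    """Project onto the leading k modes and map back to the ambient space."""
    u_k = modes[:, :k]
    coeffs = snapshots @ u_k      # (M, k) POD coefficients
    return coeffs @ u_k.T         # (M, d) rank-k reconstruction

rng = np.random.default_rng(0)
# Synthetic data (an assumption): three dominant directions plus weak noise in d = 20.
latent = rng.normal(size=(500, 3)) * np.array([5.0, 2.0, 1.0])
basis = np.linalg.qr(rng.normal(size=(20, 3)))[0]
snapshots = latent @ basis.T + 1e-3 * rng.normal(size=(500, 20))

modes, variances = pod_modes(snapshots)
# Mean-squared reconstruction error when keeping k = 1, ..., 20 modes.
errors = [np.mean((snapshots - pod_reconstruct(snapshots, modes, k))**2)
          for k in range(1, 21)]
```

In this toy setting the error drops to the noise level once the three dominant modes are retained. For data on a curved manifold, a linear projection of this kind generally needs more modes than the manifold dimension, which is one motivation for nonlinear methods.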

Although the POD has seen wide use and is easy to interpret, more accurate reconstruction can be achieved with nonlinear methods, a result we highlight in § 3. Some popular methods for nonlinear dimension reduction include kernel PCA (Schölkopf, Smola & Müller 1998), diffusion maps (Coifman et al. 2005), locally linear embedding (LLE) (Roweis & Saul 2000), isometric feature mapping (Isomap) (Tenenbaum, de Silva & Langford 2000) and t-distributed stochastic neighbour embedding (tSNE) (Hinton & Roweis 2003). These methods are described in more detail in Linot & Graham (2022), and an overview of other dimension reduction methods can be found in Van Der Maaten, Postma & Van Den Herik (2009). One drawback of all of these methods, however, is that they reduce the dimension, but do not immediately provide a means to move from a low-dimensional state back to the full state. A popular dimension reduction method without these complications is the undercomplete autoencoder (Hinton & Salakhutdinov 2006), which uses a neural network (NN) to map the input data into a lower-dimensional ‘latent space’ and another NN to map back to the original state space. We describe this structure in more detail in § 2. Some examples where autoencoders have been used for flow systems include flow around a cylinder (Murata, Fukami & Fukagata 2020), flow around a flat plate (Nair & Goza 2020), Kolmogorov flow (Page, Brenner & Kerswell 2021; Pérez De Jesús & Graham 2023) and channel flow (Milano & Koumoutsakos 2002). Although we will not pursue this approach in the present work, it may be advantageous for multiple reasons to parameterise the manifold with overlapping local representations, as done in Floryan & Graham (2022).

After reducing the dimension, the time evolution for the dynamics can be approximated from the equations of motion or in a completely data-driven manner. The classical method is to perform a Galerkin projection wherein the equations of motion are projected onto a set of modes (e.g. POD modes) (Holmes et al. 2012). However, in this approach, all the higher POD modes are neglected. An extension of this idea, called nonlinear Galerkin, is to assume that the time derivative of the coefficients of all of the higher modes is zero, but not the coefficients themselves (Foias et al. 1988; Titi 1990; Graham, Steen & Titi 1993); this is essentially a quasisteady state approximation for the higher modes. This improves the accuracy, but comes at a higher computational cost than the Galerkin method, although this can be somewhat mitigated by using a postprocessing Galerkin approach (García-Archilla, Novo & Titi 1998). Wan et al. (2018) also showed that a recurrent neural network (RNN), a NN that feeds into itself, can be used to improve the nonlinear Galerkin approximation. This RNN structure depends on a history of inputs, making it non-Markovian. In addition to these linear dimension reduction approaches, an autoencoder can be used with the equations of motion in the so-called manifold Galerkin approach, which Lee & Carlberg (2020) developed and applied to the viscous Burgers equation.

When the equations of motion are assumed to be unknown, and only snapshots of data are available, a number of different machine learning techniques exist to approximate the dynamics. Two of the most popular techniques are RNNs and reservoir computers. Vlachas et al. (2020) showed that both of these structures do an excellent job of capturing the chaotic dynamics of the Lorenz-96 equation and the Kuramoto–Sivashinsky equation (KSE). For fluid flows, autoencoders and RNNs (specifically long short-term memory (LSTM) networks) have been used to model flow around cylinders (Eivazi et al. 2020; Hasegawa et al. 2020a), pitching airfoils (Eivazi et al. 2020), bluff bodies (Hasegawa et al. 2020b) and MFU plane Poiseuille flow (PPF) (Nakamura et al. 2021). Although these methods often do an excellent job of predicting chaotic dynamics, the models are not Markovian, so a true assessment of the dimension of the state in these models has to account for the degrees of freedom used to store the past history of the dynamics. These methods are also implemented in discrete rather than continuous time. These two properties are undesirable, because the underlying dynamics are Markovian and continuous in time, and not modelling them as such complicates applications and interpretations of the model. In particular, we want to use the model for state space analyses such as determination of periodic orbits (POs), where standard tools are available for ODEs that do not easily generalise to non-Markovian dynamic models.

Owing to these issues, we use neural ordinary differential equations (ODEs) (Chen et al. 2019). In neural ODEs, the right-hand side of an ODE is represented as a NN that is trained to reconstruct the time evolution of the data from snapshots of training data. Linot & Graham (2022) showed that this is an effective method for modelling the chaotic dynamics of the KSE. Rojas, Dengel & Ribeiro (2021) used neural ODEs to predict the periodic dynamics of flow around a cylinder, and Portwood et al. (2019) used neural ODEs to predict the kinetic energy and dissipation of decaying turbulence.

In this work we investigate the dynamics of MFU Couette flow. The idea behind the MFU, first introduced by Jiménez & Moin (1991), is to reduce the simulation domain to the smallest size that sustains turbulence, thus isolating the key components of the turbulent nonlinear dynamics. Using an MFU for Couette flow at transitional Reynolds number, Hamilton et al. (1995) outlined the regeneration cycle of wall-bounded turbulence called the ‘self-sustaining process’ (SSP), which we describe in more detail in § 3. This system was later analysed with covariant Lyapunov analysis by Inubushi, Takehiro & Yamada (2015), who found a Lyapunov time (the inverse of the leading Lyapunov exponent) of $\sim$48 time units.

Many low-dimensional models have been developed to recreate the dynamics of the SSP. The first investigation of this topic was by Waleffe (1997), who developed an 8-mode model for shear flow between free-slip walls generated by a spatially sinusoidal forcing. He selected the modes based on intuition from the SSP and performed a Galerkin projection onto these modes. Moehlis, Faisst & Eckhardt (2004) later added a mode to Waleffe's model that enables modification of the mean profile by the turbulence, and made some modifications to the chosen modes. In this ‘MFE’ (Moehlis, Faisst, Eckhardt) model, Moehlis et al. found exact coherent states (ECS), which we discuss in the following, that did not exist in the 8-mode model. In addition, Moehlis et al. (2002) used the POD modes on a domain slightly larger than the MFU to generate POD-Galerkin models. These low-dimensional models have been used as a starting point for testing data-driven models. For example, both LSTMs (Srinivasan et al. 2019) and a Koopman operator method with nonlinear forcing (Eivazi et al. 2021) have been used to attempt to reconstruct the MFE model dynamics. Borrelli et al. (2022) then applied these methods to PPF.

Finally, we note that a key approach to understanding complex nonlinear dynamical phenomena, such as the SSP of near-wall turbulence, is through study of the underlying state space structure of fixed points and POs. In the turbulence literature these are sometimes called ECS (Kawahara, Uhlmann & van Veen 2012; Graham & Floryan 2021). Turbulence organises around ECS in the sense that trajectories chaotically move between different such states. The first ECS found were fixed-point solutions in PCF (Nagata 1990). Following this work, Waleffe (1998) was able to connect ECS of PCF and PPF to the SSP. Later, more fixed-point ECS were found in MFU PCF and visualised by Gibson, Halcrow & Cvitanović (2008a). Unlike fixed points, which cannot capture dynamic phenomena at all, POs are able to represent key aspects of turbulent dynamics such as bursting behaviour. Kawahara & Kida (2001) found the first two POs for MFU PCF, one of which had statistics that agreed well with the SSP. Then, Viswanath (2007) found another PO and 4 new relative periodic orbits (RPOs) in this domain, and Gibson made these solutions available (Gibson et al. 2008b), along with a handful of others.

In the present work, we use autoencoders and neural ODEs, in a method we call ‘data-driven manifold dynamics’ (DManD) (Linot et al. 2023a), to build a reduced-order model (ROM) for turbulent MFU PCF (Hamilton et al. 1995). Section 2 outlines the details of the DManD framework. We then describe the details of the Couette flow in § 3.1, the results of the dimension reduction in § 3.2, and the DManD model's reconstruction of short- and long-time statistics in §§ 3.3 and 3.4, respectively. After showing that the models accurately reproduce these statistics, we compute RPOs for the model in § 3.5, finding several that are similar to previously known RPOs, as well as several that seem to be new. Finally, we summarise the results in § 4.

2. Framework

The fundamental ideas of the DManD framework are (1) that when modelling long-time dynamics of a dissipative system, only the finite-dimensional manifold $\mathcal {M}$ on which the dynamics lie needs to be considered, not the full state space, and (2) a low-dimensional coordinate system for this manifold and the dynamics in this coordinate system can be determined from data for the system. In general, the training data for the development of a DManD model come in the form of snapshots $\{\boldsymbol{u}_1,\boldsymbol{u}_2,\ldots,\boldsymbol{u}_M\}$, which are either the full state or measurements diffeomorphic to the full state (e.g. time delays (Takens 1981; Young & Graham 2023)). Here we consider full-state data $\boldsymbol{u}$ that live in an ambient space $\mathbb {R}^d$. We generate a time series of data by evolving this state forward in time according to

\begin{equation}
\dfrac{{\rm d}\boldsymbol{u}}{{\rm d}t}=\boldsymbol{f}(\boldsymbol{u}). \tag{2.1}
\end{equation}

(In the present context, this equation represents a fully resolved direct numerical simulation (DNS).) With the full state, we can then define a mapping to a low-dimensional state representation

\begin{equation}
\boldsymbol{h}=\boldsymbol{\chi}(\boldsymbol{u}), \tag{2.2}
\end{equation}

where $\boldsymbol{h}\in \mathbb {R}^{d_h}$ is a state in the manifold coordinate system. Finally, we define a mapping back to the full state

\begin{equation}
\boldsymbol{\tilde{u}}=\boldsymbol{\check{\chi}}(\boldsymbol{h}). \tag{2.3}
\end{equation}

For data that lie on a finite-dimensional invariant manifold of dimension $d_{\mathcal {M}}$, these functions can, in principle, reconstruct the state exactly (i.e. $\boldsymbol{\tilde {u}}=\boldsymbol{u}$). However, if the dimension $d_h$ is too low, or there are errors in the approximation of these functions, then $\boldsymbol{\tilde {u}}$ approximates the state. Here we also note that we refer to $\boldsymbol{h}$ as a state in the manifold coordinate system, but this coordinate representation is non-unique, and we seek one of an infinite number of diffeomorphic representations. Then, with this low-dimensional state representation, we can define an evolution equation

\begin{equation}
\dfrac{{\rm d}\boldsymbol{h}}{{\rm d}t}=\boldsymbol{g}(\boldsymbol{h}). \tag{2.4}
\end{equation}

The DManD model consists of the three functions $\boldsymbol{\chi}$, $\boldsymbol{\check {\chi }}$ and $\boldsymbol{g}$. By approximating these functions, the evolution of trajectories on the manifold can be performed entirely in the manifold coordinates, which requires far fewer operations than a full simulation, as $d_h\ll d$. We choose to approximate all of these functions using NNs, but other representations could be used. The parameters (weights and biases) of these NNs ($\boldsymbol{\theta}_E$, $\boldsymbol{\theta}_D$, $\boldsymbol{\theta}_g$, $\boldsymbol{\theta}_\phi$) will be updated from data to minimise losses described in the following.

Before continuing to the details of the approach, we pause here to emphasise some specific aspects of the model. In traditional ROMs, approximations are made to reduce the number of degrees of freedom, e.g. in POD-Galerkin the governing equations are linearly projected onto a low-dimensional subspace, with the inevitable loss of information. By contrast, if the dynamics indeed lie on a manifold embedded in the ambient state space, then in principle there is an exact mapping between coordinates in the ambient space and the manifold, i.e. a reduced-dimensional representation that involves no approximation. Similarly, on the invariant manifold, the dynamics are tangent to the manifold and can be exactly represented by an ODE on it. Thus, in this context there is no issue of ‘asymptotic convergence’ as the number of degrees of freedom in the model increases: the invariant manifold has a definite dimension $d_{\mathcal {M}}$, and once the manifold representation has that dimension, no further degrees of freedom should be necessary. In practice, determining $d_{\mathcal {M}}$ from data is challenging (see Zeng & Graham 2023), and even if it is known, numerical errors will be present in whatever method (here NNs) is used to approximate the multidimensional nonlinear functions $\boldsymbol{\chi}$, $\boldsymbol{\check {\chi}}$, and $\boldsymbol{g}$ that define the model.
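
The exactness argument above can be illustrated with a deliberately simple sketch. It assumes a linear system in $\mathbb{R}^{10}$ whose trajectories lie on a two-dimensional invariant subspace, so that the encoder, decoder and reduced dynamics are known in closed form; in DManD these maps are nonlinear and are learned by NNs. The names `chi`, `chi_check`, `f` and `g` mirror the symbols in the text, whereas the matrices, the step size and the fixed-step fourth-order Runge-Kutta integrator are choices made here purely for illustration.

```python
import numpy as np

def rk4(rhs, x0, dt, n_steps):
    """Integrate dx/dt = rhs(x) with fixed-step fourth-order Runge-Kutta."""
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        k1 = rhs(x)
        k2 = rhs(x + 0.5 * dt * k1)
        k3 = rhs(x + 0.5 * dt * k2)
        k4 = rhs(x + dt * k3)
        x = x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return x

rng = np.random.default_rng(1)
d, d_h = 10, 2
# Orthonormal basis of a 2-D invariant subspace of the 10-D ambient space (toy assumption).
Q = np.linalg.qr(rng.normal(size=(d, d_h)))[0]
B = np.array([[-0.1, 1.0], [-1.0, -0.1]])   # reduced dynamics: a damped rotation
F = Q @ B @ Q.T                              # ambient dynamics that leave span(Q) invariant

def f(u):            # full model, du/dt = f(u)
    return F @ u

def chi(u):          # encoder: ambient state -> manifold coordinates
    return Q.T @ u

def chi_check(h):    # decoder: manifold coordinates -> ambient state
    return Q @ h

def g(h):            # evolution equation in manifold coordinates
    return B @ h

u0 = Q @ np.array([1.0, 0.5])                      # initial condition on the subspace
u_full = rk4(f, u0, 0.01, 1000)                    # evolve in the ambient space
u_rom = chi_check(rk4(g, chi(u0), 0.01, 1000))     # encode, evolve in d_h = 2, decode
max_diff = np.max(np.abs(u_full - u_rom))
```

Here the two-dimensional model reproduces the ten-dimensional trajectory to round-off, and adding further degrees of freedom would not improve it. With learned nonlinear maps the same structure holds only up to the approximation error of the NNs.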

Now we return to the implementation of the DManD approach. First, we train $\boldsymbol{\chi}$ and $\boldsymbol{\check {\chi }}$ using an undercomplete autoencoder. This is a NN structure consisting of an encoder, which reduces dimension ($\boldsymbol{\chi}$), and a decoder, which expands dimension ($\boldsymbol{\check {\chi}}$). (An undercomplete autoencoder generates a lower-dimensional representation of the data ($d_h< d$) (Goodfellow, Bengio & Courville 2016). Overcomplete autoencoders also exist.) As mentioned in § 1, a common approach to dimension reduction is to project onto a set of POD modes so we will work with the data expressed in the POD basis, as detailed in § 3.2. Two specific approaches are used. POD gives the optimal linear projection in terms of reconstruction error, so in one approach we use this fact to train a so-called hybrid autoencoder. Here the encoder is written as the sum of POD and a correction in the form of a NN:

\begin{equation}
\boldsymbol{h}=\boldsymbol{\chi}(\boldsymbol{u};\boldsymbol{\theta}_E)=\boldsymbol{\mathsf{U}}_{d_h}^{\rm T}\boldsymbol{u}+\mathcal{E}(\boldsymbol{\mathsf{U}}_r^{\rm T}\boldsymbol{u};\boldsymbol{\theta}_E). \tag{2.5}
\end{equation}

In this equation, $\boldsymbol{\mathsf{U}}_k\in \mathbb {R}^{d \times k}$ is a matrix whose $k$ columns are the first $k$ POD modes as ordered by variance and $\mathcal {E}$ is a NN. The first term ($\boldsymbol{\mathsf{U}}_{d_h}^{\rm T}\boldsymbol{u}$) is the projection onto the leading $d_h$ POD modes, and the second term is the NN correction. The matrix $\boldsymbol{\mathsf{U}}_r$ in this term may either be a full change of basis with no approximation ($r=d$), or involve some dimension reduction ($d>r> d_h$).

For mapping back to the full state (decoding), we again sum POD with a correction

\begin{equation}
\boldsymbol{\tilde{u}}=\boldsymbol{\check{\chi}}(\boldsymbol{h};\boldsymbol{\theta}_D)=\boldsymbol{\mathsf{U}}_r([\boldsymbol{h},\textbf{0}]^{{\rm T}}+\mathcal{D}(\boldsymbol{h};\boldsymbol{\theta}_D)). \tag{2.6}
\end{equation}

Here, $[\boldsymbol{h},\textbf{0}]^{{\rm T}}$ is the $\boldsymbol{h}$ vector zero-padded to the correct size, and $\mathcal{D}$ is a NN. The first term is the POD mapping back to the full space, and the second term is a NN correction. These hybrid autoencoder operations act as a shortcut connection on the optimal linear dimension reduction, which we (Linot & Graham 2020) found useful for representing the data and achieving accurate reconstruction of $\boldsymbol{u}$. Yu et al. (2021) also took a similar approach with POD shortcut connections over each layer of the network. We determine the NN parameters $\boldsymbol{\theta}_E$ and $\boldsymbol{\theta}_D$ by minimising

\begin{align}
L&=\dfrac{1}{dK}\sum_{i=1}^K\|\boldsymbol{u}(t_i)-\boldsymbol{\check{\chi}}(\boldsymbol{\chi}(\boldsymbol{u}(t_i);\boldsymbol{\theta}_E);\boldsymbol{\theta}_D)\|^2\nonumber\\
&\quad +\dfrac{1}{d_hK}\sum_{i=1}^K\alpha \|\mathcal{E}(\boldsymbol{\mathsf{U}}_r^{\rm T}\boldsymbol{u}(t_i);\boldsymbol{\theta}_E)+\mathcal{D}_{d_h}(\boldsymbol{h}(t_i);\boldsymbol{\theta}_D)\|^2. \tag{2.7}
\end{align}

The first term in this loss is the mean-squared error (MSE) of the reconstruction $\boldsymbol{\tilde {u}}$, and the second term is a penalty that promotes accurate representation of the leading $d_h$ POD coefficients. In the second term, $\mathcal {D}_{d_h}$ denotes the leading $d_h$ elements of the decoder output. When this term vanishes (with $\alpha >0$), the autoencoder exactly matches the first $d_h$ POD coefficients. This penalty does not reduce the size of the correction by the encoder; rather, it promotes the removal of the encoder correction by the decoder. Here, and in the following, the norm is defined to be the $L_2$ norm $\|\boldsymbol{q}\|^2=\sum _{i=1}^N q_i^2$, so the normalisation in front of each term averages over the elements of the vector and over the batch of size $K$. To select $\alpha$ and the other hyperparameters of the NNs, we swept over candidate values and kept the best-performing ones. We discuss the details of the hyperparameter selection and the minimisation procedure in § 3.
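
To make the structure of this loss concrete, the following NumPy sketch evaluates it for a batch of snapshots. It assumes the hybrid encoder has the form $\boldsymbol{\mathsf{U}}_{d_h}^{\rm T}\boldsymbol{u}+\mathcal{E}(\boldsymbol{\mathsf{U}}_r^{\rm T}\boldsymbol{u})$ and the hybrid decoder the form $\boldsymbol{\mathsf{U}}_r([\boldsymbol{h},\textbf{0}]^{\rm T}+\mathcal{D}(\boldsymbol{h}))$, as described in the text. The one-hidden-layer networks, the helper names `mlp`, `init_mlp` and `hybrid_loss`, the synthetic data and the choice $r=d$ are assumptions for illustration, and no training is performed.

```python
import numpy as np

def mlp(params, x):
    """One-hidden-layer tanh network; params = (W1, b1, W2, b2)."""
    w1, b1, w2, b2 = params
    return np.tanh(x @ w1 + b1) @ w2 + b2

def init_mlp(rng, n_in, n_hidden, n_out, scale):
    """Random weights with zero biases; scale=0 switches the network off."""
    return (scale * rng.normal(size=(n_in, n_hidden)), np.zeros(n_hidden),
            scale * rng.normal(size=(n_hidden, n_out)), np.zeros(n_out))

def hybrid_loss(u, modes, d_h, r, theta_e, theta_d, alpha):
    """Reconstruction MSE plus the penalty on the summed encoder/decoder corrections."""
    n_batch, d = u.shape
    u_r = modes[:, :r]
    a = u @ u_r                                  # POD coefficients U_r^T u, shape (K, r)
    corr_e = mlp(theta_e, a)                     # encoder correction E(U_r^T u)
    h = a[:, :d_h] + corr_e                      # hybrid encoder output
    corr_d = mlp(theta_d, h)                     # decoder correction D(h), shape (K, r)
    padded = np.pad(h, ((0, 0), (0, r - d_h)))   # [h, 0], zero-padded to length r
    u_tilde = (padded + corr_d) @ u_r.T          # hybrid decoder output
    mse = np.sum((u - u_tilde) ** 2) / (d * n_batch)
    penalty = alpha * np.sum((corr_e + corr_d[:, :d_h]) ** 2) / (d_h * n_batch)
    return mse + penalty

rng = np.random.default_rng(0)
u = rng.normal(size=(200, 12)) @ rng.normal(size=(12, 12))   # synthetic snapshots (assumption)
modes = np.linalg.svd(u, full_matrices=False)[2].T            # POD modes as columns
d_h, r, alpha = 3, 12, 0.01

# With both corrections switched off, the hybrid autoencoder is plain POD truncation.
off_e = init_mlp(rng, r, 16, d_h, scale=0.0)
off_d = init_mlp(rng, d_h, 16, r, scale=0.0)
loss_pod = hybrid_loss(u, modes, d_h, r, off_e, off_d, alpha)
pod_mse = np.mean((u - u @ modes[:, :d_h] @ modes[:, :d_h].T) ** 2)

# Untrained random corrections, for comparison.
on_e = init_mlp(rng, r, 16, d_h, scale=0.1)
on_d = init_mlp(rng, d_h, 16, r, scale=0.1)
loss_random = hybrid_loss(u, modes, d_h, r, on_e, on_d, alpha)
```

When both corrections are zero, the loss reduces to the rank-$d_h$ POD reconstruction error, so training starts from the optimal linear result and can only improve on it if the optimiser finds useful corrections. This is one way to read the 'shortcut connection' interpretation given above.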

The second approach we use is a standard autoencoder, where, while still working in the POD basis, we do not learn the encoder and decoder in terms of deviations from POD projections but simply set $\boldsymbol{h}=\boldsymbol{\chi} (\boldsymbol{u};\boldsymbol{\theta}_E)=\mathcal{E}(\boldsymbol{\mathsf{U}}_r^{\rm T}\boldsymbol{u};\boldsymbol{\theta}_E)$ and $\boldsymbol{\tilde{u}}=\boldsymbol{\check{\chi}}(\boldsymbol{h};\boldsymbol{\theta}_D)=\boldsymbol{\mathsf{U}}_r\mathcal {D}(\boldsymbol{h};\boldsymbol{\theta}_D)$. In § 3.2 we contrast the standard and hybrid approaches. In Linot & Graham (2020) we found lower autoencoder error by performing the POD change of basis, and even lower error by taking the hybrid approach for chaotic data from the KSE. In the present context, we found the performance of the two approaches in the POD basis to be very similar, as further discussed in the following.

Next, we approximate $\boldsymbol{g}$ using a neural ODE. A drawback of training a single dense NN for $\boldsymbol{g}$ is that the resulting dynamics may become weakly unstable, with linear growth at long times (Linot & Graham 2022; Linot et al. 2023a). To avoid this, we use a ‘stabilised’ neural ODE approach by adding a linear damping term onto the output of the NN, giving

\begin{equation}
\dfrac{{\rm d}\boldsymbol{h}}{{\rm d}t}=\boldsymbol{g}(\boldsymbol{h};\boldsymbol{\theta}_g)=\boldsymbol{g}_{{NN}}(\boldsymbol{h};\boldsymbol{\theta}_g)+\boldsymbol{\mathsf{A}}\boldsymbol{h}. \tag{2.8}
\end{equation}

For training $\boldsymbol{g}_{{NN}}$ we integrate (2.8) over some time window $\tau$ from time $t_i$ to $t_i+\tau$ yielding

\begin{equation}
\boldsymbol{\tilde{h}}(t_i+\tau)=\boldsymbol{h}(t_i)+\int_{t_i}^{t_i+\tau}\left[\boldsymbol{g}_{{NN}}(\boldsymbol{h}(t);\boldsymbol{\theta}_g)+\boldsymbol{\mathsf{A}}\boldsymbol{h}(t)\right]{\rm d}t. \tag{2.9}
\end{equation}

We use the standard Dormand–Prince method (Dormand & Prince 1980) to perform this integration, but we note that the approach itself does not depend on the integration scheme, and different schemes can be used when training and deploying the model. Depending on the situation, one may either learn $\boldsymbol{\mathsf{A}}$ from data or fix it. Here we set it to the diagonal matrix

\begin{equation}
\boldsymbol{\mathsf{A}}_{ij}=-\beta {\boldsymbol{\mathsf{\delta}}}_{ij}{\sigma}_i(\boldsymbol{h}), \tag{2.10}
\end{equation}

where ${\sigma}_i(\boldsymbol{h})$ is the standard deviation of the $i$th component of $\boldsymbol{h}$, $\beta$ is a tunable parameter and ${\boldsymbol{\mathsf{\delta}}}_{ij}$ is the Kronecker delta. In Linot & Graham (2022) and Linot et al. (2023a), we found that without this stabilising linear term, small errors in the dynamics would eventually grow resulting in $\boldsymbol{g}_{{NN}}$ outputting a constant value, which led to continuous linear growth away from the attractor. Including the linear damping term effectively eliminates this behaviour by attracting trajectories back to the origin, preventing them from moving far away from the training data. In § 3.4 we show that this approach drastically improves the long-time performance of these models.
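The effect of the damping term can be illustrated with a minimal sketch. It assumes the stabilised right-hand side has the form $\boldsymbol{g}(\boldsymbol{h})=\boldsymbol{g}_{NN}(\boldsymbol{h})+\boldsymbol{\mathsf{A}}\boldsymbol{h}$ with $\mathsf{A}_{ij}=-\beta\delta_{ij}\sigma_i$, which is consistent with the description above but is not reproduced from the equations themselves. The names `make_stabilised_rhs` and `rk4_integrate`, the values of `beta` and `sigma`, and the constant "network" are all hypothetical, and a fixed-step RK4 integrator stands in for the Dormand–Prince scheme.

```python
import numpy as np

def make_stabilised_rhs(g_nn, sigma, beta):
    """Return g(h) = g_nn(h) + A h with A_ij = -beta * delta_ij * sigma_i (assumed form)."""
    damping = -beta * np.asarray(sigma, dtype=float)  # diagonal entries of A
    def rhs(h):
        return g_nn(h) + damping * h
    return rhs

def rk4_integrate(rhs, h0, t_final, dt):
    """Fixed-step RK4; a simple stand-in for an adaptive Dormand-Prince solver."""
    h = np.array(h0, dtype=float)
    n_steps = int(round(t_final / dt))
    for _ in range(n_steps):
        k1 = rhs(h)
        k2 = rhs(h + 0.5 * dt * k1)
        k3 = rhs(h + 0.5 * dt * k2)
        k4 = rhs(h + dt * k3)
        h = h + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return h

# Toy failure mode: the network output has saturated to a constant vector c.
c = np.array([0.5, -0.2])
constant_nn = lambda h: c
sigma = np.array([1.0, 2.0])  # hypothetical per-component standard deviations
beta = 0.1                    # hypothetical damping strength

h0 = np.zeros(2)
h_undamped = rk4_integrate(constant_nn, h0, t_final=200.0, dt=0.05)
h_damped = rk4_integrate(make_stabilised_rhs(constant_nn, sigma, beta),
                         h0, t_final=200.0, dt=0.05)
```

In this toy setting the undamped state grows linearly as $\boldsymbol{c}t$, whereas the damped state relaxes to the bounded fixed point $c_i/(\beta\sigma_i)$.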

We then determine the parameters $\boldsymbol{\theta}_g$ by minimising the difference between the predicted state $\boldsymbol{\tilde {h}}(t_i+\tau )$ and the true state $\boldsymbol{h}(t_i+\tau )$, averaged over the data:

\begin{equation} J=\dfrac{1}{d_hK}\sum_{i=1}^K(\|\boldsymbol{h}(t_i+\tau)-\boldsymbol{\tilde{h}}(t_i+\tau)\|^2). \end{equation}

For clarity, we show the specific loss we use, which sums over only a single snapshot forward in time at a fixed $\tau$. More generally, the loss can be formulated for arbitrary snapshot spacing and for multiple snapshots forward in time. To compute the gradient of $J$ with respect to the NN parameters $\boldsymbol{\theta}_g$, automatic differentiation can be used to backpropagate through the ODE solver that is used to compute the time integral in (2.9), or an adjoint problem can be solved backwards in time (Chen et al. 2019). The adjoint method uses less memory than backpropagation, but $\boldsymbol{h}$ is low-dimensional and we will select a short time window for training, so we choose to backpropagate through the solver.
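As a concrete reading of the loss $J$, the following sketch evaluates it for arrays of true and predicted latent states. The function name `one_step_loss` and the array layout (one row per snapshot) are assumptions made for illustration; in practice the predictions would come from integrating the neural ODE over the window $\tau$.

```python
import numpy as np

def one_step_loss(h_true, h_pred):
    """J = 1/(d_h K) * sum_i ||h(t_i + tau) - h_tilde(t_i + tau)||^2.

    Both arrays have shape (K, d_h): K snapshots of a d_h-dimensional state.
    """
    h_true = np.asarray(h_true, dtype=float)
    h_pred = np.asarray(h_pred, dtype=float)
    K, d_h = h_true.shape
    return np.sum((h_true - h_pred) ** 2) / (d_h * K)

# Small hand-checkable example with K = 3 snapshots and d_h = 2.
h_true = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
h_pred = h_true + np.array([0.1, -0.2])  # constant prediction error per component
J = one_step_loss(h_true, h_pred)
```

With this normalisation $J$ is simply the mean squared error over all components and snapshots, so it can be compared across models with different $d_h$.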

So far this approach to approximating $\boldsymbol{\chi}$, $\boldsymbol{\check {\chi}}$ and $\boldsymbol{g}$ is general and does not directly account for the fact that the underlying equations are often invariant to certain symmetry operations. For example, one of the symmetries in PCF is a continuous translation symmetry in $x$ and $z$ (i.e. any solution shifted to another location in the domain gives another solution). This poses an issue for training, because, in principle, the training data must include all these translations to accurately model the dynamics under any translation. We discuss these and other symmetries of PCF in § 3.1.

In practice, accounting for continuous symmetries is most important along directions that sample different phases very slowly. For PCF, the mean flow is in the $x$ direction, leading to good phase sampling along this direction. However, there is no mean flow in the $z$ direction, so sampling all phases relies on the slow phase diffusion in that direction. Therefore, we only explicitly account for the $z$-phase in § 3, but in the current discussion we present the general framework accounting for all continuous symmetries.

One intuitive way to account for the phase would be to augment the data with phase-shifted versions of the data. However, this suffers from two drawbacks: (1) including all of these phases can drastically slow down the training procedure and (2) data augmentation does not guarantee that the resulting dynamics are equivariant to phase shifts. Instead, to address the issue of continuous translations, we add an additional preprocessing step to the data, using the method of slices (Budanur, Borrero-Echeverry & Cvitanović 2015a; Budanur et al. 2015b) to split the state $\boldsymbol{u}$ into a pattern $\boldsymbol{u}_p\in \mathbb {R}^d$ and a phase $\boldsymbol{\phi} \in \mathbb {R}^c$. Pérez De Jesús & Graham (2023) found for Kolmogorov flow that this step leads to an order of magnitude reduction in mean squared reconstruction error. The number of continuous translation symmetries for which we explicitly account determines $c$. We discuss the details of computing the pattern and the phase in § 3.1.

In transforming the data to pattern and phase dynamics no information about the system is lost, and the autoencoder and neural ODE become equivariant to translations. As shown in Linot & Graham (2020) and Pérez De Jesús & Graham (2023), autoencoders perform better on the pattern dynamics than on the state without phase alignment. In addition, separating the pattern and phase is useful because the evolution of both the pattern and the phase depends only on the pattern. Thus, we simply replace $\boldsymbol{u}$ with $\boldsymbol{u}_p$ in all the above equations and then write one additional ODE for the phase

We then fix the parameters of $\boldsymbol{g}$ to evolve $\boldsymbol{h}$ (from $\boldsymbol{u}_p$) forward in time and use that to make a phase prediction

Finally, we determine the parameters $\boldsymbol{\theta}_\phi$ to minimise the difference between the predicted phase $\boldsymbol{\tilde {\phi }}(t_i+\tau )$ and the true phase $\boldsymbol{\phi} (t_i+\tau )$

\begin{equation} J_\phi=\dfrac{1}{cK}\sum_{i=1}^K(\|\boldsymbol{\phi}(t_i+\tau)-\boldsymbol{\tilde{\phi}}(t_i+\tau)\|^2), \end{equation}

using the method described previously to compute the gradient of $J_\phi$.

3. Results

3.1. Description of PCF data

In the following sections we apply DManD to DNS of turbulent PCF in a MFU domain. Specifically, we consider the well-studied Hamilton, Kim and Waleffe (HKW) domain (Hamilton et al. 1995). We made this selection so that we can compare our DManD results with the analysis of the SSP in this domain, with other Galerkin-based ROMs and with known unstable periodic solutions in this domain.

For PCF we solve the Navier–Stokes equations (NSEs)

for a fluid confined between two plates moving in opposite directions with the same speed. Equation (3.1) is the non-dimensionalised form of the equations with velocities in the streamwise $x\in [0,L_x]$, wall-normal $y\in [-1,1]$ and spanwise $z\in [0,L_z]$ directions defined as $\boldsymbol {v}=[v_x,v_y,v_z]$ and pressure $p$. We solve this equation for a domain with periodic boundary conditions in $x$ and $z$ ($\boldsymbol {v}(0,y, z)=\boldsymbol {v}(L_x,y, z), \boldsymbol {v}(x,y, 0)=\boldsymbol {v}(x,y, L_z)$) and no-slip, no-penetration boundary conditions in $y$ ($v_x(x,\pm 1, z)=\pm 1, v_y(x,\pm 1, z)=v_z(x,\pm 1, z)=0$). The complexity of the flow increases as the Reynolds number $Re$ increases and the domain size $L_x$ and $L_z$ increase. Here we use the HKW cell, which is at $Re=400$ with a domain size $[L_x,L_y,L_z]=[1.75{\rm \pi},2,1.2{\rm \pi} ]$ (Hamilton et al. 1995). The HKW cell is one of the simplest flows that sustains turbulence for extended periods of time before relaminarising. We chose to use this flow because it is well studied (refer to § 1), it isolates the SSP (Hamilton et al. 1995) and a library of ECS exists for this domain (Gibson et al. 2008b). Here we are interested in modelling the turbulent dynamics, so we will remove data upon relaminarisation as detailed in the following.

Equation (3.1), under the boundary conditions described, is invariant (and its solutions equivariant) under the discrete symmetries of point reflections about $[x,y,z]=[0,0,0]$

reflection about the $z=0$ plane

and rotation by ${\rm \pi}$ about the $z$-axis

In addition to the discrete symmetries, there are also continuous translation symmetries in $x$ and $z$

We incorporate all these symmetries in the POD representation (Smith et al. 2005), as we discuss further in § 3.2. Then, we use the method of slices (Budanur et al. 2015a) to phase align in the $z$ direction. By phase aligning in $z$ we fix the location of the low-speed streak. Without the alignment in $z$, models performed poorly because the models needed to learn how to represent every spatial shift of every snapshot. In what follows, we only consider phase alignment in $z$, but we note that extending this work to phase alignment in $x$ is straightforward. To phase align the data, we use the first Fourier mode method of slices (Budanur et al. 2015a). First, we compute a phase by taking the Fourier transform of the streamwise velocity in $x$ and $z$ ($\hat {v}_x(k_x,y,k_z)=\mathcal {F}_{x,z}(v_x)$) at a specific $y$ location ($y_1$) to compute the phase

The variables $k_x$ and $k_z$ are the streamwise and spanwise wavenumbers. We select $y_1$ to be one grid point off the bottom wall. Any $y$ location should work for the phase alignment, but we found choosing the point in the viscous sublayer resulted in a more gradual change of the phase. Rapid changes in the phase make prediction difficult and can require rescaling time, as in Budanur et al. (2015b), which we want to avoid. Then we compute the pattern dynamics by using the Fourier shift theorem to set the phase to 0 (i.e. move the low-speed streak to the centre of the channel)
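The phase computation and shift described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it takes the phase from the $(k_x,k_z)=(0,1)$ Fourier coefficient of $v_x$ at a chosen wall-normal index, uses NumPy's FFT sign convention, and assumes the array layout `(3, Nx, Ny, Nz)` with component 0 being $v_x$. The function names `z_phase` and `phase_align_z` are hypothetical.

```python
import numpy as np

def z_phase(v, iy):
    """Phase of the (k_x, k_z) = (0, 1) Fourier mode of v_x at wall-normal index iy.

    v has shape (3, Nx, Ny, Nz); component 0 is taken to be v_x (assumed layout).
    """
    vx_hat = np.fft.fft2(v[0, :, iy, :])  # transform over x and z
    return np.angle(vx_hat[0, 1])

def phase_align_z(v, iy):
    """Shift v in z so the reference mode has zero phase; return (pattern, phase)."""
    nz = v.shape[-1]
    phi = z_phase(v, iy)
    kz = np.fft.fftfreq(nz, d=1.0 / nz)   # integer spanwise wavenumbers
    v_hat = np.fft.fft(v, axis=-1)
    # Fourier shift theorem: multiplying mode k_z by exp(-i k_z phi) translates in z.
    v_shifted = np.fft.ifft(v_hat * np.exp(-1j * kz * phi), axis=-1)
    return v_shifted.real, phi

rng = np.random.default_rng(0)
v = rng.standard_normal((3, 8, 5, 16))    # synthetic field, not DNS data
pattern, phi = phase_align_z(v, iy=1)

# A spanwise-translated copy of the same field maps to the same pattern.
pattern_of_shifted, _ = phase_align_z(np.roll(v, 3, axis=-1), iy=1)
```

The last two lines show the point of the construction: every spanwise translate of a snapshot is mapped to one representative pattern, so the models never need to learn the shifts themselves.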

We generate turbulent PCF trajectories using the pseudo-spectral Channelflow code developed by Gibson (2012) and Gibson et al. (2021). In this code, the velocity and pressure fields are projected onto Fourier modes in $x$ and $z$ and Chebyshev polynomials of the first kind in $y$. These coefficients are evolved forward in time first using the multistage SMRK2 scheme (Spalart, Moser & Rogers 1991), then, after taking multiple timesteps, using the multistep Adams–Bashforth backward-differentiation 3 scheme (Peyret 2002). At each timestep, a pressure boundary condition is found such that incompressibility is satisfied at the wall (${\rm d}v_y/{{\rm d}\kern 0.05em y}=0$) using the influence matrix method and tau correction developed by Kleiser & Schumann (1980).

Data were generated with $\Delta t=0.0325$ on a grid of $[N_x,N_y,N_z]=[32,35,32]$ in $x$, $y$ and $z$ for the HKW cell. Starting from random divergence-free initial conditions, we ran simulations forward for either $10\,000$ time units or until relaminarisation. Then we dropped the first 1000 time units as transient data and the last $1000$ time units to avoid laminar data, and repeated with a new initial condition until we had $91\,562$ time units of data stored at intervals of one time unit. We split these data into $80\,\%$ for training and $20\,\%$ for testing. Finally, we preprocess the data by computing the mean over snapshots and the $x$ and $z$ directions $\bar {\boldsymbol {v}}(y)$ from the training data and subtracting it from all data $\boldsymbol {v}'=\boldsymbol {v}-\bar {\boldsymbol {v}}$, and then we compute the pattern $\boldsymbol {v}'_p$ and the phase $\phi$ as described previously. The pattern $\boldsymbol{u}_p$ described in § 2 is $\boldsymbol {v}'_p$ flattened into a vector (i.e. $d=3N_xN_yN_z$). The POD and NN training use only the training data, and all comparisons use test data unless otherwise specified.
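For the grid quoted above, the full-state dimension works out to $d=3\times 32\times 35\times 32=107\,520$. The sketch below checks this arithmetic and illustrates the mean subtraction on small synthetic arrays. The array layout `(K, 3, Nx, Ny, Nz)` and the function name `subtract_training_mean` are assumptions for illustration.

```python
import numpy as np

N_x, N_y, N_z = 32, 35, 32
d = 3 * N_x * N_y * N_z  # three velocity components per grid point

def subtract_training_mean(train, test):
    """Remove the mean profile v_bar(y), computed from training snapshots only.

    Arrays have shape (K, 3, Nx, Ny, Nz) (assumed layout). The mean is taken
    over snapshots, x and z, leaving a profile in y for each component.
    """
    v_bar = train.mean(axis=(0, 2, 4), keepdims=True)  # shape (1, 3, 1, Ny, 1)
    return train - v_bar, test - v_bar, v_bar

# Small synthetic arrays stand in for the DNS snapshots.
rng = np.random.default_rng(1)
train = rng.standard_normal((6, 3, 4, 5, 4)) + 2.0
test = rng.standard_normal((2, 3, 4, 5, 4)) + 2.0
train_fluct, test_fluct, v_bar = subtract_training_mean(train, test)
```

Computing $\bar{\boldsymbol{v}}$ from the training set alone keeps the test data from influencing any quantity used in model construction.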

3.2. Dimension reduction and dynamic model construction

3.2.1. Linear dimension reduction with POD: from ${O}(10^5)$ to ${O}(10^3)$

The first task in DManD for this Couette flow data is finding a low-dimensional parameterisation of the manifold on which the long-time dynamics lie. We parameterise this manifold in two steps. First, we reduce the dimension down from $d= {O}(10^5)$ to $d_{{POD}}={O}(10^3)$ with the POD and, second, we use an autoencoder to reduce the dimension down to $d_h$, which ideally is equal to $d_{\mathcal {M}}$. The first step is simply a preprocessing step to reduce the size of the data, which reduces the number of parameters in the autoencoder. Due to Whitney's embedding theorem (Whitney 1936, 1944), we know that as long as $d_{\mathcal {M}}< d_{POD}/2$, then this POD representation is diffeomorphic to the full state. As we show later, the manifold dimension $d_{\mathcal {M}}$ appears to be far lower than $d_{POD}/2$, so no information of the full state should be lost with this first step.

In other words, there are four different spaces for representing the state in this process. There is the (infinite-dimensional) solution space of the NSEs, the $d$-dimensional data space generated by the DNS, which we will refer to as the ‘full state’, the $d_{POD}$-dimensional POD representation of the full state and the $d_h$-dimensional representation in the manifold coordinate system. We assume that our high-resolution DNS solution accurately represents a true solution of the NSEs, and further assume that this solution lies on a low-dimensional manifold of dimension $d_{\mathcal {M}}$. Then, given a sufficient number of dimensions, the POD, followed by the autoencoder, should provide a representation diffeomorphic to the full state. However, we cannot know that a sufficient number of dimensions has been chosen until after training the models.

The POD originates with the question of what function $\boldsymbol {\varPhi }$ maximises

where $\langle {\cdot }\rangle$ is the ensemble average and the inner product is defined to be

with the corresponding energy norm $\|\boldsymbol {q}\|_E^2=(\boldsymbol {q},\boldsymbol {q})_E$. Solutions $\boldsymbol {\varPhi }^{(n)}$ to this problem satisfy the eigenvalue problem

\begin{equation} \sum_{j=1}^3 \int_0^{L_x}\int_{-1}^1 \int_0^{L_z} \langle v'_i(\boldsymbol{x}, t) v_j^{\prime*}(\boldsymbol{x}^{\prime}, t)\rangle \varPhi_j^{(n)}(\boldsymbol{x}^{\prime})\, {\rm d}\boldsymbol{x}^{\prime}=\lambda_i \varPhi_i^{(n)} (\boldsymbol{x}) \end{equation}

(Smith et al. 2005; Holmes et al. 2012). Unfortunately, upon approximating these integrals, with the trapezoidal rule for example, this becomes a $d \times d$ matrix, making computation intractable. Furthermore, computing the average in (3.10), without any modifications, results in POD modes that fail to preserve the underlying symmetries described previously.

In order to make this problem computationally tractable, and preserve symmetries, we apply the POD method used in Smith et al. (2005), with the slight difference that we first subtract off the mean of the state before performing the analysis. The first step in this procedure is to treat the POD modes as Fourier modes in both the $x$ and $z$ directions. Holmes et al. (2012) showed that for translation-invariant directions, Fourier modes are the optimal POD modes. This step transforms the eigenvalue problem into

\begin{equation} L_x L_z \sum_{j=1}^3 \int_{-1}^1\langle \hat{v}'_i(k_x,y^{\prime},k_z,t) \hat{v}_j^{\prime*}(k_x,y^{\prime},k_z,t)\rangle \varphi_{jk_xk_z}^{(n)}(y^{\prime}) \,{{\rm d}\kern0.05em y}^{\prime}=\lambda_{k_xk_z}^{(n)} \varphi_{ik_xk_z}^{(n)}(y), \end{equation}

which reduces the $d \times d$ eigenvalue problem down to a $3 N_y \times 3 N_y$ eigenvalue problem for every wavenumber pair $(k_x,k_z)$ of Fourier coefficients. We used $5000$ snapshots evenly sampled over the training data to compute the POD modes. Then, to account for the discrete symmetries, the data are augmented such that the mean in (3.11) is computed by adding all the discrete symmetries of each snapshot, i.e. we compute $\langle \hat {v}'_i \hat {v}_j^{\prime *}\rangle$ for $\boldsymbol {v}$, $\mathcal {P}\boldsymbol {v}$, $\mathcal {R}\boldsymbol {v}$ and $\mathcal {RP}\boldsymbol {v}$ and average the results.
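To make the size reduction concrete: with $N_y=35$, each wavenumber pair requires only a $105\times 105$ Hermitian eigenvalue problem instead of one of size $107\,520\times 107\,520$. The sketch below solves one such problem for synthetic coefficients. It is a simplified illustration: uniform quadrature weights in $y$ are assumed (a Chebyshev grid would need proper weights), the prefactor $L_xL_z$ and the averaging over discrete symmetries are omitted, and the function name `pod_modes_one_wavenumber` is hypothetical.

```python
import numpy as np

def pod_modes_one_wavenumber(a_hat):
    """POD of Fourier coefficients at a single (k_x, k_z).

    a_hat: complex array of shape (K, 3 * N_y), one row per snapshot.
    Uniform quadrature weights in y are assumed for brevity.
    """
    K = a_hat.shape[0]
    C = a_hat.T @ a_hat.conj() / K         # C_ij = <a_i a_j^*>, Hermitian
    eigvals, eigvecs = np.linalg.eigh(C)   # real eigenvalues, ascending order
    order = np.argsort(eigvals)[::-1]      # re-sort to descending
    return eigvals[order], eigvecs[:, order]

N_y = 35
rng = np.random.default_rng(2)
# Synthetic complex coefficients stand in for the Fourier-transformed snapshots.
a_hat = (rng.standard_normal((200, 3 * N_y))
         + 1j * rng.standard_normal((200, 3 * N_y)))
lam, phi = pod_modes_one_wavenumber(a_hat)
```

Because the covariance matrix is Hermitian, `numpy.linalg.eigh` returns real eigenvalues and orthonormal modes, and the eigenvalues sum to the mean energy of the coefficients at that wavenumber pair.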

This analysis gives us POD modes

and eigenvalues $\lambda _{k_xk_z}^{(n)}$. The projection onto these modes results in complex values unless $k_x=k_z=0$. We sort the modes from largest eigenvalue to smallest eigenvalue ($\lambda _i$) and project the phase-aligned data (i.e. $\boldsymbol {v}'_p$, as described in § 3.1) onto the leading $256$ modes, giving us a vector of POD coefficients $\boldsymbol{a}(t)$. A majority of these modes are complex (i.e. 2 degrees of freedom), so projecting onto these modes results in a $502$-dimensional system, i.e. $d_{POD}=502$. In figure 1(a) we plot the eigenvalues, which show a rapid drop and then a long tail that contributes little to the energy content. By dividing the eigenvalues of the leading $256$ modes by the total, we find these modes contain $99.8\,\%$ of the energy. To further illustrate that $256$ modes is sufficient to represent the state in this case, we consider the reconstruction of statistics after projecting onto the POD modes. In figure 1(b) we show the reconstruction of four components of the Reynolds stress, $\langle v^{\prime 2}_x\rangle$, $\langle v^{\prime 2}_z\rangle$, $\langle v^{\prime 2}_y\rangle$ and $\langle v^{\prime }_xv^{\prime }_y\rangle$. The projection onto POD modes matches all of these quantities extremely well.
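The count $d_{POD}=502$ can be unpacked with a short calculation. Assuming each retained mode contributes either one degree of freedom (real, $k_x=k_z=0$) or two (complex), 256 modes giving 502 degrees of freedom implies 246 complex and 10 real modes. This split is an inference from the stated numbers rather than a figure quoted in the text. The helper names below are hypothetical, and `energy_fraction` shows one way the retained-energy ratio could be evaluated.

```python
def count_real_and_complex(n_modes, n_dof):
    """Solve n_real + n_complex = n_modes and n_real + 2 * n_complex = n_dof."""
    n_complex = n_dof - n_modes
    n_real = n_modes - n_complex
    return n_real, n_complex

def energy_fraction(eigenvalues, r):
    """Fraction of the total eigenvalue sum carried by the r largest eigenvalues."""
    ordered = sorted(eigenvalues, reverse=True)
    return sum(ordered[:r]) / sum(ordered)

n_real, n_complex = count_real_and_complex(n_modes=256, n_dof=502)

# Toy spectrum (not the paper's eigenvalues) to show the energy-fraction call.
toy_fraction = energy_fraction([4.0, 3.0, 2.0, 1.0], r=2)
```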

Figure 1. (a) Eigenvalues of POD modes sorted in descending order. (b) Components of the Reynolds stress for data generated by the DNS and this data projected onto 256 POD modes. In (b) the curves are, from top to bottom, $\langle v^{\prime 2}_x\rangle$, $\langle v^{\prime 2}_z\rangle$, $\langle v^{\prime 2}_y\rangle$ and $\langle v^{\prime }_xv^{\prime }_y\rangle$.

3.2.2. Nonlinear dimension reduction with autoencoders: from ${O}(10^3)$ to ${O}(10^1)$

Now that we have converted the data to POD coefficients and filtered out the low-energy modes, we next train an autoencoder to perform nonlinear dimension reduction. However, we first ‘preprocess’ the POD coefficients by normalising them before using them in the autoencoder. It is common practice before NN training to subtract the mean and divide by the standard deviation of each component. We do not take this approach here because the standard deviation of the higher POD coefficients, which contribute less to the reconstruction, is much smaller than that of the lower POD coefficients. In order to retain the important information in the magnitudes, but put the values in a more amenable form for NN training, we instead normalise the POD coefficients by subtracting the mean and dividing by the maximum standard deviation.
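The normalisation just described can be sketched as below. The function name `normalise_pod_coefficients` and the array layout (one row per snapshot) are assumptions, as is the choice to compute the statistics from the training set only, which follows the convention stated in § 3.1.

```python
import numpy as np

def normalise_pod_coefficients(a_train, a_other):
    """Subtract the per-component mean, then divide by the largest standard deviation.

    Using one global scale keeps the relative magnitudes of the coefficients,
    unlike per-component standardisation, which would set every std to 1.
    """
    mean = a_train.mean(axis=0)
    scale = a_train.std(axis=0).max()
    return (a_train - mean) / scale, (a_other - mean) / scale, mean, scale

# Synthetic coefficients whose spread decays with mode index.
rng = np.random.default_rng(3)
stds = np.array([10.0, 3.0, 1.0, 0.1])
a_train = rng.standard_normal((500, 4)) * stds + 5.0
a_test = rng.standard_normal((100, 4)) * stds + 5.0
a_train_n, a_test_n, mean, scale = normalise_pod_coefficients(a_train, a_test)
```

After this step the leading coefficient has unit standard deviation and the higher coefficients remain proportionally smaller, so the reconstruction loss still weights them by their contribution.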

With the above preprocessing step completed, we now turn to the reduction of dimension with the nonlinear approach enabled by the autoencoder structure. We consider three approaches to reducing the dimension of the normalised POD coefficient vector $\boldsymbol{a}$: (1) training a hybrid autoencoder; (2) training a standard autoencoder; and (3) linear projection onto a small set of POD modes. We describe the first two approaches in § 2, and note that the POD projection onto $256$ (complex) modes can be written as $\boldsymbol{a}=\boldsymbol{\mathsf{U}}_r^{\rm T}\boldsymbol{u}$. The third approach corresponds to setting $\mathcal {E}$ and $\mathcal {D}$ to zero in (2.5) and (2.6).

To optimise the parameters of the hybrid and standard autoencoders, we use an Adam optimiser (Kingma & Ba Reference Kingma and Ba2015) in Keras (Chollet Reference Chollet2015) to minimise the loss in (2.7) with the normalised POD coefficients as inputs. We train for 500 epochs with a learning rate scheduler that drops the learning rate from $10^{-3}$ to $10^{-4}$ after 400 epochs. At this point, we see no improvement in the reconstruction error. For the hybrid autoencoder approach, we set $\alpha =0.01$, and for the standard autoencoder $\alpha =0$ (in practice, this term is not included). Table 1 includes additional NN architecture details. We determined these network parameters through a manual parameter sweep by varying the shape and activation functions of the NNs to achieve the lowest error without excessive computational cost.
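As a rough illustration of the schedule described above, the following sketch expresses the learning rate as a function of a zero-based epoch index. The function name and its defaults are assumptions made for illustration; how the schedule was actually implemented is not stated in the text.

```python
def learning_rate(epoch, initial_lr=1e-3, final_lr=1e-4, drop_epoch=400):
    """Piecewise-constant schedule: initial_lr before drop_epoch, final_lr after."""
    return initial_lr if epoch < drop_epoch else final_lr

# Learning rate used in each of the 500 epochs (epoch index starts at 0).
schedule = [learning_rate(epoch) for epoch in range(500)]
```

A function of this shape is what a callback-based scheduler in a deep learning library would typically wrap.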

Table 1. Architectures of NNs. ‘Shape’ indicates the dimension of each layer, ‘Activation’ the corresponding activation functions and ‘sig’ is the sigmoid activation. ‘Learning rate’ gives the learning rate at multiple times during training. We dropped the learning rate at even intervals.

In figure 2(a) we show the relative reconstruction error for the three approaches over a range of dimensions $d_h$. We compute this error as the energy norm of the difference between the state in the ambient space and the predicted state, divided by the energy norm of the state in the ambient space and averaged over the test data. We use NNs with the same architectures for both the standard and the hybrid autoencoder approaches. Due to the variability introduced into autoencoder training by randomly initialised weights and stochasticity in the optimisation, we show the error for four separately trained autoencoders at each $d_h$. We see that the autoencoders perform much better than POD in the range of dimensions considered here.
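The error measure described above can be sketched as follows. This is a minimal example under two assumptions: snapshots are stored as rows of a NumPy array, and the Euclidean norm stands in for the energy norm (the two differ in general by a quadrature weighting). The name `relative_error` is hypothetical.

```python
import numpy as np

def relative_error(u_true, u_pred):
    """Mean over snapshots of ||u_true - u_pred|| / ||u_true||.

    Rows are snapshots. The Euclidean norm is used as a stand-in
    for the energy norm.
    """
    num = np.linalg.norm(u_true - u_pred, axis=1)
    den = np.linalg.norm(u_true, axis=1)
    return float(np.mean(num / den))

# Two toy snapshots with per-snapshot relative errors of 1/5 and 1/2.
u_true = np.array([[3.0, 4.0], [0.0, 2.0]])
u_pred = np.array([[3.0, 3.0], [0.0, 1.0]])
err = relative_error(u_true, u_pred)  # (0.2 + 0.5) / 2 = 0.35
```

Note that the ratio is formed per snapshot before averaging, so snapshots with small norm are weighted as heavily as energetic ones.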

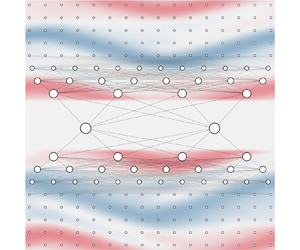

Figure 2. (a) Relative error in the energy norm on test data for POD, standard autoencoders and hybrid autoencoders at various dimensions $d_h$. At each dimension there are four standard and four hybrid autoencoders. (b) The centreline streamwise velocity for three examples in the DNS and reconstructed using the hybrid autoencoder with $d_h=18$. The relative error of reconstruction from top to bottom is $0.021$, $0.071$ and $0.048$.

The performance of the standard and hybrid autoencoder approaches is very similar, and the MSE begins to level off for $d_h\gtrsim 18$. Given a perfect autoencoder (i.e. one that has latent dimension $d_h\geq d_{\mathcal {M}}$ and sufficient capacity to determine an exact mapping between the ambient space and the manifold), the error in this figure ideally should vanish once $d_h$ becomes high enough; however, there is always some error in the autoencoder, either due to finite capacity or limitations in the training process, that prevents this from happening. Figure 2(b) shows three examples of the flowfields and their reconstruction for a hybrid autoencoder at $d_h=18$. This autoencoder captures the key structures of these snapshots. The results in the following use the hybrid approach. We took this approach because the low-dimensional representations of the hybrid autoencoders displayed less variability in predictions from trial to trial than those of the standard autoencoders. This led, in turn, to less variability when training neural ODEs to predict the time dynamics of the different low-dimensional representations of these autoencoders.

Before discussing how to use the low-dimensional representations from these autoencoders to train stabilised neural ODEs, we briefly mention convolutional neural networks (CNNs). These could be a reasonable choice for the autoencoder in the framework outlined previously. We chose not to use them because empirically we found lower errors using dense NNs in the POD basis for simple chaotic systems such as the KSE. Once in the POD basis, a CNN holds no advantage over a densely connected network because there are no longer spatial connections between the POD coefficients. In addition, although standard CNNs are invariant to small translations of the input, they are not equivariant to such translations, which is the property we desire. Separating out pattern and phase variables ensures equivariance for any function approximation of $\boldsymbol{\chi}$ and $\boldsymbol{\check {\chi}}$, making it an appealing choice over trying to constrain the structure of the NN to ensure equivariance.

3.2.3. Neural ODE training

After training four autoencoders at each dimension $d_h$, we chose a set of damping parameters, $\beta$, and, for each, trained four stabilised neural ODEs for all four autoencoders at every dimension $d_h$. This results in $16$ models at every $d_h$ and $\beta$. The final $\beta$ value of $0.1$ was selected so that long-time trajectories neither blew up nor decayed too strongly. We trained each of these models to predict one time unit ahead (i.e. $\tau =1$) because predicting further ahead took longer to train and did not improve results. In Linot & Graham (Reference Linot and Graham2022) we ran a more extensive study on the KSE of varying this parameter, where we found that the predictive capabilities did not start to deteriorate until the time window approached a Lyapunov time.

Before training the ODEs, we preprocess each autoencoder's latent space data set $\boldsymbol{h}$ by subtracting the mean. It is important to centre the data because the linear damping (2.10) pushes trajectories towards the origin. We train the stabilised neural ODEs to predict the evolution of the centred data by using an Adam optimiser in PyTorch (Chen et al. Reference Chen, Rubanova, Bettencourt and Duvenaud2019; Paszke et al. Reference Paszke2019) to minimise the loss in (2.11). We train using a learning rate scheduler that drops at three even intervals during training and we train until the learning curve stops improving. Table 1 presents the details of this NN. Unless otherwise stated, we show results for the single model, out of those 16 at each dimension, with the lowest relative error averaged over all the statistics we consider. Here we emphasise that for all comparisons between the DNS and the DManD model we will use the same initial conditions for the two simulations. In the DManD model, this consists of encoding the initial condition for the DNS and then evolving it forward with the neural ODE in the latent space to obtain a time series of $\boldsymbol{h}$. Then we decode this time series for comparison. Finally, to provide a rough estimate of the computational cost of training these NNs we note that training the autoencoder ($\mathcal {E}, \mathcal {D}$), the stabilised neural ODE ($\boldsymbol{g}_{{NN}}$) and the phase neural ODE ($\boldsymbol{g}_{\phi }$) to convergence took $\sim$4.4, 1.0 and 1.7 h, respectively, for the $d_h=18$ hybrid autoencoder model on a 2.40 GHz Intel Xeon CPU E5-2640 v4.
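To illustrate why centring matters when a linear damping term is present, the sketch below integrates a toy system with forward Euler. It assumes a damping term of the simple form $-\beta\boldsymbol{h}$, which may differ from the exact form of (2.10), and it sets the learned part of the vector field to zero so that only the damping acts. All names and the synthetic latent data are hypothetical.

```python
import numpy as np

beta = 0.1  # damping value quoted in the text

def damped_step(h, dt, g=lambda h: np.zeros_like(h)):
    """One forward-Euler step of dh/dt = g(h) - beta * h (assumed form)."""
    return h + dt * (g(h) - beta * h)

# Hypothetical latent data whose mean is far from the origin.
rng = np.random.default_rng(1)
h_data = rng.normal(loc=5.0, scale=1.0, size=(1000, 3))
h_mean = h_data.mean(axis=0)
h_centred = h_data - h_mean  # after centring, the origin is the data mean

# With g = 0, the damping alone drives any state towards the origin.
h = h_data[0].copy()
for _ in range(1000):
    h = damped_step(h, dt=0.1)
final_norm = np.linalg.norm(h)
```

In uncentred coordinates the damping would pull trajectories towards a point far from the data, whereas in centred coordinates it pulls them towards the data mean.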

3.3. Short-time tracking

In the following two sections, we evaluate the performance of the DManD models at reconstructing short-time trajectories and long-time statistics. Figure 3 shows snapshots of the streamwise velocity at the centre plane of the channel, $y=0$, for the DNS and DManD at $d_h=18$. We choose to show results for