1 Introduction

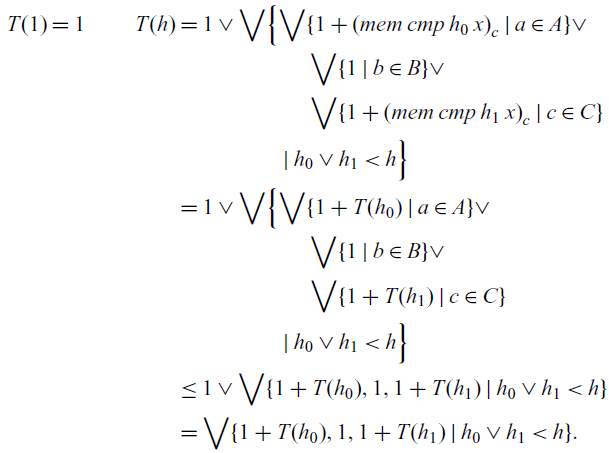

The method for analyzing the asymptotic cost of a (functional) program f(x) that is typically taught to introductory undergraduate students is to extract a recurrence ![]() $T_f(n)$ that describes an upper bound on the cost of f(x) in terms of the size of x and then establish a nonrecursive upper bound on

$T_f(n)$ that describes an upper bound on the cost of f(x) in terms of the size of x and then establish a nonrecursive upper bound on ![]() $T_f(n)$ (we will focus on upper bounds, but much of what we say holds mutatis mutandis for lower bounds, and hence tight bounds). The goal of this work is to put the process of this informal approach to cost analysis on firm mathematical footing. Of course, various formalizations of cost analysis have been discussed for almost as long as there has been a distinct subfield of Programming Languages. Most of the recent work in this area is focused on developing formal techniques for cost analysis that enable the (possibly automated) analysis of as large a swath of programs as possible. In doing so, the type systems and the logics used grow ever more complex. There is work that incorporates size and cost into type information, for example, by employing refinement types or type-and-effect systems. There is work that formalizes reasoning about cost in program logics such as separation logic with time credits. But as witnessed by most undergraduate texts on algorithm analysis, complex type systems and separation logic are not commonly taught. Instead, a function of some form (a recurrence) that computes the cost in terms of the size of the argument is extracted from the source code. This is the case for “simple” compositional worst-case analyses but also more more complex techniques. For example, the banker’s and physicist’s methods of amortized analysis likewise proceed by extracting a function to describe cost; the notion of cost itself, and the extraction of a suitably precise cost function, is more complex, but broadly speaking the structure of the analysis is the same. That is the space we are investigating here: how do we justify that informal process?Footnote 1

The justification might not itself play a role in applying the technique informally, any more than we require introductory students to understand the theory behind a type inference algorithm in order to informally understand why their programs typecheck. But certainly that theory should be settled. Our approach is through denotational semantics, which, in addition to justifying the informal process, also helps to explicate a few questions, such as why length is an appropriate measure of size for cost recurrences for polymorphic list functions (a question that is close to, but not quite the same as, parametricity).

$T_f(n)$ (we will focus on upper bounds, but much of what we say holds mutatis mutandis for lower bounds, and hence tight bounds). The goal of this work is to put the process of this informal approach to cost analysis on firm mathematical footing. Of course, various formalizations of cost analysis have been discussed for almost as long as there has been a distinct subfield of Programming Languages. Most of the recent work in this area is focused on developing formal techniques for cost analysis that enable the (possibly automated) analysis of as large a swath of programs as possible. In doing so, the type systems and the logics used grow ever more complex. There is work that incorporates size and cost into type information, for example, by employing refinement types or type-and-effect systems. There is work that formalizes reasoning about cost in program logics such as separation logic with time credits. But as witnessed by most undergraduate texts on algorithm analysis, complex type systems and separation logic are not commonly taught. Instead, a function of some form (a recurrence) that computes the cost in terms of the size of the argument is extracted from the source code. This is the case for “simple” compositional worst-case analyses but also more more complex techniques. For example, the banker’s and physicist’s methods of amortized analysis likewise proceed by extracting a function to describe cost; the notion of cost itself, and the extraction of a suitably precise cost function, is more complex, but broadly speaking the structure of the analysis is the same. That is the space we are investigating here: how do we justify that informal process?Footnote 1

The justification might not itself play a role in applying the technique informally, any more than we require introductory students to understand the theory behind a type inference algorithm in order to informally understand why their programs typecheck. But certainly that theory should be settled. Our approach is through denotational semantics, which, in addition to justifying the informal process, also helps to explicate a few questions, such as why length is an appropriate measure of size for cost recurrences for polymorphic list functions (a question that is close to, but not quite the same as, parametricity).

Turning to the technical development, in previous work (Danner et al. 2013;Reference Danner, Licata and Ramyaa2015), we have developed a recurrence extraction technique for higher order functional programs for which the bounding is provable that is based on work by Danner & Royer (Reference Danner and Royer2007). The technique is described as follows:

1. We define what is essentially a monadic translation into the writer monad from a call-by-value source language that supports inductive types and structural recursion (fold) to a call-by-name recurrence language; we refer to programs in the latter language as syntactic recurrences. The recurrence language is axiomatized by a size (pre)order rather than equations. The syntactic recurrence extracted from a source-language program f(x) describes both the cost and result of f(x) in terms of x.

-

2. We define a bounding relation between source language programs and syntactic recurrences. The bounding relation is a logical relation that captures the notion that the syntactic recurrence is in fact a bound on the operational cost and the result of the source language program. This notion extends reasonably to higher type, where higher type arguments of a syntactic recurrence are thought of recurrences that are bounds on the corresponding arguments of the source language program. We then prove a bounding theorem that asserts that every typeable program in the source language is related to the recurrence extracted from it.

-

3. The syntactic recurrence is interpreted in a model of the recurrence language. This is where values are abstracted to some notion of size; e.g., the interpretation may be defined so that a value of inductive type

$\delta$ is interpreted by the number of

$\delta$ is interpreted by the number of  $\delta$-constructors in v. We call the interpretation of a syntactic recurrence a semantic recurrence, and it is the semantic recurrences that are intended to match the recurrences that arise from informal analyses.

$\delta$-constructors in v. We call the interpretation of a syntactic recurrence a semantic recurrence, and it is the semantic recurrences that are intended to match the recurrences that arise from informal analyses.

In this paper, we extend the above approach in several ways. First and foremost, we investigate the models, semantic recurrences, and size abstraction more thoroughly than in previous work and show how different models can be used to formally justify typical informal extract-and-solve cost analyses. Second, we add ML-style ![]() $\mathtt{let}$-polymorphism and adapt the techniques to an environment-based operational semantics, a more realistic foundation for implementation than the substitution-based semantics used in previous work. In recent work, we have extended the technique for source languages with call-by-name and general recursion (Kavvos et al. Reference Kavvos, Morehouse, Licata and Danner2020), and for amortized analyses (Cutler et al. Reference Cutler, Licata and Danner2020); we do not consider these extensions in the main body of this paper, in order to focus on the above issues in isolation.

$\mathtt{let}$-polymorphism and adapt the techniques to an environment-based operational semantics, a more realistic foundation for implementation than the substitution-based semantics used in previous work. In recent work, we have extended the technique for source languages with call-by-name and general recursion (Kavvos et al. Reference Kavvos, Morehouse, Licata and Danner2020), and for amortized analyses (Cutler et al. Reference Cutler, Licata and Danner2020); we do not consider these extensions in the main body of this paper, in order to focus on the above issues in isolation.

Our source language, which we describe in Section 2, is a call-by-value higher order functional language with inductive datatypes and structural recursion (fold) and ML-style ![]() $\mathtt{let}$-polymorphism. That is,

$\mathtt{let}$-polymorphism. That is, ![]() $\mathtt{let}$-bound identifiers may be assigned a quantified type, provided that type is instantiated at quantifier-free types in the body of the

$\mathtt{let}$-bound identifiers may be assigned a quantified type, provided that type is instantiated at quantifier-free types in the body of the ![]() $\mathtt{let}$ expression. Restricting to polymorphism that is predicative (quantifiers can be instantiated only with nonquantified types), first-order (quantifiers range over types, not type constructors), and second-class (polymorphic functions cannot themselves be the input to other functions) is sufficient to program a number of example programs, without complicating the denotational models used to analyze them. We define an environment-based operational semantics where each rule is annotated with a cost. For simplicity, we only “charge” for each unfolding of a recursive call, but the technique extends easily for any notion of cost that can be defined in terms of evaluation rules. We could also replace the rule-based cost annotations with a “tick” construct that the programmer inserts at the code points for which a charge should be made, though this requires the programmer to justify the cost model.

$\mathtt{let}$ expression. Restricting to polymorphism that is predicative (quantifiers can be instantiated only with nonquantified types), first-order (quantifiers range over types, not type constructors), and second-class (polymorphic functions cannot themselves be the input to other functions) is sufficient to program a number of example programs, without complicating the denotational models used to analyze them. We define an environment-based operational semantics where each rule is annotated with a cost. For simplicity, we only “charge” for each unfolding of a recursive call, but the technique extends easily for any notion of cost that can be defined in terms of evaluation rules. We could also replace the rule-based cost annotations with a “tick” construct that the programmer inserts at the code points for which a charge should be made, though this requires the programmer to justify the cost model.

The recurrence language in Section 3 is a call-by-name ![]() $\lambda$-calculus with explicit predicative polymorphism (via type abstraction and type application) and an additional type for costs. Ultimately, we care only about the meaning of a syntactic recurrence, not so much any particular strategy for evaluating it, and such a focus on mathematical reasoning makes call-by-name an appropriate formalism. The choice of explicit predicative polymorphism instead of

$\lambda$-calculus with explicit predicative polymorphism (via type abstraction and type application) and an additional type for costs. Ultimately, we care only about the meaning of a syntactic recurrence, not so much any particular strategy for evaluating it, and such a focus on mathematical reasoning makes call-by-name an appropriate formalism. The choice of explicit predicative polymorphism instead of ![]() $\mathtt{let}$-polymorphism is minor, but arises from the same concerns: our main interest is in the models of the language, and it is simpler to describe models of the former than of the latter. To describe the recurrence language as call-by-name is not quite right because the verification of the bounding theorem that relates source programs to syntactic recurrences does not require an operational semantics. Instead it suffices to axiomatize the recurrence language by a preorder, which we call the size order. The size order is defined in Figure 11, and a brief glimpse will show the reader that the axioms primarily consist of directed versions of the standard call-by-name equations. This is the minimal set of axioms necessary to verify the bounding theorem, but as we discuss more fully when we investigate models of the recurrence language, there is more to the size order than that. In a nutshell, a model in which the size order axiom for a given type constructor is nontrivial (i.e., in which the two sides are not actually equal) is a model that genuinely abstracts that particular type constructor to a size.

$\mathtt{let}$-polymorphism is minor, but arises from the same concerns: our main interest is in the models of the language, and it is simpler to describe models of the former than of the latter. To describe the recurrence language as call-by-name is not quite right because the verification of the bounding theorem that relates source programs to syntactic recurrences does not require an operational semantics. Instead it suffices to axiomatize the recurrence language by a preorder, which we call the size order. The size order is defined in Figure 11, and a brief glimpse will show the reader that the axioms primarily consist of directed versions of the standard call-by-name equations. This is the minimal set of axioms necessary to verify the bounding theorem, but as we discuss more fully when we investigate models of the recurrence language, there is more to the size order than that. In a nutshell, a model in which the size order axiom for a given type constructor is nontrivial (i.e., in which the two sides are not actually equal) is a model that genuinely abstracts that particular type constructor to a size.

We can think of the cost type in the recurrence language as the “output” of the writer monad, and the recurrence extraction function that we give in Section 4 as the call-by-value monadic translation of the source language. In some sense, then the recurrence extracted from a source program is just a cost-annotated version of the program. However, we think of the “program” part of the syntactic recurrence differently: it represents the size of the source program. Thus, the syntactic recurrence simultaneously describes both the cost and size of the original program, what we refer to as a complexity. It is no surprise that we must extract both simultaneously, if for no other reason than compositionality, because if we are to describe cost in terms of size, then the cost of f(g(x)) depends on both the cost and size of g(x). Thinking of recurrence extraction as a call-by-value monadic translation gives us insight into how to think of the size of a function: it is a mapping from sizes (of inputs) to complexities (of computing the result on an input of that size). This leads us to view size as a form of usage, or potential cost, and it is this last term that we adopt instead of size.Footnote 2

The bounding relation ![]() $e\preceq E$ that we define in Section 5 is a logical relation between source programs e and syntactic recurrences E. A syntactic recurrence is really a complexity, and

$e\preceq E$ that we define in Section 5 is a logical relation between source programs e and syntactic recurrences E. A syntactic recurrence is really a complexity, and ![]() $e\preceq E$ says that the operational cost of e is bounded by the cost component of E and that the value of e is bounded by the potential component of E. The Bounding Theorem (Theorem 1) tells us that every typeable program is bounded by the recurrence extracted from it. Its proof is somewhat long and technical, but follows the usual pattern for verifying the Fundamental Theorem for a logical relation, and the details are in Appendix 3.

$e\preceq E$ says that the operational cost of e is bounded by the cost component of E and that the value of e is bounded by the potential component of E. The Bounding Theorem (Theorem 1) tells us that every typeable program is bounded by the recurrence extracted from it. Its proof is somewhat long and technical, but follows the usual pattern for verifying the Fundamental Theorem for a logical relation, and the details are in Appendix 3.

In the recurrence language, the “data” necessary to describe the size has as much information as the original program; in the semantics we can abstract away as much or as little of this information as necessary. After defining environment models of the recurrence language in Section 6 (following Bruce et al. Reference Bruce, Meyer and Mitchell1990), in Section 7, we give several examples to demonstrate that different size abstractions result in semantic recurrences that formally justify typical extract-and-solve analyses. We stress that we are not attempting to analyze the cost of heretofore unanalyzed programs. Our goal is a formal process that mirrors as closely as possible the informal process we use at the board and on paper. The main examples demonstrate analyses where

1. The size of a value v of inductive type

$\delta$ is defined in terms of the number of

$\delta$ is defined in terms of the number of  $\delta$-constructors in v. For example, a list is measured by its length, a tree by either its size or its height, etc., enabling typical size-based recurrences (Section 7.2).

$\delta$-constructors in v. For example, a list is measured by its length, a tree by either its size or its height, etc., enabling typical size-based recurrences (Section 7.2).-

2. The size of a value v of inductive type

$\delta$ is (more-or-less) the number of constructors of every inductive type in v. For example, the size of a

$\delta$ is (more-or-less) the number of constructors of every inductive type in v. For example, the size of a  $\mathtt{nat tree}~t$ is the number of

$\mathtt{nat tree}~t$ is the number of  $\mathtt{nat tree}$ constructors in t (its usual “size”) along with the maximum number of

$\mathtt{nat tree}$ constructors in t (its usual “size”) along with the maximum number of  $\textsf{nat}$ constructors in any node of t, enabling the analysis of functions with more complex costs, such as the function that sums the nodes of a

$\textsf{nat}$ constructors in any node of t, enabling the analysis of functions with more complex costs, such as the function that sums the nodes of a  $\mathtt{nat tree}$ (Section 7.3).

$\mathtt{nat tree}$ (Section 7.3). -

3. A polymorphic function can be analyzed in terms of a notion of size that is more abstract than that given by its instances (Section 7.4). For example, while the size of a

$\mathtt{nat tree}$ may be a pair (k, n), where k is the maximum key value and n the size (e.g., to permit analysis of the function that sums all the nodes), we may want the domain of the recurrence extracted from a function of type

$\mathtt{nat tree}$ may be a pair (k, n), where k is the maximum key value and n the size (e.g., to permit analysis of the function that sums all the nodes), we may want the domain of the recurrence extracted from a function of type  $\alpha\mathtt{tree}\rightarrow\rho$ to be

$\alpha\mathtt{tree}\rightarrow\rho$ to be  $\mathbf{N}$, corresponding to counting only the

$\mathbf{N}$, corresponding to counting only the  $\mathtt{tree}$ constructors.

$\mathtt{tree}$ constructors. -

4. We make use of the fact that the interpretation of the size order just has to satisfy certain axioms to derive recurrences for lower bounds. As an example, we parlay this into a formal justification for the informal argument that

$\mathtt{map}(f\mathbin\circ g)$ is more efficient than

$\mathtt{map}(f\mathbin\circ g)$ is more efficient than  $(\mathtt{map}\,f)\mathbin\circ(\mathtt{map}\,g)$ (Section 7.5).

$(\mathtt{map}\,f)\mathbin\circ(\mathtt{map}\,g)$ (Section 7.5).

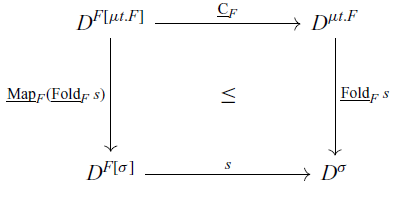

These examples end up clarifying the role of the size order, as mentioned earlier. It is not just the rules necessary to drive the proof of the syntactic bounding theorem, but a nontrivial interpretation of ![]() $\leq_\sigma$ (i.e., one in which

$\leq_\sigma$ (i.e., one in which ![]() $e\leq_\sigma e'$ is valid but not

$e\leq_\sigma e'$ is valid but not ![]() $e = e'$) tells us that we have a model with a nontrivial size abstraction for

$e = e'$) tells us that we have a model with a nontrivial size abstraction for ![]() $\sigma$. This clarification highlights interesting analogies with abstract interpretation: (1) when a datatype

$\sigma$. This clarification highlights interesting analogies with abstract interpretation: (1) when a datatype ![]() $\delta = \mu t F$ is interpreted by a nontrivial size abstraction, there is an abstract interpretation between

$\delta = \mu t F$ is interpreted by a nontrivial size abstraction, there is an abstract interpretation between ![]() $[\![\delta]\!]$ (abstract) and

$[\![\delta]\!]$ (abstract) and ![]() $[\![\,F[\delta]]\!]$ (concrete) and (2) interpreting the recurrence extracted from a polymorphic function in terms of a more abstract notion of size is possible if there is an abstract interpretation between two models.

$[\![\,F[\delta]]\!]$ (concrete) and (2) interpreting the recurrence extracted from a polymorphic function in terms of a more abstract notion of size is possible if there is an abstract interpretation between two models.

The remaining sections of the paper discuss recent work in cost analysis and how our work relates to it, as well as limitations of and future directions for our approach.

2 The source language

The source language that serves as the object language of our recurrence extraction technique is a higher order language with inductive types, structural iteration (fold) over those types, and ML-style polymorphism (i.e., predicative polymorphism, with polymorphic identifiers introduced only in let bindings), with an environment-based operational semantics that approximates typical implementation. This generalizes the source language of Danner et al. (Reference Danner, Licata and Ramyaa2015), which introduced the technique for a monomorphically typed language with a substitution-based semantics. We address general recursion in Section 8.

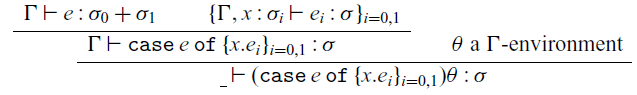

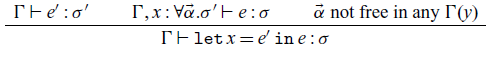

The grammar and typing rules for expressions are given in Figure 1. Type assignment derives (quantifier-free) types for expressions given a type context that assigns type schemes (quantified types) to identifiers. We write ![]() $\_$ for the empty type context. Values are not a subset of expressions because, as one would expect in implementation, a function value consists of a function expression along with a value environment: a binding of free variables to values. The same holds for values of suspension type, and we refer to any pair of an expression and a value environment for its free variables as a closure (thus we use closure more freely than the usual parlance, in which it is restricted to functions). We adopt the notation common in the explicit substitution literature (e.g., Abadi et al. Reference Abadi, Cardelli, Curien and LÉvy1991) and write

$\_$ for the empty type context. Values are not a subset of expressions because, as one would expect in implementation, a function value consists of a function expression along with a value environment: a binding of free variables to values. The same holds for values of suspension type, and we refer to any pair of an expression and a value environment for its free variables as a closure (thus we use closure more freely than the usual parlance, in which it is restricted to functions). We adopt the notation common in the explicit substitution literature (e.g., Abadi et al. Reference Abadi, Cardelli, Curien and LÉvy1991) and write ![]() $v\theta$ for a closure with value environment

$v\theta$ for a closure with value environment ![]() $\theta$. Since the typing for

$\theta$. Since the typing for ![]() $\mathtt{map}$ and

$\mathtt{map}$ and ![]() $\mathtt{mapv}$ expressions depend on values, this requires a separate notion of typing for values, which in turn depends on a notion of typing for closures. These are defined in Figures 2 and 3. There is nothing deep in the typing of a closure value

$\mathtt{mapv}$ expressions depend on values, this requires a separate notion of typing for values, which in turn depends on a notion of typing for closures. These are defined in Figures 2 and 3. There is nothing deep in the typing of a closure value ![]() $v\theta$ under context

$v\theta$ under context ![]() $\Gamma$. Morally, the rules just formalize that v can be assigned the expected type without regard to

$\Gamma$. Morally, the rules just formalize that v can be assigned the expected type without regard to ![]() $\theta$ and that

$\theta$ and that ![]() $\theta(x)$ is of type

$\theta(x)$ is of type ![]() $\Gamma(x)$. But since type contexts may assign type schemes, whereas type assignment only derives types, the formal definition is that

$\Gamma(x)$. But since type contexts may assign type schemes, whereas type assignment only derives types, the formal definition is that ![]() $\theta(x)$ can be assigned any instance of

$\theta(x)$ can be assigned any instance of ![]() $\Gamma(x)$.

$\Gamma(x)$.

Fig. 1. A source language with let polymorphism and inductive datatypes. ![]() $\mathtt{map}$ and

$\mathtt{map}$ and ![]() $\mathtt{mapv}$ expressions depend on values, which are defined in Figure 2.

$\mathtt{mapv}$ expressions depend on values, which are defined in Figure 2.

Fig. 2. Grammar and typing rules for values. For any value environment ![]() $\theta$, it must be that for all x there is

$\theta$, it must be that for all x there is ![]() $\sigma$ such that

$\sigma$ such that ![]() $_\vdash{\theta(x)}:{\sigma}$. The judgment

$_\vdash{\theta(x)}:{\sigma}$. The judgment ![]() $\Gamma{\vdash e\theta}:{\sigma}$ is defined in Figure 3.

$\Gamma{\vdash e\theta}:{\sigma}$ is defined in Figure 3.

Fig. 3. Typing for closures.

We will freely assume notation for n-ary sums and products and their corresponding introduction and elimination forms, such as ![]() $\sigma_0\times\sigma_1\times\sigma_2$,

$\sigma_0\times\sigma_1\times\sigma_2$, ![]() $({e_0, e_1, e_2})$, and

$({e_0, e_1, e_2})$, and ![]() $\pi_1 e$ for

$\pi_1 e$ for ![]() ${({\sigma_0}\times{\sigma_1})}\times{\sigma_2}$,

${({\sigma_0}\times{\sigma_1})}\times{\sigma_2}$, ![]() $((e_{0},e_{1}),e_2)$, and

$((e_{0},e_{1}),e_2)$, and ![]() $\pi_1(\pi_0 e)$, respectively. We write

$\pi_1(\pi_0 e)$, respectively. We write ![]() $\mathop{\mathrm{fv}}\nolimits(e)$ for the free variables of e and

$\mathop{\mathrm{fv}}\nolimits(e)$ for the free variables of e and ![]() $\mathrm{ftv}(\tau)$ for the free type variables of

$\mathrm{ftv}(\tau)$ for the free type variables of ![]() $\tau$.

$\tau$.

Inductive types are defined by shape functors, ranged over by the metavariable F; a generic inductive type has the form ![]() $\mu t.F$. If F is a shape functor and

$\mu t.F$. If F is a shape functor and ![]() $\sigma$ a type, then

$\sigma$ a type, then ![]() $F[\sigma]$ is the result of substituting

$F[\sigma]$ is the result of substituting ![]() $\sigma$ for free occurrences of t in F (the

$\sigma$ for free occurrences of t in F (the ![]() $\mu$ operator binds t, of course). Formally a shape functor is just a type, and so when certain concepts are defined by induction on type, they are automatically defined for shape functors as well. In the syntax for shape functors, t is a fixed type variable, and hence simultaneous nested definitions are not allowed. That is, types such as

$\mu$ operator binds t, of course). Formally a shape functor is just a type, and so when certain concepts are defined by induction on type, they are automatically defined for shape functors as well. In the syntax for shape functors, t is a fixed type variable, and hence simultaneous nested definitions are not allowed. That is, types such as ![]() $\mu t.{{\textsf{unit}}+{\mu s.{\textsf{unit}+{s\times t}}}}$ are forbidden. However, an inductive type can be used inside of other types via the constant functor

$\mu t.{{\textsf{unit}}+{\mu s.{\textsf{unit}+{s\times t}}}}$ are forbidden. However, an inductive type can be used inside of other types via the constant functor ![]() $(\sigma)$ production of F, e.g. coding the type

$(\sigma)$ production of F, e.g. coding the type ![]() $(\alpha \mathtt{list})\mathtt{list}$ as

$(\alpha \mathtt{list})\mathtt{list}$ as ![]() $\mu t.\mathtt{unit}+(\mu t.\mathtt{unit}+\alpha\times t)\times t$. This restriction is just to simplify the presentation of the languages and models, and lifting it does not require fundamental changes. Figure 4 gives a number of types and values that we will use in examples. We warn the reader that because most models of the recurrence language have nonstandard interpretations of inductive types, the types

$\mu t.\mathtt{unit}+(\mu t.\mathtt{unit}+\alpha\times t)\times t$. This restriction is just to simplify the presentation of the languages and models, and lifting it does not require fundamental changes. Figure 4 gives a number of types and values that we will use in examples. We warn the reader that because most models of the recurrence language have nonstandard interpretations of inductive types, the types ![]() $\sigma$ and

$\sigma$ and ![]() $\mu t.\sigma$ may be treated very differently even when t is not free in

$\mu t.\sigma$ may be treated very differently even when t is not free in ![]() $\sigma$. Thus, it can actually make a real difference whether we define

$\sigma$. Thus, it can actually make a real difference whether we define ![]() $\mathtt{bool}$ to be

$\mathtt{bool}$ to be ![]() $\mathtt{unit}+\mathtt{unit}$ or

$\mathtt{unit}+\mathtt{unit}$ or ![]() $\mu t.{\mathtt{unit}+\mathtt{unit}}$ (if every type that would be defined by an ML

$\mu t.{\mathtt{unit}+\mathtt{unit}}$ (if every type that would be defined by an ML ![]() $\mathtt{datatype}$ declaration were implemented as a possibly degenerate inductive type).

$\mathtt{datatype}$ declaration were implemented as a possibly degenerate inductive type).

Fig. 4. Some standard types in the source language.

For every inductive type ![]() $\delta=\mu t.F$ there is an associated constructor

$\delta=\mu t.F$ there is an associated constructor ![]() $\mathtt{c}_\delta$, destructor

$\mathtt{c}_\delta$, destructor ![]() $\mathtt{d}_\delta$, and iterator

$\mathtt{d}_\delta$, and iterator ![]() $\mathtt{fold}_\delta$. Thought of informally as term constants, the first two have the typical types

$\mathtt{fold}_\delta$. Thought of informally as term constants, the first two have the typical types ![]() ${F[\delta]}\rightarrow{\delta}$ and

${F[\delta]}\rightarrow{\delta}$ and ![]() ${\delta\rightarrow{F[\delta]}}$, but the type of

${\delta\rightarrow{F[\delta]}}$, but the type of ![]() $\mathtt{fold}_\delta$ is somewhat nonstandard:

$\mathtt{fold}_\delta$ is somewhat nonstandard: ![]() ${\delta\rightarrow{({F[\sigma \mathtt{susp}]}\rightarrow{\sigma})}}\rightarrow{\sigma}$. We use suspension types of the form

${\delta\rightarrow{({F[\sigma \mathtt{susp}]}\rightarrow{\sigma})}}\rightarrow{\sigma}$. We use suspension types of the form ![]() $\sigma\mathtt{susp}$ primarily to delay computation of recursive calls in evaluating

$\sigma\mathtt{susp}$ primarily to delay computation of recursive calls in evaluating ![]() $\mathtt{fold}$ expressions. This is not necessary for any theoretical concerns, but rather practical: without something like this, implementations of standard programs would have unexpected costs. We will return to this when we discuss the operational semantics. We also observe that in this informal treatment, the types are not polymorphic; in our setting, polymorphism and inductive types are orthogonal concerns.

$\mathtt{fold}$ expressions. This is not necessary for any theoretical concerns, but rather practical: without something like this, implementations of standard programs would have unexpected costs. We will return to this when we discuss the operational semantics. We also observe that in this informal treatment, the types are not polymorphic; in our setting, polymorphism and inductive types are orthogonal concerns.

The ![]() $\mathtt{map}_F$ and

$\mathtt{map}_F$ and ![]() $\mathtt{mapv}_F$ constructors are used to define the operational semantics of

$\mathtt{mapv}_F$ constructors are used to define the operational semantics of ![]() $\mathtt{fold}_{\mu t.F}$. The latter witnesses functoriality of shape functors. Informally speaking, evaluation of

$\mathtt{fold}_{\mu t.F}$. The latter witnesses functoriality of shape functors. Informally speaking, evaluation of ![]() $\mathtt{mapv}_F y.{v'}\mathtt{into} v$ traverses a value v of type

$\mathtt{mapv}_F y.{v'}\mathtt{into} v$ traverses a value v of type ![]() $F[\delta]$, applying a function

$F[\delta]$, applying a function ![]() $y\mapsto v'$ of type

$y\mapsto v'$ of type ![]() $\delta\rightarrow\sigma$ to each inductive subvalue of type

$\delta\rightarrow\sigma$ to each inductive subvalue of type ![]() $\delta$ to obtain a value of type

$\delta$ to obtain a value of type ![]() $F[\sigma]$.

$F[\sigma]$. ![]() $\mathtt{map}_F$ is a technical tool for defining this action when F is an arrow shape, in which case the value of type

$\mathtt{map}_F$ is a technical tool for defining this action when F is an arrow shape, in which case the value of type ![]() $F[\delta]$ is really a delayed computation and hence is represented by an arbitrary expression. Because the definition of

$F[\delta]$ is really a delayed computation and hence is represented by an arbitrary expression. Because the definition of ![]() $\mathtt{map}_F$ and

$\mathtt{map}_F$ and ![]() $\mathtt{mapv}_F$ depend on values, we must define them (and their typing) simultaneously with terms. Furthermore, evaluation of

$\mathtt{mapv}_F$ depend on values, we must define them (and their typing) simultaneously with terms. Furthermore, evaluation of ![]() $\mathtt{mapv}_{\rho\to F}$ results in a function closure value that contains a

$\mathtt{mapv}_{\rho\to F}$ results in a function closure value that contains a ![]() $\mathtt{map}_F$ expression, and the function closure itself is, as usual, an ordinary

$\mathtt{map}_F$ expression, and the function closure itself is, as usual, an ordinary ![]() $\lambda$-expression. This is also the reason that

$\lambda$-expression. This is also the reason that ![]() $\mathtt{map}$ and

$\mathtt{map}$ and ![]() $\mathtt{mapv}$ are part of the language, rather than just part of the metalanguage used to define the operational semantics. They are not intended to be used in program definitions though, so we make the following definition:

$\mathtt{mapv}$ are part of the language, rather than just part of the metalanguage used to define the operational semantics. They are not intended to be used in program definitions though, so we make the following definition:

Definition (Core language) The core language consists of the terms of the source language that are typeable not using ![]() $\mathtt{map}$ or

$\mathtt{map}$ or ![]() $\mathtt{mapv}$.

$\mathtt{mapv}$.

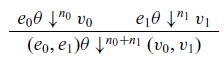

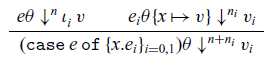

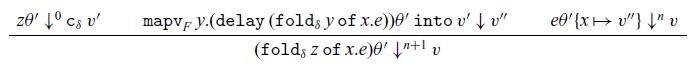

The operational cost semantics for the language is defined in Figure 5 and its dependencies, which define a relation ![]() ${e \theta}\downarrow^{n}{v}$, where e is a (well-typed) expression,

${e \theta}\downarrow^{n}{v}$, where e is a (well-typed) expression, ![]() $\theta$ a value environment, v a value, and n a non-negative integer. As with closure values, we write a closure with expression e and value environment

$\theta$ a value environment, v a value, and n a non-negative integer. As with closure values, we write a closure with expression e and value environment ![]() $\theta$ as

$\theta$ as ![]() $e\theta$, and opt for this notation for compactness (a more typically presentation might be

$e\theta$, and opt for this notation for compactness (a more typically presentation might be ![]() $\theta\vdash\ {e}\downarrow^{n}{v}$). The intended meaning is that under value environment

$\theta\vdash\ {e}\downarrow^{n}{v}$). The intended meaning is that under value environment ![]() $\theta$, the term e evaluates to the value v with cost n. A value environment that needs to be spelled out will be written

$\theta$, the term e evaluates to the value v with cost n. A value environment that needs to be spelled out will be written ![]() $\{ {x_0}\mapsto{v_0},\dots,{x_{n-1}}\mapsto{v_{n-1}} \}$ or more commonly

$\{ {x_0}\mapsto{v_0},\dots,{x_{n-1}}\mapsto{v_{n-1}} \}$ or more commonly ![]() $\{{\vec x}\mapsto{\vec v}\}$. We write

$\{{\vec x}\mapsto{\vec v}\}$. We write ![]() $\theta\{y \mapsto v\}$ for extending a value environment

$\theta\{y \mapsto v\}$ for extending a value environment ![]() $\theta$ by binding the (possibly fresh) variable y to v. Value environments are part of the language, so when we write

$\theta$ by binding the (possibly fresh) variable y to v. Value environments are part of the language, so when we write ![]() $e\theta$, the bindings are not immediately applied. However, we use a substitution notation

$e\theta$, the bindings are not immediately applied. However, we use a substitution notation ![]() $\{v/y\}$ for defining the semantics of

$\{v/y\}$ for defining the semantics of ![]() $\mathtt{mapv}_t$ because this is defined in the metalanguage as a metaoperation. Using explicit environments, rather than a metalanguage notation for substitutions, adds a certain amount of syntactic complexity. The payoff is a semantics that more closely reflects typical implementation.

$\mathtt{mapv}_t$ because this is defined in the metalanguage as a metaoperation. Using explicit environments, rather than a metalanguage notation for substitutions, adds a certain amount of syntactic complexity. The payoff is a semantics that more closely reflects typical implementation.

Fig. 5. The operational cost semantics for the source language. We only define evaluation for closures ![]() $e\theta$ such that

$e\theta$ such that ![]() $_\vdash{e\theta}:{\sigma}$ for some

$_\vdash{e\theta}:{\sigma}$ for some ![]() $\sigma$, and hence just write

$\sigma$, and hence just write ![]() $e\theta$. The semantics for

$e\theta$. The semantics for ![]() $\mathtt{fold}$ depends on the semantics for

$\mathtt{fold}$ depends on the semantics for ![]() $\mathtt{map}$, which is given in Figure 6.

$\mathtt{map}$, which is given in Figure 6.

Fig. 6. The operational semantics for the source language ![]() $\mathtt{map}$ and

$\mathtt{map}$ and ![]() $\mathtt{mapv}$ constructors. Substitution of values is defined in Figure 7.

$\mathtt{mapv}$ constructors. Substitution of values is defined in Figure 7.

Fig. 7. Substitution of values for identifiers in values, ![]() ${v'}\{v/y\}$.

${v'}\{v/y\}$.

Our approach to charging some amount of cost for each step of the evaluation, where that amount may depend on the main term former, is standard. Recurrence extraction is parametric in these choices. We observe that our environment-based semantics permits us to charge even for looking up the value of an identifier, something that is difficult to codify in a substitution-based semantics. Our particular choice to charge one unit of cost for each unfolding of a ![]() $\mathtt{fold}$, and no cost for any other form, is admittedly ad hoc, but gives expected costs with a minimum of bookkeeping fuss, especially when it comes to the semantic interpretations of the recurrences. Another common alternative is to define a tick operation

$\mathtt{fold}$, and no cost for any other form, is admittedly ad hoc, but gives expected costs with a minimum of bookkeeping fuss, especially when it comes to the semantic interpretations of the recurrences. Another common alternative is to define a tick operation ![]() $\mathtt{tick} : \alpha\to\alpha$ which charges a unit of cost, as done by Danielsson (Reference Danielsson2008) and others. This requires the user to annotate the code at the points for which cost should be charged, which increases the load on the programmer, but allows her to be specific about exactly what to count (e.g., only comparisons). It is straightforward to adapt our approach to that setting.

$\mathtt{tick} : \alpha\to\alpha$ which charges a unit of cost, as done by Danielsson (Reference Danielsson2008) and others. This requires the user to annotate the code at the points for which cost should be charged, which increases the load on the programmer, but allows her to be specific about exactly what to count (e.g., only comparisons). It is straightforward to adapt our approach to that setting.

The reason for suspending the recursive call in the semantics of ![]() $\mathtt{fold}$ is to ensure that typical recursively defined functions that do not always evaluate all recursive calls still have the expected cost. For example, consider membership testing in a binary search tree. Typical ML code for such a function might look something like the code in Figure 8(a). This function is linear in the height of t because the lazy evaluation of conditionals ensures that at most one recursive call is evaluated. If we were to implement—member—with a—fold—operator for trees that does not suspend the recursive call (so the step function would have type

$\mathtt{fold}$ is to ensure that typical recursively defined functions that do not always evaluate all recursive calls still have the expected cost. For example, consider membership testing in a binary search tree. Typical ML code for such a function might look something like the code in Figure 8(a). This function is linear in the height of t because the lazy evaluation of conditionals ensures that at most one recursive call is evaluated. If we were to implement—member—with a—fold—operator for trees that does not suspend the recursive call (so the step function would have type ![]() $\mathtt{int}\times\mathtt{bool}\times\mathtt{bool}\times\mathtt{bool}$), as in Figure 8(b), then the recursive calls—r0—and—r1—are evaluated at each step, leading to a cost that is linear in the size of t, rather than the height. Our solution is to ensure that the recursive calls are delayed, and only evaluated when the corresponding branch of the conditional is evaluated, so the step function has type

$\mathtt{int}\times\mathtt{bool}\times\mathtt{bool}\times\mathtt{bool}$), as in Figure 8(b), then the recursive calls—r0—and—r1—are evaluated at each step, leading to a cost that is linear in the size of t, rather than the height. Our solution is to ensure that the recursive calls are delayed, and only evaluated when the corresponding branch of the conditional is evaluated, so the step function has type ![]() $\mathtt{int}\times\mathtt{bool\,susp}\times\mathtt{bool\,susp}\times\mathtt{bool}$, in which case the code looks something like that of Figure 8(c). This issue does not come up when recursive definitions are allowed only at function type, as is typical in call-by-value languages. However, in order to simplify the construction of models, here we restrict recursive definitions to the use of

$\mathtt{int}\times\mathtt{bool\,susp}\times\mathtt{bool\,susp}\times\mathtt{bool}$, in which case the code looks something like that of Figure 8(c). This issue does not come up when recursive definitions are allowed only at function type, as is typical in call-by-value languages. However, in order to simplify the construction of models, here we restrict recursive definitions to the use of ![]() $\mathtt{fold}_\delta$ (i.e., to structural recursion only, rather than general recursion), which must be permitted to have any result type.

$\mathtt{fold}_\delta$ (i.e., to structural recursion only, rather than general recursion), which must be permitted to have any result type.

Fig. 8. Using suspension types to control evaluation of recursive calls in fold-like constructs.

Given the complexity of the language, it behooves us to verify type preservation. For this cost is irrelevant, so we write ![]() ${e\theta}\downarrow{v}$ to mean

${e\theta}\downarrow{v}$ to mean ![]() ${e\theta}\downarrow^{n}{v}$ for some n. Remember that we our notion of closure includes expressions of any type, so this theorem does not just state type preservation for functions.

${e\theta}\downarrow^{n}{v}$ for some n. Remember that we our notion of closure includes expressions of any type, so this theorem does not just state type preservation for functions.

Theorem 1 (Type preservation) If ![]() $_\vdash{e\theta}:{\sigma}$ and

$_\vdash{e\theta}:{\sigma}$ and ![]() ${e\theta}\downarrow{v}$, then

${e\theta}\downarrow{v}$, then ![]() $_\vdash v: \sigma$.

$_\vdash v: \sigma$.

Proof. See Appendix 1. ■

3 The recurrence language

The recurrence language is defined in Figure 9. It is a standard system of predicative polymorphism with explicit type abstraction and application. Most of the time we will elide type annotations from variable bindings, mentioning them only when demanded for clarity. The types and terms corresponding to those of Figure 4 are given in Figure 10. This is the language into which we will extract syntactic recurrences from the source language. A syntactic recurrence is more-or-less a cost-annotated version of a source language program. As we are interested in the value (denotational semantics) of the recurrences and not in operational considerations, we think of the recurrence language in a more call-by-name way (although, as we will see, the main mode of reasoning is with respect to an ordering, rather than equality).

Fig. 9. The recurrence language grammar and typing.

Fig. 10. Some standard types in the recurrence language corresponding to those in Figure 4.

Fig. 11. The size order relation that defines the semantics of the recurrence language. The macro ![]() $F[(y:\rho).e',e$ is defined in Figure 12.

$F[(y:\rho).e',e$ is defined in Figure 12.

3.1 The cost type

The recurrence language has a cost type ![]() $\textsf{C}$. As we discuss in Section 4, we can think of recurrence extraction as a monadic translation into the writer monad, where the “writing” action is to increment the cost component. Thus it suffices to ensure that

$\textsf{C}$. As we discuss in Section 4, we can think of recurrence extraction as a monadic translation into the writer monad, where the “writing” action is to increment the cost component. Thus it suffices to ensure that ![]() $\textsf{C}$ is a monoid, though in our examples of models, it is usually interpreted as a set with more structure (e.g., the natural numbers adjoined with an “infinite” element).

$\textsf{C}$ is a monoid, though in our examples of models, it is usually interpreted as a set with more structure (e.g., the natural numbers adjoined with an “infinite” element).

3.2 The “missing” pieces from the source language

There are no suspension types, nor term constructors corresponding to ![]() $\mathtt{let}$,

$\mathtt{let}$, ![]() $\mathtt{map}$, or

$\mathtt{map}$, or ![]() $\mathtt{mapv}$. We are primarily interested in the denotations of expressions in the recurrence language, not in carefully accounting for the cost of evaluating them. Of course, it is convenient to have the standard syntactic sugar

$\mathtt{mapv}$. We are primarily interested in the denotations of expressions in the recurrence language, not in carefully accounting for the cost of evaluating them. Of course, it is convenient to have the standard syntactic sugar ![]() $\textsf{let}\,{x_0=e_0,\dots,x_{n-1}=e_{n-1}}\,\textsf{in}\,{e}$ for

$\textsf{let}\,{x_0=e_0,\dots,x_{n-1}=e_{n-1}}\,\textsf{in}\,{e}$ for ![]() ${e}\{e_0,\dots,e_{n-1}/x_0,\dots,x_{n-1}\}$. Because of the way in which the size order is axiomatized, this must be defined as a substitution, not as a

${e}\{e_0,\dots,e_{n-1}/x_0,\dots,x_{n-1}\}$. Because of the way in which the size order is axiomatized, this must be defined as a substitution, not as a ![]() $\beta$-expansion. Likewise, we still need a construct that witnesses functoriality of shape functors, but it suffices to do so with a metalanguage macro

$\beta$-expansion. Likewise, we still need a construct that witnesses functoriality of shape functors, but it suffices to do so with a metalanguage macro ![]() $F[(x:\rho).e',e]$ that is defined in Figure 12.

$F[(x:\rho).e',e]$ that is defined in Figure 12.

Fig. 12. The macro ![]() $F[\rho; y.e', e]$.

$F[\rho; y.e', e]$.

Fig. 13. The complexity and potential translation of types. Remember that although we have a grammar for structure functors F, they are actually just a subgrammar of the small types, so we do not require a separate translation function for them.

Fig. 14. Notation related to recurrence language expressions and recurrence extraction.

Fig. 15. The recurrence extraction function on source language terms. On the right-hand sides, ![]() $(c, p) = \|e\|$ and

$(c, p) = \|e\|$ and ![]() $(c_i, p_i) = \|e_i\|$ (note that

$(c_i, p_i) = \|e_i\|$ (note that ![]() $\|e\|$ is always a pair).

$\|e\|$ is always a pair).

3.3 Datatype constructor, destructor, and fold

The constructor, destructor, and fold terms are similar to those in the source language, though here we use the more typical type for ![]() $\textsf{fold}_\delta$. Since type abstraction and application are explicit in the recurrence language, it may feel a bit awkward that

$\textsf{fold}_\delta$. Since type abstraction and application are explicit in the recurrence language, it may feel a bit awkward that ![]() $\beta$-reduction for types seems to change these constants; for example,

$\beta$-reduction for types seems to change these constants; for example,![]() $(\Lambda\alpha.\cdots\mathtt{fold_{\alpha\,list}}e'\mathtt{of}x.e\cdots)\sigma$ would convert to

$(\Lambda\alpha.\cdots\mathtt{fold_{\alpha\,list}}e'\mathtt{of}x.e\cdots)\sigma$ would convert to ![]() $\cdots\mathtt{fold_\alpha\,list}e'\mathtt{of}x.e\cdots$. The right way to think of this is that there is really a single constant

$\cdots\mathtt{fold_\alpha\,list}e'\mathtt{of}x.e\cdots$. The right way to think of this is that there is really a single constant ![]() $\textsf{fold}$ that maps inductive types to the corresponding operator—in effect, we write

$\textsf{fold}$ that maps inductive types to the corresponding operator—in effect, we write ![]() $\textsf{fold}_\delta$ for

$\textsf{fold}_\delta$ for ![]() $\textsf{fold}\,\delta$, and so the substitution of a type for a type variable does not change the constant, but rather the argument to

$\textsf{fold}\,\delta$, and so the substitution of a type for a type variable does not change the constant, but rather the argument to ![]() $\textsf{fold}$.

$\textsf{fold}$.

The choice as to whether to implement datatype-related constructs as term formers or as constants and whether they should be polymorphic or not is mostly a matter of convenience. The choice here meshes better with the definitions of environment models we use in Section 6, but using constants does little other than force us to insert some semantic functions into some definitions. However, one place where this is not quite the whole story is for ![]() $\textsf{fold}_\delta$, which one might be tempted to implement as a term constant of type

$\textsf{fold}_\delta$, which one might be tempted to implement as a term constant of type ![]() $\forall{\vec{\alpha}\beta}.(F[\beta]\to\beta)\to\delta\to\beta$, where

$\forall{\vec{\alpha}\beta}.(F[\beta]\to\beta)\to\delta\to\beta$, where ![]() $\vec\alpha = \mathrm{ftv}(\delta)$. The typing we have chosen is equivalent with respect to any standard operational or denotational semantics. However, our denotational semantics will be non-standard, and the choice turns out to matter in the model construction of Section 7.4. There, we show how to identify type abstraction with size abstraction in a precise sense. Were we to use the polymorphic type for

$\vec\alpha = \mathrm{ftv}(\delta)$. The typing we have chosen is equivalent with respect to any standard operational or denotational semantics. However, our denotational semantics will be non-standard, and the choice turns out to matter in the model construction of Section 7.4. There, we show how to identify type abstraction with size abstraction in a precise sense. Were we to use the polymorphic type for ![]() $\textsf{fold}_\delta$, then even when

$\textsf{fold}_\delta$, then even when ![]() $\delta$ is monomorphic,

$\delta$ is monomorphic, ![]() $\textsf{fold}_\delta$ would still have polymorphic type (for the result), and that would force us to perform undesirable size abstraction on values of the monomorphic type.

$\textsf{fold}_\delta$ would still have polymorphic type (for the result), and that would force us to perform undesirable size abstraction on values of the monomorphic type.

3.4 The size order

The semantics of the recurrence language is described in terms of size orderings ![]() $\leq_\tau$ that is defined in Figure 11 for each type

$\leq_\tau$ that is defined in Figure 11 for each type ![]() $\tau$ (although the rules only define a preorder, we will continue to refer to it as an order). The syntactic recurrence extracted from a program of type

$\tau$ (although the rules only define a preorder, we will continue to refer to it as an order). The syntactic recurrence extracted from a program of type ![]() $\sigma$ has the type

$\sigma$ has the type ![]() $\textsf{C}\times{\langle\langle\sigma\rangle\rangle}$. The intended interpretation of

$\textsf{C}\times{\langle\langle\sigma\rangle\rangle}$. The intended interpretation of ![]() $\langle\langle\sigma\rangle\rangle$ is the set of sizes of source language values of type

$\langle\langle\sigma\rangle\rangle$ is the set of sizes of source language values of type ![]() $\sigma$. We expect to be able to compare sizes, and that is the role of

$\sigma$. We expect to be able to compare sizes, and that is the role of ![]() $\leq_\sigma$. Although

$\leq_\sigma$. Although ![]() $\leq_\textsf{C}$ is more appropriately thought of as an ordering on costs, general comments about

$\leq_\textsf{C}$ is more appropriately thought of as an ordering on costs, general comments about ![]() $\leq$ apply equally to

$\leq$ apply equally to ![]() $\leq_\textsf{C}$, so we describe it as a size ordering as well to reduce verbosity.Footnote 3 The relation

$\leq_\textsf{C}$, so we describe it as a size ordering as well to reduce verbosity.Footnote 3 The relation ![]() $\leq_\textsf{C}$ just requires that

$\leq_\textsf{C}$ just requires that ![]() $\textsf{C}$ be a monoid (i.e., have an associative operation with an identity). In particular, there are no axioms governing 1 needed to prove the bounding theorem; it is not even necessary to require that

$\textsf{C}$ be a monoid (i.e., have an associative operation with an identity). In particular, there are no axioms governing 1 needed to prove the bounding theorem; it is not even necessary to require that ![]() $0\not=1$ or even

$0\not=1$ or even ![]() $0\leq_\textsf{C} 1$.

$0\leq_\textsf{C} 1$.

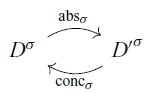

Let us gain some intuition behind the axioms for ![]() $\leq_\sigma$. On the one hand, they are just directed versions of the standard call-by-name equational calculus that one might expect. In the proof of the Syntactic Bounding Theorem (Theorem 5), that is the role they play. But there is more going on here than that. The intended interpretation of the axioms is that the introduction-elimination round-trips that they describe provide a possibly less precise description of size than what is started with. It may help to analogize with abstract interpretation here: an introductory form serves as an abstraction, whereas an elimination form serves as a concretization. In practice, the interpretation of products, sums, and arrows do not perform any abstraction, and so in the models we present in Section 6, the corresponding axioms are witnessed by equality (e.g., for (

$\leq_\sigma$. On the one hand, they are just directed versions of the standard call-by-name equational calculus that one might expect. In the proof of the Syntactic Bounding Theorem (Theorem 5), that is the role they play. But there is more going on here than that. The intended interpretation of the axioms is that the introduction-elimination round-trips that they describe provide a possibly less precise description of size than what is started with. It may help to analogize with abstract interpretation here: an introductory form serves as an abstraction, whereas an elimination form serves as a concretization. In practice, the interpretation of products, sums, and arrows do not perform any abstraction, and so in the models we present in Section 6, the corresponding axioms are witnessed by equality (e.g., for (![]() $\beta_\times$),

$\beta_\times$), ![]() $[\![{e_i}]\!] = [\![{\pi_i ({e_0}{e_1})]\!]}$). That is not the case for datatypes and type quantification, so let us examine this in more detail.

$[\![{e_i}]\!] = [\![{\pi_i ({e_0}{e_1})]\!]}$). That is not the case for datatypes and type quantification, so let us examine this in more detail.

Looking forward to definitions from Section 4, if ![]() $\sigma$ is a source language type, then

$\sigma$ is a source language type, then ![]() $\langle\langle\sigma\rangle\rangle$ is the potential type corresponding to

$\langle\langle\sigma\rangle\rangle$ is the potential type corresponding to ![]() $\sigma$ and is intended to be interpreted as a set of sizes for

$\sigma$ and is intended to be interpreted as a set of sizes for ![]() $\sigma$ values. It happens that

$\sigma$ values. It happens that ![]() $\langle\langle{\mathtt{list}\sigma}\rangle\rangle = {\langle\langle\sigma\rangle\rangle}\mathtt{list}$, so a

$\langle\langle{\mathtt{list}\sigma}\rangle\rangle = {\langle\langle\sigma\rangle\rangle}\mathtt{list}$, so a ![]() $\sigma\mathtt{list}$ value

$\sigma\mathtt{list}$ value ![]() $vs = [v_0,\dots,v_{n-1}]$ is extracted as a list of

$vs = [v_0,\dots,v_{n-1}]$ is extracted as a list of ![]() $\langle\langle\sigma\rangle\rangle$ values, each of which represents the size of one of the

$\langle\langle\sigma\rangle\rangle$ values, each of which represents the size of one of the ![]() $v_i$s. Hence, a great deal of information is preserved about the original source-language program. But frequently the interpretation (the denotational semantics of the recurrence language) abstracts away many of those details. For example, we might interpret

$v_i$s. Hence, a great deal of information is preserved about the original source-language program. But frequently the interpretation (the denotational semantics of the recurrence language) abstracts away many of those details. For example, we might interpret ![]() ${\langle\langle\sigma\rangle\rangle}\mathtt{list}$ as

${\langle\langle\sigma\rangle\rangle}\mathtt{list}$ as ![]() $\mathbf{N}$ (the natural numbers),

$\mathbf{N}$ (the natural numbers), ![]() $\textsf{nil}$ as 0, and

$\textsf{nil}$ as 0, and ![]() $\textsf{cons}$ as successor (with respect to its second argument), thereby yielding a semantics in which each list is interpreted as its length (we define two such “constructor-counting” models in Sections 7.2 and 7.3). Bearing in mind that

$\textsf{cons}$ as successor (with respect to its second argument), thereby yielding a semantics in which each list is interpreted as its length (we define two such “constructor-counting” models in Sections 7.2 and 7.3). Bearing in mind that ![]() ${\langle\langle\sigma\rangle\rangle}\textsf{list} = \mu t.F$ with

${\langle\langle\sigma\rangle\rangle}\textsf{list} = \mu t.F$ with ![]() $F = \textsf{unit}+{{\langle\langle\sigma\rangle\rangle}\times{t}}$, the interpretation of

$F = \textsf{unit}+{{\langle\langle\sigma\rangle\rangle}\times{t}}$, the interpretation of ![]() $F[{\langle\langle\sigma\rangle\rangle}\textsf{list}]$ must be

$F[{\langle\langle\sigma\rangle\rangle}\textsf{list}]$ must be ![]() $[\![\textsf{unit}]\!] + ([\![{\langle\langle\sigma\rangle\rangle}]\!]\times\mathbf{N})$. Let us assume that

$[\![\textsf{unit}]\!] + ([\![{\langle\langle\sigma\rangle\rangle}]\!]\times\mathbf{N})$. Let us assume that ![]() $+$ and

$+$ and ![]() $\times$ are given their usual interpretations (though as we will see in Section 7, that is often not sufficient). For brevity, we will write

$\times$ are given their usual interpretations (though as we will see in Section 7, that is often not sufficient). For brevity, we will write ![]() $\textsf{c}$ and

$\textsf{c}$ and ![]() $\textsf{d}$ for

$\textsf{d}$ for ![]() $\textsf{c}_{{\langle\langle\sigma\rangle\rangle}\textsf{list}}$ and

$\textsf{c}_{{\langle\langle\sigma\rangle\rangle}\textsf{list}}$ and ![]() $\textsf{d}_{{\langle\langle\sigma\rangle\rangle}\textsf{list}}$. Thus,

$\textsf{d}_{{\langle\langle\sigma\rangle\rangle}\textsf{list}}$. Thus, ![]() $\textsf{c}(y, n) = 1+n$ represents the size of a source-language list that is built using

$\textsf{c}(y, n) = 1+n$ represents the size of a source-language list that is built using ![]() $\mathtt{c}_{\sigma\textsf{list}}$ when applied to a head of size y (which is irrelevant) and a tail of size n. The question is, what should the value of

$\mathtt{c}_{\sigma\textsf{list}}$ when applied to a head of size y (which is irrelevant) and a tail of size n. The question is, what should the value of ![]() $\textsf{d}(1+n)$ be? It ought to somehow describe all possible pairs that are mapped to

$\textsf{d}(1+n)$ be? It ought to somehow describe all possible pairs that are mapped to ![]() $1+n$ by

$1+n$ by ![]() $\textsf{c}$. Ignoring the possibility that it is an element of

$\textsf{c}$. Ignoring the possibility that it is an element of ![]() $[\![\textsf{unit}]\!]{}$ (which seems obviously wrong), and assuming the

$[\![\textsf{unit}]\!]{}$ (which seems obviously wrong), and assuming the ![]() $\mathbf{N}$-component ought to be n, no one pair

$\mathbf{N}$-component ought to be n, no one pair ![]() $(y', n)\in[\![{\langle\langle\sigma\rangle\rangle}]\!]\times\mathbf{N}$ seems to do the job. However, if we assume the existence of an maximum element

$(y', n)\in[\![{\langle\langle\sigma\rangle\rangle}]\!]\times\mathbf{N}$ seems to do the job. However, if we assume the existence of an maximum element ![]() $\infty$ of

$\infty$ of ![]() $[\![{\langle\langle\sigma\rangle\rangle}]\!]{}$, then

$[\![{\langle\langle\sigma\rangle\rangle}]\!]{}$, then ![]() $(\infty, n)$ is an upper bound on all pairs (y’, n) such that

$(\infty, n)$ is an upper bound on all pairs (y’, n) such that ![]() $\textsf{c}(y', n) = 1+n$, and so it seem reasonable to set

$\textsf{c}(y', n) = 1+n$, and so it seem reasonable to set ![]() $\textsf{d}(1+n) = (\infty, n)$. But in this case, the round trip is not an identity because

$\textsf{d}(1+n) = (\infty, n)$. But in this case, the round trip is not an identity because ![]() $\textsf{d}(\textsf{c}(y, n)) = (\infty, n) \geq (y, n)$, and so

$\textsf{d}(\textsf{c}(y, n)) = (\infty, n) \geq (y, n)$, and so ![]() $\beta_{\delta}$ and

$\beta_{\delta}$ and ![]() $\beta_{\delta\textsf{fold}}$ are not witnessed by equality.

$\beta_{\delta\textsf{fold}}$ are not witnessed by equality.

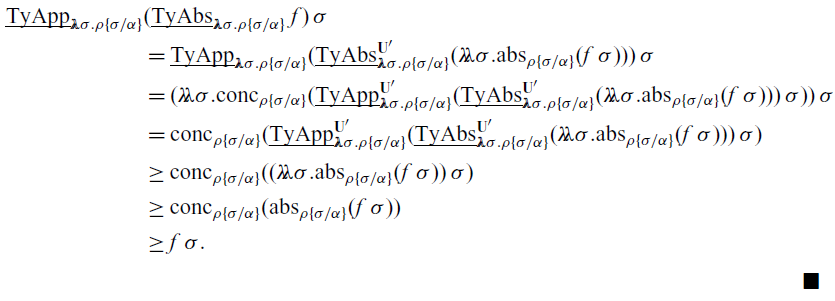

Turning to type quantification, the standard interpretation of ![]() $\forall\alpha\sigma$ is

$\forall\alpha\sigma$ is ![]() $\prod_{U\in\mathbf{U}}[\![{\sigma}]\!]{\{\alpha\mapsto U\}}$ for a suitable index set

$\prod_{U\in\mathbf{U}}[\![{\sigma}]\!]{\{\alpha\mapsto U\}}$ for a suitable index set ![]() $\mathbf{U}$ (in the setting of predicative polymorphism, this does not pose any foundational difficulties), and the interpretation of a polymoprhic program is the

$\mathbf{U}$ (in the setting of predicative polymorphism, this does not pose any foundational difficulties), and the interpretation of a polymoprhic program is the ![]() $\mathbf{U}$-indexed tuple of all of its instances. Let

$\mathbf{U}$-indexed tuple of all of its instances. Let ![]() ${\lambda{xs}.{e}}\mathbin: {{\alpha}\textsf{list}\rightarrow{\rho}}$ be a polymorphic program in the source language. The recurrence extracted from it essentially has the form

${\lambda{xs}.{e}}\mathbin: {{\alpha}\textsf{list}\rightarrow{\rho}}$ be a polymorphic program in the source language. The recurrence extracted from it essentially has the form ![]() ${\Lambda\alpha.{{\lambda{xs}.{E}}}} \mathbin:\forall\alpha.{{\alpha\textsf{list}}\rightarrow{\textsf{C}\times{\langle\langle\rho\rangle\rangle}}}$. Let us consider a denotational semantics in which

${\Lambda\alpha.{{\lambda{xs}.{E}}}} \mathbin:\forall\alpha.{{\alpha\textsf{list}}\rightarrow{\textsf{C}\times{\langle\langle\rho\rangle\rangle}}}$. Let us consider a denotational semantics in which ![]() $[\![{\sigma\textsf{list}}]\!]{} =\mathbf{N}\times\mathbf{N}$, where (k, n) describes a

$[\![{\sigma\textsf{list}}]\!]{} =\mathbf{N}\times\mathbf{N}$, where (k, n) describes a ![]() $\sigma\textsf{list}$ value with maximum component size k and length n (this is a variant of the semantics in Section 7.3). We are then in the conceptually unfortunate situation that the analysis of this polymorphic recurrence depends on its instances, which are defined in terms of not only the list length, but also the sizes of the list values. Parametricity tells us that the list value sizes are irrelevant, but our model fails to convey that. Instead, we really want to interpret the type of the recurrence as

$\sigma\textsf{list}$ value with maximum component size k and length n (this is a variant of the semantics in Section 7.3). We are then in the conceptually unfortunate situation that the analysis of this polymorphic recurrence depends on its instances, which are defined in terms of not only the list length, but also the sizes of the list values. Parametricity tells us that the list value sizes are irrelevant, but our model fails to convey that. Instead, we really want to interpret the type of the recurrence as ![]() $\mathbf{N}\to([\![\textsf{C}]\!]\times[\![{\langle\langle\rho\rangle\rangle}]\!])$, where the domain corresponds to list length. This is a non-standard interpretation of quantified types, and so the interpretations of quantifier introduction and elimination will also be non-standard. In our approach to solving this problem, those interpretations in turn depend on the existence of a Galois connection between

$\mathbf{N}\to([\![\textsf{C}]\!]\times[\![{\langle\langle\rho\rangle\rangle}]\!])$, where the domain corresponds to list length. This is a non-standard interpretation of quantified types, and so the interpretations of quantifier introduction and elimination will also be non-standard. In our approach to solving this problem, those interpretations in turn depend on the existence of a Galois connection between ![]() $\mathbf{N}$ and

$\mathbf{N}$ and ![]() $\mathbf{N}\times\mathbf{N}$, for example mapping the length n (quantified type) to

$\mathbf{N}\times\mathbf{N}$, for example mapping the length n (quantified type) to ![]() $(\infty, n)$ (an upper bound on instances), and we might map (k, n) (instance type) to n. The round trip for type quantification corresponds to

$(\infty, n)$ (an upper bound on instances), and we might map (k, n) (instance type) to n. The round trip for type quantification corresponds to ![]() $(k, n)\mapsto n \mapsto (\infty, n)$, and hence

$(k, n)\mapsto n \mapsto (\infty, n)$, and hence ![]() $(\beta_\forall)$ is not witnessed by equality (we deploy the usual conjugation with these two functions in order to propogate the inequality to function types while respecting contravariance). We describe an instance of this sort of model construction in Section 7.4, although there we are not able to eliminate the

$(\beta_\forall)$ is not witnessed by equality (we deploy the usual conjugation with these two functions in order to propogate the inequality to function types while respecting contravariance). We describe an instance of this sort of model construction in Section 7.4, although there we are not able to eliminate the ![]() $\mathbf{U}$-indexed product, and so the type of the recurrence is interpreted by

$\mathbf{U}$-indexed product, and so the type of the recurrence is interpreted by ![]() $\prod_{U\in\mathbf{U}}\mathbf{N}\to([\![\textsf{C}]\!]{}\times[\![{\langle\langle\rho\rangle\rangle}]\!]{})$.

$\prod_{U\in\mathbf{U}}\mathbf{N}\to([\![\textsf{C}]\!]{}\times[\![{\langle\langle\rho\rangle\rangle}]\!]{})$.

4 Recurrence extraction

A challenge in defining recurrence extraction is that computing only evaluation cost is insufficient for enabling compositionality because the cost of f(g(x)) depends on the size of g(x) as well as its cost. To drive this home, consider a typical higher order function such as

The cost of ![]() $\mathtt{map}(f, xs)$ depends on the cost of evaluating f on the elements of xs, and hence (indirectly) on the sizes of the elements of xs. And since

$\mathtt{map}(f, xs)$ depends on the cost of evaluating f on the elements of xs, and hence (indirectly) on the sizes of the elements of xs. And since ![]() $\mathtt{map}(f, xs)$ might itself be an argument to another function (e.g., another—map—), we also need to predict the sizes of the elements of

$\mathtt{map}(f, xs)$ might itself be an argument to another function (e.g., another—map—), we also need to predict the sizes of the elements of ![]() $\mathtt{map}(f, xs)$, which depends on the size of the output of f. Thus, to analyze —map—, we should be given a recurrence for the cost and size of f(x) in terms of the size of x, from which we produce a recurrence that gives the cost and size of

$\mathtt{map}(f, xs)$, which depends on the size of the output of f. Thus, to analyze —map—, we should be given a recurrence for the cost and size of f(x) in terms of the size of x, from which we produce a recurrence that gives the cost and size of ![]() $\mathtt{map}(f, xs)$ in terms of the size of xs. We call the size of the value of an expression that expression’s potential because the size of the value determines what future (potential) uses of that value will cost (use cost would be another reasonable term for potential).

$\mathtt{map}(f, xs)$ in terms of the size of xs. We call the size of the value of an expression that expression’s potential because the size of the value determines what future (potential) uses of that value will cost (use cost would be another reasonable term for potential).

Motivated by this discussion, we define translations ![]() $\langle\langle\cdot\rangle\rangle$ from source language types to complexity types and

$\langle\langle\cdot\rangle\rangle$ from source language types to complexity types and ![]() $\|\cdot\|$ from source language terms to recurrence language terms so that if

$\|\cdot\|$ from source language terms to recurrence language terms so that if ![]() $e\mathbin:\sigma$, then

$e\mathbin:\sigma$, then ![]() $\|e\|\mathbin:\textsf{C}\times\langle\langle\sigma\rangle\rangle$. In the recurrence language, we call an expression of type

$\|e\|\mathbin:\textsf{C}\times\langle\langle\sigma\rangle\rangle$. In the recurrence language, we call an expression of type ![]() $\langle\langle{\sigma}\rangle\rangle$ a potential and an expression of type

$\langle\langle{\sigma}\rangle\rangle$ a potential and an expression of type ![]() $\textsf{C}\times\langle\langle{\tau}\rangle\rangle$ a complexity. We abbreviate

$\textsf{C}\times\langle\langle{\tau}\rangle\rangle$ a complexity. We abbreviate ![]() $\textsf{C} \times \langle\langle{\tau}\rangle\rangle$ by

$\textsf{C} \times \langle\langle{\tau}\rangle\rangle$ by ![]() $\|\tau\|$. The first component of

$\|\tau\|$. The first component of ![]() $\|e\|$ is intended to be an upper bound on the cost of evaluating e, and the second component of

$\|e\|$ is intended to be an upper bound on the cost of evaluating e, and the second component of ![]() $\|e\|$ is intended to be an upper bound on the potential of e. The weakness of the size order axioms only allows us to conclude “upper bound” syntactically (hence the definition of the bounding relations in Figure 16), though one can define models of the recurrence language in which the interpretations are exact. The potential of a type-level 0 expression is a measure of the size of that value to which it evaluates, because that is how the value contributes to the cost of future computations. And as we just described, the potential of a type-

$\|e\|$ is intended to be an upper bound on the potential of e. The weakness of the size order axioms only allows us to conclude “upper bound” syntactically (hence the definition of the bounding relations in Figure 16), though one can define models of the recurrence language in which the interpretations are exact. The potential of a type-level 0 expression is a measure of the size of that value to which it evaluates, because that is how the value contributes to the cost of future computations. And as we just described, the potential of a type-![]() $\rho\to\sigma$ function f is itself a function from potentials of type

$\rho\to\sigma$ function f is itself a function from potentials of type ![]() $\rho$ (upper bounds on sizes of arguments x of f) to complexities of type

$\rho$ (upper bounds on sizes of arguments x of f) to complexities of type ![]() $\sigma$ (an upper bound on the cost of evaluating f(x) and the size of the result).

$\sigma$ (an upper bound on the cost of evaluating f(x) and the size of the result).

Fig. 16. The type-indexed bounding relations.

Returning to ![]() $\mathtt{map} :(\rho\rightarrow\sigma)\times\rho\mathtt{list}\rightarrow\sigma\mathtt{list}$, its potential should describe what future uses of

$\mathtt{map} :(\rho\rightarrow\sigma)\times\rho\mathtt{list}\rightarrow\sigma\mathtt{list}$, its potential should describe what future uses of ![]() $\mathtt{map}$ will cost, in terms of the potentials of its arguments. In this call-by-value setting, the arguments will already have been evaluated, so their costs do not play into the potential of

$\mathtt{map}$ will cost, in terms of the potentials of its arguments. In this call-by-value setting, the arguments will already have been evaluated, so their costs do not play into the potential of ![]() $\mathtt{map}$ (the recurrence that is extracted from an application expression will take those costs into account). The above discussion suggests that

$\mathtt{map}$ (the recurrence that is extracted from an application expression will take those costs into account). The above discussion suggests that ![]() $\langle\langle(\rho\to\sigma)\times\rho\mathtt{list}\to\sigma\mathtt{list}\rangle\rangle$ ought to be

$\langle\langle(\rho\to\sigma)\times\rho\mathtt{list}\to\sigma\mathtt{list}\rangle\rangle$ ought to be ![]() $(\langle\langle\rho\rangle\rangle\to\textsf{C}\times\sigma)\times\langle\langle\rho\mathtt{list}\rangle\rangle\rightarrow\textsf{C}\times\langle\langle\sigma\mathtt{list}\rangle\rangle$. For the argument function, we are provided a recurrence that maps

$(\langle\langle\rho\rangle\rangle\to\textsf{C}\times\sigma)\times\langle\langle\rho\mathtt{list}\rangle\rangle\rightarrow\textsf{C}\times\langle\langle\sigma\mathtt{list}\rangle\rangle$. For the argument function, we are provided a recurrence that maps ![]() $\rho$-potentials to

$\rho$-potentials to ![]() $\sigma$-complexities. For the argument list, we are provided a

$\sigma$-complexities. For the argument list, we are provided a ![]() $(\rho\mathtt{list})$-potential. Using these, the potential of

$(\rho\mathtt{list})$-potential. Using these, the potential of ![]() $\mathtt{map}$ must give the cost for doing the whole

$\mathtt{map}$ must give the cost for doing the whole ![]() $\mathtt{map}$ and give a

$\mathtt{map}$ and give a ![]() $(\sigma\mathtt{list})$-potential for the value. This illustrates how the potential of a higher order function is itself a higher order function.

$(\sigma\mathtt{list})$-potential for the value. This illustrates how the potential of a higher order function is itself a higher order function.

Since ![]() $\langle\langle\rho\rangle\rangle$ has as much “information” as

$\langle\langle\rho\rangle\rangle$ has as much “information” as ![]() $\rho$, syntactic recurrence extraction does not abstract values as sizes (e.g., we do not replace a list by its length). This permits us to prove a general bounding theorem independent of the particular abstraction (i.e., semantics) that a client may wish to use. Because of this, the complexity translation has a succinct description. For any monoid

$\rho$, syntactic recurrence extraction does not abstract values as sizes (e.g., we do not replace a list by its length). This permits us to prove a general bounding theorem independent of the particular abstraction (i.e., semantics) that a client may wish to use. Because of this, the complexity translation has a succinct description. For any monoid ![]() $(\textsf{C},+,0)$, the writer monad (Wadler Reference Wadler1992)

$(\textsf{C},+,0)$, the writer monad (Wadler Reference Wadler1992) ![]() $\textsf{C}\times -$ is a monad with

$\textsf{C}\times -$ is a monad with

The monad laws follow from the monoid laws for ![]() $\textsf{C}$. Thinking of

$\textsf{C}$. Thinking of ![]() $\textsf{C}$ as costs, these say that the cost of

$\textsf{C}$ as costs, these say that the cost of ![]() $\textsf{return}(e)$ is zero, and that the cost of bind is the sum of the cost of

$\textsf{return}(e)$ is zero, and that the cost of bind is the sum of the cost of ![]() $E_1$ and the cost of

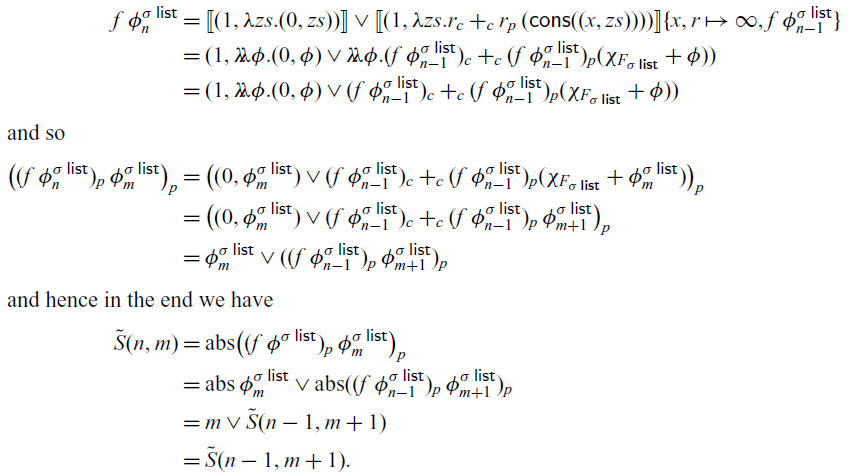

$E_1$ and the cost of ![]() $E_2$ on the potential of