1 Introduction

Answer set programming (ASP; Lifschitz, 2002) offers a rich knowledge representation language along with a powerful solving technology. As the paradigm has matured, several probabilistic extensions of ASP have been proposed, among them Lpmln (Lee and Wang, 2016), ProbLog (Raedt et al., 2007), and P-log (Baral et al., 2009).

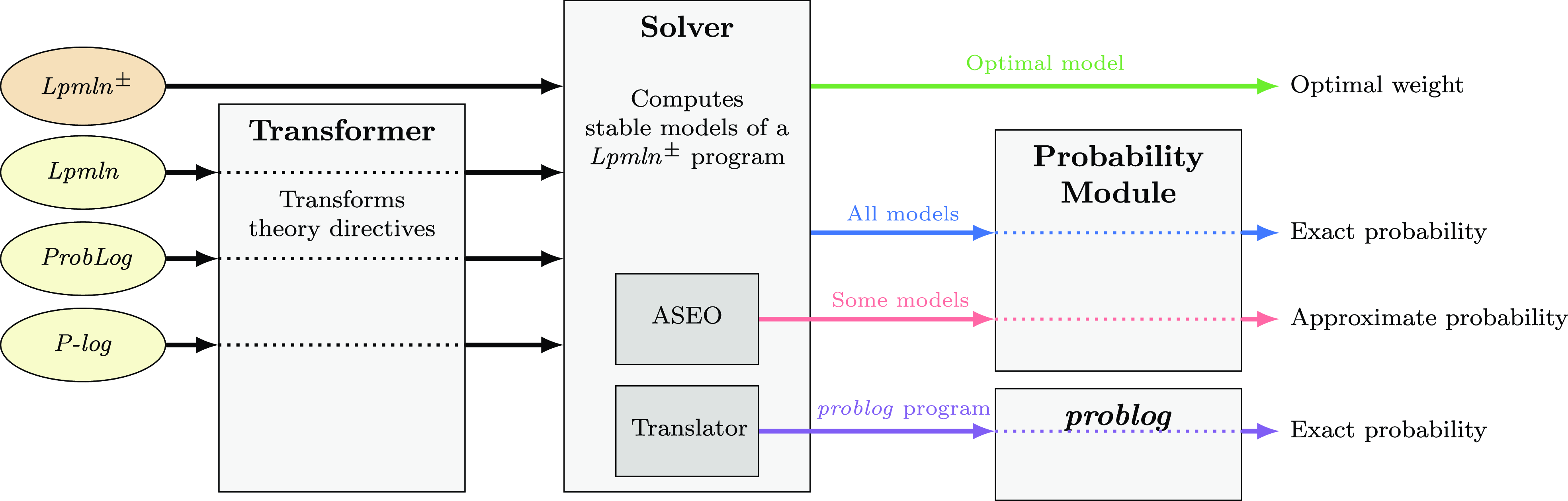

In this work, we present an extension of the ASP system clingo, called plingo, which features various probabilistic reasoning modes. Plingo is centered on

![]() $\textit{Lpmln}^{\pm }$

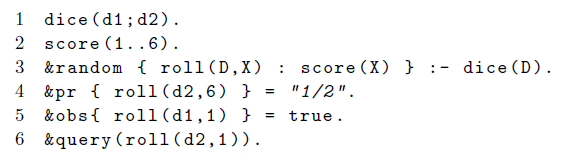

, a simple variant of the probabilistic language Lpmln, which is based upon a weighted scheme from Markov logic (Reference Richardson and DomingosRichardson and Domingos, 2006). Lpmln has already proven to be useful in several settings (Reference Lee and WangLee and Wang, 2018; Reference Ahmadi, Lee, Papotti and SaeedAhmadi et al., 2019) and it serves us also as a middle-ground formalism connecting to other probabilistic modeling languages. We rely on translations from ProbLog and P-log to Lpmln (Reference Lee and WangLee and Wang, 2016; Reference Lee and YangLee and Yang, 2017), respectively, to capture these approaches as well. In fact, Lpmln has already been implemented in the system lpmln2asp (Reference Lee, Talsania and WangLee et al., 2017) by mapping Lpmln-based reasoning into reasoning modes in clingo (viz., optimization and enumeration of stable models). As such, the core of plingo can be seen as a re-implementation of lpmln2asp that is well integrated with clingo by using its multi-shot and theory reasoning functionalities.

$\textit{Lpmln}^{\pm }$

, a simple variant of the probabilistic language Lpmln, which is based upon a weighted scheme from Markov logic (Reference Richardson and DomingosRichardson and Domingos, 2006). Lpmln has already proven to be useful in several settings (Reference Lee and WangLee and Wang, 2018; Reference Ahmadi, Lee, Papotti and SaeedAhmadi et al., 2019) and it serves us also as a middle-ground formalism connecting to other probabilistic modeling languages. We rely on translations from ProbLog and P-log to Lpmln (Reference Lee and WangLee and Wang, 2016; Reference Lee and YangLee and Yang, 2017), respectively, to capture these approaches as well. In fact, Lpmln has already been implemented in the system lpmln2asp (Reference Lee, Talsania and WangLee et al., 2017) by mapping Lpmln-based reasoning into reasoning modes in clingo (viz., optimization and enumeration of stable models). As such, the core of plingo can be seen as a re-implementation of lpmln2asp that is well integrated with clingo by using its multi-shot and theory reasoning functionalities.

In more detail, the language $\textit{Lpmln}^{\pm }$ constitutes a subset of Lpmln that restricts the form of weighted rules while being extended with ASP's regular weak constraints. This restriction allows us to partition logic programs into two independent parts: a hard part generating optimal stable models and a soft part determining the probabilities of these optimal stable models. Arguably, this separation yields a simpler semantics, which in turn leads to an easier way of modeling probabilistic logic programs. Nonetheless, it turns out that this variant is still general enough to capture full Lpmln.

The system plingo implements the language $\textit{Lpmln}^{\pm }$ within the input language of clingo. The idea is to describe the hard part in terms of normal rules and weak constraints at priority levels different from $0$, and the soft part via weak constraints at priority level $0$. This fits well with the semantics of clingo, which considers higher priority levels more important. On top of this, plingo offers three alternative frontends for Lpmln, P-log, and ProbLog, respectively, featuring dedicated language constructs that are in turn translated into the format described above. The frontends rely on the translations from ProbLog and P-log to Lpmln by Lee and Wang (2016) and Lee and Yang (2017), respectively, and on our translation from Lpmln to $\textit{Lpmln}^{\pm }$. For solving, the basic algorithm of plingo follows the approach of lpmln2asp by reducing probabilistic reasoning to clingo's regular optimization and enumeration modes. This is complemented by two additional solving methods. The first is an approximation algorithm that, given an input parameter $k$, calculates probabilities using only the $k$ most probable stable models. This algorithm takes advantage of a new, improved implementation of ASEO (Pajunen and Janhunen, 2021). The second method is based on a novel translation from $\textit{Lpmln}^{\pm }$ to ProbLog, which we introduce in this paper. The method translates the input program into a ProbLog program and then runs the system problog 2.2 (Fierens et al., 2015) on that program. Naturally, this approach benefits from current and future developments of ProbLog solvers. Interestingly, the solving techniques of problog 2.2 are quite mature, and they differ from the ones implemented in plingo, making the approach a good complement to the system.
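The idea behind the approximation can be illustrated with a short Python sketch. It is not plingo's implementation. It assumes that the candidate (optimal) stable models are already available, each with the logarithm of its weight, that is, the sum of the soft weights it satisfies. The sketch then simply renormalizes over the $k$ best of them. The function name `top_k_probabilities` is hypothetical.

```python
import math
from typing import Dict, FrozenSet, List, Tuple

Model = FrozenSet[str]


def top_k_probabilities(models: List[Tuple[Model, float]], k: int) -> Dict[Model, float]:
    """Hypothetical helper: renormalize over the k candidates with the largest log-weight.

    Each entry pairs an (optimal) stable model with the natural logarithm of
    its weight, i.e. the sum of the soft weights it satisfies.
    """
    if k < 1 or not models:
        raise ValueError("need k >= 1 and at least one model")
    best = sorted(models, key=lambda entry: entry[1], reverse=True)[:k]
    shift = best[0][1]  # subtract the maximum before exp() to avoid overflow
    scaled = [(model, math.exp(log_w - shift)) for model, log_w in best]
    total = sum(w for _, w in scaled)
    return {model: w / total for model, w in scaled}


candidates = [
    (frozenset({"a"}), 0.0),
    (frozenset({"a", "b"}), 1.0),
]
print(top_k_probabilities(candidates, 2))
print(top_k_probabilities(candidates, 1))
```

When $k$ covers all candidates, the result is the exact normalization over them. With $k = 1$, all probability mass goes to the single best model, which shows how the quality of the approximation depends on $k$.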

We have empirically evaluated plingo's performance by comparing its different solving methods with each other and with the original implementations of Lpmln, ProbLog, and P-log. The results show that the different solving approaches of plingo are indeed complementary and that plingo performs at the same level as other probabilistic reasoning systems.

There are many other probabilistic extensions of logic programming. For a recent review, we refer the reader to Cozman and Mauá (2020) and the references therein. Among the latest work most relevant to us, from the knowledge representation perspective, the credal semantics (Cozman and Mauá, 2020) and SMProblog (Totis et al., 2021) can be seen as two different generalizations of ProbLog to normal logic programs under the stable model semantics. From the implementation perspective, Eiter et al. (2021) present a method for algebraic answer set counting, implemented in the system aspmc, and show promising results on its application to probabilistic inference under the stable model semantics.

The paper is organized as follows. In Section 2, we review the necessary background material about Lpmln and ProbLog. In Section 3, we define our language $\textit{Lpmln}^{\pm }$ and introduce the translations from Lpmln to $\textit{Lpmln}^{\pm }$ and from $\textit{Lpmln}^{\pm }$ to ProbLog. We continue in Section 4 with the description of the language of the system plingo. There, we also present the frontends for Lpmln, P-log, and ProbLog, going through various examples. Section 5 is devoted to the description of the implementation of plingo, and Section 6 describes the experimental evaluation. We conclude in Section 7. The proofs of the theoretical results are available in the Appendix.

This article extends the conference paper presented in Hahn et al. (2022). It includes many new examples to illustrate the formal definitions, as well as the proofs of the formal results. Most notably, this extended version introduces the translation from $\textit{Lpmln}^{\pm }$ to ProbLog, its implementation in plingo, and its experimental evaluation using problog 2.2. The comparison with problog in Hahn et al. (2022) had shown us that problog could be much faster than plingo and the other ASP-based probabilistic systems. This extension basically turns that handicap into an opportunity for plingo.

2 Background

A logic program is a set of propositional formulas. A rule is a propositional formula of the form $H \leftarrow B$, where the head $H$ is a disjunction of literals and the body $B$ is either $\top$ or a conjunction of literals. A rule is normal if $H$ is an atom $a$, and it is a choice rule if $H$ is a disjunction $a \vee \neg a$ for some atom $a$. If $B$ is $\top$, we simply write $H$, and if the rule is normal, we call it a fact. We often identify a set of facts with the corresponding set of atoms. A normal logic program is a set of normal rules. An interpretation is a set of propositional atoms. An interpretation $X$ is a stable model of a logic program $\Pi$ if it is a subset-minimal model of the program that results from replacing in $\Pi$ every maximal subformula that is not satisfied by $X$ by $\bot$ (Ferraris, 2005). The set of stable models of a logic program $\Pi$ is denoted by $\mathit{SM}(\Pi )$.

A logic program with weak constraints is a set $\Pi _1 \cup \Pi _2$, where $\Pi _1$ is a logic program and $\Pi _2$ is a set of weak constraints of the form $ :\sim \, F[\mathit{w}, \mathit{l}]$, where $F$ is a formula, $\mathit{w}$ is a real number weight, and $\mathit{l}$ is a nonnegative integer. The cost of a stable model $X$ of $\Pi _1$ at some nonnegative integer level $l$ is the sum of the weights $w$ of the weak constraints $:\sim \, F[\mathit{w}, \mathit{l}]$ from $\Pi _2$ whose formula $F$ is satisfied by $X$. Given two stable models $X$ and $Y$ of $\Pi _1$, $X$ is preferred to $Y$ wrt. $\Pi _2$ if there is some nonnegative integer $l$ such that the cost of $X$ at $l$ is smaller than the cost of $Y$ at $l$, and for all $l^{\prime} \gt l$, the costs of $X$ and $Y$ at $l^{\prime}$ are the same. $X$ is an optimal model of $\Pi _1 \cup \Pi _2$ if it is a stable model of $\Pi _1$ and there is no stable model $Y$ of $\Pi _1$ such that $Y$ is preferred to $X$ wrt. $\Pi _2$ (Buccafurri et al., 2000).
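The preference relation above amounts to a lexicographic comparison of per-level costs, starting from the highest level. The following Python sketch makes this explicit for a given, finite list of stable models. Representing formulas as Python predicates, and the names `costs`, `preferred`, and `optimal_models`, are assumptions made only for illustration.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

Model = FrozenSet[str]
# Hypothetical encoding of a weak constraint ":~ F [w, l]" as (F, w, l), where F
# is a Python predicate telling whether the formula holds in a model.
WeakConstraint = Tuple[Callable[[Model], bool], float, int]


def costs(x: Model, weak: List[WeakConstraint]) -> Dict[int, float]:
    """Cost of x at every level mentioned in `weak` (unsatisfied constraints add 0)."""
    result: Dict[int, float] = {}
    for formula, w, level in weak:
        result.setdefault(level, 0.0)
        if formula(x):
            result[level] += w
    return result


def preferred(x: Model, y: Model, weak: List[WeakConstraint]) -> bool:
    """x is preferred to y iff, at the highest level where their costs differ,
    x has the smaller cost."""
    cx, cy = costs(x, weak), costs(y, weak)
    for level in sorted(cx, reverse=True):
        if cx[level] != cy[level]:
            return cx[level] < cy[level]
    return False


def optimal_models(models: List[Model], weak: List[WeakConstraint]) -> List[Model]:
    """Stable models of Pi_1 to which no other stable model is preferred."""
    return [x for x in models if not any(preferred(y, x, weak) for y in models)]


# Usage: three hypothetical stable models and two weak constraints.
models = [frozenset(), frozenset({"a"}), frozenset({"b"})]
weak = [
    (lambda x: "a" in x, 5.0, 0),  # :~ a [5, 0]
    (lambda x: "b" in x, 1.0, 1),  # :~ b [1, 1]
]
print(optimal_models(models, weak))
```

Here $\{a\}$ is preferred to $\{b\}$ even though its cost at level $0$ is higher, because level $1$ is compared first. The empty model has cost $0$ at both levels and is the only optimal one.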

We review the definition of Lpmln from Lee and Wang (2016). An Lpmln program $\Pi$ is a finite set of weighted formulas $w : F$, where $F$ is a propositional formula and $w$ is either a real number (in which case the weighted formula is called soft) or $\alpha$, denoting the infinite weight (in which case the weighted formula is called hard). If $\Pi$ is an Lpmln program, by $\Pi ^{\mathit{soft}}$ and $\Pi ^{\mathit{hard}}$ we denote the sets of soft and hard formulas of $\Pi$, respectively. For any Lpmln program $\Pi$ and any set $X$ of atoms, ${\overline{\Pi }}$ denotes the set of (unweighted) formulas obtained from $\Pi$ by dropping the weights, and $\Pi _X$ denotes the set of weighted formulas $w : F$ in $\Pi$ such that $X \models F$. Given an Lpmln program $\Pi$, $\mathit{SSM}(\Pi )$ denotes the set of soft stable models

\begin{align*} \mathit{SSM}(\Pi ) = \{X \mid X \textrm{ is a stable model of }{{\overline{\Pi _X}}} \}. \end{align*}

The total weight of $\Pi$, written $\mathit{TW}(\Pi )$, is defined as $\mathit{exp}(\sum _{w:F \in \Pi }w)$.

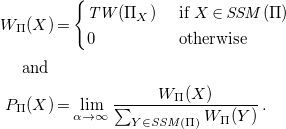

The weight $W_{\Pi }(X)$ of an interpretation $X$ and its probability $P_{\Pi }(X)$ are defined, respectively, as

\begin{align*}{W_{\Pi }(X)} & = \begin{cases}{\mathit{TW}(\Pi _X)} & \textrm{ if } X \in{\mathit{SSM}(\Pi )} \\ 0 & \textrm{ otherwise } \end{cases} \\ \textrm{ and } \\{P_{\Pi }(X)} & = \lim _{\alpha \rightarrow \infty } \frac{{W_{\Pi }(X)}}{\sum _{Y \in{\mathit{SSM}(\Pi )}}{W_{\Pi }(Y)}} \, . \end{align*}

An interpretation $X$ is called a probabilistic stable model of $\Pi$ if ${P_{\Pi }(X)} \neq 0$.
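The limit in this definition has a simple closed form. The symbol $\alpha$ occurs only through hard formulas, so the weight of each soft stable model has the form $\mathit{exp}(n_X\alpha + s_X)$. Here $n_X$ is the number of hard formulas in $\Pi_X$ and $s_X$ is the sum of the soft weights in $\Pi_X$. Let $N$ be the maximal $n_Y$ over all soft stable models $Y$. Dividing numerator and denominator by $\mathit{exp}(N\alpha)$ makes every term with $n_Y < N$ vanish in the limit:

\begin{align*} {P_{\Pi }(X)} = \begin{cases} \mathit{exp}(s_X) \big/ \sum _{Y \in{\mathit{SSM}(\Pi )},\, n_Y = N} \mathit{exp}(s_Y) & \textrm{ if } n_X = N \\ 0 & \textrm{ otherwise. } \end{cases} \end{align*}

The Python sketch below computes this closed form. Its input format, a map from each soft stable model to the pair $(n_X, s_X)$, and the function name are assumptions, and the map is assumed to be nonempty.

```python
import math
from typing import Dict, FrozenSet, Tuple

Model = FrozenSet[str]


def lpmln_probabilities(weights: Dict[Model, Tuple[int, float]]) -> Dict[Model, float]:
    """Exact limit alpha -> infinity of P(X).

    `weights` maps every soft stable model X to (n_X, s_X), meaning that
    W(X) = exp(n_X * alpha + s_X).
    """
    top = max(n for n, _ in weights.values())
    # Models with fewer satisfied hard formulas vanish in the limit.
    survivors = {x: s for x, (n, s) in weights.items() if n == top}
    shift = max(survivors.values())  # for numerical stability only
    z = sum(math.exp(s - shift) for s in survivors.values())
    return {
        x: math.exp(survivors[x] - shift) / z if x in survivors else 0.0
        for x in weights
    }


# Usage: the soft stable models of the program {alpha : a, 1 : b}.
w = {
    frozenset(): (0, 0.0),
    frozenset({"a"}): (1, 0.0),
    frozenset({"b"}): (0, 1.0),
    frozenset({"a", "b"}): (1, 1.0),
}
print(lpmln_probabilities(w))
```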

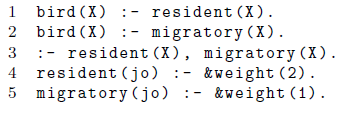

Example 1. Let $\Pi _1$ be the Lpmln program that consists of the following formulas:

\begin{align*} \alpha & : a \\ 1 & : b \end{align*}

The soft stable models of $\Pi _1$ are $\emptyset$, $\{a\}$, $\{b\}$, and $\{a,b\}$. Their weights and probabilities are as follows:

\begin{align*} \begin{array}{c|cc} X & W_{\Pi _1}(X) & P_{\Pi _1}(X) \\ \hline \emptyset & \mathit{exp}(0) & 0 \\ \{a\} & \mathit{exp}(\alpha ) & \approx 0.269 \\ \{b\} & \mathit{exp}(1) & 0 \\ \{a,b\} & \mathit{exp}(\alpha +1) & \approx 0.731 \end{array} \end{align*}

To calculate the probabilities, we first determine the denominator

\begin{align*} \sum _{Y \in{\mathit{SSM}(\Pi _1)}}{W_{\Pi _1}(Y)} = \mathit{exp}(0) + \mathit{exp}(\alpha ) + \mathit{exp}(1) + \mathit{exp}(\alpha +1) \, . \end{align*}

For the soft stable model $\{b\}$, we get

\begin{align*} {P_{\Pi _1}(\{b\})} = \lim _{\alpha \rightarrow \infty } \frac{\mathit{exp}(1)}{\mathit{exp}(0) + \mathit{exp}(\alpha ) + \mathit{exp}(1) + \mathit{exp}(\alpha +1)} \, . \end{align*}

If we apply $\lim _{\alpha \rightarrow \infty }$, we see that the denominator tends to infinity, while the numerator is a constant. Therefore, the whole expression tends to $0$ as $\alpha$ approaches infinity. In the same way, we can simplify the denominator by removing $\mathit{exp}(0)$ and $\mathit{exp}(1)$, since they become negligible compared to the terms containing $\mathit{exp}(\alpha )$. For the soft stable models $\{a\}$ and $\{a,b\}$, we then get the exact probabilities ${P_{\Pi _1}(\{a\})} = \mathit{exp}(\alpha ) / (\mathit{exp}(\alpha ) + \mathit{exp}(\alpha +1)) = 1 / (1+e)$ and ${P_{\Pi _1}(\{a,b\})} = e / (1+e)$, whose approximate values we showed above. For the soft stable model $\emptyset$, the probability is $0$ for the same reason as for $\{b\}$: its weight $\mathit{exp}(0)$ is a constant.
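The limit can also be observed numerically. We evaluate the probabilities of $\Pi_1 = \{\alpha : a,\ 1 : b\}$ for growing but finite values of $\alpha$, using the weights $\mathit{exp}(0)$, $\mathit{exp}(\alpha)$, $\mathit{exp}(1)$, and $\mathit{exp}(\alpha+1)$ of $\emptyset$, $\{a\}$, $\{b\}$, and $\{a,b\}$. The lines below illustrate the convergence only. They are not a way to compute Lpmln probabilities, and the function name is hypothetical.

```python
import math
from typing import Dict


def probabilities(alpha: float) -> Dict[str, float]:
    """Normalized weights of the soft stable models of Pi_1 for a finite alpha."""
    weights = {
        "{}": math.exp(0),
        "{a}": math.exp(alpha),
        "{b}": math.exp(1),
        "{a,b}": math.exp(alpha + 1),
    }
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}


for alpha in (1.0, 5.0, 10.0, 20.0):
    rounded = {x: round(p, 4) for x, p in probabilities(alpha).items()}
    print(f"alpha={alpha:>5}: {rounded}")

print("limits:", 1 / (1 + math.e), math.e / (1 + math.e))
```

As $\alpha$ grows, the probabilities of $\emptyset$ and $\{b\}$ shrink towards $0$. Those of $\{a\}$ and $\{a,b\}$ approach $1/(1+e)$ and $e/(1+e)$.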

Let

![]() $\Pi _2=\Pi _1 \cup \{\alpha : \neg a\}$

. The soft stable models of

$\Pi _2=\Pi _1 \cup \{\alpha : \neg a\}$

. The soft stable models of

![]() $\Pi _2$

are the same as those of

$\Pi _2$

are the same as those of

![]() $\Pi _1$

, but their weights and probabilities are different:

$\Pi _1$

, but their weights and probabilities are different:

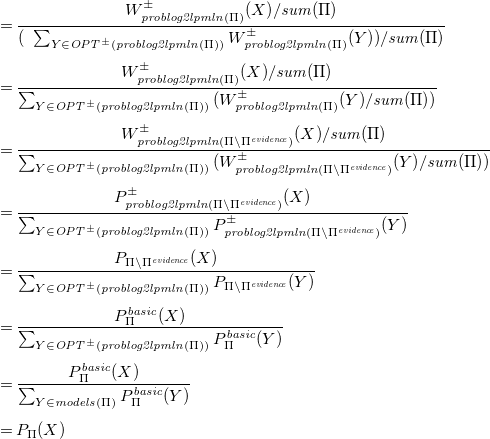

Let

![]() $\Pi _3$

and

$\Pi _3$

and

![]() $\Pi _4$

replace the formula

$\Pi _4$

replace the formula

![]() $1: b$

by the two formulas

$1: b$

by the two formulas

![]() $\alpha : b \vee \neg b$

and

$\alpha : b \vee \neg b$

and

![]() $1 : \neg \neg b$

in

$1 : \neg \neg b$

in

![]() $\Pi _1$

and

$\Pi _1$

and

![]() $\Pi _2$

, respectively, i.e.,

$\Pi _2$

, respectively, i.e.,

![]() $\Pi _3 = \{ \alpha : a, \, \alpha : b \vee \neg b, \, 1 : \neg \neg b \}$

and

$\Pi _3 = \{ \alpha : a, \, \alpha : b \vee \neg b, \, 1 : \neg \neg b \}$

and

![]() $\Pi _4=\Pi _3 \cup \{\alpha : \neg a\}$

. The soft stable models of

$\Pi _4=\Pi _3 \cup \{\alpha : \neg a\}$

. The soft stable models of

![]() $\Pi _3$

are the same as those of

$\Pi _3$

are the same as those of

![]() $\Pi _1$

, their weights are the same as in

$\Pi _1$

, their weights are the same as in

![]() $\Pi _1$

but incremented by

$\Pi _1$

but incremented by

![]() $\alpha$

, since all of them satisfy the new choice rule

$\alpha$

, since all of them satisfy the new choice rule

![]() $\alpha : b \vee \neg b$

, and their probabilities are the same as in

$\alpha : b \vee \neg b$

, and their probabilities are the same as in

![]() $\Pi _1$

. The same relation holds between the soft stable models of

$\Pi _1$

. The same relation holds between the soft stable models of

![]() $\Pi _4$

and

$\Pi _4$

and

![]() $\Pi _2$

.

$\Pi _2$

.
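For programs this small, the soft stable models can be recomputed by brute force directly from the definitions. For every candidate $X$, we collect $\Pi_X$, build the reduct by replacing maximal unsatisfied subformulas with $\bot$, and test subset-minimality. The Python sketch below does this for $\Pi_1 = \{\alpha : a,\ 1 : b\}$ through $\Pi_4$. The tuple encoding of formulas and all function names are assumptions, and the exponential enumeration is meant only to check the claims of this example.

```python
import math
from itertools import combinations
from typing import Dict, FrozenSet, Iterable, List, Tuple, Union

# Hypothetical formula encoding: ("atom", "a"), ("top",), ("bot",), ("not", F),
# ("and", F, G), ("or", F, G), ("imp", F, G).
TOP, BOT = ("top",), ("bot",)
ALPHA = "alpha"  # weight marker for hard formulas
Model = FrozenSet[str]
Program = List[Tuple[Union[float, str], tuple]]


def sat(x: Model, f: tuple) -> bool:
    tag = f[0]
    if tag == "atom":
        return f[1] in x
    if tag in ("top", "bot"):
        return tag == "top"
    if tag == "not":
        return not sat(x, f[1])
    left, right = sat(x, f[1]), sat(x, f[2])
    return {"and": left and right, "or": left or right, "imp": (not left) or right}[tag]


def reduct(f: tuple, x: Model) -> tuple:
    """Replace every maximal subformula of f not satisfied by x with bot."""
    if not sat(x, f):
        return BOT
    if f[0] in ("atom", "top"):
        return f
    if f[0] == "not":
        return TOP  # a satisfied negation F -> bot reduces to bot -> bot
    return (f[0], reduct(f[1], x), reduct(f[2], x))


def is_stable(x: Model, formulas: List[tuple]) -> bool:
    """x is a subset-minimal model of the reduct of `formulas` wrt x."""
    red = [reduct(f, x) for f in formulas]
    if not all(sat(x, g) for g in red):
        return False
    return not any(
        all(sat(frozenset(y), g) for g in red)
        for size in range(len(x))
        for y in combinations(sorted(x), size)
    )


def soft_stable_models(program: Program, atoms: Iterable[str]) -> Dict[Model, Tuple[int, float]]:
    """Map every soft stable model X to (n_X, s_X), i.e. W(X) = exp(n_X*alpha + s_X)."""
    atoms = sorted(atoms)
    result = {}
    for size in range(len(atoms) + 1):
        for chosen in combinations(atoms, size):
            x = frozenset(chosen)
            pi_x = [(w, f) for w, f in program if sat(x, f)]
            if is_stable(x, [f for _, f in pi_x]):
                n = sum(1 for w, _ in pi_x if w == ALPHA)
                s = sum(w for w, _ in pi_x if w != ALPHA)
                result[x] = (n, float(s))
    return result


def probabilities(ssm: Dict[Model, Tuple[int, float]]) -> Dict[Model, float]:
    """Limit alpha -> infinity: only models with the most hard formulas survive."""
    top = max(n for n, _ in ssm.values())
    z = sum(math.exp(s) for n, s in ssm.values() if n == top)
    return {x: math.exp(s) / z if n == top else 0.0 for x, (n, s) in ssm.items()}


a, b = ("atom", "a"), ("atom", "b")
pi1 = [(ALPHA, a), (1.0, b)]
pi2 = pi1 + [(ALPHA, ("not", a))]
pi3 = [(ALPHA, a), (ALPHA, ("or", b, ("not", b))), (1.0, ("not", ("not", b)))]
pi4 = pi3 + [(ALPHA, ("not", a))]

for name, prog in [("Pi_1", pi1), ("Pi_2", pi2), ("Pi_3", pi3), ("Pi_4", pi4)]:
    ssm = soft_stable_models(prog, {"a", "b"})
    print(name, {tuple(sorted(x)): round(p, 3) for x, p in probabilities(ssm).items()})
```

The computed pairs $(n_X, s_X)$ for $\Pi_3$ are those of $\Pi_1$ with $n_X$ increased by one, which is the factor $\mathit{exp}(\alpha)$ mentioned above. The same holds between $\Pi_4$ and $\Pi_2$.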

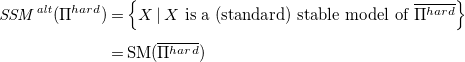

Besides the standard definition, we consider also an alternative definition for Lpmln from Reference Lee and WangLee and Wang (2016), where soft stable models must satisfy all hard formulas of

![]() $\Pi$

. In this case, we have

$\Pi$

. In this case, we have

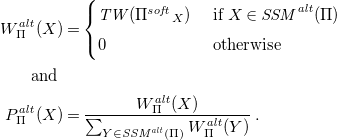

while the weight

![]() $W_{\Pi }^{\mathit{alt}}(X)$

of an interpretation and its probability

$W_{\Pi }^{\mathit{alt}}(X)$

of an interpretation and its probability

![]() $P_{\Pi }^{\mathit{alt}}(X)$

are defined, respectively, as

$P_{\Pi }^{\mathit{alt}}(X)$

are defined, respectively, as

\begin{align*}{W_{\Pi }^{\mathit{alt}}(X)} & = \begin{cases}{\mathit{TW}({\Pi ^{\mathit{soft}}}_X)} & \textrm{ if } X \in{\mathit{SSM}^{\mathit{alt}}(\Pi )} \\[3pt] 0 & \textrm{ otherwise } \end{cases} \\ \textrm{ and } \\{P_{\Pi }^{\mathit{alt}}(X)} & = \frac{{W_{\Pi }^{\mathit{alt}}(X)}}{\sum _{Y \in{\mathit{SSM}^{\mathit{alt}}(\Pi )}}{W_{\Pi }^{\mathit{alt}}(Y)}} \, . \end{align*}

\begin{align*}{W_{\Pi }^{\mathit{alt}}(X)} & = \begin{cases}{\mathit{TW}({\Pi ^{\mathit{soft}}}_X)} & \textrm{ if } X \in{\mathit{SSM}^{\mathit{alt}}(\Pi )} \\[3pt] 0 & \textrm{ otherwise } \end{cases} \\ \textrm{ and } \\{P_{\Pi }^{\mathit{alt}}(X)} & = \frac{{W_{\Pi }^{\mathit{alt}}(X)}}{\sum _{Y \in{\mathit{SSM}^{\mathit{alt}}(\Pi )}}{W_{\Pi }^{\mathit{alt}}(Y)}} \, . \end{align*}

The set

![]() $\mathit{SSM}^{\mathit{alt}}(\Pi )$

may be empty if there is no soft stable model that satisfies all hard formulas of

$\mathit{SSM}^{\mathit{alt}}(\Pi )$

may be empty if there is no soft stable model that satisfies all hard formulas of

![]() $\Pi$

, in which case

$\Pi$

, in which case

![]() $P_{\Pi }^{\mathit{alt}}(X)$

is not defined. On the other hand, if

$P_{\Pi }^{\mathit{alt}}(X)$

is not defined. On the other hand, if

![]() $\mathit{SSM}^{\mathit{alt}}(\Pi )$

is not empty, then for every interpretation

$\mathit{SSM}^{\mathit{alt}}(\Pi )$

is not empty, then for every interpretation

![]() $X$

, the values of

$X$

, the values of

![]() $P_{\Pi }^{\mathit{alt}}(X)$

and

$P_{\Pi }^{\mathit{alt}}(X)$

and

![]() $P_{\Pi }(X)$

are the same (cf. Proposition2 from Reference Lee and WangLee and Wang (2016)).

$P_{\Pi }(X)$

are the same (cf. Proposition2 from Reference Lee and WangLee and Wang (2016)).

Example 2. According to the alternative definition of Lpmln, the soft stable models of both $\Pi _1$ and $\Pi _3$ are $\{a\}$ and $\{a,b\}$. Their weights with respect to $\Pi _1$ are $\mathit{exp}(0)$ and $\mathit{exp}(1)$, respectively, and they are also $\mathit{exp}(0)$ and $\mathit{exp}(1)$ with respect to $\Pi _3$. The denominator is thus $1+e$ in both cases, and therefore their probabilities are $1/(1+e)$ and $e/(1+e)$, the same as under the standard definition. In turn, programs $\Pi _2$ and $\Pi _4$ have no soft stable models under the alternative semantics, and for this reason, the probabilities of all of their interpretations are undefined.
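The agreement between both definitions, whenever $\mathit{SSM}^{\mathit{alt}}(\Pi)$ is nonempty, has a simple computational reading. Write the weight of a soft stable model $X$ as $\mathit{exp}(n_X\alpha + s_X)$, where $n_X$ counts the hard formulas and $s_X$ sums the soft weights satisfied by $X$. Then $X$ satisfies all hard formulas exactly when $n_X$ equals the number $h$ of hard formulas of $\Pi$. If some model reaches $h$, then $h$ is also the maximal $n_X$, so both definitions keep the same models and normalize the same values $\mathit{exp}(s_X)$. The Python sketch below checks this on $\Pi_1$ and $\Pi_2$. Its function names and input format are hypothetical.

```python
import math
from typing import Dict, FrozenSet, Optional, Tuple

Model = FrozenSet[str]
Weights = Dict[Model, Tuple[int, float]]  # X -> (n_X, s_X)


def standard(ssm: Weights) -> Dict[Model, float]:
    """Limit alpha -> infinity: keep the models with the most hard formulas."""
    top = max(n for n, _ in ssm.values())
    z = sum(math.exp(s) for n, s in ssm.values() if n == top)
    return {x: math.exp(s) / z if n == top else 0.0 for x, (n, s) in ssm.items()}


def alternative(ssm: Weights, num_hard: int) -> Optional[Dict[Model, float]]:
    """P^alt: keep the models satisfying all hard formulas, weighted by the soft part."""
    kept = {x: s for x, (n, s) in ssm.items() if n == num_hard}
    if not kept:
        return None  # SSM^alt is empty, so P^alt is undefined
    z = sum(math.exp(s) for s in kept.values())
    return {x: math.exp(kept[x]) / z if x in kept else 0.0 for x in ssm}


E, A, B, AB = frozenset(), frozenset("a"), frozenset("b"), frozenset("ab")
pi1 = {E: (0, 0.0), A: (1, 0.0), B: (0, 1.0), AB: (1, 1.0)}  # 1 hard formula
pi2 = {E: (1, 0.0), A: (1, 0.0), B: (1, 1.0), AB: (1, 1.0)}  # 2 hard formulas

print(standard(pi1), alternative(pi1, 1))
print(standard(pi2), alternative(pi2, 2))
```

For $\Pi_2$, no model reaches $h = 2$. Hence the alternative probabilities are undefined, while the standard ones remain well defined.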

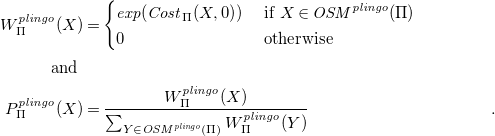

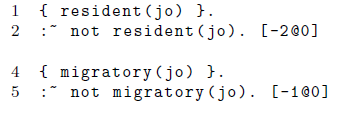

In the next paragraphs, we adapt the definition of ProbLog from Fierens et al. (2015) to our notation. A basic ProbLog program $\Pi$ consists of two parts: a set ${{\Pi }^{\mathit{normal}}}$ of normal rules and a set ${{\Pi }^{\mathit{probs}}}$ of probabilistic facts of the form $p :: a$ for some probability $p$ and some atom $a$. Without loss of generality, we consider that $p$ is strictly between $0$ and $1$. By ${{\mathit{choices}(\Pi )}}$, we denote the set $\{ a \mid p :: a \in{{{\Pi }^{\mathit{probs}}}}\}$ of atoms occurring in the probabilistic facts of such a program. We say that a basic ProbLog program is valid if it satisfies these conditions:

1. The probabilistic atoms $a \in{{{\mathit{choices}(\Pi )}}}$ must not occur in any head $H$ of any rule $H \leftarrow B$ from ${\Pi }^{\mathit{normal}}$.
2. For every set $X \subseteq{{{\mathit{choices}(\Pi )}}}$, the well-founded model (Van Gelder et al., 1991) of ${{{\Pi }^{\mathit{normal}}}}\cup X$ must be total.

The second condition holds, in particular, if ${{\Pi }^{\mathit{normal}}}$ is positive or stratified (Van Gelder et al., 1991). Note also that if the second condition holds, then the program ${{{\Pi }^{\mathit{normal}}}}\cup X$ has a unique stable model that coincides with the true atoms of its well-founded model. Following Fierens et al. (2015), we consider only basic ProbLog programs that are valid.
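Both validity conditions can be checked mechanically for small programs. The Python sketch below computes the well-founded model with the alternating fixpoint construction, one standard way of obtaining it, and then tests totality for every subset of probabilistic atoms. The rule encoding and function names are assumptions. The enumeration of subsets is exponential, so this is only an executable reading of the definition, not how ProbLog checks validity.

```python
from itertools import combinations
from typing import FrozenSet, List, Set, Tuple

# Hypothetical encoding of a normal rule  h <- p1, ..., pn, not q1, ..., not qm
Rule = Tuple[str, FrozenSet[str], FrozenSet[str]]  # (head, positive body, negative body)


def least_model(rules: List[Rule], assumed: Set[str]) -> Set[str]:
    """Least model of the Gelfond-Lifschitz reduct of `rules` wrt `assumed`."""
    model: Set[str] = set()
    changed = True
    while changed:
        changed = False
        for head, pos, neg in rules:
            if head not in model and pos <= model and not (neg & assumed):
                model.add(head)
                changed = True
    return model


def well_founded(rules: List[Rule]) -> Tuple[Set[str], Set[str]]:
    """Alternating fixpoint: returns (true atoms, true-or-undefined atoms)."""
    true: Set[str] = set()
    while True:
        possible = least_model(rules, true)
        new_true = least_model(rules, possible)
        if new_true == true:
            return true, possible
        true = new_true


def is_valid(normal: List[Rule], choices: Set[str]) -> bool:
    # Condition 1: probabilistic atoms do not occur in rule heads.
    if any(head in choices for head, _, _ in normal):
        return False
    # Condition 2: the well-founded model of normal + X is total for every X.
    for size in range(len(choices) + 1):
        for subset in combinations(sorted(choices), size):
            facts = [(atom, frozenset(), frozenset()) for atom in subset]
            true, possible = well_founded(normal + facts)
            if true != possible:
                return False
    return True


# Usage: {0.4 :: a, b <- not a} is valid; an even loop through negation is not.
print(is_valid([("b", frozenset(), frozenset({"a"}))], {"a"}))
loop = [("p", frozenset(), frozenset({"q"})), ("q", frozenset(), frozenset({"p"}))]
print(is_valid(loop, {"a"}))
```

In the second usage line, the atoms $p$ and $q$ remain undefined in the well-founded model, so condition 2 fails.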

Given a basic ProbLog program $\Pi$, the probability $P_{\Pi }(X)$ of an interpretation $X$ is defined as follows:

• If $X$ is the (unique) stable model of ${{{\Pi }^{\mathit{normal}}}}\cup (X \cap{{{\mathit{choices}(\Pi )}}})$, then $P_{\Pi }(X)$ is the product of the two products
\begin{align*} \prod _{\substack{\phantom{a}p :: a \in{{{\Pi }^{\mathit{probs}}}} \\ a \in X}}p \textrm{ and } \prod _{\substack{\phantom{a}p :: a \in{{{\Pi }^{\mathit{probs}}}} \\ a \notin X}}(1 - p). \end{align*}
• Otherwise, $P_{\Pi }(X)$ is $0$.
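The definition translates almost literally into code. We check that $X$ is a stable model of $\Pi^{\mathit{normal}} \cup (X \cap \mathit{choices}(\Pi))$ via the Gelfond-Lifschitz reduct, and if so, we multiply $p$ or $1-p$ for every probabilistic fact. The Python sketch below assumes a valid basic program, so that this stable model is unique, and uses a hypothetical rule encoding. It is not how the problog system computes probabilities.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Rule = Tuple[str, FrozenSet[str], FrozenSet[str]]  # (head, positive body, negative body)


def least_model(rules: List[Rule], assumed: Set[str]) -> Set[str]:
    """Least model of the Gelfond-Lifschitz reduct of `rules` wrt `assumed`."""
    model: Set[str] = set()
    changed = True
    while changed:
        changed = False
        for head, pos, neg in rules:
            if head not in model and pos <= model and not (neg & assumed):
                model.add(head)
                changed = True
    return model


def problog_probability(normal: List[Rule], probs: Dict[str, float], x: Set[str]) -> float:
    """P(X) for a valid basic ProbLog program (normal rules, probabilistic facts)."""
    chosen = set(x) & set(probs)
    facts = [(atom, frozenset(), frozenset()) for atom in chosen]
    # X must be a stable model of normal + (X intersected with the choices).
    if least_model(normal + facts, set(x)) != set(x):
        return 0.0
    result = 1.0
    for atom, p in probs.items():
        result *= p if atom in x else 1.0 - p
    return result


normal = [("b", frozenset(), frozenset({"a"}))]  # b <- not a
probs = {"a": 0.4}  # 0.4 :: a
for x in [set(), {"a"}, {"b"}, {"a", "b"}]:
    print(sorted(x), problog_probability(normal, probs, x))
```

On the program $\{0.4 :: a,\ b \leftarrow \neg a\}$, the sketch gives probability $0.4$ for $\{a\}$, $0.6$ for $\{b\}$, and $0$ for the other two interpretations.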

Example 3. Let $\Pi _6$ be the ProbLog program that consists of the following elements:

\begin{align*} 0.4 &:: a \\ b &\leftarrow \neg a \end{align*}

We have that ${{{\Pi }_{6}^{\mathit{probs}}}}=\{ 0.4 :: a\}$, ${{{\Pi }_{6}^{\mathit{normal}}}}=\{ b \leftarrow \neg a\}$, and ${{{\mathit{choices}(\Pi _6)}}}=\{a\}$. Program $\Pi _6$ is valid because it satisfies both condition 1, since the unique atom $a \in{{{\mathit{choices}(\Pi _6)}}}$ does not occur in the head of the unique rule of ${{\Pi }_{6}^{\mathit{normal}}}$, and condition 2, since the program ${{\Pi }_{6}^{\mathit{normal}}}$ is stratified. The interpretations $\{a\}$ and $\{b\}$ have probability $0.4$ and $0.6$, respectively, and the others have probability $0$.
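On small programs, the definition above can be checked by brute force. The following Python sketch is a hypothetical illustration, not part of plingo or ProbLog: it represents a normal rule as a triple (head, positive body, negative body), tests whether $X$ is a stable model by comparing it with the least model of the reduct, and enumerates all interpretations over a given set of atoms. It assumes the program is valid, as required above. Applied to $\Pi _6$, it should reproduce the probabilities of Example 3.

```python
from itertools import chain, combinations
from math import prod

# A normal rule is a triple (head, positive_body, negative_body) of atom names.
# For example, b <- not a is written ("b", (), ("a",)).

def least_model(rules):
    """Least model of a program whose negative bodies are ignored (already reduced)."""
    model = set()
    changed = True
    while changed:
        changed = False
        for head, pos, _neg in rules:
            if head not in model and set(pos) <= model:
                model.add(head)
                changed = True
    return model

def is_stable_model(rules, x):
    """X is a stable model iff it equals the least model of the reduct w.r.t. X."""
    reduct = [(h, pos, ()) for h, pos, neg in rules if not set(neg) & x]
    return least_model(reduct) == x

def interpretations(atoms):
    """All subsets of `atoms`, as Python sets."""
    ordered = sorted(atoms)
    return [set(c) for c in chain.from_iterable(
        combinations(ordered, k) for k in range(len(ordered) + 1))]

def basic_problog_probability(probs, normal, x):
    """P_Pi(X) for a valid basic ProbLog program.

    probs  -- dict mapping each atom a with p :: a in Pi^probs to p
    normal -- list of normal rules (Pi^normal)
    x      -- the interpretation X, a set of atoms
    """
    x = set(x)
    facts = [(a, (), ()) for a in probs if a in x]  # X ∩ choices(Pi) as facts
    if not is_stable_model(list(normal) + facts, x):
        return 0.0
    return prod(p if a in x else 1 - p for a, p in probs.items())

# Pi_6 = {0.4 :: a,  b <- not a}
probs6 = {"a": 0.4}
normal6 = [("b", (), ("a",))]
for x in interpretations({"a", "b"}):
    print(sorted(x), basic_problog_probability(probs6, normal6, x))
```

The loop should assign $0.4$ to $\{a\}$, $0.6$ to $\{b\}$, and $0$ to the remaining interpretations. The reduct-based stability test follows the textbook definition of stable models and is only practical for a handful of atoms.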

In Fierens et al. (2015), the definition of an inference task may include the specification of some evidence. Here, to simplify the presentation, we include the evidence as part of a ProbLog program. To do this, we represent the evidence by formulas of the form $\neg a$ or $\neg \neg a$ for some atom $a$, which we call evidence literals. We use evidence literals of the form $\neg \neg a$, instead of normal atoms of the form $a$, to distinguish them clearly from normal facts and to simplify our presentation later. Then, we consider extended ProbLog programs that contain a set ${{\Pi }^{\mathit{evidence}}}$ of evidence literals in addition to normal rules and probabilistic facts. Both the notation and the definition of validity introduced above carry over naturally to ProbLog programs of this extended form. As before, we consider only valid extended ProbLog programs. Next, let $\Pi$ be an extended ProbLog program. If $X$ is an interpretation, by $P^{\mathit{basic}}_{\Pi }(X)$ we denote the probability $P_{{{{\Pi }^{\mathit{normal}}}}\cup{{{\Pi }^{\mathit{probs}}}}}(X)$ of the corresponding basic ProbLog program. Then, the probability of the evidence of $\Pi$ is the sum of the basic probabilities $P^{\mathit{basic}}_{\Pi }(X)$ of the interpretations $X$ that satisfy all evidence literals in ${{\Pi }^{\mathit{evidence}}}$. Finally, given an extended ProbLog program $\Pi$, the probability $P_{\Pi }(X)$ of an interpretation $X$ is

• undefined if the probability of the evidence of $\Pi$ is zero; otherwise,

• it is $0$ if $X$ does not satisfy all evidence literals in ${{\Pi }^{\mathit{evidence}}}$; and otherwise,

• it is the quotient between $P^{\mathit{basic}}_{\Pi }(X)$ and the probability of the evidence of $\Pi$.

Basic ProbLog programs are a special case of extended ProbLog programs where ${{\Pi }^{\mathit{evidence}}}$ is empty. From now on, we refer to extended ProbLog programs simply as ProbLog programs.

Example 4. Let $\Pi _7=\Pi _6\cup \{\neg b\}$ be the ProbLog program that extends $\Pi _6$ by the evidence literal $\neg b$. It holds that $P^{\mathit{basic}}_{\Pi _7}(X)$ is the same as $P_{\Pi _6}(X)$ for all interpretations $X$. Given this, the only interpretation of $\Pi _7$ with basic probability greater than $0$ that satisfies the evidence literal $\neg b$ is $\{a\}$, whose basic probability is $0.4$. Hence, the probability of the evidence of $\Pi _7$ is $0.4$. Then, the probability of the interpretation $\{a\}$ is $1$, and it is $0$ for all other interpretations. If we replace the evidence literal $\neg b$ by $\neg \neg b$, then $\{b\}$ has probability $1$, and the others have probability $0$. Finally, if we add both $\neg b$ and $\neg \neg b$ at the same time, then no interpretation satisfies the evidence, its probability is $0$, and the probabilities of all interpretations become undefined.

We close this section with the definition of the probability of a query atom $q$, which is similar in both versions of Lpmln and in ProbLog: it is undefined if the probability of the interpretations of the corresponding program is undefined (which cannot happen under the standard semantics of Lpmln), and otherwise it is the sum of the probabilities of the interpretations that contain the query atom.
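Evidence conditioning and query probabilities can be layered on top of the same brute-force idea. The sketch below is again hypothetical and purely illustrative: evidence literals are encoded as pairs `(atom, positive)`, where `positive=True` stands for $\neg \neg a$ and `False` for $\neg a$, and `None` plays the role of an undefined probability. It is meant to reproduce the three cases of Example 4 and the query probability of $b$ in $\Pi _6$.

```python
from itertools import chain, combinations
from math import prod

# Rules are (head, positive_body, negative_body), as in the previous sketch.
def least_model(rules):
    model, changed = set(), True
    while changed:
        changed = False
        for head, pos, _neg in rules:
            if head not in model and set(pos) <= model:
                model.add(head)
                changed = True
    return model

def is_stable_model(rules, x):
    reduct = [(h, pos, ()) for h, pos, neg in rules if not set(neg) & x]
    return least_model(reduct) == x

def interpretations(atoms):
    ordered = sorted(atoms)
    return [set(c) for c in chain.from_iterable(
        combinations(ordered, k) for k in range(len(ordered) + 1))]

def basic_probability(probs, normal, x):
    """P^basic_Pi(X): probability of X in the basic program Pi^normal ∪ Pi^probs."""
    facts = [(a, (), ()) for a in probs if a in x]
    if not is_stable_model(list(normal) + facts, x):
        return 0.0
    return prod(p if a in x else 1 - p for a, p in probs.items())

def satisfies(x, evidence):
    """(a, True) encodes the evidence literal ¬¬a, (a, False) encodes ¬a."""
    return all((atom in x) == positive for atom, positive in evidence)

def evidence_probability(probs, normal, evidence, atoms):
    return sum(basic_probability(probs, normal, x)
               for x in interpretations(atoms) if satisfies(x, evidence))

def probability(probs, normal, evidence, atoms, x):
    """P_Pi(X) for an extended ProbLog program; None means 'undefined'."""
    z = evidence_probability(probs, normal, evidence, atoms)
    if z == 0:
        return None
    if not satisfies(x, evidence):
        return 0.0
    return basic_probability(probs, normal, x) / z

def query_probability(probs, normal, evidence, atoms, q):
    """Sum of P_Pi(X) over the interpretations X that contain the query atom q."""
    if evidence_probability(probs, normal, evidence, atoms) == 0:
        return None
    return sum(probability(probs, normal, evidence, atoms, x)
               for x in interpretations(atoms) if q in x)

probs, normal, atoms = {"a": 0.4}, [("b", (), ("a",))], {"a", "b"}
print(probability(probs, normal, [("b", False)], atoms, {"a"}))                # Pi_7
print(query_probability(probs, normal, [], atoms, "b"))                        # Pi_6, query b
print(probability(probs, normal, [("b", False), ("b", True)], atoms, {"a"}))   # undefined
```

Enumerating every interpretation is exponential in the number of atoms; it only serves to make the definitions concrete.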

3 The language $\textit{Lpmln}^{\pm }$

In this section, we introduce the language $\textit{Lpmln}^{\pm }$ and present translations from Lpmln to $\textit{Lpmln}^{\pm }$, and from $\textit{Lpmln}^{\pm }$ to ProbLog. The former are used in the frontends of Lpmln, ProbLog and P-log, combined with the translations from ProbLog and P-log to Lpmln from Lee and Wang (2016) and Lee and Yang (2017), respectively. The latter is used in the solving component of plingo to translate from $\textit{Lpmln}^{\pm }$ to ProbLog and run a problog solver.

The language $\textit{Lpmln}^{\pm }$ is based on Lpmln under the alternative semantics. The superscript $\pm$ in the name indicates that the new language both extends and restricts Lpmln. The extension simply consists of adding weak constraints to the language. This is a natural extension that allows us to capture the whole Lpmln language under both the alternative and the standard semantics. On the other hand, the restriction limits the form of soft formulas to soft integrity constraints of the form $w : \neg F$ for some propositional formula $F$. This is attractive because it allows us to provide a definition of the semantics that is arguably very simple and intuitive. Interestingly, the translations from ProbLog and P-log (Lee and Wang, 2016; Lee and Yang, 2017) fall into this fragment of Lpmln. Recall that in ASP, integrity constraints of the form $\neg F$ do not affect the generation of stable models; they can only eliminate some of the stable models generated by the rest of the program. In Lpmln, soft integrity constraints play a parallel role: they do not affect the generation of soft stable models; they can only affect the probabilistic weights of the soft stable models generated by the rest of the program. More precisely, the soft stable models of an Lpmln program $\Pi$ remain the same if we remove from $\Pi$ all its soft integrity constraints. The reader can check that this is the case in our example programs $\Pi _3$ and $\Pi _4$ if we remove their soft integrity constraint $1 : \neg \neg b$. This observation leads us to the following proposition.

Proposition 1.

If $\Pi$ is an Lpmln program such that $\Pi ^{\mathit{soft}}$ contains only soft integrity constraints, then ${\mathit{SSM}^{\mathit{alt}}(\Pi )}={\mathit{SM}({{\overline{{\Pi ^{\mathit{hard}}}}}})}$.

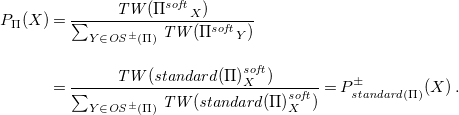

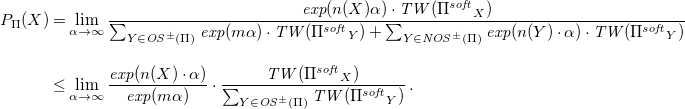

This allows us to leave aside the notion of soft stable models and simply replace, in $W_{\Pi }^{\mathit{alt}}(X)$ and $P_{\Pi }^{\mathit{alt}}(X)$, the set $\mathit{SSM}^{\mathit{alt}}(\Pi )$ by $\mathit{SM}({{\overline{{\Pi ^{\mathit{hard}}}}}})$. From this perspective, an Lpmln program of this restricted form has two separate parts: $\Pi ^{\mathit{hard}}$, which generates stable models, and $\Pi ^{\mathit{soft}}$, which determines the weights of the stable models, from which their probabilities can be calculated.

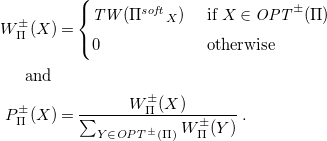

With these ideas, we can define the syntax and semantics of $\textit{Lpmln}^{\pm }$ programs. Formally, an $\textit{Lpmln}^{\pm }$ program $\Pi$ is a set of hard formulas, soft integrity constraints, and weak constraints, denoted, respectively, by $\Pi ^{\mathit{hard}}$, $\Pi ^{\mathit{soft}}$, and $\Pi ^{\mathit{weak}}$. In what follows, we may identify a hard formula or a set of them with their corresponding unweighted versions. We say that $\Pi$ is normal if $\Pi ^{\mathit{hard}}$ is normal. By $\mathit{OPT}^{{\pm }}(\Pi )$ we denote the optimal stable models of ${{\overline{{\Pi ^{\mathit{hard}}}}}} \cup{\Pi ^{\mathit{weak}}}$. Then, the weight and the probability of an interpretation $X$, written $W_{\Pi }^{{\pm }}(X)$ and $P_{\Pi }^{{\pm }}(X)$, are defined as

\begin{align*}{W_{\Pi }^{{\pm }}(X)} & = \begin{cases}{\mathit{TW}({\Pi ^{\mathit{soft}}}_X)} & \textrm{ if } X \in{\mathit{OPT}^{{\pm }}(\Pi )} \\[3pt] 0 & \textrm{ otherwise } \end{cases} \\ \textrm{ and } \\{P_{\Pi }^{{\pm }}(X)} & = \frac{{W_{\Pi }^{{\pm }}(X)}}{\sum _{Y \in{\mathit{OPT}^{{\pm }}(\Pi )}}{W_{\Pi }^{{\pm }}(Y)}} \, . \end{align*}

Note that, as before, $\mathit{OPT}^{{\pm }}(\Pi )$ may be empty, in which case $P_{\Pi }^{{\pm }}(X)$ is not defined. Naturally, when $\Pi ^{\mathit{weak}}$ is empty, the semantics coincides with the alternative semantics for Lpmln. In this case, $\mathit{OPT}^{{\pm }}(\Pi )$ is equal to $\mathit{SM}({{\overline{{\Pi ^{\mathit{hard}}}}}})$, which by Proposition 1 is equal to $\mathit{SSM}^{\mathit{alt}}(\Pi )$, and both definitions are the same.

Example 5. Programs $\Pi _1$ and $\Pi _2$ are not $\textit{Lpmln}^{\pm }$ programs because they contain the soft formula $1 : b$, which is not a soft integrity constraint. On the other hand, $\Pi _3$ and $\Pi _4$ are $\textit{Lpmln}^{\pm }$ programs, and they define the same probabilities as under the alternative semantics of Lpmln. Let us introduce the $\textit{Lpmln}^{\pm }$ program $\Pi _5$ that replaces in $\Pi _3$ the formula $\alpha : a$ by the formulas $\alpha : a \vee \neg a$ and $:\sim \, a [-1,1]$, that is, $\Pi _5 = \{ \alpha : a \vee \neg a, \, :\sim \, a [-1,1], \, \alpha : b \vee \neg b, \, 1 : \neg \neg b \}$. The hard part of $\Pi _5$ has the four stable models $\emptyset$, $\{a\}$, $\{b\}$, and $\{a,b\}$, and the weak constraint prefers those that contain $a$. Hence, the set $\mathit{OPT}^{{\pm }}(\Pi _5)$ consists of the models $\{a\}$ and $\{a,b\}$, whose weights are $\mathit{exp}(0)$ and $\mathit{exp}(1)$, respectively, and whose probabilities are the same as in $\Pi _3$.
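The definition of $W_{\Pi }^{{\pm }}$ and $P_{\Pi }^{{\pm }}$ can be evaluated directly once the stable models of the hard part are known. In the following hypothetical sketch, the stable models of $\overline{\Pi ^{\mathit{hard}}}$ are listed by hand (a real system would compute them with an ASP solver), weak constraints are triples (condition, weight, level) whose costs are minimized lexicographically with higher levels first, and soft formulas are pairs (weight, condition). Applied to $\Pi _5$, it should yield the two optimal models of Example 5.

```python
from math import exp

def optimal_models(stable_models, weak):
    """OPT±: stable models with lexicographically minimal weak-constraint cost.

    weak -- list of (condition, weight, level); condition is a predicate on X.
    Higher levels take priority, as in clingo.
    """
    if not stable_models:
        return []
    levels = sorted({level for _, _, level in weak}, reverse=True)

    def cost(x):
        return tuple(sum(w for cond, w, lvl in weak if lvl == level and cond(x))
                     for level in levels)

    best = min(cost(x) for x in stable_models)
    return [x for x in stable_models if cost(x) == best]

def weight(x, soft):
    """TW(Pi^soft_X): exp of the sum of the weights of the soft formulas X satisfies."""
    return exp(sum(w for w, cond in soft if cond(x)))

def probabilities(stable_models, soft, weak):
    """P±(X) for each X in OPT± (all other interpretations get 0); None if undefined."""
    opt = optimal_models(stable_models, weak)
    if not opt:
        return None
    total = sum(weight(x, soft) for x in opt)
    return {frozenset(x): weight(x, soft) / total for x in opt}

# Pi_5: the hard part {a ∨ ¬a, b ∨ ¬b} has every subset of {a, b} as a stable model.
stable5 = [set(), {"a"}, {"b"}, {"a", "b"}]
weak5 = [(lambda x: "a" in x, -1, 1)]   # :~ a [-1,1]
soft5 = [(1, lambda x: "b" in x)]       # 1 : ¬¬b holds iff b ∈ X
for model, p in probabilities(stable5, soft5, weak5).items():
    print(sorted(model), round(p, 4))
```

The two optimal models $\{a\}$ and $\{a,b\}$ receive probabilities $1/(1+e) \approx 0.269$ and $e/(1+e) \approx 0.731$, respectively.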

3.1 From Lpmln to $\textit{Lpmln}^{\pm }$

We translate Lpmln programs to $\textit{Lpmln}^{\pm }$ programs following the idea of the translation lpmln2wc from Lee and Yang (2017). An Lpmln program $\Pi$ under the standard semantics is captured by the $\textit{Lpmln}^{\pm }$ program $\mathit{standard}(\Pi )$ that contains

• the hard formulas $ \{ \alpha : F \vee \neg F \mid w:F \in \Pi \}$,

• the soft formulas $\{ w : \neg \neg F \mid w :F \in \Pi, w \neq \alpha \}$, and

• the weak constraints $\{ :\sim \, F [-1,1] \mid w : F \in \Pi, w = \alpha \}$.

The hard formulas generate the soft stable models of $\Pi$, the weak constraints select those that satisfy the largest number of hard formulas of $\Pi$, and the soft formulas attach the right weight to each of them without interfering with their generation. The alternative semantics is captured by the translation $\mathit{alternative}(\Pi )$ that contains

• the hard formulas $\{ \alpha : F \mid w:F \in \Pi, w = \alpha \} \cup \{ \alpha : F \vee \neg F \mid w:F \in \Pi, w \neq \alpha \}$,

• the same soft formulas as in $\mathit{standard}(\Pi )$, and

• no weak constraints.

The first set of hard formulas enforces that the hard formulas of $\Pi$ are satisfied, while the second set is the same as in $\mathit{standard}(\Pi )$, but restricted to the soft formulas of $\Pi$. The weak constraints are no longer needed.
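Both translations are purely syntactic, so they are easy to prototype. In this hypothetical Python sketch (not plingo's implementation), formulas are nested tuples, the string `"alpha"` marks hard weights, and weak constraints are triples (formula, weight, level). It takes $\Pi _1 = \{\alpha : a, \, 1 : b\}$, which is consistent with the programs $\Pi _3$ and $\Pi _5$ in Example 6.

```python
# Formulas: an atom is a string, ("not", F) is ¬F, and ("or", F, G) is F ∨ G.
# An Lpmln program is a list of (weight, formula) pairs; ALPHA marks hard formulas.
ALPHA = "alpha"

def neg(f):
    return ("not", f)

def disj(f, g):
    return ("or", f, g)

def standard(program):
    """Lpmln± program capturing the standard semantics of an Lpmln program."""
    return {
        "hard": [disj(f, neg(f)) for _, f in program],
        "soft": [(w, neg(neg(f))) for w, f in program if w != ALPHA],
        "weak": [(f, -1, 1) for w, f in program if w == ALPHA],  # :~ F [-1,1]
    }

def alternative(program):
    """Lpmln± program capturing the alternative semantics of an Lpmln program."""
    return {
        "hard": [f for w, f in program if w == ALPHA]
                + [disj(f, neg(f)) for w, f in program if w != ALPHA],
        "soft": [(w, neg(neg(f))) for w, f in program if w != ALPHA],
        "weak": [],
    }

def show(f):
    """Pretty-print a formula."""
    if isinstance(f, str):
        return f
    if f[0] == "not":
        return "¬" + show(f[1])
    return f"({show(f[1])} ∨ {show(f[2])})"

pi1 = [(ALPHA, "a"), (1, "b")]   # Pi_1 = {α : a, 1 : b}
print([show(f) for f in standard(pi1)["hard"]])
print([show(f) for f in alternative(pi1)["hard"]])
```

Here `standard(pi1)` yields the components of $\Pi _5$ and `alternative(pi1)` those of $\Pi _3$.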

Proposition 2.

Let $\Pi$ be an Lpmln program. For every interpretation $X$, it holds that

\begin{align*} &{P_{\Pi }(X)} ={P_{{\mathit{standard}(\Pi )}}^{{\pm }}(X)} \quad \textrm{ and } \\ &{P_{\Pi }^{\mathit{alt}}(X)} ={P_{{\mathit{alternative}(\Pi )}}^{{\pm }}(X)} \, . \end{align*}

Example 6. The $\textit{Lpmln}^{\pm }$ programs $\Pi _3$, $\Pi _4$, and $\Pi _5$ of our examples are the result of applying the previous translations to the Lpmln programs $\Pi _1$ and $\Pi _2$. Namely, $\Pi _3$ is $\mathit{alternative}(\Pi _1)$, $\Pi _4$ is $\mathit{alternative}(\Pi _2)$, and $\Pi _5$ is $\mathit{standard}(\Pi _1)$. Accordingly, for all interpretations $X$, it holds that ${P_{\Pi _1}^{\mathit{alt}}(X)}={P_{\Pi _3}^{{\pm }}(X)}$, ${P_{\Pi _2}^{\mathit{alt}}(X)}={P_{\Pi _4}^{{\pm }}(X)}$, and ${P_{\Pi _1}(X)}={P_{\Pi _5}^{{\pm }}(X)}$. The program $\mathit{standard}(\Pi _2)$ is $\Pi _5\cup \{\alpha : \neg a \vee \neg \neg a, \, :\sim \, \neg a [-1,1]\}$.

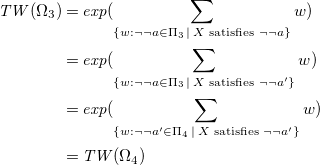

As noted in Lee et al. (2017), these kinds of translations can be troublesome when applied to logic programs with variables in the input language of clingo (Calimeri et al., 2020). This is the case for the Lpmln frontend in plingo, where the rules at the input can be seen as safe implications $H \leftarrow B$, where $H$ is a disjunction and $B$ a conjunction of first-order atoms. It is hard to see how to apply the previous translations in such a way that the resulting soft formulas and weak constraints belong to the input language of clingo, since the result has to satisfy clingo's safety conditions. For instance, if we try to apply the $\mathit{standard}$ translation to the hard rule a(X) :- b(X)., a possible approach could generate the two weak constraints :~ a(X). [-1,X] and :~ not b(X). [-1,X], but the second of them is not safe and will not be accepted by clingo. To overcome this problem, we can use the negative versions of the previous translations, based on the translation lpmln2wc$^{pnt}$ from Lee and Yang (2017), where the soft formulas for both translations are

\begin{align*} \{ -w : \neg F \mid w : F \in \Pi, w \neq \alpha \} \end{align*}

and the weak constraints for the standard semantics are

\begin{align*} \{ :\sim \, \neg F [1,1] \mid w : F \in \Pi, w = \alpha \}. \end{align*}

Observe that now $F$ always occurs under one negation. In this case, when $F$ has the form $H \leftarrow B$, the formula $\neg F$ can simply be written as $\neg H \wedge B$, and this formulation can be easily incorporated into clingo. For instance, a(X) :- b(X). is translated in this way to :~ not a(X), b(X). [1,X], which is safe, since X occurs in the positive literal b(X), and is accepted by clingo. These negative versions are the result of applying to $\mathit{standard}(\Pi )$ and $\mathit{alternative}(\Pi )$ the translation of the following proposition, and then simplifying the soft formulas of the form $-w : \neg \neg \neg F$ to $-w : \neg F$.
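The safety problem and its negative-version fix can be reproduced with plain string manipulation. The following Python sketch is hypothetical (it is not plingo's code) and uses a deliberately simplified safety check: a variable counts as bound only if it occurs in a positive literal, which ignores many details of clingo's actual safety conditions. It handles rules with a single head atom, emits weak constraints in clingo syntax, and reports whether each one passes the simplified check. Both positive constraints share the tuple `[-1,X]`. Since clingo sums over distinct weight tuples, a ground instance that satisfies both disjuncts is rewarded only once.

```python
import re

# Simplification: a variable is an identifier starting with an uppercase letter.
VARIABLE = re.compile(r"\b[A-Z][A-Za-z0-9_]*\b")

def variables(text):
    return set(VARIABLE.findall(text))

def is_safe(literals, terms):
    """Simplified safety: every variable must occur in some positive literal."""
    bound = set()
    for lit in literals:
        if not lit.startswith("not "):
            bound |= variables(lit)
    used = set()
    for text in list(literals) + list(terms):
        used |= variables(text)
    return used <= bound

def weak_constraint(literals, weight, terms):
    return f":~ {', '.join(literals)}. [{weight},{','.join(terms)}]"

def positive_version(head, body, terms):
    """:~ F [-1,...] for F = head <- body: one constraint per disjunct of head ∨ ¬body."""
    disjuncts = [[head]] + [["not " + b] for b in body]
    return [(weak_constraint(d, -1, terms), is_safe(d, terms)) for d in disjuncts]

def negative_version(head, body, terms):
    """:~ ¬F [1,...], with ¬F written as ¬head ∧ body."""
    literals = ["not " + head] + list(body)
    return weak_constraint(literals, 1, terms), is_safe(literals, terms)

# The hard rule a(X) :- b(X).
print(positive_version("a(X)", ["b(X)"], ["X"]))
print(negative_version("a(X)", ["b(X)"], ["X"]))
```

The positive version produces `:~ not b(X). [-1,X]`, which fails the check, while the negative version produces the safe `:~ not a(X), b(X). [1,X]`.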

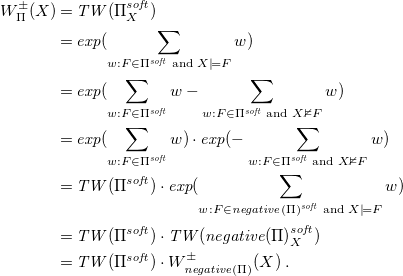

Proposition 3.

Given an $\textit{Lpmln}^{\pm }$ program $\Pi$, let $\mathit{negative}(\Pi )$ be the program

For every interpretation $X$, it holds that $P_{\Pi }^{{\pm }}(X)$ and $P_{{\mathit{negative}(\Pi )}}^{{\pm }}(X)$ coincide.

This proposition is closely related to Corollary 1 from Lee and Yang (2017).

Example 7. The program $\mathit{negative}(\Pi _5)$ is $\{ \alpha : a \vee \neg a, \, :\sim \, \neg a [1,1], \, \alpha : b \vee \neg b, \, -1 : \neg \neg \neg b \}$. Its last formula can be simplified to $-1 : \neg b$. The optimal stable models of this program are $\{a\}$ and $\{a,b\}$, their weights are $\mathit{exp}(-1)$ and $\mathit{exp}(0)$, respectively, and their probabilities are the same as in $\Pi _5$, since both weights differ from those in $\Pi _5$ by the same factor $\mathit{exp}(-1)$, which cancels out in the normalization.
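Proposition 3 can be sanity-checked numerically on Example 7. This hypothetical sketch uses a brute-force evaluator of the $\textit{Lpmln}^{\pm }$ semantics, with the stable models of the hard part $\{a \vee \neg a, \, b \vee \neg b\}$ listed by hand, and compares $\Pi _5$ with $\mathit{negative}(\Pi _5)$. Negating the weak constraint shifts every cost by the same constant, and negating the soft formula multiplies every weight by $\mathit{exp}(-1)$. The optimal models and the normalized probabilities should therefore coincide.

```python
from math import exp

def optimal_models(stable_models, weak):
    """Stable models with lexicographically minimal cost (higher levels first)."""
    if not stable_models:
        return []
    levels = sorted({level for _, _, level in weak}, reverse=True)

    def cost(x):
        return tuple(sum(w for cond, w, lvl in weak if lvl == level and cond(x))
                     for level in levels)

    best = min(cost(x) for x in stable_models)
    return [x for x in stable_models if cost(x) == best]

def probabilities(stable_models, soft, weak):
    """Normalized weights of the optimal models; None if there are none."""
    opt = optimal_models(stable_models, weak)
    if not opt:
        return None
    weights = {frozenset(x): exp(sum(w for w, cond in soft if cond(x))) for x in opt}
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}

stable = [set(), {"a"}, {"b"}, {"a", "b"}]   # stable models of {a ∨ ¬a, b ∨ ¬b}

# Pi_5: :~ a [-1,1] and 1 : ¬¬b
pi5 = probabilities(stable, [(1, lambda x: "b" in x)],
                    [(lambda x: "a" in x, -1, 1)])
# negative(Pi_5): :~ ¬a [1,1] and -1 : ¬b (simplified from -1 : ¬¬¬b)
neg5 = probabilities(stable, [(-1, lambda x: "b" not in x)],
                     [(lambda x: "a" not in x, 1, 1)])

for model in pi5:
    print(sorted(model), round(pi5[model], 4), round(neg5[model], 4))
```

Both programs select $\{a\}$ and $\{a,b\}$ and assign them the same probabilities, up to floating-point rounding.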

3.2 From ProbLog to $\textit{Lpmln}^{\pm }$ and back

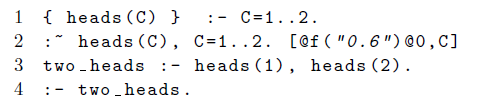

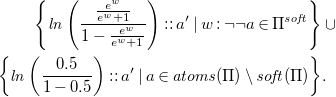

Lee and Wang (2016) show how to translate ProbLog programs to Lpmln. We obtain a translation from ProbLog to $\textit{Lpmln}^{\pm }$ by combining that translation with our $\mathit{alternative}$ translation from Lpmln to $\textit{Lpmln}^{\pm }$. Recall that we may identify a hard formula or a set of them with their corresponding unweighted versions. Let $\Pi$ be a ProbLog program; then the $\textit{Lpmln}^{\pm }$ program ${{\mathit{problog2lpmln}(\Pi )}}$ is (Footnote 1):

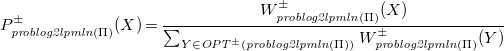

Proposition 4.

Let $\Pi$ be a ProbLog program. For every interpretation $X$, it holds that $P_{\Pi }(X)$ and $P_{{{{\mathit{problog2lpmln}(\Pi )}}}}^{{\pm }}(X)$ are the same.

Example 8. Given our previous ProbLog program $\Pi _7=\{ b \leftarrow \neg a, \, \neg b, \, 0.4 :: a \}$, the $\textit{Lpmln}^{\pm }$ program ${{\mathit{problog2lpmln}(\Pi _7)}}$ consists of the following formulas:

where $-0.405$ is $\mathit{ln}(0.4/(1-0.4))$ rounded to three decimal places. It holds that $P_{{{{\mathit{problog2lpmln}(\Pi _7)}}}}^{{\pm }}(X)$ is $1$ when $X=\{a\}$, and it is $0$ otherwise, which is the same as $P_{\Pi _7}(X)$.
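The weight $-0.405$ is the log-odds of $0.4$. The following hypothetical sketch shows why this choice recovers the original probability after normalization. It assumes, as this value suggests, that a probabilistic fact $p :: a$ leads to a choice between $a$ and $\neg a$ plus a soft formula of weight $\mathit{ln}(p/(1-p))$ that holds exactly when $a$ is true. The model containing $a$ then has weight $p/(1-p)$, the model without it has weight $1$, and normalizing gives back $p$. The model names are plain strings used only for display.

```python
from math import exp, log

def log_odds(p):
    """Weight ln(p / (1 - p)) attached to a probabilistic fact p :: a."""
    return log(p / (1 - p))

def normalize(weights):
    """Turn a dict of model weights into probabilities."""
    total = sum(weights.values())
    return {model: w / total for model, w in weights.items()}

w = log_odds(0.4)
print(round(w, 3))  # -0.405

# A lone fact 0.4 :: a: the model {a} gets weight exp(w), the model {} gets exp(0).
print(normalize({"{a}": exp(w), "{}": exp(0)}))

# In Pi_7, the rule b <- ¬a together with the evidence ¬b rules out {},
# so {a} is the only remaining model and its probability becomes 1.
print(normalize({"{a}": exp(w)}))
```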

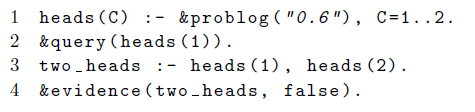

In the remainder of this section, we present a translation in the other direction, from $\textit{Lpmln}^{\pm }$ to ProbLog. The translation applies to non-disjunctive $\textit{Lpmln}^{\pm }$ programs without weak constraints. At first sight, it may seem counterintuitive that such a translation is possible, since ProbLog is based on the well-founded semantics, and the second condition for valid ProbLog programs severely restricts the form of their normal part. However, a closer look reveals that this restriction can be compensated by the other components of ProbLog programs: probabilistic facts and evidence literals. As we will see, they can fulfill the role of choice rules and integrity constraints in ASP, respectively. Under this view, ProbLog programs resemble logic programs that follow the Generate, Define and Test methodology (Lifschitz, 2002), where probabilistic facts generate possible solutions, normal rules define additional predicates, and evidence literals filter the actual solutions. This relation makes the existence of a translation more intuitive. We make it precise in the next paragraphs.

We present the translation for a normal $\textit{Lpmln}^{\pm}$ program $\Pi$ without weak constraints, whose soft formulas have the form $w : \neg \neg a$ for some atom $a$. We assume that $\Pi$ contains no two different soft formulas $w_1 : \neg \neg a$ and $w_2 : \neg \neg a$ for the same atom $a$. Using well-known translations, it is easy to extend the results to more general types of $\textit{Lpmln}^{\pm}$ programs, as long as deciding the satisfiability of the hard part remains in $\mathit{NP}$ and the programs contain no weak constraints. In fact, the implementation of this translation in our system plingo works for non-disjunctive clingo programs (Calimeri et al., 2020).

We modify $\Pi$ in four steps until we obtain a $\textit{Lpmln}^{\pm}$ program that, in step 5, we can easily turn into a ProbLog program by inverting the translation $\mathit{problog2lpmln}$. As our running example, we take the following $\textit{Lpmln}^{\pm}$ program $\Pi_8$, which results from applying the usual translation from choice rules to normal rules to $\Pi_3$:

The (optimal) stable models of $\Pi_8$ are $\{a,\mathit{nb}\}$ and $\{a,b\}$. Their probabilities are $0.269$ and $0.731$, respectively, just like those of $\{a\}$ and $\{a,b\}$ with respect to $\Pi_3$.
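The probabilities above can be reproduced by normalizing exponentiated weights. The following sketch assumes the usual Lpmln-style weighting, in which the unnormalized weight of a stable model is $e$ raised to the sum of the weights of the soft formulas it satisfies. Since $\Pi_3$ is not repeated here, it further assumes, hypothetically, a single soft formula of weight 1 that only $\{a,b\}$ satisfies; this is one assignment consistent with the reported numbers, not necessarily the weights of $\Pi_3$.

```python
import math


def stable_model_probabilities(satisfied_weights):
    """Normalize exp(sum of satisfied soft-formula weights) over the stable models."""
    unnormalized = {m: math.exp(sum(ws)) for m, ws in satisfied_weights.items()}
    z = sum(unnormalized.values())
    return {m: w / z for m, w in unnormalized.items()}


# Hypothetical weights: a single soft formula of weight 1, satisfied only by {a, b}.
models = {
    frozenset({"a", "nb"}): [],
    frozenset({"a", "b"}): [1.0],
}
for model, p in stable_model_probabilities(models).items():
    print(sorted(model), round(p, 3))
# prints:
# ['a', 'nb'] 0.269
# ['a', 'b'] 0.731
```

Under this assumption, the two values are $1/(1+e)$ and $e/(1+e)$.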

Step 1. We assume that the atom $\mathit{bot}$ does not occur in $\Pi$, and we add the literal $\neg \mathit{bot}$ to $\Pi$. In the end, this will be the unique evidence literal in the resulting ProbLog program. Integrity constraints $\bot \leftarrow B$ are not allowed in ProbLog, but once we have the evidence literal $\neg \mathit{bot}$, we can represent them simply by $\mathit{bot} \leftarrow B$. This shows how evidence literals fulfill the role of integrity constraints.
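Step 1 amounts to a purely syntactic rewrite. The sketch below uses a hypothetical encoding of our own, not plingo's internal representation: a rule is a pair of a head atom and a list of body literals, `None` as head stands for $\bot$, and negated body literals carry a `not ` prefix. It rewrites each integrity constraint $\bot \leftarrow B$ into $\mathit{bot} \leftarrow B$ and returns the evidence literal $\neg \mathit{bot}$, encoded as `{"bot": False}`.

```python
BOT = "bot"  # must be fresh, i.e., must not occur in the input program


def atoms_of(rules):
    """Collect all atoms occurring in rule heads or bodies."""
    atoms = set()
    for head, body in rules:
        if head is not None:
            atoms.add(head)
        for lit in body:
            atoms.add(lit[4:] if lit.startswith("not ") else lit)
    return atoms


def step1(rules):
    """Turn every constraint (None, B) into (BOT, B); return the evidence for not BOT."""
    if BOT in atoms_of(rules):
        raise ValueError(f"atom {BOT!r} already occurs in the program")
    new_rules = [(BOT if head is None else head, body) for head, body in rules]
    evidence = {BOT: False}  # the evidence literal "not bot"
    return new_rules, evidence


# Hypothetical input: two normal rules and one integrity constraint.
rules = [
    ("b", ["a", "not nb"]),
    ("nb", ["a", "not b"]),
    (None, ["b", "not a"]),
]
new_rules, evidence = step1(rules)
```

Normal rules pass through unchanged; only constraints receive the head `bot`, so filtering worlds by the evidence discards exactly those that would have violated some constraint.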

Step 2. For every atom $a$ occurring in $\Pi$, we add the following rules introducing a new atom $a^{\prime}$ that works as a copy of $a$:

The choice rule selects a truth value for

![]() $a^{\prime}$

, while the other rules act as integrity constraints that enforce the truth values of

$a^{\prime}$

, while the other rules act as integrity constraints that enforce the truth values of

![]() $a$

and

$a$

and

![]() $a^{\prime}$

to be the same. After adding

$a^{\prime}$

to be the same. After adding

![]() $\neg{\mathit{bot}}$

and these rules to

$\neg{\mathit{bot}}$

and these rules to

![]() $\Pi$

, the resulting

$\Pi$

, the resulting

![]() $\textit{Lpmln}^{\pm }$

program has the same stable models as before, but for every atom

$\textit{Lpmln}^{\pm }$

program has the same stable models as before, but for every atom

![]() $a$

in a stable model, we also have its copy

$a$

in a stable model, we also have its copy

![]() $a^{\prime}$

. Apart from this, the probabilities of the stable models remain the same. In our example, we add to

$a^{\prime}$

. Apart from this, the probabilities of the stable models remain the same. In our example, we add to

![]() $\Pi _8$

the literal

$\Pi _8$

the literal

![]() $\neg{\mathit{bot}}$

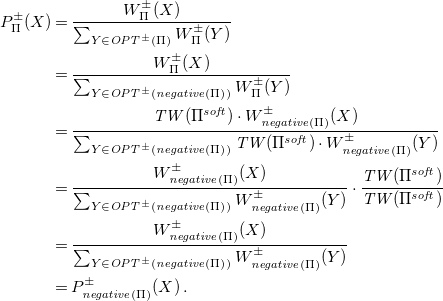

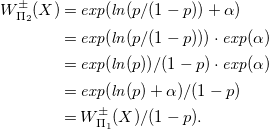

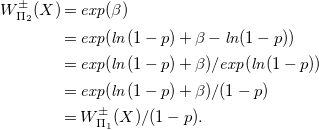

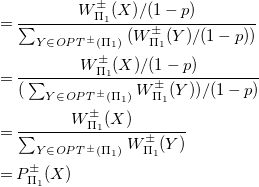

, as well as the formulas (1) for the three atoms

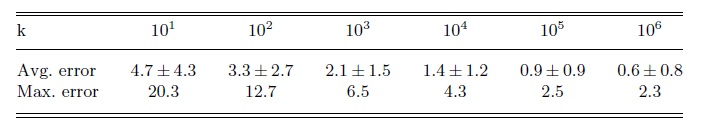

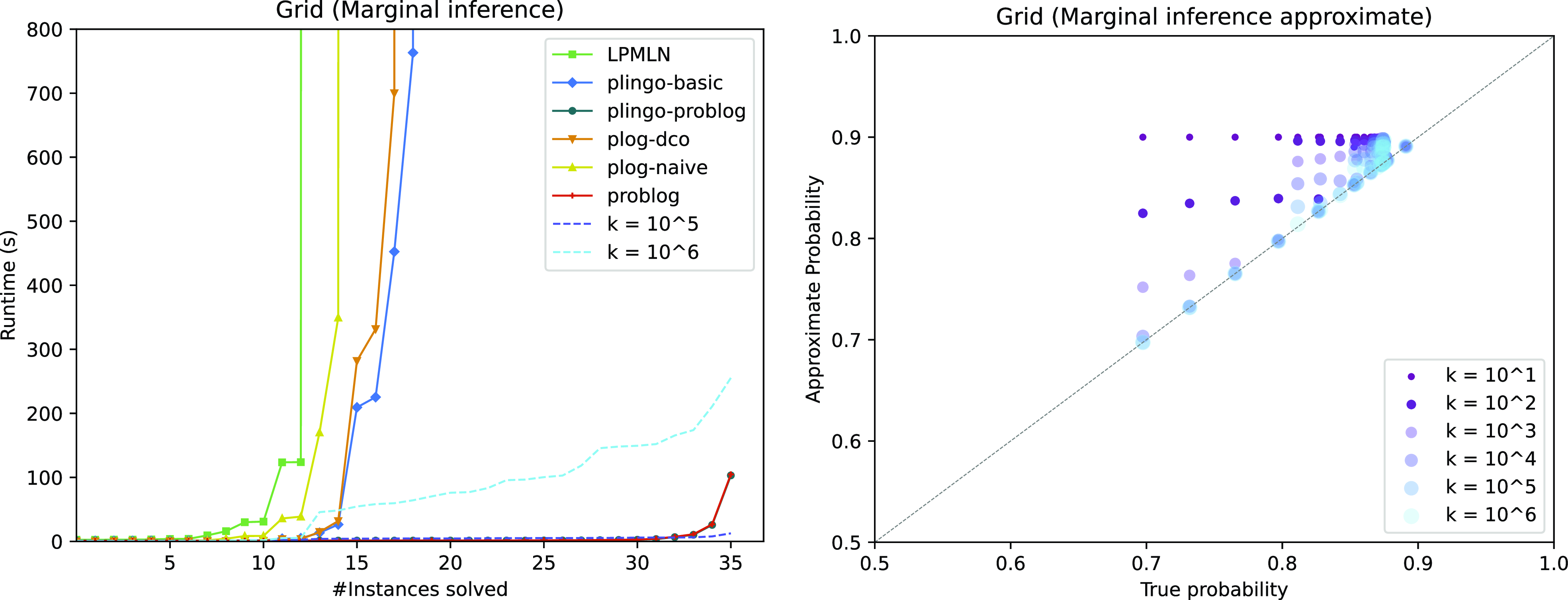

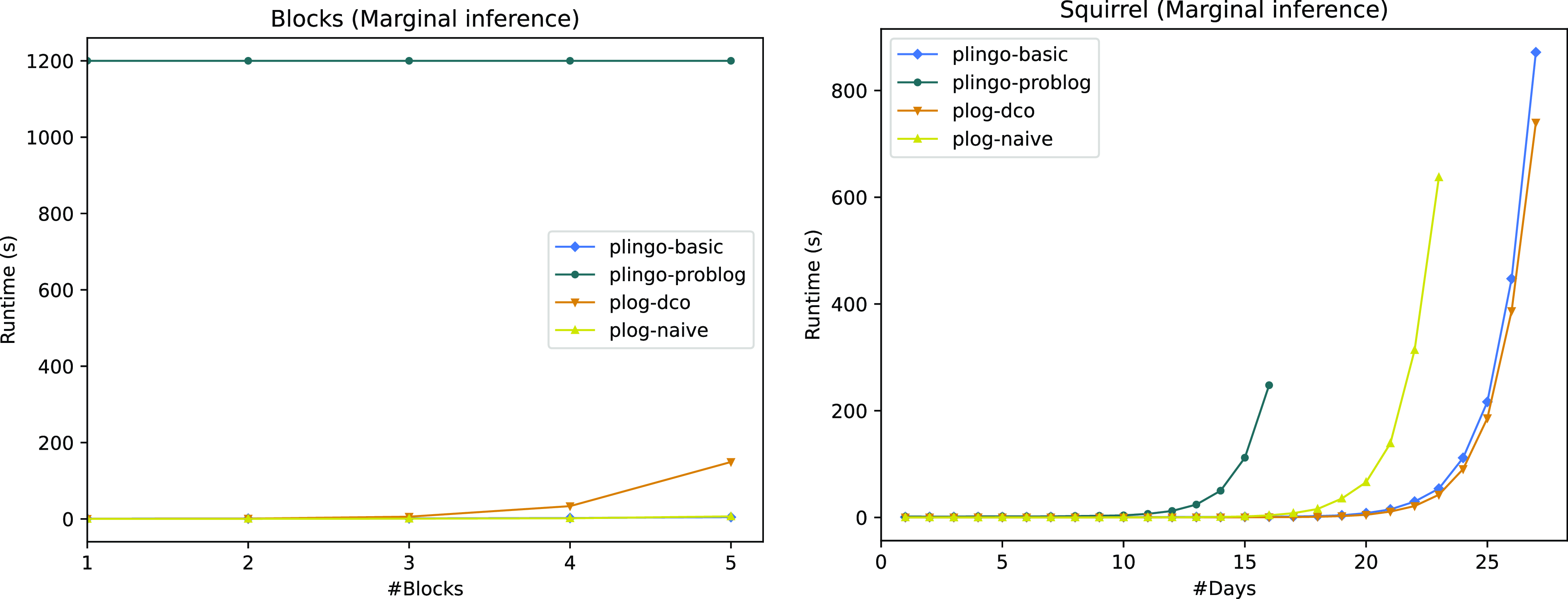

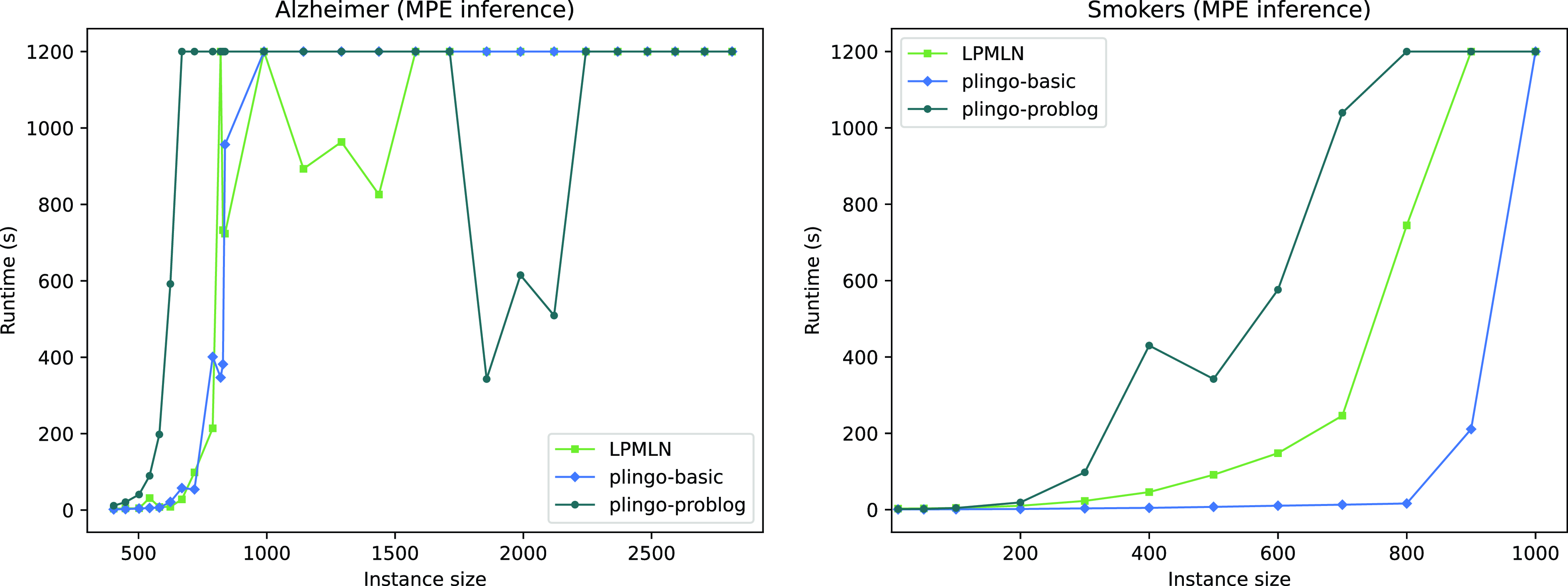

$\neg{\mathit{bot}}$