Policy Significance Statement

This research is essential for policymakers who are attempting to develop indicators to measure the prevalence of internet censorship in a country, either to condition development assistance on good governance or to measure project progress toward the goal of a freer and more open internet. Expert analyses (such as Freedom House or V-Dem) are more effective at identifying censorship in very repressive regimes where verifiable information is limited and may be more useful to civil society and advocacy organizations. Remotely sensed data (such as Access Now or the OpenNet Initiative) are more verifiable and objective and may be more useful to donors.

1. What is Internet Censorship?

Since the 1990s, the internet has spread around the world, reaching 3.8 billion people in three decades and fundamentally changing the way information is produced, disseminated, and consumed (Cohen-Almagor, 2013; International Telecommunication Union, 2020; Shahbaz and Funk, 2020). Policymakers, civil society, and academics have praised the internet as a tool for encouraging freedom of speech and information globally (Reno v. ACLU, 1997; Howard et al., 2011; Corduneanu-Huci and Hamilton, 2018; USAID, 2020). The Arab Spring in the early 2010s is often cited as an example of how the internet can help facilitate information sharing across civil society and hasten transitions to democracy (Howard et al., 2011; Roberts et al., 2011; Stepanova, 2011; Farrell, 2012). However, just as quickly as information has spread across the digital world, governments have found ways to restrict access through various forms of internet censorship (Zittrain et al., 2017; Lakshmana, 2018; Gopaldas, 2019; Zeleke, 2019; Chun-Chih and Thung-Hong, 2020).

We define internet censorship as any method used to intentionally prevent information or services from reaching users over the internet. We focus on government censorship, as censorship by internet service providers (ISPs) is rare and often directed by the government (Zittrain et al., 2017; Taye, 2020). Unlike traditional censorship, which often involves arresting or attacking members of the media to stop content production (McColm et al., 1991; Karatnycky et al., 2003), internet censorship requires new tools from repressive governments, who often cannot stop the global production of information,¹ prevent it from entering their country, or stop their citizens from engaging with it (Clark et al., 2017; Zittrain et al., 2017). In place of these traditional methods, governments often censor the internet through internet filtering and internet shutdowns.²

1.1. Internet filtering

Internet filtering is used to restrict users’ access to specific websites, domains, or IP addresses through technical blocks, including but not limited to Domain Name System (DNS) poisoning, hypertext transfer protocol (HTTP) filtering through middleboxes, and IP filtering (Zittrain et al., 2017; Yadav and Chakravarty, 2018). Governments may deploy internet filtering software themselves, or they may compel ISPs to block or filter certain content within their country (Puyosa and Chaguaceda, 2017; Zittrain et al., 2017).

Governments block content for a variety of reasons. Some governments want to restrict the flow of information by blocking e-mail, social media, or video calling services (Carsten, 2014; Zittrain et al., 2017). Other governments block online content that expresses certain political views, such as content from opposition parties, civil society, human rights advocates, or specific minority groups (Zittrain et al., 2017; Chun-Chih and Thung-Hong, 2020; Shahbaz and Funk, 2020). Some restrict content for social, cultural, or religious reasons, such as content related to sexuality, gambling, drugs, alcohol, or other material perceived to be offensive (Zittrain et al., 2017). Governments may block content continuously or only at specific times, such as around an election (Zittrain et al., 2017; Anthonio, 2020; Taye, 2020). They may also restrict information about a specific event in order to rewrite history, hide repressive practices, spread disinformation, or eliminate the cultural heritage of minority groups (Anonymous, 2021; Berg, 2021; Bontridder and Poullet, 2021; Cook, 2022). Governments may be transparent, noting that access to certain sites is not permitted, or they may disguise the filtering so that the lack of access appears to be a technical problem, such as displaying “file not found” on a restricted website (Dalek et al., 2015; Zittrain et al., 2017; Taye, 2020).

In recent years, improvements in security protocols and circumvention tools have made filtering more challenging. Encryption makes it difficult for censors to see which portions of a website a user is attempting to access (Clark et al., 2017; Rahimi and Gupta, 2020). Hypertext transfer protocol secure (HTTPS) in particular has made it challenging for governments to restrict certain pages without censoring the entire website (Clark et al., 2017; Zittrain et al., 2017; Rahimi and Gupta, 2020). As a result, governments must often choose between blocking an entire website and blocking none of it (e.g., all of Wikipedia rather than just select pages). Circumvention tools such as virtual private networks (VPNs) can also get around this selective filtering but are ineffective against full internet shutdowns (Al-Saqaf, 2015).
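The all-or-nothing effect of HTTPS can be illustrated with a deliberately simplified censor model. The Python sketch below assumes that an on-path censor sees the hostname and path of plain HTTP requests but only the hostname of HTTPS requests (for example via DNS or the TLS Server Name Indication field), and it ignores techniques that also hide the hostname. The function name, the blocklists, and the page `Example_Page` are hypothetical.

```python
from urllib.parse import urlsplit


def is_blocked(url: str, blocked_hosts: set[str], blocked_pages: set[str]) -> bool:
    """Decide whether a simplified on-path censor can block this request.

    Simplifying assumption: over plain HTTP the censor reads both host and path;
    over HTTPS it reads only the hostname, because the path travels inside the
    encrypted channel.
    """
    parts = urlsplit(url)
    host = parts.hostname or ""
    # Host-level blocking works for both HTTP and HTTPS.
    if host in blocked_hosts:
        return True
    # Page-level blocking needs the path, which only unencrypted HTTP exposes.
    if parts.scheme == "http":
        return host + parts.path in blocked_pages
    return False


# Hypothetical blocklists: one specific page, and no whole hosts yet.
pages = {"en.wikipedia.org/wiki/Example_Page"}
print(is_blocked("http://en.wikipedia.org/wiki/Example_Page", set(), pages))   # True
print(is_blocked("https://en.wikipedia.org/wiki/Example_Page", set(), pages))  # False
# The censor's only HTTPS option is to block the whole host, i.e. every page.
print(is_blocked("https://en.wikipedia.org/wiki/Any_Page", {"en.wikipedia.org"}, pages))  # True
```

Under this model, the page-level rule stops working once the site moves to HTTPS, and the only remaining lever is the host-level rule, which takes every page on the site with it.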

1.2. Internet shutdowns

In part due to the increasing difficulty of filtering select content, governments are more often turning to blunt censorship tools, such as dramatically slowing the speed of the internet (also known as throttling) or shutting down the internet entirely (Al-Saqaf, 2015; Taye, 2020). Internet shutdowns were rare in the early 2010s (Rydzak, 2018; Subramanian, 2012; Roberts et al., 2011) but have become increasingly common (CIPESA, 2019; Taye, 2020), often occurring around specific events such as an election or a large protest (Zittrain et al., 2017; Anthonio, 2020; Taye, 2020). Governments often cite concerns about violent protest or instability as a reason for shutting down the internet (Zittrain et al., 2017; Taye, 2020), although studies have demonstrated that shutting down the internet tends to increase the likelihood of violence rather than decrease it (Rydzak, 2018, 2019). Like filtering, shutdowns may be carried out in a way that makes it difficult to distinguish intentional shutdowns from technical issues. Internet shutdowns may be country-wide or targeted at specific regions, and they may last anywhere from a few hours to several months (Taye, 2020). Internet shutdowns are often cited as more harmful than internet filtering since they affect the entire internet economy (West, 2016; Raveendran and Leberknight, 2018; NetBlocks, 2020; Woodhams and Migliano, 2020).

Today, both internet filtering and internet shutdowns are widespread practices, with some sources estimating that some form of internet censorship currently exists in more than half of the countries in the world (Bischoff, 2020; Mechkova et al., 2020). Internet filtering has been widespread for many years, but the number of internet shutdowns has increased dramatically each year since the mid-2010s (Zittrain et al., 2017; Selva, 2019). Estimating the exact number of governments that use internet filtering or internet shutdowns is challenging, since many governments attempt to hide or disguise their internet censorship, and technical failures can be mistaken for censorship (Crandall et al., 2015; Gueorguiev et al., 2017; Pearce et al., 2018; VanderSloot et al., 2018). This paper explores two main methods of measuring internet censorship, expert analysis and remote measurement, and examines the pros and cons of each. We compare the findings from four of the most accessible datasets on internet censorship and discuss the tradeoffs faced by policymakers, civil society, and academics who use these data.

2. Measuring Internet Censorship

Government censorship of the internet is inherently focused on the removal and obfuscation of information. Governments often work to hide from their citizens both the content of the internet and the methods they use to hide that content (Gueorguiev et al., 2017; VanderSloot et al., 2018). This means that measuring internet censorship can be both challenging and dangerous, as governments can target citizens who attempt to uncover censorship (Crandall et al., 2015; Narayanan and Zevenbergen, 2015; Pearce et al., 2018; VanderSloot et al., 2018; Weinberg, 2018). However, accurate measures of internet censorship are important for a range of stakeholders, including users attempting to circumvent it, academics attempting to better understand it, and donors or advocates attempting to address it or incentivize policies that limit it.

2.1. Literature review

Despite the need for accurate measures of internet censorship, we find that almost no work has empirically compared the consistency of methodologies for measuring it. We conduct a systematic review of the literature on internet censorship using Google Scholar. We search the full text of all articles containing the terms “internet censorship,” “internet filtering,” or “internet shutdowns” and choose four datasets that are among the most often cited: Freedom House’s Freedom on the Net; Varieties of Democracy’s Digital Society Project (V-Dem); the OpenNet Initiative (ONI); and Access Now’s #KeepItOn data. We focus on datasets that may be useful to donor and advocacy organizations that prioritize public and accessible data with broad country coverage, as described in Section 2.2. Other datasets identified in the literature include data from Howard et al. (2011), Censored Planet (2020), Open Observatory of Network Interference (2020), and ICLab (Niaki et al., 2020).

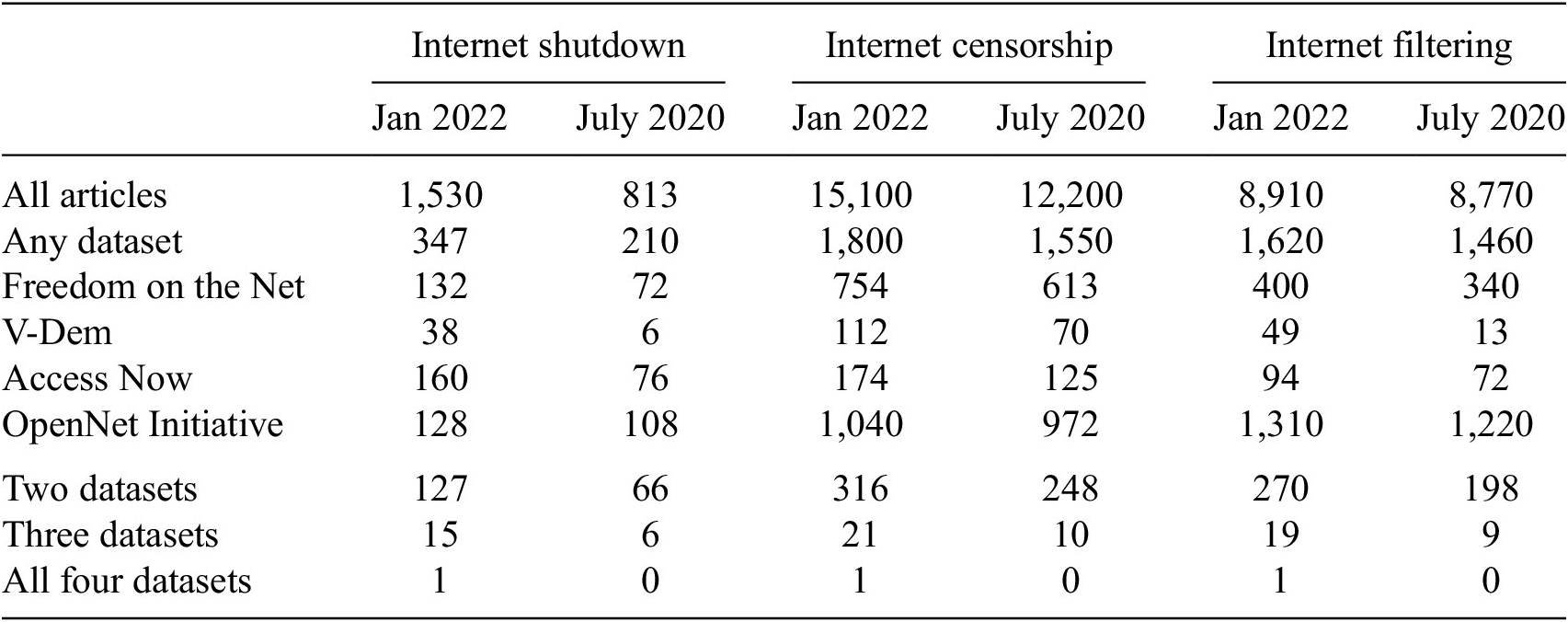

We then repeat the search combining the same terms with the name of each dataset, searching each combination of one of the initial search terms (internet censorship, internet filtering, and internet shutdowns) and each dataset (“Freedom on the Net,” “V-Dem” OR “Varieties of Democracy,” “OpenNet Initiative,” and “Access Now”). We then repeat the process with each possible combination of datasets and each of the initial search terms to identify other works comparing these datasets. We review all articles that include more than one dataset and one of the initial search terms anywhere in the full text to determine whether these works compare measurement methods across these datasets. Table 1 presents the findings of each of these searches, as well as the results of searches from an earlier iteration of this analysis conducted in July 2020 (Fletcher and Hayes-Birchler, 2020).
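The search design above pairs each of the three initial terms with every non-empty combination of the four datasets. The Python sketch below enumerates those queries to make the count explicit. The quoting and `OR` syntax of the generated strings is an assumption for illustration; the paper does not report the exact query strings it submitted to Google Scholar.

```python
from itertools import combinations

SEARCH_TERMS = ["internet censorship", "internet filtering", "internet shutdowns"]

# Dataset name -> quoted query clause (V-Dem is searched under either name).
DATASET_CLAUSES = {
    "Freedom House": '"Freedom on the Net"',
    "V-Dem": '("V-Dem" OR "Varieties of Democracy")',
    "ONI": '"OpenNet Initiative"',
    "Access Now": '"Access Now"',
}


def build_queries() -> list[tuple[str, tuple[str, ...], str]]:
    """Pair every search term with every non-empty combination of datasets."""
    queries = []
    for term in SEARCH_TERMS:
        for size in range(1, len(DATASET_CLAUSES) + 1):
            for combo in combinations(DATASET_CLAUSES, size):
                clauses = [f'"{term}"'] + [DATASET_CLAUSES[name] for name in combo]
                queries.append((term, combo, " ".join(clauses)))
    return queries


queries = build_queries()
print(len(queries))   # 45 = 3 terms x (4 + 6 + 4 + 1) dataset combinations
print(queries[0][2])  # "internet censorship" "Freedom on the Net"
```

The 12 single-dataset queries correspond to the first round of searches; the remaining 33 cover every pair, triple, and the full set of four datasets.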

Table 1. Number of Google Scholar results on internet censorship, filtering, and shutdowns by dataset

Note. Few articles reference one or more datasets, and those that do rarely compare datasets and never compare overall methodologies.

We find that at least one of these datasets is featured in 23% of works including the words “internet shutdowns,” 18% of works including the words “internet filtering,” and 12% of works including the words “internet censorship,” indicating that these datasets are widely used in the literature. In the case of the Freedom House and V-Dem datasets, articles often cite qualitative results from their reports or use variables other than those that measure internet censorship (this is the case with the only paper that cites all four datasets: Joshi (2021)). Despite the wide use of these data, we find that only two works compare the results of two of these datasets (Frantz et al. (2020) compare ONI and V-Dem, and Feldstein (2021) compares V-Dem and Access Now), and no articles compare three or all four.

Frantz et al. (2020) compare V-Dem’s data on internet shutdowns and internet filtering with three other datasets: two produced for specific articles (Howard et al., 2011; Rydzak, 2018) and ONI. The Howard et al. (2011) dataset is largely an expert analysis created using a database of historical news sources and interviews with experts. The Rydzak dataset is a hybrid dataset gathered from expert reviews of historical events and remotely sensed data from Google’s Traffic Disruptions tool, Oracle’s Internet Intelligence, and Access Now. Unlike this paper, Frantz et al. (2020) do not convert scores into binary variables but instead examine the correlations between the original scores. They find that V-Dem is somewhat correlated with the Howard et al. (2011) and Rydzak datasets but minimally correlated with ONI data.

Feldstein (2021) briefly compares three datasets on internet shutdowns from V-Dem, Access Now, and NetBlocks (Woodhams and Migliano, 2020); however, his primary concern is the different kinds of information these datasets provide rather than whether they agree when they ask the same questions. He notes that the length of a shutdown (as measured by Access Now) does not necessarily predict its cost (as measured by NetBlocks) or its political impact (as measured by V-Dem). He concludes that Access Now’s data may be less effective than the other two datasets at describing the depth of a shutdown’s impact, but he does not comment on their comparative accuracy in measuring whether a shutdown took place.

While he does not directly compare the datasets or concepts focused on here, Kawerau (2021) does compare remote sensing and expert analysis with respect to cybersecurity. Specifically, he compares V-Dem’s data on “Does the government have sufficiently technologically skilled staff and resources to mitigate harm from cyber-security threats?” with remotely sensed data from Shodan.io on the susceptibility of IP addresses to cybersecurity threats, scored using the Common Vulnerability Scoring System (CVSS). Kawerau finds that while there is a statistically significant relationship between these two measurements, V-Dem finds more vulnerability than the remote measurement-based indicator.

As an aside, the literature appears to be tracking the shift from internet filtering to internet shutdowns as a method of internet censorship. The number of articles featuring the phrase “internet shutdowns” has nearly doubled in the last year and a half, increasing by over 700 articles, while the number of articles on internet filtering has barely moved, increasing by fewer than 200 articles. Additionally, the datasets that continue to be updated (Freedom House, V-Dem, and Access Now) have seen stronger growth in their usage, although ONI is still used in more articles overall.

2.2. Consumers and producers of internet censorship data

We find that consumers seek data on which governments censor the internet for many reasons. Academics have an interest in understanding trends in internet censorship and its relationship to other phenomena (e.g., Howard et al., 2011; Freyburg and Garbe, 2018; Sagir and Varlioglu, 2020; Sutterlin, 2020). Some consumers are technical experts and internet users working to circumvent censorship practices (e.g., Roberts et al., 2011; Leberknight et al., 2012; Al-Saqaf, 2015). Other consumers are advocacy or donor organizations that use the data to pressure governments to stop internet censorship (e.g., Millennium Challenge Corporation, 2019; Parks and Thompson, 2020; Sayadi and Taye, 2020; SK, 2020).

An indicator measuring internet censorship might be useful for assessing the United Nations’ (UN) Sustainable Development Goals (SDGs), particularly target 16.10: “Ensure public access to information and protect fundamental freedoms, in accordance with national legislation and international agreements” (UN General Assembly, 2015). The current indicators for this target make no reference to internet access, focusing instead on illegal attacks on journalists and freedom of public information laws (UN General Assembly, 2021). Incorporating an indicator focused on internet censorship could better align this target with its stated focus on fundamental freedoms, update it for a world where more and more people get their information from online sources, and address a concern that has dogged the SDGs since their inception: that they fail to promote accountable, transparent, and democratic governance (Smith, 2015; Smith and Gladstein, 2018; International Telecommunication Union, 2020).

While all consumers of these data value an accurate reflection of the world, they may place more or less value on other characteristics of a dataset. An academic researcher may value a dataset that includes many years of historical data for the purpose of running regressions. Several of the academic papers we reviewed focus on creating historical datasets that may reach back decades (Howard et al., 2011; Rydzak, 2018; Frantz et al., 2020). A user of circumvention tools in an authoritarian country might value data that are constantly updated. Some tools are geared specifically toward these users, such as OONI or NetBlocks, which are updated on a daily or even hourly basis (Open Observatory of Network Interference, 2020; NetBlocks, 2022b). Some donors are interested in a dataset with broad country coverage, publicly available and accessible data, and measurements explicitly linked to governance (Millennium Challenge Corporation, 2019; USAID, 2019; Tilley and Jenkins, 2020; Fletcher, 2021; Carneades, 2022). Donors and advocates can use these data to target development aid to countries that are pursuing good governance reforms, identify countries that may need to reform their internet regulations, or pressure autocratic regimes to reduce oppression (Wagner, 2013). We focus on datasets and criteria of interest to global donor and advocacy organizations.

We find that there are two broad methods of measuring internet censorship referenced in the literature: expert analysis and remote measurement. We define expert analysis as a process in which one or more experts answer specific questions, which are used to create quantitative scores about internet censorship in a country. Remote measurement uses software and user reports to sense and catalog specific censorship events, often with human oversight. The datasets we use from Freedom House and V-Dem are expert analyses (Freedom House, 2019; Pemstein et al., 2020). The datasets we use from ONI and Access Now are remotely measured (Faris and Villeneuve, 2008; Access Now, 2017). While some work has been done to compare individual datasets or new tools with existing tools for validity (Frantz et al., 2020; Raman et al., 2020; Feldstein, 2021), we find no work comparing methodologies as we do here and in Fletcher and Hayes-Birchler (2020).

2.2.1. Expert analysis

The methodology for expert analyses involves periodically surveying one or more experts and aggregating that information into a quantitative measure. These analyses are published regularly, usually on an annual basis. Sometimes they include disaggregated data on certain responses or narratives explaining the rationale for score changes. These data are used by researchers to provide general context on country policy environments (e.g., Maréchal, 2017) and as variables in regressions (e.g., Sagir and Varlioglu, 2020), and by donors to determine funding and incentivize reform (Millennium Challenge Corporation, 2019). While the reports produced by these organizations can provide helpful context on censorship, expert analyses have drawbacks: they neither document specific events nor explain exactly how the internet was censored in particular instances (Roberts et al., 2011). Because these datasets are produced only once a year, they are less useful to users attempting to circumvent government censorship in real time.

Examples of expert analyses with questions on internet censorship include Freedom House’s Freedom on the Net report (which includes the Key Internet Controls report), Reporters Without Borders’ Press Freedom Index, and V-Dem’s Digital Society Project. In this paper, we focus on V-Dem and Freedom House’s Key Internet Controls, as both provide disaggregated data that address the same questions on internet censorship. These two datasets use different methods of expert analysis. Freedom House trains a single expert or organization in its methodology and in how to write a narrative report (Freedom House, 2019). The expert answers specific questions on over 100 issues and then meets with other experts to normalize ratings around the world (Freedom House, 2019). V-Dem surveys multiple experts and then aggregates their responses into a single score (Pemstein et al., 2020). V-Dem uses bridge coding, overlap coding, and anchoring vignettes, in which experts code the same situations so that their responses can be normalized to a common scale (Coppedge et al., 2021). Other expert analyses focus on creating historical datasets for use by researchers (e.g., Howard et al., 2011). These are not particularly useful to donors or civil society because they are not regularly updated (Millennium Challenge Corporation, 2019).
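To make the idea of anchoring vignettes concrete, the Python sketch below shows one crude way a shared vignette could be used to put several experts on a common scale before combining them. It is a hypothetical stand-in, not V-Dem’s method: V-Dem aggregates coder responses with a Bayesian item response theory measurement model (Pemstein et al., 2020), whereas this sketch assumes each expert’s bias is a constant offset revealed by their vignette rating and then takes a median. All names and ratings are invented for illustration.

```python
from statistics import median


def aggregate_expert_ratings(
    ratings: dict[str, float],
    vignette_ratings: dict[str, float],
    vignette_reference: float,
) -> float:
    """Combine several experts' ratings of one country-year into one score.

    Each expert also rated a shared hypothetical vignette whose level is taken
    to be `vignette_reference`. An expert who scores the vignette higher than
    that level is assumed to score everything that much higher, so their
    ratings are shifted down by the difference (and vice versa) before taking
    the median.
    """
    adjusted = [
        rating - (vignette_ratings[expert] - vignette_reference)
        for expert, rating in ratings.items()
    ]
    return median(adjusted)


# Hypothetical 0-4 ratings: experts "b" and "c" score the vignette low.
ratings = {"a": 3, "b": 2, "c": 2}
vignette = {"a": 2, "b": 1, "c": 1}
print(median(ratings.values()))                        # 2 (unadjusted)
print(aggregate_expert_ratings(ratings, vignette, 2))  # 3 (adjusted)
```

In this toy case the unadjusted median is pulled toward the two lenient coders; once their vignette ratings reveal the offset, all three experts agree.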

2.2.2. Remote measurement

Remote measurement of internet censorship involves sensing and cataloging specific instances of censorship (such as certain pages that were blocked or moments when the internet was shut down in a particular place). We divide remote measurement into three categories: no oversight, manual oversight, and automated oversight. No-oversight methods generally involve a program testing for a particular type of censorship in each country, with the raw data made available for use by other researchers. Examples include OONI, which uses software installed on volunteers’ computers around the world to detect instances of censorship, and ICLab (Niaki et al., 2020; Open Observatory of Network Interference, 2020). However, without some degree of oversight, the raw data produced by these methods are prone to false positives, false negatives, and other technical challenges (Weaver et al., 2009; Pearce et al., 2018; Weinberg, 2018; Yadav and Chakravarty, 2018).³ Yet these data can be useful to users attempting to circumvent censorship because they are published daily or hourly, and users can ground-truth any potential false positives or negatives themselves.
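One reason raw, unreviewed measurements produce false positives can be seen in a naive test for DNS tampering. The Python sketch below flags a domain when an in-country resolver returns no address in common with a control resolver outside the country. The function is hypothetical and applies a single rule; real measurement tools apply additional checks. The addresses come from the IPv4 documentation ranges reserved by RFC 5737.

```python
def dns_answers_inconsistent(local_ips: set[str], control_ips: set[str]) -> bool:
    """Naive single-rule check for possible DNS tampering.

    Flags a domain when the in-country resolver returns no address in common
    with a resolver outside the country. An empty local answer is flagged only
    if the control resolver did return addresses.
    """
    if not local_ips:
        return bool(control_ips)
    return local_ips.isdisjoint(control_ips)


# A content delivery network can legitimately hand different regions different
# addresses, so this case is flagged even with no censorship: a false positive.
print(dns_answers_inconsistent({"192.0.2.10"}, {"198.51.100.7"}))              # True
print(dns_answers_inconsistent({"192.0.2.10", "192.0.2.11"}, {"192.0.2.11"}))  # False
```

Because the rule cannot tell a regional content delivery network apart from a poisoned answer, someone, or some further test, has to review flagged results before they are treated as censorship.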

To mitigate these challenges, many datasets turn to some type of oversight. Manual oversight methods are those that involve some level of human testing or aggregation of instances of censorship. This may involve a machine identifying a possible instance of censorship and a human checking whether it can be confirmed, or a human reviewing a series of automated tests and aggregating them into a single score. The two remotely measured datasets reviewed here both use manual oversight. ONI has volunteers download software that tests a list of potentially censored pages from their computers. These automated results are then reviewed by humans and aggregated into a score for each of four policy categories (Faris and Villeneuve, 2008). Access Now uses both volunteer reports and machine sensing to detect potential shutdowns and then relies on local volunteers, internet companies, and the media to confirm shutdowns manually (Access Now, 2017). Unlike the other datasets considered here, Access Now publishes its data at the level of individual instances of censorship (e.g., a specific internet shutdown), with additional information about the context of each instance (Taye, 2020).
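The detect-then-confirm workflow described for Access Now can be sketched as a simple filter. The Python function below is hypothetical and keys events on an invented (country, date) pair; it merges candidates from machine alerts and volunteer reports and publishes only those a human reviewer confirmed. It is meant to show the shape of the tradeoff, not Access Now’s actual pipeline.

```python
def apply_manual_oversight(
    machine_alerts: list[tuple[str, str]],
    volunteer_reports: list[tuple[str, str]],
    confirmed: set[tuple[str, str]],
) -> tuple[list[tuple[str, str]], list[tuple[str, str]]]:
    """Merge candidate events and keep only those a human reviewer confirmed.

    Events are hypothetical (country, date) pairs; duplicates reported by both
    channels collapse into one candidate.
    """
    candidates = set(machine_alerts) | set(volunteer_reports)
    published = sorted(candidates & confirmed)
    unconfirmed = sorted(candidates - confirmed)
    return published, unconfirmed


published, unconfirmed = apply_manual_oversight(
    machine_alerts=[("AA", "2019-01-05"), ("BB", "2019-03-10")],
    volunteer_reports=[("AA", "2019-01-05"), ("CC", "2019-06-01")],
    confirmed={("AA", "2019-01-05"), ("CC", "2019-06-01")},
)
print(published)    # [('AA', '2019-01-05'), ('CC', '2019-06-01')]
print(unconfirmed)  # [('BB', '2019-03-10')]
```

Whether the dropped "BB" candidate was a false alarm or a real shutdown that no one could verify, it does not appear in the published data: the same confirmation step that screens out false positives can introduce false negatives.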

A comparatively new approach combines automated sensing with automated oversight, using various methods to alleviate the challenges of automated remote sensing without requiring human oversight or in-country volunteers (Sfakianakis et al., 2011; Pearce et al., 2018; VanderSloot et al., 2018; Weinberg, 2018; Hoang et al., 2019; Raman et al., 2020). These methods are lauded as more efficient and ethical, as they do not endanger in-country volunteers who may be subject to government reprisals for helping identify government censorship (Crandall et al., 2015; Pearce et al., 2018; VanderSloot et al., 2018). However, despite their promise for academics and users of circumvention tools, these data are currently too inaccessible and disaggregated to be useful to donors or advocates, and we therefore do not include any of them in our analysis.

3. Comparing Censorship Data

Given the importance of these data for researchers, donors, policymakers, and civil society, it is vital that they be as accurate as possible. Without omniscience, we cannot know whether any of these data are perfectly accurate (in that they capture all and only instances of internet censorship). However, it is possible to assess the likelihood that datasets include false positives (they capture censorship that did not actually occur) or false negatives (they do not capture censorship when it occurs)⁴ by examining their methodologies and by comparing how often and where they agree or disagree with one another. While we cannot determine with certainty whether false positives or false negatives occur in any given dataset, the findings from our empirical analyses, combined with each dataset’s methodology and our broader literature review, suggest that remotely measured data with manual oversight are less likely to contain false positives but may be more vulnerable to false negatives. Conversely, some expert analyses appear more likely to include false positives but may be less vulnerable to false negatives. Recognizing this may help consumers of these data identify tradeoffs when selecting which datasets to use. In Section 4 we discuss some potential explanations for these differences, while in Section 5 we discuss the implications for policymakers and other users of these data.
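The inference from disagreement to error can be stated precisely. For a single country-year the true answer is either yes or no, so when two datasets disagree, exactly one of two errors has occurred, but the comparison alone cannot say which. The hypothetical Python helper below enumerates the possibilities for datasets labeled A and B.

```python
def explain_disagreement(a_censored: bool, b_censored: bool) -> list[str]:
    """List the errors consistent with two datasets' answers for one country-year.

    When the datasets disagree, exactly one of the two listed errors occurred.
    """
    if a_censored == b_censored:
        # Agreement is not proof: both could share the same undetected error.
        return []
    if a_censored:
        return ["false positive in A", "false negative in B"]
    return ["false negative in A", "false positive in B"]


print(explain_disagreement(True, False))  # ['false positive in A', 'false negative in B']
```

Deciding between the two candidates for a given pattern of disagreements is where each dataset’s methodology and the wider literature come in.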

3.1. Methods

To compare datasets, we focus on three concepts covered by multiple datasets: (1) Did a country’s government filter political content on the internet in a given year? (2) Did a country’s government block social media in a given year? (3) Did a country’s government shut down the internet in a given year? The exact questions asked by each source, as well as the scales used to score them, are described in Table 2. As noted in Section 3.3, we conduct sensitivity testing on the process of converting these scores into binary values. We use Stata to compare answers from each dataset for the same countries and years. We use a binary comparison instead of running regressions on the original data because this allows us to isolate the more objective question of whether a government censored the internet from questions about the depth or pervasiveness of censorship, which may be more subjective or dependent on the scale of the data. Additionally, simplified data can be more useful to donor organizations that are simply trying to determine whether a government censored the internet in a given year.
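A pairwise binary comparison of this kind reduces to a two-by-two cross-tabulation over the country-years both datasets cover. The authors performed their comparisons in Stata; the Python sketch below is only an illustration of the logic, with hypothetical function names, country codes, and answers.

```python
from collections import Counter

CountryYear = tuple[str, int]


def cross_tabulate(a: dict[CountryYear, bool], b: dict[CountryYear, bool]) -> Counter:
    """Count (a_answer, b_answer) pairs over country-years present in both datasets."""
    shared = a.keys() & b.keys()
    return Counter((a[key], b[key]) for key in shared)


def agreement_rate(table: Counter) -> float:
    """Share of compared country-years on which both datasets give the same answer."""
    total = sum(table.values())
    agree = table[(True, True)] + table[(False, False)]
    return agree / total if total else float("nan")


# Hypothetical binary answers to "did the government block social media?"
a = {("AA", 2016): True, ("AA", 2017): False, ("BB", 2016): True, ("CC", 2016): False}
b = {("AA", 2016): True, ("AA", 2017): True, ("BB", 2016): False,
     ("CC", 2016): False, ("DD", 2016): True}
table = cross_tabulate(a, b)
print(table[(True, False)], table[(False, True)])  # 1 1  (the two kinds of disagreement)
print(agreement_rate(table))                       # 0.5
```

Keeping the two off-diagonal cells separate, rather than reporting only an agreement rate, matters here: which dataset says "yes" when the other says "no" is what points toward false positives in one source or false negatives in the other.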

Table 2. Data sources for analysis

Note. The datasets ask nearly identical questions on internet filtering and internet shutdowns, but measure these questions using very different methodologies.

Abbreviation: ONI, OpenNet Initiative.

Because the datasets differ in the years and concepts they cover, it is not possible to compare all variables across all datasets. V-Dem is the only dataset that overlaps temporally with ONI, but ONI does not contain any measure of internet shutdowns (Coppedge et al., 2020). Therefore, V-Dem and ONI are compared on the two internet filtering questions (political content and social media) for 2007–2012. Freedom House, V-Dem, and Access Now overlap temporally from 2016 to 2020, but Access Now does not contain information on the filtering of political content (note that this is an update to the data published in Fletcher and Hayes-Birchler, 2020). Therefore, Freedom House, V-Dem, and Access Now are compared on social media blockages and internet shutdowns for 2016–2020. For all comparisons, only countries and years covered by all of the datasets being compared are included: 325 observations in the Freedom House, V-Dem, and Access Now comparison and 74 observations in the V-Dem and ONI comparison.
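Restricting each comparison to country-years present in every dataset amounts to intersecting their (country, year) keys. The sketch below is illustrative only (the authors’ replication code is in Stata); it assumes each dataset has already been loaded as a dictionary keyed by (country, year), and the toy records and function names are hypothetical.

```python
from typing import Dict, Set, Tuple

Key = Tuple[str, int]  # (country, year)

def common_keys(*datasets: Dict[Key, int]) -> Set[Key]:
    """Return the (country, year) pairs present in every dataset passed in."""
    keys = set(datasets[0])
    for data in datasets[1:]:
        keys &= set(data)
    return keys

def restrict(data: Dict[Key, int], keys: Set[Key]) -> Dict[Key, int]:
    """Keep only observations whose (country, year) is in `keys`."""
    return {k: v for k, v in data.items() if k in keys}

# Hypothetical binary shutdown scores (1 = shutdown reported); not real data.
freedom_house = {("A", 2017): 1, ("B", 2017): 0}
v_dem = {("A", 2017): 1, ("B", 2017): 1, ("C", 2017): 0}
access_now = {("A", 2017): 1, ("B", 2017): 0, ("C", 2017): 0}

shared = common_keys(freedom_house, v_dem, access_now)
print(sorted(shared))  # [('A', 2017), ('B', 2017)]
```

A pairwise overlap (here V-Dem and Access Now, which share three keys) is at least as large as the three-way overlap, which is why a two-dataset comparison can draw on more observations.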

Since these datasets use different scales, we first convert all scores into binary yes/no responses for the three questions, except for Freedom House’s data, which are already binary. In the Access Now dataset, any country listed as having a “full-shutdown” in a particular year is counted as having shut down the internet, and any country listed as having a “service-based” shutdown is counted as having blocked social media (Access Now, 2017). A listing of “full and service-based shutdowns” indicates that the government both shut down the internet in some location or period of time and blocked social media in another; such cases are counted in both categories. To convert V-Dem data to binary values, we treat any response other than “Never or almost never” as censorship occurring in the country. Similarly, for ONI, any score other than “No evidence of filtering” is counted as censorship occurring in the country.
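The conversion rules above can be sketched as three small functions. This is a minimal sketch, not the authors’ Stata code: the category and label strings follow the text, but their exact spelling in the raw files is an assumption, and the function names are hypothetical.

```python
from typing import Dict

# Access Now categories as quoted in the text; raw-data spellings are an assumption.
FULL = {"full-shutdown", "full and service-based shutdowns"}
SERVICE = {"service-based", "full and service-based shutdowns"}

def access_now_flags(category: str) -> Dict[str, int]:
    """Map an Access Now listing to binary shutdown / social-media flags."""
    c = category.strip().lower()
    return {"shutdown": int(c in FULL), "social_media": int(c in SERVICE)}

def v_dem_censored(response: str) -> int:
    """Any V-Dem response other than 'Never or almost never' counts as censorship."""
    return int(response.strip().lower() != "never or almost never")

def oni_censored(score: str) -> int:
    """Any ONI score other than 'No evidence of filtering' counts as censorship."""
    return int(score.strip().lower() != "no evidence of filtering")

print(access_now_flags("full and service-based shutdowns"))  # {'shutdown': 1, 'social_media': 1}
```

A country-year that Access Now does not list at all would presumably be coded 0 on both concepts.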

We then compare these binary scores across each relevant dataset to determine whether responses for each variable are the same across datasets. In other words, if V-Dem states that a given country filtered political content, blocked social media, and shut down the internet in a given year, do the other datasets agree with this assessment? Where datasets disagree, we then categorize how often each dataset uniquely identified instances of censorship (indicating potential false positives) and how often each dataset uniquely identified instances of non-censorship (indicating potential false negatives).
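With three binary sources, any disagreement is necessarily a two-to-one split, so each country-year falls into exactly one category: all agree, or one dataset is the odd one out, uniquely identifying either censorship or non-censorship. The sketch below illustrates that categorization under the assumption that scores are already binary; the dataset labels and toy rows are hypothetical.

```python
from collections import Counter
from typing import Dict, List

def classify(scores: Dict[str, int]) -> str:
    """Categorize one country-year given binary scores from exactly three datasets."""
    if len(scores) != 3:
        raise ValueError("expects exactly three datasets")
    values = set(scores.values())
    if len(values) == 1:
        return "all agree: censorship" if 1 in values else "all agree: no censorship"
    # Binary scores from three sources can only split 2-1: find the odd one out.
    for name, value in scores.items():
        others = [v for n, v in scores.items() if n != name]
        if all(v != value for v in others):
            kind = "censorship" if value == 1 else "non-censorship"
            return f"{name} uniquely identifies {kind}"
    raise AssertionError("unreachable for binary scores")

def tabulate(rows: List[Dict[str, int]]) -> Dict[str, float]:
    """Percentage of observations in each category; the shares sum to 100."""
    counts = Counter(classify(r) for r in rows)
    return {cat: 100 * n / len(rows) for cat, n in counts.items()}

rows = [
    {"FH": 1, "VD": 1, "AN": 1},
    {"FH": 0, "VD": 1, "AN": 0},
    {"FH": 1, "VD": 1, "AN": 0},
    {"FH": 0, "VD": 0, "AN": 0},
]
print(tabulate(rows))
```

Because the categories are mutually exclusive and jointly exhaustive, each column of a comparison table built this way sums to 100%.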

The literature suggests that remote measurement without oversight is likely to produce both false positives and false negatives (Weaver et al., 2009; Pearce et al., 2018; Weinberg, 2018; Yadav and Chakravarty, 2018). However, we anticipate that the remotely measured datasets we examine will produce fewer false positives than remote measurement without oversight, owing to the manual oversight and emphasis on verifiability in their methods. To guard against the false positives common to the automated elements of remote measurement, these datasets set themselves a burden of proof to verify specific instances of filtering or shutdowns. The same burden of proof does not apply to the expert analysis methodology. We anticipate that this burden of proof may result in more false negatives in the remotely measured datasets: their maintainers may believe a country is censoring its internet but be unable to verify it, and therefore not count it.

3.2. Findings

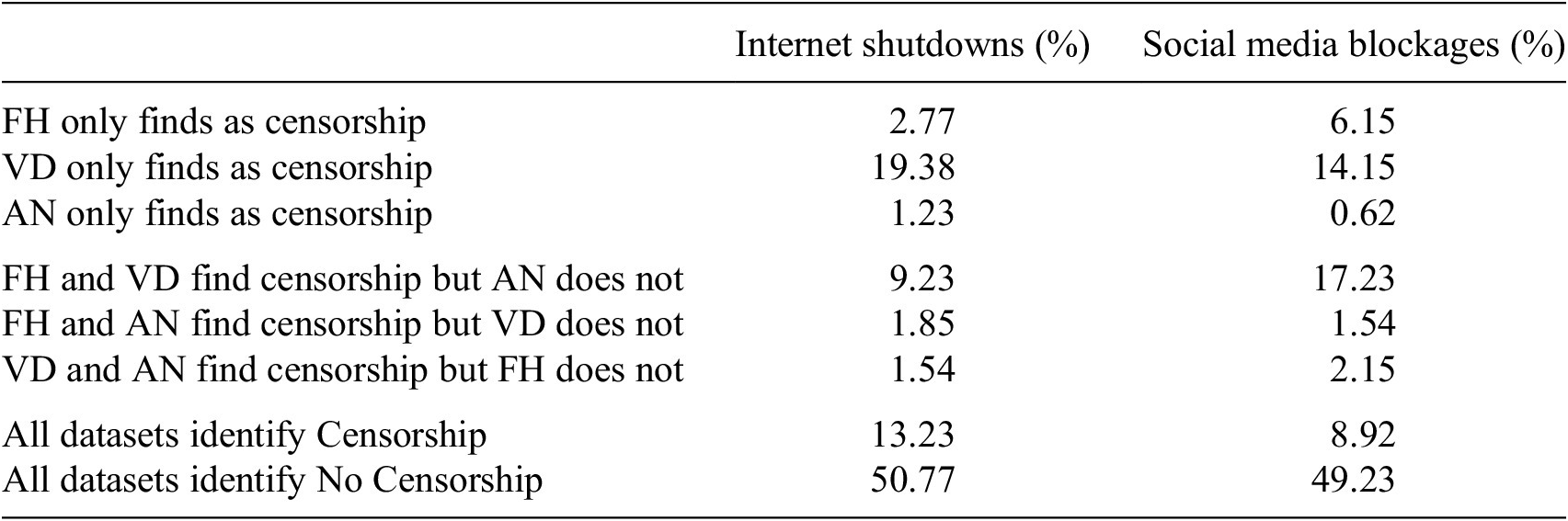

Our findings support the hypothesis that remotely measured datasets are less likely to contain false positives than expert analyses. Table 3 depicts the findings of the analysis of Freedom House (FH), V-Dem (VD), and Access Now (AN) on the concepts of social media blockage and internet shutdown. Within each column, the rows are mutually exclusive and jointly exhaustive.

Table 3. Freedom House, V-Dem, & Access Now comparison

Note. The expert analyses (particularly V-Dem) are more likely to uniquely identify censorship, while the remotely measured data are more likely to uniquely identify non-censorship. Comparisons are based on 325 country/year-level observations.

While all three datasets agree on whether a country shut down the internet in a given year in the majority of cases (64%), they disagree in more than a third of cases. The disagreement is more pronounced for social media blockages, where the three sources agree only slightly more than half the time (58.15%). For both concepts, Access Now has the fewest instances (1.23% and 0.62%) of uniquely identifying censorship. That Access Now is the sole source of a censorship finding in only about 1% of observations for either type of censorship, combined with the verifiable evidence Access Now publishes for each occurrence, indicates comparatively few false positives in the Access Now dataset (i.e., if there is a false positive in the Access Now dataset, it is unlikely it could be avoided by using an expert analysis instead).

Conversely, V-Dem is the sole dataset to identify censorship in 19.38% of instances for internet shutdowns and 14.15% of instances for social media blockages. This, combined with the lack of verifiable evidence for its scores, may indicate a higher rate of false positives. Alternatively, it may suggest that V-Dem finds instances of censorship missed by the other datasets, which would then contain some false negatives. Kawerau (2021), comparing V-Dem’s expert analysis with remote measurement of data on cybersecurity, similarly finds that V-Dem was more likely than a remote measurement system to identify vulnerabilities.

To investigate false negatives, we examine the cases where a dataset was the only one not to list a country as censoring the internet. As hypothesized, Access Now is the most likely to omit a country from its list of censors when both other datasets find that censorship occurred (9.23% of cases for internet shutdowns and 17.23% for social media blockages). V-Dem and Freedom House each rarely stand alone in identifying a country as not censoring the internet (1.9% and 1.5% of cases for V-Dem, and 1.5% and 2.15% for Freedom House), indicating potentially fewer false negatives.
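Because every share in this comparison is a percentage of the same 325 country-year observations, shares and counts can be converted into one another as a consistency check. The sketch below is our own illustration, not part of the authors’ analysis; the counts 30 and 56 are the expert-only shutdowns and social media blockages reported in Section 4.1, and the helper names are hypothetical.

```python
N = 325  # country-year observations in the Freedom House / V-Dem / Access Now comparison

def share(count: int, total: int = N) -> float:
    """Percentage of observations, rounded to two decimals as in the text."""
    return round(100 * count / total, 2)

def implied_count(pct: float, total: int = N) -> int:
    """Nearest whole number of observations behind a reported percentage."""
    return round(total * pct / 100)

print(share(30))  # 9.23  (shutdowns missed only by Access Now)
print(share(56))  # 17.23 (social media blockages missed only by Access Now)
print(implied_count(1.23), implied_count(0.62))  # 4 2
```

Both counts reproduce the reported 9.23% and 17.23%, and Access Now’s unique censorship findings correspond to only a handful of country-years.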

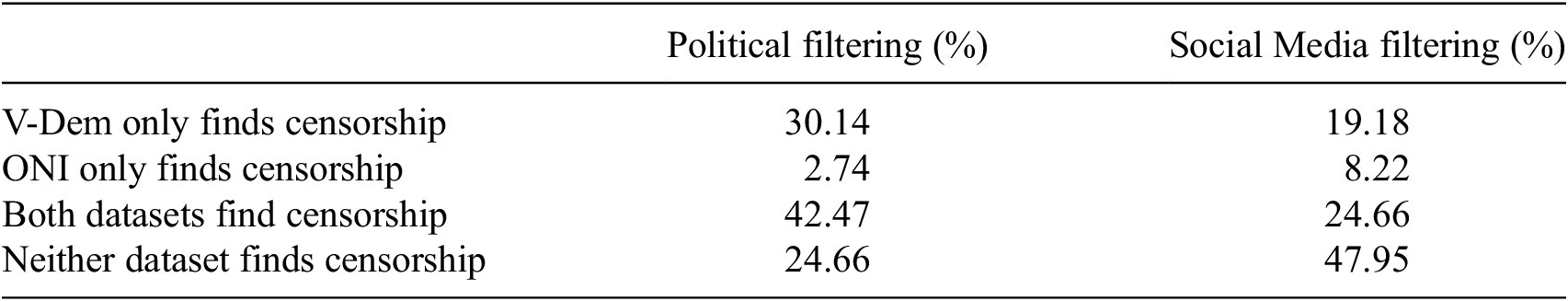

Table 4 presents the findings of the analysis of V-Dem and ONI on the two concepts they both cover: social media blockages and political filtering. The two data sources agree in over two-thirds of cases, but once again V-Dem is more likely to uniquely identify censorship (in 19.8% of cases for social media blockages and 30.14% of cases for political filtering). Although there are also cases where ONI uniquely identified censorship, they were far less frequent (8.22% of cases for social media blockages and 2.74% of cases for political filtering).
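With only two binary sources, a disagreement means one source flags censorship and the other does not, so the agreement rate is whatever remains after subtracting the two unique-identification shares. A quick check on the shares above, assuming both are percentages of the same set of observations (the function name is hypothetical):

```python
def implied_agreement(unique_a: float, unique_b: float) -> float:
    """Agreement share (%) for two binary sources, given each one's unique-censorship share."""
    return round(100 - unique_a - unique_b, 2)

social_media = implied_agreement(19.8, 8.22)   # V-Dem only, ONI only
political = implied_agreement(30.14, 2.74)
print(social_media, political)  # 71.98 67.12
```

Both values exceed two-thirds, consistent with the statement that the two sources agree in over two-thirds of cases.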

Table 4. V-Dem & ONI comparison

Note. V-Dem uniquely identifies censorship much more often than ONI for both political and social media filtering. Comparisons are based on 74 country/year-level observations.

Abbreviation: ONI, OpenNet Initiative.

3.3. Sensitivity testing

To assess the robustness of these conclusions, we run three sensitivity tests to determine how much our conclusions depend on the underlying assumptions we have made. First, we test an alternative coding of V-Dem that treats both “rarely” and “never or almost never” as instances of non-censorship. Second, because the Freedom House data are offset from the calendar year, we match the exact months of the Freedom House dataset with the Access Now dataset for one year. Finally, we compare only V-Dem and Access Now (895 observations) to determine whether the small sample size may be skewing the results.

V-Dem aggregates responses from many experts on a five-point scale. In our initial analysis, we treated an observation as non-censorship only when the ordinal response was in the highest category, “Never or almost never”. However, respondents may be prone to central tendency bias, making them less likely to select the extreme values even when they have no evidence of censorship. We therefore re-ran the same analyses treating both “rarely” and “never or almost never” as non-censorship in V-Dem’s data. Under this model, V-Dem uniquely identifies far fewer instances of censorship, but in three of the four comparisons it still uniquely identifies more instances of censorship than the remote sensing dataset. Only when comparing ONI and V-Dem on social media filtering under this alternative model does V-Dem uniquely identify fewer instances of censorship: V-Dem uniquely identified 5.5%, while ONI uniquely identified 8.2%. Notably, using this alternative model for V-Dem increased the overall agreement of the datasets in all four comparisons, by anywhere from 6% to 14%. This suggests that V-Dem might benefit from instituting controls for central tendency bias, which would bring it more in line with the other datasets and help avoid mislabeling instances of non-censorship as censorship. However, no such controls have been implemented, and even under the alternative model V-Dem still uniquely identifies more censorship in three of the four comparisons, so our general conclusions still hold.
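The two V-Dem codings differ only in which ordinal labels count as non-censorship. The sketch below assumes each country-year has already been reduced to a single ordinal label; the five label strings are our assumption about the scale’s wording, and the function and constant names are hypothetical.

```python
from typing import Iterable

# Assumed five-point labels, ordered from most to least frequent censorship.
SCALE = ["Extremely often", "Often", "Sometimes", "Rarely", "Never or almost never"]

def binarize(response: str, non_censorship: Iterable[str]) -> int:
    """Return 1 (censorship) unless the response is one of the non-censorship labels."""
    if response not in SCALE:
        raise ValueError(f"unknown response: {response!r}")
    return int(response not in set(non_censorship))

BASELINE = {"Never or almost never"}              # initial analysis
ALTERNATIVE = {"Rarely", "Never or almost never"}  # central-tendency sensitivity test

responses = ["Rarely", "Never or almost never", "Sometimes", "Rarely"]
baseline = [binarize(r, BASELINE) for r in responses]
alternative = [binarize(r, ALTERNATIVE) for r in responses]
print(baseline)     # [1, 0, 1, 1]
print(alternative)  # [0, 0, 1, 0]
```

Enlarging the non-censorship set can only turn 1s into 0s, never the reverse, so the alternative coding can only lower (never raise) the share of cases in which V-Dem alone identifies censorship.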

Freedom House’s data are offset from the calendar year. Since censorship events can last several months (Taye, 2020), this may not affect the comparison substantially, but to check the impact of this offset, we use the Access Now data to match exactly the months covered by the 2017 Freedom House report. Under this matching, Access Now identifies shutdowns that Freedom House does not in 6% of cases (compared with 3% in the original model), while Freedom House identifies shutdowns that Access Now does not in 12.3% of cases (compared with 12% in the original model). For social media blockages, Access Now identifies blockages that Freedom House does not in 4.6% of cases (compared with 2.8% in the original model), and Freedom House identifies blockages that Access Now does not in 27.8% of cases (compared with 23.4% in the original model). Given that the variation is minimal and Access Now continues to identify censorship much less frequently, the differences between these datasets are not wholly due to differences in the time periods covered, and the offset would be unlikely to change our conclusion.
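Month matching amounts to asking whether any incident overlaps the report’s coverage window rather than the calendar year. A minimal sketch, assuming incident-level records with start and end dates; the window (June 2016 to May 2017) is our assumption about the 2017 Freedom on the Net coverage period, and the record type and country codes are hypothetical.

```python
from datetime import date
from typing import List, NamedTuple

class Incident(NamedTuple):
    """Hypothetical incident-level record, loosely modeled on Access Now's data."""
    country: str
    kind: str  # "shutdown" or "social_media"
    start: date
    end: date

def overlaps(incident: Incident, window_start: date, window_end: date) -> bool:
    """True if the incident intersects the closed interval [window_start, window_end]."""
    return incident.start <= window_end and incident.end >= window_start

def flagged(incidents: List[Incident], country: str, kind: str,
            window_start: date, window_end: date) -> int:
    """1 if any incident of `kind` in `country` overlaps the coverage window, else 0."""
    return int(any(
        i.country == country and i.kind == kind and overlaps(i, window_start, window_end)
        for i in incidents
    ))

# Assumed coverage window for the 2017 Freedom on the Net edition.
WINDOW = (date(2016, 6, 1), date(2017, 5, 31))

incidents = [
    Incident("A", "shutdown", date(2017, 3, 10), date(2017, 3, 12)),
    Incident("B", "shutdown", date(2017, 8, 1), date(2017, 8, 3)),
]
print(flagged(incidents, "A", "shutdown", *WINDOW))  # 1
print(flagged(incidents, "B", "shutdown", *WINDOW))  # 0: falls after the window
```

Under calendar-year matching, country B’s incident would count toward 2017; under month matching it does not, which is the kind of shift this sensitivity test measures.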

Finally, the sample size is somewhat small because the comparisons require overlapping time periods and countries. We test the impact of this small sample size by also comparing only V-Dem and Access Now, using all 895 observations shared by both datasets. We find that V-Dem continues to uniquely identify instances of censorship more often than Access Now. Using this larger dataset, V-Dem identifies shutdowns that Access Now does not in 24.24% of all cases, while Access Now uniquely identifies shutdowns in 1.67% of all cases. For social media, V-Dem identifies blockages that Access Now does not in 24.58% of cases, while Access Now identifies blockages that V-Dem does not in just 1.01% of cases. All of these sensitivity tests support the initial conclusion that expert analyses are generally more prone to over-identifying censorship that may not exist, while remote sensing is more prone to under-identifying censorship that may actually exist.

4. Analysis

While we cannot determine conclusively which dataset is most accurate, our findings suggest that remote measurement with human oversight results in fewer false positives than expert analysis, although it may be more vulnerable to false negatives. Conversely, our analysis also suggests that expert analyses, V-Dem in particular, include more false positives than remote measurement, though they may provide a more complete picture of internet censorship.

4.1. Individual case analysis

At least some of the instances of censorship identified by the expert analyses but not by Access Now appear to be accurate, as they are confirmed by other sources, such as a social media blockage in Kazakhstan in 2019 and social media blockages in the U.A.E. in 2020 (Human Rights Watch, 2020; Turak, 2020). Others, such as Cuba’s 2020 internet shutdown or Libya’s 2020 internet shutdown, cannot be verified by any other source and so may well be false positives from the expert analyses.

One explanation for the apparent false negatives in remotely measured data could be that these methods are constrained by the number of in-country volunteers or journalists who can manually confirm each instance of censorship. In countries with significant limitations on civil liberties or press freedom, journalists and civil society organizations may lack the capacity to confirm censorship. This is borne out in the data: the majority of censorship identified by Freedom House and V-Dem but not Access Now comes from North Africa, the Middle East, and Central Asia, regions with some of the strictest limitations on the media in the world (Reporters Without Borders, 2020). Of the 30 shutdowns identified by Freedom House and V-Dem but not Access Now, 20 are in one of these regions. Azerbaijan, Kazakhstan, and Uzbekistan alone account for nine of these instances, with Kazakhstan identified as shutting down the internet by both expert analyses, but not Access Now, in 2016, 2017, 2019, and 2020. A similar pattern appears for social media blockages: of the 56 instances identified by the two expert analyses but not Access Now, 30 are in the Middle East or Central Asia.
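The regional pattern comes from filtering for country-years that both expert analyses flag but Access Now does not, then tallying them by region. A minimal sketch of that tally; the placeholder countries, region lookup, and rows are hypothetical, and a real analysis would need a full country-to-region table.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical region lookup using placeholder country names.
REGION = {"Country A": "Central Asia", "Country B": "Middle East", "Country C": "Sub-Saharan Africa"}

def missed_by_access_now(rows: List[dict]) -> List[dict]:
    """Country-years where both expert analyses report censorship but Access Now does not."""
    return [r for r in rows if r["FH"] == 1 and r["VD"] == 1 and r["AN"] == 0]

def by_region(rows: List[dict]) -> Counter:
    """Count expert-only country-years per region ('Unknown' if a country is unmapped)."""
    return Counter(REGION.get(r["country"], "Unknown") for r in missed_by_access_now(rows))

rows = [
    {"country": "Country A", "year": 2016, "FH": 1, "VD": 1, "AN": 0},
    {"country": "Country A", "year": 2017, "FH": 1, "VD": 1, "AN": 0},
    {"country": "Country B", "year": 2018, "FH": 1, "VD": 1, "AN": 1},
    {"country": "Country C", "year": 2016, "FH": 1, "VD": 0, "AN": 0},
]
print(by_region(rows))  # Counter({'Central Asia': 2})
```

Applied to the full comparison data, this is the kind of tally behind the regional breakdown of the 30 shutdowns and 56 social media blockages described above.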

There are several possible explanations for the many instances of censorship identified by V-Dem but no other dataset, beyond the concerns about central tendency bias already discussed. News reports from the countries where V-Dem uniquely identifies internet censorship suggest that some experts may be conflating social media blockages with full internet shutdowns. For example, in Venezuela in 2019, according to news reports, the government blocked access to social media platforms but did not shut down the internet (Gold, 2019), yet V-Dem identifies this as a shutdown. Saudi Arabia (Dahan, 2019) and Cuba (Amnesty International, 2017) appear to be in the same situation. Experts may also conflate civil liberties in general with internet censorship, as appears to have happened in the Philippines, where V-Dem identifies a shutdown despite no other evidence for one (Engagemedia and Sinar Project, 2018). Such conflation would explain the high degree of internal correlation in V-Dem’s indicators noted by Frantz et al. (2020): if experts are conflating different types of censorship, the data may be picking up on the general level of freedom in a country instead of the specific question of an internet or social media shutdown. It would also explain why V-Dem’s data show shutdowns as almost as prevalent in 2000 as they are today, even though the literature suggests they were very rare before 2011 (Roberts et al., 2011; Subramanian, 2012; CIPESA, 2019) and are very prevalent today (Rydzak, 2018; Taye, 2020).

However, there do appear to be instances where V-Dem accurately picks up on censorship not captured in the other two datasets, such as in Lebanon, where independent news sources confirm that social media apps were blocked in 2019 but only V-Dem identified censorship (Hall, 2019). This might also be the case in Rwanda, where some sources report government control of social media and communication apps in the lead-up to the 2017 elections (AFP, 2017; McDevitt, 2017) and only V-Dem identified social media blockages. This suggests that the instances in which V-Dem uniquely identifies censorship are likely a mix of false positives and censorship that the other datasets miss.

A similar pattern emerges with Freedom House’s data. Though Freedom House uniquely identifies fewer cases of censorship than V-Dem, both identify many more than Access Now. There are several potential explanations for this. Freedom House may be more willing to call something government censorship even if it is only suspected but not corroborated. For example, shutdowns in Zambia in 2016 and 2020 were captured only by Freedom House, and the evidence is inconclusive as to whether they were intentional or simply technical outages (Gambanga, 2016; Mwango, 2020). In other situations, Freedom House may be conflating the shutdowns of other media with internet shutdowns, such as when it was the only source to document an internet shutdown in the Philippines in 2020 (there was no evidence of an internet shutdown, but the government did shut down the major broadcasting network and phone communications; Ballaran, 2018; Ramos, 2020). Some of the differences may arise from which platforms the datasets classify as “social media”: Freedom House includes smaller sites like Telegram and Medium, leading it to uniquely identify censorship in Bahrain and Malaysia (Marczak, 2016; Freedom House, 2017), whereas other datasets might classify these as messaging apps (Telegram) or news sites (Medium). While there are fewer such instances than for V-Dem, Freedom House’s uniquely identified censorship also appears to be a mix of false positives or uncorroborated censorship and accurately identified cases that other datasets miss or would classify differently. One advantage of Freedom House and Access Now over V-Dem is that they include citations for these instances, even if Freedom House has a lower bar for confirming censorship than Access Now.

4.2. Limitations

There are several limitations to the methodology used in this paper. It is possible, even likely, that the expert identified by Freedom House is also one of the experts who fill out V-Dem’s survey, given the limited number of country experts for certain countries. However, given that V-Dem surveys over 20,000 experts each year, including around 100–200 experts per country, it is unlikely that a single expert who also fills out Freedom House’s survey would shift the final V-Dem results substantially (Coppedge et al., 2021). Nevertheless, experts might communicate with each other and form similar opinions about a given country, increasing the correlation between these surveys.

The internet has changed rapidly since it was created and will likely continue to change (Cohen-Almagor, 2013). This means that some of our results may depend on the particular years being measured. As noted above, many of V-Dem’s likely false positives are from earlier years in which V-Dem found internet shutdowns, although experts agree that shutdowns were not particularly prevalent then (Roberts et al., 2011; Subramanian, 2012; CIPESA, 2019). This relates to the concern that, with only two datasets for each method, these conclusions may not be generalizable. As the internet continues to change, and technology for censoring and detecting censorship changes, these findings may not continue to hold. However, these conclusions are valid for donor organizations making decisions now, and for the current universe of available datasets on internet censorship.

Further, as noted above, this analysis captures only certain components of internet censorship; it does not capture components such as a government requesting that a social media company self-censor, take down particular types of content or posts, or make them unavailable in a particular country (Zittrain et al., 2017). Self-censorship is challenging to measure, and a review of the policies of social media companies may be more effective at measuring this type of censorship than either remote sensing or expert analysis, but more research is needed (Zhong et al., 2017; Zittrain et al., 2017; Shen and Truex, 2021).

5. Conclusion

There is a range of considerations in determining which dataset is the best measure of internet censorship for a given purpose. While perfect accuracy is desirable, given the high levels of disagreement it is unlikely that any one dataset is completely accurate. Consumers of these data should therefore consider whether they would rather accept a higher likelihood of false positives or of false negatives. Would they rather falsely accuse a country of censoring the internet when it does not, or allow a country that does censor the internet to claim, unopposed, that its internet is free?

Some consumers may prefer that their data be fully verifiable: that there be a high burden of proof to show a country has censored its internet, even if that means missing some instances. Our analysis indicates that such consumers would be best served by a remotely measured dataset with manual oversight, using methods similar to those of Access Now or ONI, where each instance of censorship has been verified and where an average of 96.8% of cases of censorship are supported by two or more datasets. Other consumers may prefer that as many instances of censorship as possible be captured, even if that means including some countries that may not have engaged in censorship. Given that many governments try to hide their censorship, and that many are quite sophisticated in doing so, these consumers may worry that the burden of proof of remote measurement with human oversight leaves them vulnerable to missing too many cases of censorship, particularly in highly repressive regimes. These consumers would be advised to use an expert analysis dataset instead, such as Freedom on the Net or V-Dem.
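The corroboration figure above is a share of a dataset’s own censorship findings that at least one other dataset also reports. One way to compute such a share is sketched below, assuming each row holds only binary scores keyed by dataset name; whether the authors averaged exactly this quantity across comparisons is an assumption, and the toy rows are hypothetical.

```python
from typing import Dict, List

def corroboration_rate(rows: List[Dict[str, int]], source: str) -> float:
    """Percent of `source`'s censorship findings that at least one other dataset also reports."""
    flagged = [r for r in rows if r[source] == 1]
    if not flagged:
        return float("nan")  # undefined when the source reports no censorship
    supported = sum(
        1 for r in flagged if any(v == 1 for name, v in r.items() if name != source)
    )
    return 100 * supported / len(flagged)

rows = [
    {"FH": 1, "VD": 1, "AN": 1},
    {"FH": 0, "VD": 1, "AN": 1},
    {"FH": 0, "VD": 0, "AN": 1},
    {"FH": 1, "VD": 1, "AN": 0},
]
print(corroboration_rate(rows, "AN"))  # two of Access Now's three findings are corroborated
print(corroboration_rate(rows, "VD"))  # all three of V-Dem's findings are corroborated
```

A high corroboration rate speaks to false positives only; it says nothing about censorship that a dataset misses, which is the false-negative side of the tradeoff described above.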

Based on our review of the literature, we would expect that multilateral and bilateral donor organizations may prefer data that are verifiable and may therefore be better served by remotely sensed data than by expert analysis when measuring internet censorship. Honig (2019) notes an incentive for international development organizations to prefer clear, measurable data to ensure accountability, given the challenges of managing projects from a distance. Chattopadhyay (2016) notes the importance of verifiable data for goals like the SDGs, and the political challenges of basing such goals on subjective assessments. These organizations may also simply be influenced by political and diplomatic pressure not to give or withhold development aid on the basis of subjective, unverifiable assessments. In fact, in 2020 the Millennium Challenge Corporation, a U.S. development agency, shifted from using Freedom House’s Freedom on the Net data to the #KeepItOn data from Access Now to inform its assessment of which countries are well governed enough to receive assistance (Millennium Challenge Corporation, 2019, 2020). Other donors have expressed a desire for objective, verifiable, and actionable datasets to inform similar measures of country performance (European Commission, 2016; USAID, 2019).

Advocacy and civil society organizations, on the other hand, may prefer data that ensure all methods of censorship are captured. Organizations focused on advocating for fundamental freedoms may be less concerned if the indices capture a general climate of civil liberties (as V-Dem appears to), since they may be working on multiple fronts and would be concerned if a very restrictive country were shown to be free because instances of restriction could not be validated. Additionally, organizations that work in the Middle East, North Africa, and Central Asia may prefer expert analyses, which appear to capture restrictions in these regions more consistently.

There are, of course, other logistical considerations that users weigh when choosing a particular dataset. Users who want universal country coverage may avoid Freedom House’s data, which cover only 65 countries. Users looking to create a time series may prefer V-Dem’s data, which start in 2000. Some users may prefer data at the incident level rather than the country level, which makes Access Now’s dataset more appealing. Others may prefer that the data include a clear index and ranking of countries to better “name and shame” and leverage policy change. Some users might prefer a dataset that goes deep on specific questions, such as Access Now on internet shutdowns, while others may be more interested in binary answers to many different questions, such as those provided by Freedom House’s Key Internet Controls.

Progress has been made in creating remotely measured datasets with automated oversight, which may be more accurate than either of the methods reviewed here (Pearce et al., 2018; VanderSloot et al., 2018; Weinberg, 2018; Hoang et al., 2019; Raman et al., 2020). However, the current versions of these datasets fail to meet many of the logistical considerations above: they are often too technical or disaggregated to be useful to donors and advocacy organizations. There is therefore an opportunity for future work to aggregate these potentially more accurate datasets into annually ranked indices of censorship that are more accessible to donor and advocacy organizations.
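As a rough illustration of the aggregation step proposed above, incident-level records can be rolled up into country-year counts and ranked within each year. This sketch assumes measurements have already been reduced to confirmed (country, year) incident records; counting incidents is only one possible scoring rule and ignores duration, scope, and severity, and the names and data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def annual_index(incidents: List[Tuple[str, int]]) -> Dict[int, List[Tuple[str, int]]]:
    """Count incidents per country-year and rank countries within each year (most first)."""
    counts: Dict[int, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for country, year in incidents:
        counts[year][country] += 1
    # Sort by descending count, breaking ties alphabetically for a stable ranking.
    return {
        year: sorted(by_country.items(), key=lambda kv: (-kv[1], kv[0]))
        for year, by_country in counts.items()
    }

incidents = [("A", 2020), ("B", 2020), ("A", 2020), ("C", 2021)]
print(annual_index(incidents))  # {2020: [('A', 2), ('B', 1)], 2021: [('C', 1)]}
```

A binary country-year flag, as used in this paper’s comparisons, is the special case of asking whether a country’s count is above zero.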

Acknowledgments

Thanks to Daniel Barnes, Jennifer Sturdy, Cindy Sobieski, Maïté Hostetter, and Alexandra Berry for reviewing. An earlier conference version of this paper can be found here (https://doi.org/10.5281/zenodo.7384697). This current paper includes updates on the data and expands upon the analysis of the original conference paper.

Competing Interests

The authors are or have been employed by the Millennium Challenge Corporation (MCC). The views expressed herein are those of the authors and should not be construed as an express or implied endorsement of those views by the MCC nor the U.S. Government.

Author Contributions

Conceptualization: T.F., A.H-B.; Data curation: T.F.; Methodology: T.F., A.H-B.; Revision for publication: T.F.; Writing original draft: T.F., A.H-B. All authors approved the final submitted draft.

Data Availability Statement

Replication data and code can be found at Zenodo: https://zenodo.org/record/7384697#.Y-GljK3MJPY.

Funding Statement

The initial research underpinning this work was funded by the Millennium Challenge Corporation. The views expressed herein are those of the authors and should not be construed as an express or implied endorsement of those views by the MCC nor the U.S. Government.
