1 Introduction

1.1 Gradual typing

Gradual typing (Siek & Taha, Reference Siek and Taha2006) is a language feature designed to combine complementary benefits of static typing and dynamic typing languages. A gradual typing program allows the integration of dynamically typed code and statically typed code. While dynamically typed fragment requires additional runtime safety check as dynamic typing language, the statically typed fragment is guaranteed blame-free by the gradual type system. Thus, gradual typing enables developers to do rapid prototyping and seamlessly evolve into statically typed programs. Over the past decade, there have been many advances in gradual typing, both in academia and industry.

The academic field is mainly dedicated to combining gradual typing with other language features, including both expressiveness (object (Siek & Taha, Reference Siek and Taha2007; Chung et al., Reference Chung, Li, Nardelli and Vitek2018), effect (Bañados Schwerter et al., Reference Bañados Schwerter, Garcia and Tanter2014, Reference Bañados Schwerter, Garcia and Tanter2016;Wadler, Reference Wadler2021), polymorphism (Ahmed et al., Reference Ahmed, Findler, Siek and Wadler2011, Reference Ahmed, Jamner, Siek and Wadler2017; Igarashi et al., Reference Igarashi, Sekiyama and Igarashi2017), set theoretic types (Toro & Tanter, Reference Toro and Tanter2017; Castagna et al., Reference Castagna, Lanvin, Petrucciani and Siek2019)), and implementation optimization (Pycket (Bauman et al., Reference Bauman, Bolz, Hirschfeld, Kirilichev, Pape, Siek and Tobin-Hochstadt2015), Grift (Kuhlenschmidt et al., Reference Kuhlenschmidt, Almahallawi and Siek2019)).

In the industrial field, there are some practical gradually typed languages and optional type checkers. Practical gradually typed languages include including Typed Racket (Tobin-Hochstadt & Felleisen, Reference Tobin-Hochstadt and Felleisen2008), TypeScript (Bierman et al., Reference Bierman, Abadi and Torgersen2014), mypy (Lehtosalo et al., Reference Lehtosalo, van Rossum, Levkivskyi and Sullivan2014), Typed Clojure (Bonnaire-Sergeant et al., Reference Bonnaire-Sergeant, Davies and Tobin-Hochstadt2016), FLOW (Chaudhuri et al., Reference Chaudhuri, Vekris, Goldman, Roch and Levi2017), and so on. Most of them add unsound type systems to existing dynamically typed languages Footnote 1 .

1.2 A gradual type system hides erroneous data flows

A gradual type system can be viewed as a normal static type system with two extensions: a dynamic type

![]() $\star$

(also known as

$\star$

(also known as

![]() $?$

or Dyn) that represents dynamically typed code and a binary consistency relation that is used in place of equality to accommodate dynamic types in type rules. When only static types are involved, the consistency relation works the same as the type equality relation, while dynamic types are consistent with all types, which enables dynamically typed code to be integrated with statically typed code. However, the consistency relation is intransitive. For example, we have both

$?$

or Dyn) that represents dynamically typed code and a binary consistency relation that is used in place of equality to accommodate dynamic types in type rules. When only static types are involved, the consistency relation works the same as the type equality relation, while dynamic types are consistent with all types, which enables dynamically typed code to be integrated with statically typed code. However, the consistency relation is intransitive. For example, we have both

![]() $\mathrm{\texttt{Int}} \sim \star $

and

$\mathrm{\texttt{Int}} \sim \star $

and

![]() $\star \sim \mathrm{\texttt{Bool}}$

, but not

$\star \sim \mathrm{\texttt{Bool}}$

, but not

![]() $\mathrm{\texttt{Int}} \sim \mathrm{\texttt{Bool}}$

, since

$\mathrm{\texttt{Int}} \sim \mathrm{\texttt{Bool}}$

, since

![]() $\mathrm{\texttt{Int}} \neq \mathrm{\texttt{Bool}}$

in a static type system.

$\mathrm{\texttt{Int}} \neq \mathrm{\texttt{Bool}}$

in a static type system.

The consistency relation weakens the static type system, as a well-placed

![]() $\star$

can easily make a buggy program pass type checking. For example, consider the following STLC (Simply Typed Lambda Calculus) expression:

$\star$

can easily make a buggy program pass type checking. For example, consider the following STLC (Simply Typed Lambda Calculus) expression:

This program will be rejected by the static type system of STLC, and the result of running this program will be a dynamic type error, since a value of type Bool cannot be added to an integer value. However, in GTLC (Gradually Typed Lambda Calculus), this program will pass type checking if we change the type annotation from Int to

![]() $\star$

. As we state, we have both

$\star$

. As we state, we have both

![]() $\star \sim \mathrm{\texttt{Bool}}$

and

$\star \sim \mathrm{\texttt{Bool}}$

and

![]() $\star \sim \mathrm{\texttt{Int}}$

.

$\star \sim \mathrm{\texttt{Int}}$

.

Dynamic type is ubiquitous in many real-world gradually typed languages, where every omitted type annotation is treated as a dynamic type. To recover type soundness, a gradually typed language will check such dynamic inconsistency by runtime enforcement. The formal semantics of gradually typed language consists of two parts: (1) an independent statically typed intermediate language like blame calculus (Wadler & Findler, Reference Wadler and Findler2009), which extends the source gradually typed language with explicit type cast, and (2) a translation process, which inserts casts to the source program where consistency relation is used in static type checking.

A cast

![]() $\langle A \Leftarrow^b B \rangle v$

indicates that the guarded value v of type B is required to have type A, and this cast is identified with the blame label b. At runtime, casts in the translated program will catch dynamic inconsistency as cast error, and the responsible cast will be assigned blame. Such a mechanism is called blame tracking, derived from related research on contracts (Findler & Felleisen, Reference Findler and Felleisen2002; Tobin-Hochstadt et al., Reference Tobin-Hochstadt, Felleisen, Findler, Flatt, Greenman, Kent, St-Amour, Strickland and Takikawa2017). Compared with statically typed languages, the type safety property of gradually typed languages is characterized by blame behavior, which is one of the original results of Tobin-Hochstadt and Felleisen (Reference Tobin-Hochstadt and Felleisen2006) and subsequently improved in the follow-up research.

$\langle A \Leftarrow^b B \rangle v$

indicates that the guarded value v of type B is required to have type A, and this cast is identified with the blame label b. At runtime, casts in the translated program will catch dynamic inconsistency as cast error, and the responsible cast will be assigned blame. Such a mechanism is called blame tracking, derived from related research on contracts (Findler & Felleisen, Reference Findler and Felleisen2002; Tobin-Hochstadt et al., Reference Tobin-Hochstadt, Felleisen, Findler, Flatt, Greenman, Kent, St-Amour, Strickland and Takikawa2017). Compared with statically typed languages, the type safety property of gradually typed languages is characterized by blame behavior, which is one of the original results of Tobin-Hochstadt and Felleisen (Reference Tobin-Hochstadt and Felleisen2006) and subsequently improved in the follow-up research.

Informally, type safety of gradually typed languages ensures that every dynamic type error should be caught by a blame message. As a result, in a gradually typed programming language with type safety, a program without blame cannot go wrong. Every blame is triggered by a type cast failure, thus we can use the notion of the cast error to represent dynamic type error. In this paper, we equate dynamic type error and dynamic cast error for gradually typed languages, both of which represent type inconsistency detected at runtime.

The mentioned program 1 will be translated into the following program in blame calculus:

This program will abort with blame

![]() $b_1$

immediately after beta reduction.

$b_1$

immediately after beta reduction.

\begin{align*} &(\lambda x:\star.\ (\langle \mathrm{\texttt{Int}} \Leftarrow^{b_1} \star \rangle x) + 1) \langle \star \Leftarrow^{b_2} \mathrm{\texttt{Bool}} \rangle \mathrm{\texttt{true}} \\ \longmapsto& (\langle \mathrm{\texttt{Int}} \Leftarrow^{b_1} \star \Leftarrow^{b_2} \mathrm{\texttt{Bool}} \rangle \mathrm{\texttt{true}}) + 1 \\ \longmapsto& \mathrm{\texttt{blame}}\ b_1 \end{align*}

\begin{align*} &(\lambda x:\star.\ (\langle \mathrm{\texttt{Int}} \Leftarrow^{b_1} \star \rangle x) + 1) \langle \star \Leftarrow^{b_2} \mathrm{\texttt{Bool}} \rangle \mathrm{\texttt{true}} \\ \longmapsto& (\langle \mathrm{\texttt{Int}} \Leftarrow^{b_1} \star \Leftarrow^{b_2} \mathrm{\texttt{Bool}} \rangle \mathrm{\texttt{true}}) + 1 \\ \longmapsto& \mathrm{\texttt{blame}}\ b_1 \end{align*}

This example shows that the consistency relation makes the gradual type system too weak to reject programs with even obvious type errors. Specifically, a gradual type system cannot detect erroneous data flows imposed by the passing of values during program execution. The inserted cast

![]() $\langle \mathrm{\texttt{Int}} \Leftarrow^{b_1} \star \rangle$

will be executed on every argument of the lambda expression, but a naive static analysis tells us that the only possible argument is a boolean that cast to dynamic. Therefore, this cast never succeeds—and the gradual type system cannot detect such a problem.

$\langle \mathrm{\texttt{Int}} \Leftarrow^{b_1} \star \rangle$

will be executed on every argument of the lambda expression, but a naive static analysis tells us that the only possible argument is a boolean that cast to dynamic. Therefore, this cast never succeeds—and the gradual type system cannot detect such a problem.

1.3 Our work: Static Blame

Recovery of data flows in gradual typing programs amounts to a more strict static check. However, we cannot simply reject a program if a hidden inconsistency is detected, since we cannot assert that the detected inconsistency will necessarily trigger a runtime error in the program. For example, it may be due to a commonly used idiom in dynamic languages: a dynamic parameter accepts values of multiple types and each control flow branch handles a different type (Castagna et al., Reference Castagna, Laurent, Nguyễn and Lutze2022). Therefore, there is a natural question as to how data flow relates to the results of running the program, and under what circumstances we can assert the existence of errors in gradual typing programs by detecting erroneous data flows.

One way to tackle this problem is from the blame mechanism. Data flows are monitored by type casts at runtime, and blame messages will be thrown when a cast fails. By the blame safety property, every dynamic error can be caught by a blame message. Therefore, if we can establish the correspondence between data flows and runtime blame information, then we can prove some properties of the runtime behavior indirectly through these data flows. Consider the following trivial example program:

where the meta-variable t represents a complicated computation hard to analyze statically. In this case, the hidden erroneous data flow is that a boolean value is passed as a dynamic value and cast to type int, but

![]() $\mathrm{\texttt{Int}} \not \sim \mathrm{\texttt{Bool}}$

. Although we cannot assert whether the program will abort with a blame message or not, we can still assert the cast

$\mathrm{\texttt{Int}} \not \sim \mathrm{\texttt{Bool}}$

. Although we cannot assert whether the program will abort with a blame message or not, we can still assert the cast

![]() $\langle \mathrm{\texttt{Int}} \Leftarrow \star \rangle$

that will be inserted to the else branch is erroneous. This is because the only value passed into x is of type Bool.

$\langle \mathrm{\texttt{Int}} \Leftarrow \star \rangle$

that will be inserted to the else branch is erroneous. This is because the only value passed into x is of type Bool.

With this principle, we introduce Static Blame in this paper, a framework for establishing the correspondence between static-time data flow information and runtime behavior for gradually typed programs. As its name suggests, the goal of Static Blame is to determine possible runtime blame messages of a program at static time, enabling a more rigorous static check than type checking.

The key concept of Static Blame is type flow, which gives a uniform treatment to type casts and flow constraints. As the standard flow constraints, type flow models the value passing relation among the contexts of a program, while type casts can be viewed as special value passing monitored by blame mechanism. By intuition, a type flow

![]() $\hat{T} \triangleright_b \hat{S}$

denotes that values in context

$\hat{T} \triangleright_b \hat{S}$

denotes that values in context

![]() $\hat{T}$

may flow into context

$\hat{T}$

may flow into context

![]() $\hat{S}$

during program execution.

$\hat{S}$

during program execution.

![]() $\hat{T}$

(resp.

$\hat{T}$

(resp.

![]() $\hat{S}$

) is a labeled type, which is used to formalize the notion of runtime context, as the standard technique program labels (Nielson et al., Reference Nielson, Nielson and Hankin1999). Moreover, we say that

$\hat{S}$

) is a labeled type, which is used to formalize the notion of runtime context, as the standard technique program labels (Nielson et al., Reference Nielson, Nielson and Hankin1999). Moreover, we say that

![]() $\hat{T}$

is an inflow of

$\hat{T}$

is an inflow of

![]() $\hat{S}$

, while

$\hat{S}$

, while

![]() $\hat{S}$

is an outflow of

$\hat{S}$

is an outflow of

![]() $\hat{T}$

. With type flow, Static Blame can reveal data flows mediated by dynamic types by type flow analysis, which consists of a generation process and a transitivity analysis. We develop Static Blame on a standard formal gradual typing lambda calculus

$\hat{T}$

. With type flow, Static Blame can reveal data flows mediated by dynamic types by type flow analysis, which consists of a generation process and a transitivity analysis. We develop Static Blame on a standard formal gradual typing lambda calculus

![]() $\lambda_\star$

, an extension of GTLC by adding subtyping relation. It bears strong resemblance to the language

$\lambda_\star$

, an extension of GTLC by adding subtyping relation. It bears strong resemblance to the language

![]() $\mathbf{Ob}^?_{<:}$

in Siek and Taha (Reference Siek and Taha2007), albeit without object-oriented features. Its runtime semantics is defined by a translation process into a blame calculus

$\mathbf{Ob}^?_{<:}$

in Siek and Taha (Reference Siek and Taha2007), albeit without object-oriented features. Its runtime semantics is defined by a translation process into a blame calculus

![]() $\lambda_B$

, which admits a standard Natural semantics

Footnote 2

of gradually typed languages.

$\lambda_B$

, which admits a standard Natural semantics

Footnote 2

of gradually typed languages.

The form of the type flow is designed to be similar to type casts in blame calculus and indicate a direct correspondence between type flows and type casts. With the formal system that will be developed later, every type cast

![]() $\langle S\Leftarrow^b T\rangle$

occurring in the execution corresponds directly to a type flow

$\langle S\Leftarrow^b T\rangle$

occurring in the execution corresponds directly to a type flow

![]() $\hat{T} \triangleright_b \hat{S}$

. The type flow analysis ensures that for every possible type cast combination

$\hat{T} \triangleright_b \hat{S}$

. The type flow analysis ensures that for every possible type cast combination

![]() $\langle S\Leftarrow^{b_1} G\rangle \langle G \Leftarrow^{b_2} T\rangle $

occurring in the execution, there will be type flows

$\langle S\Leftarrow^{b_1} G\rangle \langle G \Leftarrow^{b_2} T\rangle $

occurring in the execution, there will be type flows

![]() $\hat{G} \triangleright_{b_1} \hat{S}, \hat{T} \triangleright_{b_2} \hat{G}$

generated by type flow analysis. As a result, Static Blame can exploit the possible failure of a gradually typed program statically.

$\hat{G} \triangleright_{b_1} \hat{S}, \hat{T} \triangleright_{b_2} \hat{G}$

generated by type flow analysis. As a result, Static Blame can exploit the possible failure of a gradually typed program statically.

However, flow analysis requires minor changes to fit in gradually typed languages. The compilation and evaluation of a gradually typed program will generate cast expressions that are not presented in the source code, which therefore cannot be simply denoted by certain textual positions in the source code. For example, in the blame calculus, the evaluation of term application v’ w involves a cast decomposition when the applied term v’ is a cast

![]() $\langle T_1 \to S_2 \Leftarrow^b S_1 \to T_2 \rangle v$

between function types.

$\langle T_1 \to S_2 \Leftarrow^b S_1 \to T_2 \rangle v$

between function types.

The sub-expression

![]() $(\langle T_2 \Leftarrow^b T_1 \rangle w)$

inside the right hand is newly generated and cannot be viewed as an evaluation result of any proper sub-expression inside the left hand. As a result, labeled types encode more information than program labels. Besides a textual identifier, a labeled type

$(\langle T_2 \Leftarrow^b T_1 \rangle w)$

inside the right hand is newly generated and cannot be viewed as an evaluation result of any proper sub-expression inside the left hand. As a result, labeled types encode more information than program labels. Besides a textual identifier, a labeled type

![]() $\hat{T}$

also carries a gradual type T and a list (maybe empty) of context refinements to indicate these new contexts. The carried type T in

$\hat{T}$

also carries a gradual type T and a list (maybe empty) of context refinements to indicate these new contexts. The carried type T in

![]() $\hat{T}$

is just a designed redundancy to keep the similarity between type flow and type cast in form.

$\hat{T}$

is just a designed redundancy to keep the similarity between type flow and type cast in form.

1.4 Potential error and error classification

To demonstrate the effects of the Static Blame framework, we also develop a classification of potential errors detected from type flow analysis and prove formal properties of each category in the classification. Generally speaking, for a type flow

![]() $\hat{\star}_1 \triangleright_{b_1} \hat{S}$

gotten in type flow analysis, if there is also a type flow

$\hat{\star}_1 \triangleright_{b_1} \hat{S}$

gotten in type flow analysis, if there is also a type flow

![]() $\hat{T} \triangleright_{b_2} \triangleright \hat{\star}_2$

such that

$\hat{T} \triangleright_{b_2} \triangleright \hat{\star}_2$

such that

![]() $T \not \sim S$

and

$T \not \sim S$

and

![]() $\hat{\star}_1 = \hat{\star}_2$

, then a potential error is detected. In other words, a potential error is an inconsistent flow via dynamic types. Specifically, we classify detected potential errors into three categories in a way similar to may-must information (Nielson et al., Reference Nielson, Nielson and Hankin1999) in software analysis. Namely,

$\hat{\star}_1 = \hat{\star}_2$

, then a potential error is detected. In other words, a potential error is an inconsistent flow via dynamic types. Specifically, we classify detected potential errors into three categories in a way similar to may-must information (Nielson et al., Reference Nielson, Nielson and Hankin1999) in software analysis. Namely,

1. normal potential errors for type flows that may be an erroneous data flow.

2. strict potential errors for type flows that must be an erroneous data flow.

3. wrong dynamic types for dynamic types that always hide erroneous data flows.

For example, consider the following example program as a variant of expression: 3:

In the compiled blame calculus code, any value passed to x will be cast to a boolean by

![]() $\langle \mathrm{\texttt{Bool}} \Leftarrow \star \rangle x$

in the

$\langle \mathrm{\texttt{Bool}} \Leftarrow \star \rangle x$

in the

![]() $\neg x$

and to an integer by

$\neg x$

and to an integer by

![]() $\langle \mathrm{\texttt{Int}} \Leftarrow \star \rangle x$

in the

$\langle \mathrm{\texttt{Int}} \Leftarrow \star \rangle x$

in the

![]() $x + 1$

. Suppose that these two type casts correspond to type flows

$x + 1$

. Suppose that these two type casts correspond to type flows

![]() $\hat{\star} \triangleright_{b_1} \hat{\mathrm{\texttt{Bool}}}$

and

$\hat{\star} \triangleright_{b_1} \hat{\mathrm{\texttt{Bool}}}$

and

![]() $\hat{\star} \triangleright_{b_2} \hat{\mathrm{\texttt{Int}}}$

where

$\hat{\star} \triangleright_{b_2} \hat{\mathrm{\texttt{Int}}}$

where

![]() $\hat{\star}$

denotes the labeled type of x, then we can induce that:

$\hat{\star}$

denotes the labeled type of x, then we can induce that:

1. if there exists an inflow of type T inconsistent with Bool (resp. Int) via

$\hat{\star}$

, the type flow

$\hat{\star}$

, the type flow

$\hat{\star} \triangleright_{b_1} \hat{\mathrm{\texttt{Bool}}}$

(resp.

$\hat{\star} \triangleright_{b_1} \hat{\mathrm{\texttt{Bool}}}$

(resp.

$\hat{\star} \triangleright_{b_2} \hat{\mathrm{\texttt{Int}}}$

) may fail;

$\hat{\star} \triangleright_{b_2} \hat{\mathrm{\texttt{Int}}}$

) may fail;2. if every inflow via

$\hat{\star}$

is inconsistent with Bool (resp. Int), the type flow

$\hat{\star}$

is inconsistent with Bool (resp. Int), the type flow

$\hat{\star} \triangleright_{b_1} \hat{\mathrm{\texttt{Bool}}}$

(resp.

$\hat{\star} \triangleright_{b_1} \hat{\mathrm{\texttt{Bool}}}$

(resp.

$\hat{\star} \triangleright_{b_2} \hat{\mathrm{\texttt{Int}}}$

) must fail;

$\hat{\star} \triangleright_{b_2} \hat{\mathrm{\texttt{Int}}}$

) must fail;3. if each inflow of

$\hat{\star}$

is inconsistent with each outflow of

$\hat{\star}$

is inconsistent with each outflow of

$\hat{\star}$

,

$\hat{\star}$

,

$\hat{\star}$

is a wrong dynamic type.

$\hat{\star}$

is a wrong dynamic type.

The correspondence of type flow and type cast by the Static Blame framework guarantees several formal properties of our classification. For normal potential errors, we prove that the detection is complete, namely if no normal potential error is detected in a program, then no cast can fail at runtime, implying that the program will not abort with any blame. In other words, every type cast that fails at runtime will be reported as a normal potential error.

As we explained earlier, a detected potential error is not guaranteed to lead to an actual runtime cast failure. Therefore, the detection of normal potential errors is not sound, and mere completeness is a weak property. Note that it is also complete to naively treat every cast as a potential error. In contrast, the detection of strict potential errors is more precise. We claim that strict potential errors are sound with respect to erroneous type casts, whereas erroneous type casts are type casts that can never succeed.

The main difficulty of proof is how to define erroneous type casts formally. An intuitive attempt is to state that every program with erroneous type casts must abort with a blame message. But a cast inserted in the compiled gradual typing program may not execute. Moreover, a higher-order cast will not trigger a cast failure until it is used.

Therefore, we define erroneous type cast with respect to its runtime execution and prove that if an erroneous type cast is executed at runtime, then the whole program must abort with a blame message in one or more steps. Then, we can conclude our claim with a proof that strict potential error is sound with respect to erroneous type casts under the correspondence developed by the Static Blame framework.

Finally, a wrong dynamic type is a labeled dynamic type that always hides wrong data flows, which means that every non-dynamic inflow is inconsistent with every non-dynamic outflow. We will show that every non-dynamic outflow is a strict potential error. With the soundness of strict potential error, running a gradual typing program will immediately abort with a blame message whenever a value of wrong dynamic type is used. Or, values that are held in wrong dynamic types (recall that labeled types represent contexts) can never be safely used.

The notion of type flow and correspondence originated from a previous work of Rastogi et al. (Reference Rastogi, Chaudhuri and Hosmer2012). The goal of their work is also known as automatic type migration now. That is, they tried to infer a more precise type for every dynamic type in source code, and not reject any statically well-typed program. A more detailed comparison between our work and studies on type migration is presented in Section 6.

Our contributions are summarized as follows:

• We propose the Static Blame framework on a standard gradually typed language

$\lambda_\star$

. Static blame establishes the correspondence between static-time data flow information and runtime behavior for gradually typed programs. It consists of three parts: the concepts labeled type and type flow, which give a uniform treatment to type cast and data flow relations (Section 3.1); type flow analysis, a method to generate type flows by transitivity analysis (Section 3.2); flow-cast correspondence, a direct correspondence between type flows and type casts for a

$\lambda_\star$

. Static blame establishes the correspondence between static-time data flow information and runtime behavior for gradually typed programs. It consists of three parts: the concepts labeled type and type flow, which give a uniform treatment to type cast and data flow relations (Section 3.1); type flow analysis, a method to generate type flows by transitivity analysis (Section 3.2); flow-cast correspondence, a direct correspondence between type flows and type casts for a

$\lambda_\star$

program (Section 3.3).

$\lambda_\star$

program (Section 3.3).• We present a practical application of Static Blame by detecting potential errors from type flow analysis. We successfully characterize how potential errors affect program execution by classifying detected potential errors into three categories and proving formal properties of them, including: completeness of normal potential error (Section 4.1); soundness up to erroneous casts of strict potential error (Section 4.2); and inhabitants of wrong dynamic types are unsafe to use (Section 4.3).

• We implemented a bug detector SLOG (Static Blame fOr Grift) based Static Blame on Grift, a gradually typed variant of STLC (Section 5.1.1). We evaluated SLOG on a benchmark generated by mutation analysis from the original benchmark of Grift (Section 5.3). The effectiveness of Static Blame is validated that SLOG can successfully detect potential errors, and its performance is acceptable for programs of small and medium sizes within two or three thousand lines of code (Section 5.4). The implementation and data are publicly available Footnote 3 .

The rest of this paper is organized as follows. Section 2 introduces the syntax and semantics of a gradually typed language, including a surface language

![]() $\lambda_\star$

and an intermediate language

$\lambda_\star$

and an intermediate language

![]() $\lambda_B$

. Section 3 introduces the formal definition of the Static Blame framework. Section 4 develops the detection and classification of potential errors based on Static Blame. Section 5 evaluates effectiveness and performance of Static Blame. Section 6 reviews related work.

$\lambda_B$

. Section 3 introduces the formal definition of the Static Blame framework. Section 4 develops the detection and classification of potential errors based on Static Blame. Section 5 evaluates effectiveness and performance of Static Blame. Section 6 reviews related work.

2 Background: Program syntax and semantics

In this section, we give formal definitions of the gradual typing lambda calculus

![]() $\lambda_\star$

and blame calculus

$\lambda_\star$

and blame calculus

![]() $\lambda_B$

. The blame calculus

$\lambda_B$

. The blame calculus

![]() $\lambda_B$

is not space-efficient (Herman et al., Reference Herman, Tomb and Flanagan2010), allowing unbounded accumulation of runtime casts, to simplify development and proof of relevant properties. This choice does not impair the validity of our framework, since space-efficient semantics can be designed equivalent to a normal semantic (Siek & Wadler, Reference Siek and Wadler2010; Siek et al., Reference Siek, Vitousek, Cimini, Tobin-Hochstadt and Garcia2015).

$\lambda_B$

is not space-efficient (Herman et al., Reference Herman, Tomb and Flanagan2010), allowing unbounded accumulation of runtime casts, to simplify development and proof of relevant properties. This choice does not impair the validity of our framework, since space-efficient semantics can be designed equivalent to a normal semantic (Siek & Wadler, Reference Siek and Wadler2010; Siek et al., Reference Siek, Vitousek, Cimini, Tobin-Hochstadt and Garcia2015).

2.1 Syntax

The syntax of

![]() $\lambda_B$

and

$\lambda_B$

and

![]() $\lambda_\star$

are both given in figure 1. Since

$\lambda_\star$

are both given in figure 1. Since

![]() $\lambda_B$

is an extension of

$\lambda_B$

is an extension of

![]() $\lambda_\star$

, they share some common syntactic categories. The parts without highlighting are common to both of them, the light-gray highlighted part belongs to

$\lambda_\star$

, they share some common syntactic categories. The parts without highlighting are common to both of them, the light-gray highlighted part belongs to

![]() $\lambda_B$

, and the gray highlighted part belongs to

$\lambda_B$

, and the gray highlighted part belongs to

![]() $\lambda_\star$

. In other words,

$\lambda_\star$

. In other words,

![]() $\lambda_B$

extends

$\lambda_B$

extends

![]() $\lambda_\star$

by substituting light-gray part for dark-gray part in

$\lambda_\star$

by substituting light-gray part for dark-gray part in

![]() $\lambda_\star$

. In this paper, the denotation of shared syntactic symbols will be clear from context.

$\lambda_\star$

. In this paper, the denotation of shared syntactic symbols will be clear from context.

Fig. 1. Syntax of

![]() $\lambda_\star$

and

$\lambda_\star$

and

![]() $\lambda_B.$

$\lambda_B.$

We let G,T or S range over types. A gradual type is either a base type

![]() $\iota$

, the dynamic type

$\iota$

, the dynamic type

![]() $\star$

, a function type

$\star$

, a function type

![]() $T \to S$

, or a record type

$T \to S$

, or a record type

![]() $\{\overline{l_i : G_i} \}$

. Each field label l belongs to a countably infinite set

$\{\overline{l_i : G_i} \}$

. Each field label l belongs to a countably infinite set

![]() $\mathcal{L}$

, which is assumed to be disjoint with other syntactic categories. With subscripted

$\mathcal{L}$

, which is assumed to be disjoint with other syntactic categories. With subscripted

![]() $\star$

, type metavariables

$\star$

, type metavariables

![]() $G_\star,T_\star$

and

$G_\star,T_\star$

and

![]() $S_\star$

indicate gradual types that are not

$S_\star$

indicate gradual types that are not

![]() $\star$

. Note that types like

$\star$

. Note that types like

![]() $\star \to \star,\{l:\star\}$

are still

$\star \to \star,\{l:\star\}$

are still

![]() $G_\star$

types. We give an inductive definition of them in Figure 1. We let x,y,z range over term variables, which belong to another countably infinite set

$G_\star$

types. We give an inductive definition of them in Figure 1. We let x,y,z range over term variables, which belong to another countably infinite set

![]() $\mathcal{V}$

. We let t range over terms, and e,s range over expressions.

$\mathcal{V}$

. We let t range over terms, and e,s range over expressions.

An expression is a term with a context label attached to it. In the source code of a gradually typed programming language, a context label is merely a syntactical program label

![]() $\omega$

which belongs to another denumerable set

$\omega$

which belongs to another denumerable set

![]() $\Omega$

. In the semantics Section 2.2, we will explore how context labels can be employed to identify and subsequently track the evaluation of expressions in the source code. Importantly, context labels have no impact on the semantics of

$\Omega$

. In the semantics Section 2.2, we will explore how context labels can be employed to identify and subsequently track the evaluation of expressions in the source code. Importantly, context labels have no impact on the semantics of

![]() $\lambda_B$

. In Section 3, context labels play a fundamental role in Static Blame as the definition of type flow is based on them. Consequently, the entire type flow analysis algorithm relies on context labels.

$\lambda_B$

. In Section 3, context labels play a fundamental role in Static Blame as the definition of type flow is based on them. Consequently, the entire type flow analysis algorithm relies on context labels.

A context label also contains a list of context refinements to denote newly generated contexts during compilation and evaluation, the precise meaning will be explained later in the semantics Section 2.2.

A term may be a variable x, a constant c, a lambda expression

![]() $\lambda x:G. e$

, an application

$\lambda x:G. e$

, an application

![]() $e_1 e_2$

, a record

$e_1 e_2$

, a record

![]() $\{\overline{l_i = e_i} \}$

, a record projection

$\{\overline{l_i = e_i} \}$

, a record projection

![]() $e.l$

, or a conditional expression

$e.l$

, or a conditional expression

![]() $\mathrm{\texttt{if}}\ e_1\ \mathrm{\texttt{then}}\ e_2\ \mathrm{\texttt{else}}\ e_3$

.

$\mathrm{\texttt{if}}\ e_1\ \mathrm{\texttt{then}}\ e_2\ \mathrm{\texttt{else}}\ e_3$

.

Terms of

![]() $\lambda_\star$

also contain ascription

$\lambda_\star$

also contain ascription

![]() $e :: G$

, which does not exist in the blame calculus

$e :: G$

, which does not exist in the blame calculus

![]() $\lambda_B$

. Ascriptions denote manual type casts by programmers and will be replaced by explicit type casts in

$\lambda_B$

. Ascriptions denote manual type casts by programmers and will be replaced by explicit type casts in

![]() $\lambda_B$

during translation. A type cast

$\lambda_B$

during translation. A type cast

![]() $\langle S \Leftarrow^b T \rangle e $

denotes casting the type of term t from T to S at run-time and is identified by a blame label b which is also a program label. In this paper,

$\langle S \Leftarrow^b T \rangle e $

denotes casting the type of term t from T to S at run-time and is identified by a blame label b which is also a program label. In this paper,

![]() $\langle T_1 \Leftarrow^{b_1} T_2 \Leftarrow^{b_2} T_3 ... T_n \Leftarrow^{b_n} T_{n+1} \rangle$

is an abbreviation of the sequence of casts

$\langle T_1 \Leftarrow^{b_1} T_2 \Leftarrow^{b_2} T_3 ... T_n \Leftarrow^{b_n} T_{n+1} \rangle$

is an abbreviation of the sequence of casts

![]() $ \langle T_1 \Leftarrow^{b_1} T_2 \rangle \langle T_2 \Leftarrow^{b_2} T_3 \rangle ... \langle T_n \Leftarrow^{b_n} T_{n+1} \rangle$

. Whenever employing this abbreviation, we will provide further clarification if it is not trivial to omit context labels.

$ \langle T_1 \Leftarrow^{b_1} T_2 \rangle \langle T_2 \Leftarrow^{b_2} T_3 \rangle ... \langle T_n \Leftarrow^{b_n} T_{n+1} \rangle$

. Whenever employing this abbreviation, we will provide further clarification if it is not trivial to omit context labels.

The reuse of context label as blame label is nothing deep. It is just a convenient way to make the compilation process deterministic, which needs to generate fresh blame label to identify different type casts occurring in the compiled program. The blame label itself is just an identifier to distinguish different casts without additional semantics. Therefore, the main task of the blame label generation process is to ensure that different casts have different blame labels in the compilation result (see Proposition 2.9).

We also assume a denumerable set of constants as the definition of blame calculus of Walder and Findler (2009). Constants include values of base types and operations on them. Therefore, each constant is static. Specifically, We assume a meta function ty that maps every constant to its predefined type in the standard way. For example,

![]() $ty(\mathrm{\texttt{true}})$

and

$ty(\mathrm{\texttt{true}})$

and

![]() $ty(\mathrm{\texttt{false}})$

are defined as type Bool, while

$ty(\mathrm{\texttt{false}})$

are defined as type Bool, while

![]() $ty(+)$

and

$ty(+)$

and

![]() $ty(-)$

are defined as

$ty(-)$

are defined as

![]() $\mathrm{\texttt{Int}} \to \mathrm{\texttt{Int}} \to \mathrm{\texttt{Int}}$

. Being static means that for any constant c, the type ty(c) does not contain any

$\mathrm{\texttt{Int}} \to \mathrm{\texttt{Int}} \to \mathrm{\texttt{Int}}$

. Being static means that for any constant c, the type ty(c) does not contain any

![]() $\star$

within it.

$\star$

within it.

Since

![]() $\lambda_\star$

is a gradually typed language extended by subtyping relation, consistent subtyping

$\lambda_\star$

is a gradually typed language extended by subtyping relation, consistent subtyping

![]() $T <' S$

is used in typing rules rather than consistency. The declarative definition of consistent subtyping is given in Figure 1 along with standard consistency. The distinction between

$T <' S$

is used in typing rules rather than consistency. The declarative definition of consistent subtyping is given in Figure 1 along with standard consistency. The distinction between

![]() $T <' S$

and consistency is the width subtyping rule for records.

$T <' S$

and consistency is the width subtyping rule for records.

Consistency is a structural relation. A base type is consistent with itself. A dynamic type is consistent with every type. Two function types are consistent if their domains and ranges are consistent. Two record types are consistent if they have the same fields and their fields are consistent. We refer to Garcia et al. (Reference Garcia, Clark and Tanter2016) for more details about the design principle of consistency relation.

The consistent subtyping relation merely extends standard subtyping relation with two additional rules stating that a dynamic type

![]() $\star$

satisfies consistent subtyping with any gradual type in both directions. This definition shows that consistent subtyping is mostly a structural relation.

$\star$

satisfies consistent subtyping with any gradual type in both directions. This definition shows that consistent subtyping is mostly a structural relation.

![]() $T <' S$

if every pair (T’,S’) of corresponding parts in type trees of T and S satisfies subtyping relation or one of them is

$T <' S$

if every pair (T’,S’) of corresponding parts in type trees of T and S satisfies subtyping relation or one of them is

![]() $\star$

. We refer to Xie et al. (Reference Xie, Bi, d. S. Oliveira and Schrijvers2020) for a fairly comprehensive research of consistent subtyping.

$\star$

. We refer to Xie et al. (Reference Xie, Bi, d. S. Oliveira and Schrijvers2020) for a fairly comprehensive research of consistent subtyping.

Figure 2 describes the static type system and translation relation of

![]() $\lambda_\star$

simultaneously. By omitting the highlighted parts, it defines the type system of

$\lambda_\star$

simultaneously. By omitting the highlighted parts, it defines the type system of

![]() $\lambda_\star$

as a ternary relation

$\lambda_\star$

as a ternary relation

![]() $\Gamma \vdash e : G$

. And by adding the highlighted parts, it defines the translation static semantics as a relation

$\Gamma \vdash e : G$

. And by adding the highlighted parts, it defines the translation static semantics as a relation

![]() $\Gamma \vdash t \rightsquigarrow s : G$

, where s denotes terms in

$\Gamma \vdash t \rightsquigarrow s : G$

, where s denotes terms in

![]() $\lambda_B$

.

$\lambda_B$

.

Fig. 2. Static semantics of

![]() $\lambda_\star.$

$\lambda_\star.$

We let

![]() $\Gamma$

range over type environments, which are partial functions that map term variables into gradual types and are represented by unordered sets of pairs

$\Gamma$

range over type environments, which are partial functions that map term variables into gradual types and are represented by unordered sets of pairs

![]() $x:G$

. The extension of type environment

$x:G$

. The extension of type environment

![]() $\Gamma,(x:G)$

is an abbreviation of set union, where we assume the new variable x is not in the domain of

$\Gamma,(x:G)$

is an abbreviation of set union, where we assume the new variable x is not in the domain of

![]() $\Gamma$

.

$\Gamma$

.

There are two points we need to clarify in Figure 2. First, the rule T_If involves a join operator

![]() $\vee$

ensuring that branches of a conditional expression can have different types.

$\vee$

ensuring that branches of a conditional expression can have different types.

Definition 2.1 (

![]() $\vee$

and

$\vee$

and

![]() $\wedge$

). The operation

$\wedge$

). The operation

![]() $\vee$

and

$\vee$

and

![]() $\wedge$

is defined as

$\wedge$

is defined as

\begin{align*} &\star \vee G = G \vee \star = \star \quad \star \wedge G = G \wedge \star = G \quad \iota \vee \iota = \iota \wedge \iota = \iota \\ &(T_{11} \to T_{12}) \vee (T_{21} \to T_{22}) = (T_{11} \wedge T_{21}) \to (T_{12} \vee T_{22}) \\ &(T_{11} \to T_{12}) \wedge (T_{21} \to T_{22}) = (T_{11} \vee T_{21}) \to (T_{12} \wedge T_{22}) \\ &\{ \overline{l_i:T_{i1}}, \overline{l_j:T_j} \} \vee \{ \overline{l_i:T_{i2}}, \overline{l_k:T_k} \} = \{ \overline{l_i:T_{i1} \vee T_{i2}}\} &\mathrm{, where }\{\overline{l_j}\} \cap \{\overline{l_k}\} = \emptyset \\ &\{ \overline{l_i:T_{i1}}, \overline{l_j:T_j} \} \wedge \{ \overline{l_i:T_{i2}}, \overline{l_k:T_k} \} = \{ \overline{l_i:T_{i1} \wedge T_{i2}}, \overline{l_j:T_j}, \overline{l_k:T_k} \} &\mathrm{, where }\{\overline{l_j}\} \cap \{\overline{l_k}\} = \emptyset \\\end{align*}

\begin{align*} &\star \vee G = G \vee \star = \star \quad \star \wedge G = G \wedge \star = G \quad \iota \vee \iota = \iota \wedge \iota = \iota \\ &(T_{11} \to T_{12}) \vee (T_{21} \to T_{22}) = (T_{11} \wedge T_{21}) \to (T_{12} \vee T_{22}) \\ &(T_{11} \to T_{12}) \wedge (T_{21} \to T_{22}) = (T_{11} \vee T_{21}) \to (T_{12} \wedge T_{22}) \\ &\{ \overline{l_i:T_{i1}}, \overline{l_j:T_j} \} \vee \{ \overline{l_i:T_{i2}}, \overline{l_k:T_k} \} = \{ \overline{l_i:T_{i1} \vee T_{i2}}\} &\mathrm{, where }\{\overline{l_j}\} \cap \{\overline{l_k}\} = \emptyset \\ &\{ \overline{l_i:T_{i1}}, \overline{l_j:T_j} \} \wedge \{ \overline{l_i:T_{i2}}, \overline{l_k:T_k} \} = \{ \overline{l_i:T_{i1} \wedge T_{i2}}, \overline{l_j:T_j}, \overline{l_k:T_k} \} &\mathrm{, where }\{\overline{l_j}\} \cap \{\overline{l_k}\} = \emptyset \\\end{align*}

If defined,

![]() $T \vee S$

is a common consistent supertype of T and S, while

$T \vee S$

is a common consistent supertype of T and S, while

![]() $T \wedge S$

is a common consistent subtype of T and S.

$T \wedge S$

is a common consistent subtype of T and S.

Proposition 2.2. For every gradual types T and S,

1. if

$T \vee S$

is defined, then

$T \vee S$

is defined, then

$T <' T \vee S$

and

$T <' T \vee S$

and

$S <' T \vee S$

;

$S <' T \vee S$

;2. if

$T \wedge S$

is defined, then

$T \wedge S$

is defined, then

$T \wedge S <' T$

and

$T \wedge S <' T$

and

$T \wedge S <' S$

.

$T \wedge S <' S$

.

Second, the translation process requires context refinement by

![]() $\blacktriangleleft$

for every newly generated sub-expression. The symbol

$\blacktriangleleft$

for every newly generated sub-expression. The symbol

![]() $\blacktriangleleft$

is used for cast expressions. The expression with the label

$\blacktriangleleft$

is used for cast expressions. The expression with the label

![]() $p\blacktriangleleft$

will be cast to the expression with label p. For example, in T_App the argument expression

$p\blacktriangleleft$

will be cast to the expression with label p. For example, in T_App the argument expression

![]() $e_2$

in

$e_2$

in

![]() $\lambda_\star$

is assumed to be translated to an expression

$\lambda_\star$

is assumed to be translated to an expression

![]() $t_2^{\,p_2}$

, and a cast is inserted to form a new argument expression

$t_2^{\,p_2}$

, and a cast is inserted to form a new argument expression

![]() $(\langle T_1 \Leftarrow^{\,p_2} T_2 \rangle t_2^{\,p_2\blacktriangleleft})^{\,p_2}$

in

$(\langle T_1 \Leftarrow^{\,p_2} T_2 \rangle t_2^{\,p_2\blacktriangleleft})^{\,p_2}$

in

![]() $\lambda_B$

. We let the new argument expression keep the label

$\lambda_B$

. We let the new argument expression keep the label

![]() $p_2$

after translation. To maintain the uniqueness of context labels (Proposition 2.9), the label of the term

$p_2$

after translation. To maintain the uniqueness of context labels (Proposition 2.9), the label of the term

![]() $t_2$

is a refined label

$t_2$

is a refined label

![]() $p_2\blacktriangleleft$

, which means “the value of this expression will be cast to the value of

$p_2\blacktriangleleft$

, which means “the value of this expression will be cast to the value of

![]() $p_2$

”. Similarly in T_AppDyn and T_If, this kind of context refinement by

$p_2$

”. Similarly in T_AppDyn and T_If, this kind of context refinement by

![]() $\blacktriangleleft$

happens where casts are inserted. Note that T_Ann does not need context refinement, since an ascription is directly transformed into a cast and no new sub-expression is generated. Other type rules are standard.

$\blacktriangleleft$

happens where casts are inserted. Note that T_Ann does not need context refinement, since an ascription is directly transformed into a cast and no new sub-expression is generated. Other type rules are standard.

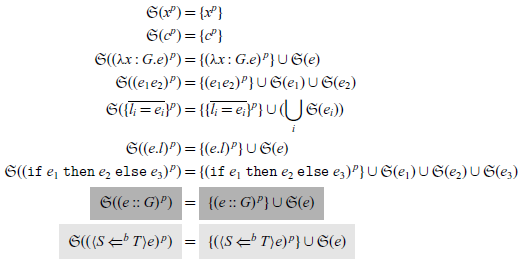

Definition 2.3 (sub-expressions and

![]() $\mathfrak{S}(e)$

). The sub-expressions of an expression e in

$\mathfrak{S}(e)$

). The sub-expressions of an expression e in

![]() $\lambda_\star$

or

$\lambda_\star$

or

![]() $\lambda_B$

, denoted by

$\lambda_B$

, denoted by

![]() $\mathfrak{S}(e)$

, is a multi-set defined by

$\mathfrak{S}(e)$

, is a multi-set defined by

The light-gray highlighted part belongs to

![]() $\lambda_B$

, and the gray highlighted part belongs to

$\lambda_B$

, and the gray highlighted part belongs to

![]() $\lambda_\star$

. Other parts are common.

$\lambda_\star$

. Other parts are common.

Definition 2.4. (

![]() $m_{\mathfrak{S}(e)}$

.) Let

$m_{\mathfrak{S}(e)}$

.) Let

![]() $m_{\mathfrak{S}(e)}$

denote the multiplicity function of

$m_{\mathfrak{S}(e)}$

denote the multiplicity function of

![]() $\mathfrak{S}(e)$

that maps every expression to the number of its occurrence in

$\mathfrak{S}(e)$

that maps every expression to the number of its occurrence in

![]() $\mathfrak{S}(e)$

.

$\mathfrak{S}(e)$

.

Definition 2.5 (expression occurring in e). We say an expression e’ occurs in e if

![]() $m_{\mathfrak{S}(e)}(e')>0$

.

$m_{\mathfrak{S}(e)}(e')>0$

.

Definition 2.6. (well-labeled). An expression e is well-labeled if:

1. for all e’ occurring in e,

$m_{\mathfrak{S}(e)}(e')=1$

;

$m_{\mathfrak{S}(e)}(e')=1$

;2. for every context label p, there is at most one sub-expression e’ occurring in e with context label p, i.e., the cardinal of the set

$\{e' \mathrm{occurring in \textit{e}} \mid e' = t^p \mathrm{ for some \textit{t}}\}$

is at most 1.

$\{e' \mathrm{occurring in \textit{e}} \mid e' = t^p \mathrm{ for some \textit{t}}\}$

is at most 1.

That is, there will not be two exactly the same sub-expressions in e. Every sub-expression has a unique program label attached to it.

Definition 2.7. (cast occurring in e) We say a type cast

![]() $\langle S \Leftarrow^b T\rangle$

occurs in e for e an expression in

$\langle S \Leftarrow^b T\rangle$

occurs in e for e an expression in

![]() $\lambda_B$

, if there is a sub-expression

$\lambda_B$

, if there is a sub-expression

![]() $(\langle S \Leftarrow^b T\rangle e')^{\,p}$

occurring in e. Similarly, we say that a type cast combination

$(\langle S \Leftarrow^b T\rangle e')^{\,p}$

occurring in e. Similarly, we say that a type cast combination

![]() $\langle T_1 \Leftarrow^{b_1} T_2 \Leftarrow^{b_2} T_3 ... T_n \Leftarrow^{b_n} T_{n+1} \rangle$

occurs in e if there is a sub-expression

$\langle T_1 \Leftarrow^{b_1} T_2 \Leftarrow^{b_2} T_3 ... T_n \Leftarrow^{b_n} T_{n+1} \rangle$

occurs in e if there is a sub-expression

![]() $(\langle T_1 \Leftarrow^{b_1} T_2 \Leftarrow^{b_2} T_3 ... T_n \Leftarrow^{b_n} T_{n+1} \rangle e')^{\,p}$

(where the omitted context labels are kept the same) occurring in e.

$(\langle T_1 \Leftarrow^{b_1} T_2 \Leftarrow^{b_2} T_3 ... T_n \Leftarrow^{b_n} T_{n+1} \rangle e')^{\,p}$

(where the omitted context labels are kept the same) occurring in e.

Definition 2.8. (occurring as an attached label; occurring as a blame label) We say a context label p occurs as an attached label in e, if there is a sub-expression

![]() $t^p$

occurring in e. Similarly, we say p occurs as a blame label in e, if there is a sub-expression

$t^p$

occurring in e. Similarly, we say p occurs as a blame label in e, if there is a sub-expression

![]() $(\langle S \Leftarrow^b T\rangle e')^{\,p'}$

occurring in e satisfying

$(\langle S \Leftarrow^b T\rangle e')^{\,p'}$

occurring in e satisfying

![]() $b=p$

.

$b=p$

.

Now we can formally assert that our representation of blame label is appropriate.

Proposition 2.9. Suppose that

![]() $\Gamma \vdash e \rightsquigarrow s : G$

where e is a well-labeled expression in

$\Gamma \vdash e \rightsquigarrow s : G$

where e is a well-labeled expression in

![]() $\lambda_\star$

, s is also well-labeled, and for every blame label b there is at most one type cast expression occurring in e with blame label b, i.e., the cardinal of set

$\lambda_\star$

, s is also well-labeled, and for every blame label b there is at most one type cast expression occurring in e with blame label b, i.e., the cardinal of set

![]() $\{e' \mathrm{occurring in \textit{e}} \mid e' = (\langle S \Leftarrow^b T\rangle e'')^{\,p}) \mathrm{ for some} $

S,T,e”

$\{e' \mathrm{occurring in \textit{e}} \mid e' = (\langle S \Leftarrow^b T\rangle e'')^{\,p}) \mathrm{ for some} $

S,T,e”

![]() $\}$

is at most 1.

$\}$

is at most 1.

Well-labeled is just an auxiliary definition to formalize the assumption that every sub-expression has a unique label attached to it.

Proof It is sufficient to show that, in each step of the translation process, newly inserted casts will use fresh blame labels. By an easy induction, we will show that, for every program e, if

![]() $\Gamma \vdash e \rightsquigarrow t^p : G$

, then p does not occur as a blame label in t. That is, the out-most attached context label p will never be used as a blame label inside t.

$\Gamma \vdash e \rightsquigarrow t^p : G$

, then p does not occur as a blame label in t. That is, the out-most attached context label p will never be used as a blame label inside t.

The proposition we need to prove is a direct corollary. Indeed, in the translation process defined in Figure 2, the newly generated blame labels are always the out-most attached context labels of the (translation result of) direct sub-terms. Since these context labels do not occur as blame labels in these direct sub-terms, the generated blame labels are therefore fresh.

Recall that every context label p occurs at most once in e. Moreover, we give two trivial observations about

![]() $\Gamma \vdash e \rightsquigarrow t^p : G$

without proof: (1) p must occur in e as an attached label (2) if a cast

$\Gamma \vdash e \rightsquigarrow t^p : G$

without proof: (1) p must occur in e as an attached label (2) if a cast

![]() $\langle S \Leftarrow^{\,p'} T \rangle$

occurs in t, then p’ must occur in e as an attached label.

$\langle S \Leftarrow^{\,p'} T \rangle$

occurs in t, then p’ must occur in e as an attached label.

Induction on

![]() $\Gamma \vdash e \rightsquigarrow t^p$

. Case T_Var and Case T_Con are direct.

$\Gamma \vdash e \rightsquigarrow t^p$

. Case T_Var and Case T_Con are direct.

Case T_Ann:

![]() $\Gamma \vdash e \rightsquigarrow t^{\,p_1} :T$

and

$\Gamma \vdash e \rightsquigarrow t^{\,p_1} :T$

and

![]() $\Gamma \vdash (e :: G)^{\,p_2} \rightsquigarrow (\langle G \Leftarrow^{\,p_1} T \rangle t^{\,p_1})^{\,p_2}$

. From the induction hypothesis and our observation, there is no cast with blame label

$\Gamma \vdash (e :: G)^{\,p_2} \rightsquigarrow (\langle G \Leftarrow^{\,p_1} T \rangle t^{\,p_1})^{\,p_2}$

. From the induction hypothesis and our observation, there is no cast with blame label

![]() $p_1$

or

$p_1$

or

![]() $p_2$

occurs in t, thus the conclusion holds for

$p_2$

occurs in t, thus the conclusion holds for

![]() $(\langle G \Leftarrow^{\,p_1} T \rangle t^{\,p_1})^{\,p_2}$

.

$(\langle G \Leftarrow^{\,p_1} T \rangle t^{\,p_1})^{\,p_2}$

.

Case T_Lam:

![]() $\Gamma, (x:G) \vdash e \rightsquigarrow s :T$

and

$\Gamma, (x:G) \vdash e \rightsquigarrow s :T$

and

![]() $\Gamma \vdash (\lambda x:G.e)^{\,p} \rightsquigarrow (\lambda x:G.s)^{\,p} : G \to T$

. From our observation, p does not occur as a blame label in s. Apply the induction hypothesis, we know that every p occurs in s as a blame label at most once. Then the conclusion holds for

$\Gamma \vdash (\lambda x:G.e)^{\,p} \rightsquigarrow (\lambda x:G.s)^{\,p} : G \to T$

. From our observation, p does not occur as a blame label in s. Apply the induction hypothesis, we know that every p occurs in s as a blame label at most once. Then the conclusion holds for

![]() $(\lambda x:G.s)^{\,p}$

.

$(\lambda x:G.s)^{\,p}$

.

Case T_AppDyn:

![]() $\Gamma \vdash e_1 \rightsquigarrow t_1^{\,p_1} : \star$

and

$\Gamma \vdash e_1 \rightsquigarrow t_1^{\,p_1} : \star$

and

![]() $\Gamma \vdash e_2 \rightsquigarrow t_2^{\,p_2} :G$

. The result is

$\Gamma \vdash e_2 \rightsquigarrow t_2^{\,p_2} :G$

. The result is

![]() $e = ((\langle \star \to \star \Leftarrow^{\,p_1} \star \rangle t_1^{\,p_1\blacktriangleleft} )^{\,p_1} (\langle \star \Leftarrow^{\,p_2} G \rangle t_2^{\,p_2\blacktriangleleft})^{\,p_2})^{\,p_3} $

. By the induction hypothesis and our observation, we know that

$e = ((\langle \star \to \star \Leftarrow^{\,p_1} \star \rangle t_1^{\,p_1\blacktriangleleft} )^{\,p_1} (\langle \star \Leftarrow^{\,p_2} G \rangle t_2^{\,p_2\blacktriangleleft})^{\,p_2})^{\,p_3} $

. By the induction hypothesis and our observation, we know that

![]() $p_1,p_2,p_3$

does not occur as a blame label in

$p_1,p_2,p_3$

does not occur as a blame label in

![]() $t_1$

, nor in

$t_1$

, nor in

![]() $t_2$

. Then the conclusion holds for e. Other cases are similar.

$t_2$

. Then the conclusion holds for e. Other cases are similar.

2.2 Semantics of

$\lambda_B$

Lg

$\lambda_B$

Lg

The whole definition of

![]() $\lambda_B$

is given in Figure 3, extending the syntax in Figure 1. As an intermediate language of gradual languages, the type system and runtime semantics of

$\lambda_B$

is given in Figure 3, extending the syntax in Figure 1. As an intermediate language of gradual languages, the type system and runtime semantics of

![]() $\lambda_B$

are standard. We let u,v,w range over values, r over intermediate evaluation results, and E over evaluation contexts. A value expression

$\lambda_B$

are standard. We let u,v,w range over values, r over intermediate evaluation results, and E over evaluation contexts. A value expression

![]() $v^p$

is an expression whose inner term v is a value. A value v is either a constant, a variable, a function, a record where each field is a value expression, a cast between functions or records, or an injection into dynamic from a non-dynamic type. Note this definition of value admits unbounded accumulation of casts on values.

$v^p$

is an expression whose inner term v is a value. A value v is either a constant, a variable, a function, a record where each field is a value expression, a cast between functions or records, or an injection into dynamic from a non-dynamic type. Note this definition of value admits unbounded accumulation of casts on values.

Fig. 3. The language

![]() $\lambda_B.$

$\lambda_B.$

Since

![]() $\lambda_B$

is an extension of

$\lambda_B$

is an extension of

![]() $\lambda_\star$

, we reuse the relation

$\lambda_\star$

, we reuse the relation

![]() $\Gamma \vdash e : G$

to represent the type system of

$\Gamma \vdash e : G$

to represent the type system of

![]() $\lambda_B$

.

$\lambda_B$

.

![]() $\lambda_B$

is a statically typed language. For a program t in

$\lambda_B$

is a statically typed language. For a program t in

![]() $\lambda_\star$

, it is trivial that

$\lambda_\star$

, it is trivial that

![]() $\emptyset \vdash e \rightsquigarrow s : G$

implies

$\emptyset \vdash e \rightsquigarrow s : G$

implies

![]() $\emptyset \vdash s : G$

.

$\emptyset \vdash s : G$

.

We use a binary relation

![]() $e \longrightarrow r$

to indicate a single-step reduction from an expression e to a result r. We say an expression e is a

$e \longrightarrow r$

to indicate a single-step reduction from an expression e to a result r. We say an expression e is a

![]() $\longrightarrow$

-redex if there exists an r such that

$\longrightarrow$

-redex if there exists an r such that

![]() $e \longrightarrow r$

. The standard reduction relation

$e \longrightarrow r$

. The standard reduction relation

![]() $e \longmapsto r$

is a simple closure of

$e \longmapsto r$

is a simple closure of

![]() $\longrightarrow$

. By induction, if e can be decomposed to E[e’] as a combination of an evaluation context E and a

$\longrightarrow$

. By induction, if e can be decomposed to E[e’] as a combination of an evaluation context E and a

![]() $\longrightarrow$

-redex e’, then e can reduce in one step by reducing e’ in one step. We use

$\longrightarrow$

-redex e’, then e can reduce in one step by reducing e’ in one step. We use

![]() $e \longmapsto^* r$

to indicate zero or more steps of reduction.

$e \longmapsto^* r$

to indicate zero or more steps of reduction.

The reader can disregard the manipulation of context labels momentarily and observe that the definition of

![]() $e \longrightarrow r$

is straightforward. A detailed explanation of context label manipulation will will be provided later. We assume an interpreting function

$e \longrightarrow r$

is straightforward. A detailed explanation of context label manipulation will will be provided later. We assume an interpreting function

![]() $[\![ ]\!]$

which interprets a constant c with respect to ty(c). If ty(c) is a function type, we assume the return value of

$[\![ ]\!]$

which interprets a constant c with respect to ty(c). If ty(c) is a function type, we assume the return value of

![]() $[\![ c ]\!] $

is still a constant. For example,

$[\![ c ]\!] $

is still a constant. For example,

![]() $[\![ + ]\!] v$

is itself a constant which can be interpreted as a computable function that maps any integer n to the result of

$[\![ + ]\!] v$

is itself a constant which can be interpreted as a computable function that maps any integer n to the result of

![]() $v+n$

if v is an integer, and undefined otherwise. Rules for application, record projection, conditional expression, cast elimination, and cast decomposition are standard. Note that we also give a rule concerning record type besides function type. A cast between two record types is resolved into a less complicated cast between types of the fields being accessed.

$v+n$

if v is an integer, and undefined otherwise. Rules for application, record projection, conditional expression, cast elimination, and cast decomposition are standard. Note that we also give a rule concerning record type besides function type. A cast between two record types is resolved into a less complicated cast between types of the fields being accessed.

In Figure 3, blame is always assigned to the downcast. Therefore,

![]() $\lambda_B$

resembles the D strategy in Siek et al. (Reference Siek, Garcia and Taha2009). Static Blame is easy to migrate between different blame strategies by simply changing the process of flow analysis that will be introduced in Section 3 to match the runtime semantics.

$\lambda_B$

resembles the D strategy in Siek et al. (Reference Siek, Garcia and Taha2009). Static Blame is easy to migrate between different blame strategies by simply changing the process of flow analysis that will be introduced in Section 3 to match the runtime semantics.

Now we give a detailed explanation of context label manipulation in reduction. Note that context labels occurring in an expression do not affect its evaluation. The general design principle which we will prove later is that if

![]() $t_1^{\,p_1} \longmapsto t_2^{\,p_2}$

then

$t_1^{\,p_1} \longmapsto t_2^{\,p_2}$

then

![]() $p_1 = p_2$

. In other words, context label is a way to track evaluation steps similar to program labels in standard flow analysis. As a result,

$p_1 = p_2$

. In other words, context label is a way to track evaluation steps similar to program labels in standard flow analysis. As a result,

![]() $t[x := v]$

is still defined as a term substitution to preserve attached context labels. More specifically,

$t[x := v]$

is still defined as a term substitution to preserve attached context labels. More specifically,

![]() $x^p[x := v]=v^p$

.

$x^p[x := v]=v^p$

.

Additionally, context labels enable us to encode type cast, specifying both the source and the target. For a type cast expression

![]() $(\langle S \Leftarrow^b T \rangle t^{\,p_1})^{\,p_2}$

, we can interpret it not only as “the type of t is cast to S” but also as “the value of expression

$(\langle S \Leftarrow^b T \rangle t^{\,p_1})^{\,p_2}$

, we can interpret it not only as “the type of t is cast to S” but also as “the value of expression

![]() $p_1$

will become the value of expression

$p_1$

will become the value of expression

![]() $p_2$

through the cast

$p_2$

through the cast

![]() $\langle S \Leftarrow^b T \rangle$

”. By regarding type casts as a special form of value passing, it becomes possible to statically infer all possible combinations of type casts if one can obtain all flow information within the program. This is precisely the approach employed by Static Blame as formally demonstrated in Corollary 3.15.

$\langle S \Leftarrow^b T \rangle$

”. By regarding type casts as a special form of value passing, it becomes possible to statically infer all possible combinations of type casts if one can obtain all flow information within the program. This is precisely the approach employed by Static Blame as formally demonstrated in Corollary 3.15.

As in compilation relation, context refinement happens when cast expressions are generated. The refined context label p attached to an expression

![]() $t^p$

expresses which expression

$t^p$

expresses which expression

![]() $t^p$

will cast to. For example, in function cast decomposition,

$t^p$

will cast to. For example, in function cast decomposition,

the generated expression

![]() ${\color{red}(}\langle S_1 \Leftarrow^b T_1 \rangle w^{\,p_3} {\color{red})}^{\,p_1?}$

will be cast to the argument of the expression

${\color{red}(}\langle S_1 \Leftarrow^b T_1 \rangle w^{\,p_3} {\color{red})}^{\,p_1?}$

will be cast to the argument of the expression

![]() $v^{p_1}$

inside the left hand. Therefore, it has the refined context label

$v^{p_1}$

inside the left hand. Therefore, it has the refined context label

![]() $p_1?$

. Also, note that abandoning the context label

$p_1?$

. Also, note that abandoning the context label

![]() $p_2$

is acceptable in this reduction rule. First, considering that

$p_2$

is acceptable in this reduction rule. First, considering that

![]() $v^{p_1}$

is a value, we already know that the only possible value that will flow into

$v^{p_1}$

is a value, we already know that the only possible value that will flow into

![]() $p_2$

is exactly

$p_2$

is exactly

![]() $v^{p_1}$

. Second, the expression

$v^{p_1}$

. Second, the expression

![]() $p_2$

will be eliminated and disappear after reduction. Consequently, preserving the context label

$p_2$

will be eliminated and disappear after reduction. Consequently, preserving the context label

![]() $p_1$

is sufficient to maintain the necessary information for the remaining reduction steps.

$p_1$

is sufficient to maintain the necessary information for the remaining reduction steps.

Static Blame has four different kinds of context refinement

![]() $\epsilon$

, namely

$\epsilon$

, namely

![]() $\blacktriangleleft$

,

$\blacktriangleleft$

,

![]() $?$

,

$?$

,

![]() $!$

and l. All of them express a cast-to relation. In detail, an expression with context label

$!$

and l. All of them express a cast-to relation. In detail, an expression with context label

![]() $p\blacktriangleleft$

means that e will cast to an expression with context label p; an expression with context label

$p\blacktriangleleft$

means that e will cast to an expression with context label p; an expression with context label

![]() $p?$

(resp.

$p?$

(resp.

![]() $p!$

/pl) means that e will cast to a parameter (resp. a return value/an l field) of expressions with context label p. The concept context refinement is highly inspired by the concept “type kind” in Rastogi et al. (Reference Rastogi, Chaudhuri and Hosmer2012) and shares the same notation. It also has something in common with the concept “Tag” in Vitousek et al. (Reference Vitousek, Swords and Siek2017), and a more detailed discussion is listed in related work.

$p!$

/pl) means that e will cast to a parameter (resp. a return value/an l field) of expressions with context label p. The concept context refinement is highly inspired by the concept “type kind” in Rastogi et al. (Reference Rastogi, Chaudhuri and Hosmer2012) and shares the same notation. It also has something in common with the concept “Tag” in Vitousek et al. (Reference Vitousek, Swords and Siek2017), and a more detailed discussion is listed in related work.

We conclude this section with several formal properties of

![]() $\lambda_B$

.

$\lambda_B$

.

Lemma 2.10. Suppose that

![]() $t^{\,p}$

is an expression in

$t^{\,p}$

is an expression in

![]() $\lambda_B$

with

$\lambda_B$

with

![]() $\Gamma \vdash t^p : G$

, if

$\Gamma \vdash t^p : G$

, if

![]() $t^{\,p} \longmapsto^{*} (t^{\prime})^{\,p'}$

, then

$t^{\,p} \longmapsto^{*} (t^{\prime})^{\,p'}$

, then

![]() $p = p'$

.

$p = p'$

.

Proof It is sufficient to consider the single-step case

![]() $t^{\,p} \longmapsto (t^{\prime})^{\,p'}$

. Suppose that

$t^{\,p} \longmapsto (t^{\prime})^{\,p'}$

. Suppose that

![]() $t^{\,p} = E[t_1^{\,p_1}],(t^{\prime})^{\,p'} = E[t_2^{\,p_2}]$

and

$t^{\,p} = E[t_1^{\,p_1}],(t^{\prime})^{\,p'} = E[t_2^{\,p_2}]$

and

![]() $t_1^{\,p_1} \longrightarrow t_2^{\,p_2}$

, the conclusion is trivial if E is not a hole, hence we can assume that

$t_1^{\,p_1} \longrightarrow t_2^{\,p_2}$

, the conclusion is trivial if E is not a hole, hence we can assume that

![]() $t^{\,p} \longrightarrow (t^{\prime})^{\,p'}$

, but the conclusion is also immediate from the definition of

$t^{\,p} \longrightarrow (t^{\prime})^{\,p'}$

, but the conclusion is also immediate from the definition of

![]() $e \longrightarrow r$

.

$e \longrightarrow r$

.

The determinism of our semantics is ensured by the unique decomposition theorem.

Theorem 2.11 (Unique Decomposition.) For a well-typed closed expression e in

![]() $\lambda_B$

, either e is a value expression, or there exists an unique decomposition

$\lambda_B$

, either e is a value expression, or there exists an unique decomposition

![]() $e=E[s]$

, where s is a

$e=E[s]$

, where s is a

![]() $\longrightarrow$

-redex.

$\longrightarrow$

-redex.

The type safety property consists of a progress lemma and a preservation lemma. The progress lemma is a corollary of the unique decomposition theorem.

Theorem 2.12 (Type Safety.) For blame calculus

![]() $\lambda_B$

, we have:

$\lambda_B$

, we have:

1. (Preservation) For a

$\lambda_B$

expression e, if

$\lambda_B$

expression e, if

$\Gamma \vdash e:G$

and

$\Gamma \vdash e:G$

and

$e \longmapsto s$

, then

$e \longmapsto s$

, then

$\Gamma \vdash s : G$

.

$\Gamma \vdash s : G$

.2. (Progress) For a

$\lambda_B$

expression e, if

$\lambda_B$

expression e, if

$\emptyset \vdash e:G$

, then one of the following is true:

$\emptyset \vdash e:G$

, then one of the following is true:-

a. there exists an s such that

$e \longmapsto s$

,

$e \longmapsto s$

, b. e is a value expression

$v^p$

,

$v^p$

,c. there exists a blame label b such that

.

.

-

3 The Static Blame framework

In this section, we introduce the formal definition of the Static Blame framework.

3.1 Labeled type and type flow

Recall that the main purpose of the Static Blame framework is to exploit data flows and develop a correspondence between static constraints and runtime behavior. The Static Blame framework achieves its goal with the concept of type flow which corresponds straightforwardly to type cast, and the type flow analysis which computes type flows statically.

The syntax of labeled type and type flow is given in Figure 4. Type flow consists of labeled types. As we explained in Section 1.3, a labeled type is an identifier used for tracking expression evaluation as program labels in standard flow analysis. Formally, a labeled type

![]() $\hat{G}$

is just a pair

$\hat{G}$

is just a pair

![]() $\langle G,p \rangle$

of a gradual type G and a context label p. By intuition, the labeled type represents an expression

$\langle G,p \rangle$

of a gradual type G and a context label p. By intuition, the labeled type represents an expression

![]() $t^p$

in

$t^p$

in

![]() $\lambda_B$

with the same context label. The gradual type G is a design redundancy since

$\lambda_B$

with the same context label. The gradual type G is a design redundancy since

![]() $\lambda_B$

is a statically typed language. The type of an expression e will not change during evaluation by preservation property. This redundancy gives Static Blame a straightforward correspondence: for a cast expression

$\lambda_B$

is a statically typed language. The type of an expression e will not change during evaluation by preservation property. This redundancy gives Static Blame a straightforward correspondence: for a cast expression

![]() $(\langle S \Leftarrow^b T\rangle t^{\,p_1})^{\,p_2}$

, it corresponds to type flow

$(\langle S \Leftarrow^b T\rangle t^{\,p_1})^{\,p_2}$

, it corresponds to type flow

![]() $\hat{T} \triangleright_b \hat{S}$

where

$\hat{T} \triangleright_b \hat{S}$

where

![]() $\hat{T} = \langle T,p_1 \rangle$

and

$\hat{T} = \langle T,p_1 \rangle$

and

![]() $\hat{S} = \langle S,p_2 \rangle$

. Similarly, for a cast combination

$\hat{S} = \langle S,p_2 \rangle$

. Similarly, for a cast combination

![]() $(\langle T_{n+1} \Leftarrow^{b_n} T_n ... T_3 \Leftarrow^{b_2} T_2 \Leftarrow^{b_1} T_{1} \rangle t^{\,p_1})^{\,p_{n+1}}$

where the omitted context labels are

$(\langle T_{n+1} \Leftarrow^{b_n} T_n ... T_3 \Leftarrow^{b_2} T_2 \Leftarrow^{b_1} T_{1} \rangle t^{\,p_1})^{\,p_{n+1}}$

where the omitted context labels are

![]() $p_2...p_n$

, it corresponds to a type flow combination

$p_2...p_n$

, it corresponds to a type flow combination

![]() $\hat{T_1} \triangleright_{b_1} ... \triangleright_{b_n} \hat{T}_{n+1}$

where

$\hat{T_1} \triangleright_{b_1} ... \triangleright_{b_n} \hat{T}_{n+1}$

where

![]() $\hat{T_i} = \langle T_i, p_i \rangle$

. The notation

$\hat{T_i} = \langle T_i, p_i \rangle$

. The notation

![]() $\hat{T_1} \triangleright_{b_1} ... \triangleright_{b_n} \hat{T}_{n+1}$

is an abbreviation for a collection of type flows

$\hat{T_1} \triangleright_{b_1} ... \triangleright_{b_n} \hat{T}_{n+1}$

is an abbreviation for a collection of type flows

![]() $\hat{T_1} \triangleright_{b_1} \hat{T_2}, \hat{T_2} \triangleright_{b_2} ... \hat{T}_{n} \triangleright_{b_n} \hat{T}_{n+1}$

.

$\hat{T_1} \triangleright_{b_1} \hat{T_2}, \hat{T_2} \triangleright_{b_2} ... \hat{T}_{n} \triangleright_{b_n} \hat{T}_{n+1}$

.

Fig. 4. Syntax for labeled type and type flow.

Type flow is an instance of constraint in standard flow analysis. Static Blame views a cast expression as a special value passing monitored by the blame mechanism. By intuition, a type flow

![]() $\langle T,p_1 \rangle \triangleright_b \langle S,p_2 \rangle$

means that the value of expression

$\langle T,p_1 \rangle \triangleright_b \langle S,p_2 \rangle$

means that the value of expression

![]() $t_1^{\,p_1}$

will be passed into expression

$t_1^{\,p_1}$

will be passed into expression

![]() $t_2^{\,p_2}$