Book contents

- Frontmatter

- Contents

- 1 Introduction

- 2 High-Dimensional Space

- 3 Best-Fit Subspaces and Singular Value Decomposition (SVD)

- 4 Random Walks and Markov Chains

- 5 Machine Learning

- 6 Algorithms for Massive Data Problems: Streaming, Sketching, and Sampling

- 7 Clustering

- 8 Random Graphs

- 9 Topic Models, Nonnegative Matrix Factorization, Hidden Markov Models, and Graphical Models

- 10 Other Topics

- 11 Wavelets

- 12 Background Material

- References

- Index

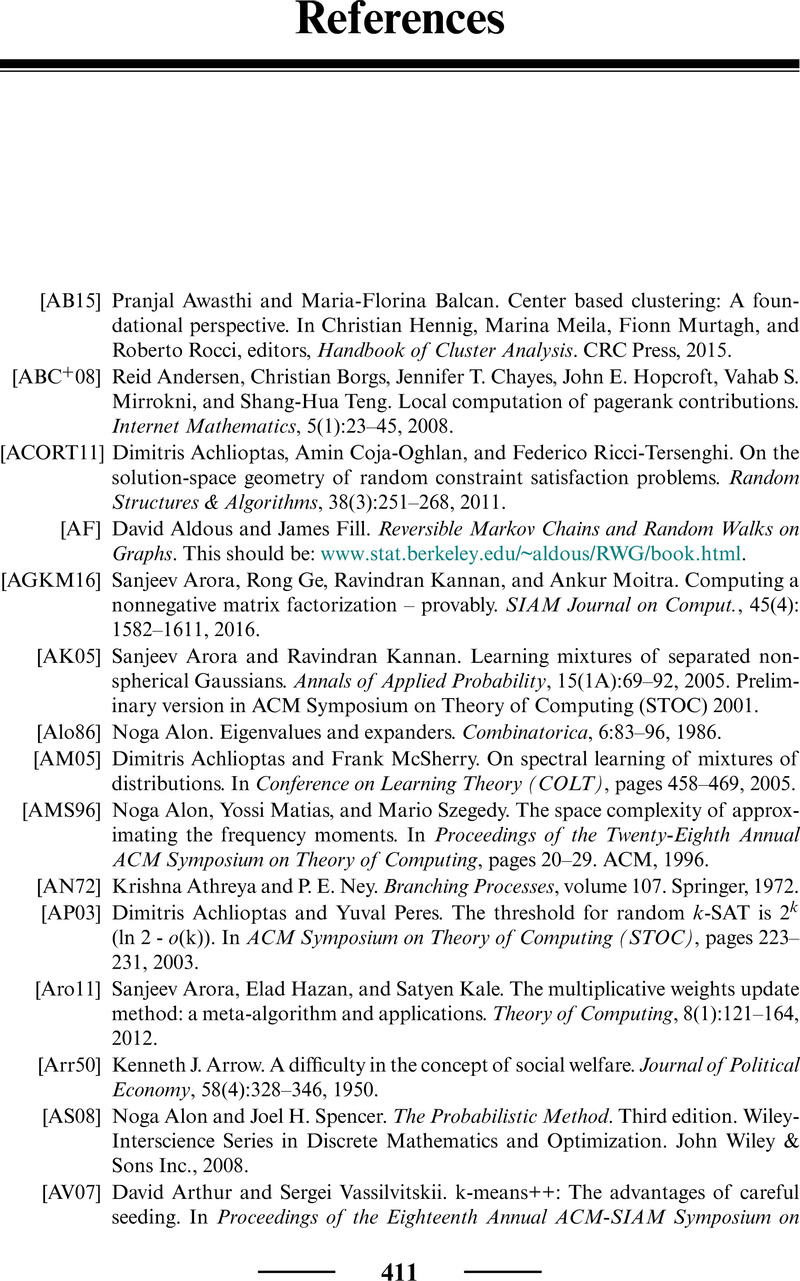

- References

References

Published online by Cambridge University Press: 17 January 2020

Information

- Type: Chapter
- Information: Foundations of Data Science, pp. 411-420
- Publisher: Cambridge University Press
- Print publication year: 2020